"The Lord doesn't care." ---- The Three-Body Problem: The Dark Forest

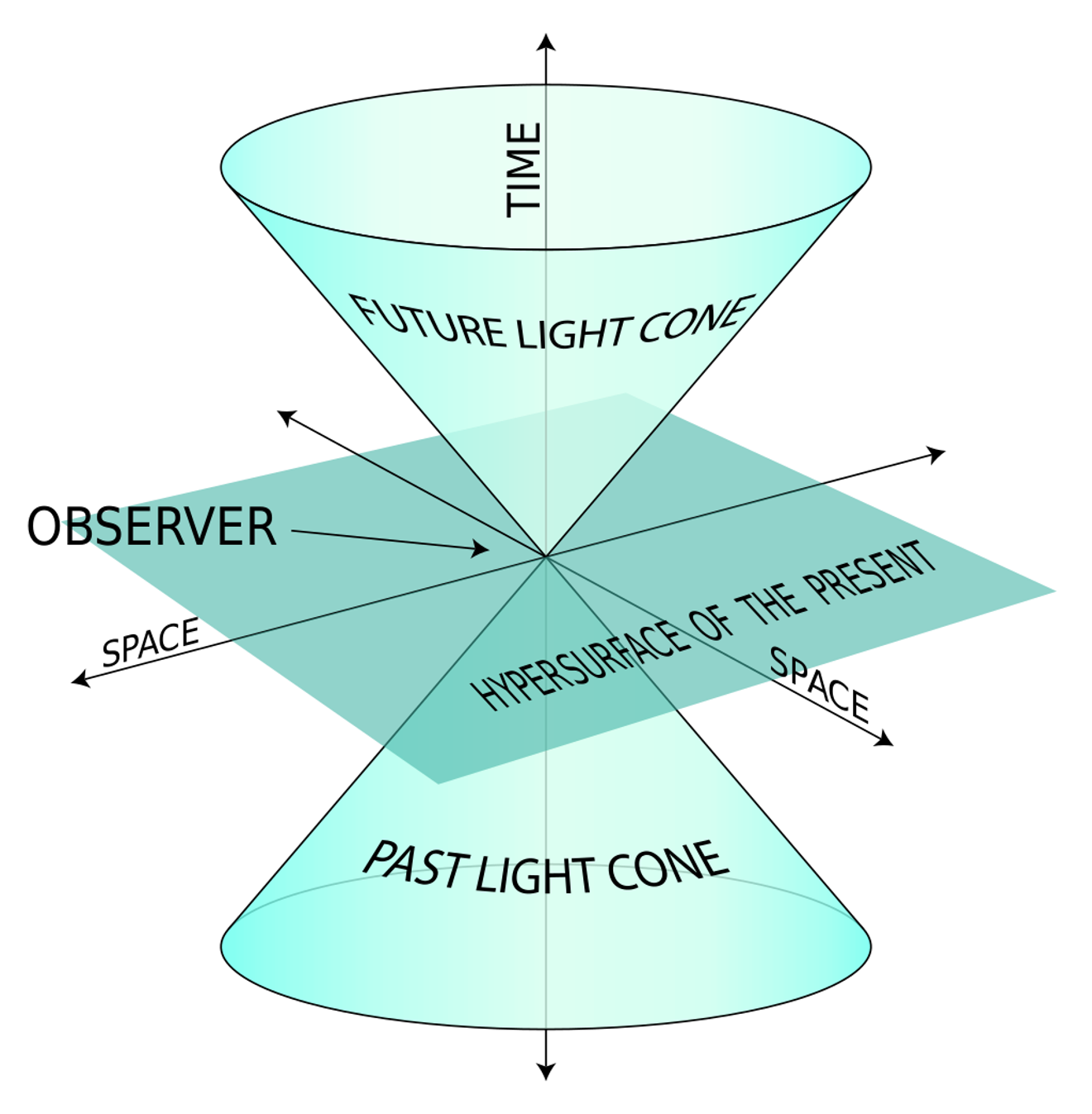

"Everything within the light cone is destiny."

Training data and LoRA files used in this test

The cleaning code, test images, and training configuration are in this page's attachments.

I saw an interesting project on Weibo that claims to protect images from being used for training.

"Mist is a powerful image preprocessing tool designed for the purpose of protecting the style and content of images from being mimicked by common AI-for-Art applications, including LoRA, SDEdit and DreamBooth functions in Stable diffusion, Scenario.gg, etc. By adding watermarks to the images, Mist renders them unrecognizable and inimitable for the models employed by AI-for-Art applications. Attempts by AI-for-Art applications to mimic these Misted images will be ineffective, and the output image of such mimicry will be scrambled and unusable as artwork."

https://weibo.com/7480644963/4979895282962181

https://mist-project.github.io/

After downloading it, I found the project environment difficult to set up, and it strictly requires bf16. (I tested on a 2080 Ti machine. Part of the pipeline can actually run in fp16, but I was in a hurry and had no time to modify the code, and reconfiguring the environment on my local 4090 machine was too much trouble.)

In the end, I asked a friend to process the images for me.

The training sets, original images, and LoRA files used in this test are available here; feel free to take them:

https://civitai.com/models/238469/mist-and-mist-fxxker-lora-trianing-test-lora-and-data

Test Overview

Test Images

The test images are divided into four groups:

1. Original images scraped from Gelbooru with a web crawler.

2. The original images processed by Mist v2 with its default configuration.

3. mist-fxxker: the group 2 images processed with the first-stage clean only (note: this stage takes about 25 s for 106 images on an 8-core Zen 4 CPU).

4. mist-fxxker: the group 2 images processed with clean + SCUNet + NAFNet (note: this stage takes about 8 s per image on an RTX 4090).

Models and Parameters

1. Training base model: NAI 1.5 (7 GB ckpt)

MD5: ac7102bfdc46c7416d9b6e18ea7d89b0

SHA256: a7529df02340e5b4c3870c894c1ae84f22ea7b37fd0633e5bacfad9618228032

2. Image generation model: Anything 3.0

MD5: 2be13e503d5eee9d57d15f1688ae9894

SHA256: 67a115286b56c086b36e323cfef32d7e3afbe20c750c4386a238a11feb6872f7

3. Training parameters: I hadn't trained an SD1.5 LoRA in a long time, so I started from KohakuBlueleaf's recommendations and experience and modified them slightly.

4. Images were processed with the three-stage cropping method recommended by narugo1992. (In small-scale tests, without three-stage processing to amplify the features, it was hard for the LoRA to pick up the Mist v2 effect at all.)
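Since the exact checkpoints matter for reproducing these results, it may help to check downloaded files against the MD5/SHA256 values listed above. A minimal sketch using Python's standard `hashlib`; the dictionary keys and the example path are hypothetical labels, only the hash strings come from this post:

```python
import hashlib
from typing import BinaryIO, Tuple

# Digests published above; the key names are hypothetical labels.
EXPECTED = {
    "nai-1.5-7g (training)": (
        "ac7102bfdc46c7416d9b6e18ea7d89b0",
        "a7529df02340e5b4c3870c894c1ae84f22ea7b37fd0633e5bacfad9618228032",
    ),
    "anything-3.0 (generation)": (
        "2be13e503d5eee9d57d15f1688ae9894",
        "67a115286b56c086b36e323cfef32d7e3afbe20c750c4386a238a11feb6872f7",
    ),
}


def stream_digests(f: BinaryIO, chunk_size: int = 1 << 20) -> Tuple[str, str]:
    """Return (md5, sha256) hex digests of a binary stream, read in chunks
    so a multi-GB checkpoint never has to fit in memory."""
    md5 = hashlib.md5()
    sha256 = hashlib.sha256()
    for chunk in iter(lambda: f.read(chunk_size), b""):
        md5.update(chunk)
        sha256.update(chunk)
    return md5.hexdigest(), sha256.hexdigest()


def verify_checkpoint(path: str, expected_md5: str, expected_sha256: str) -> bool:
    """True only if the file at `path` matches both expected digests."""
    with open(path, "rb") as f:
        md5, sha256 = stream_digests(f)
    return md5 == expected_md5.lower() and sha256 == expected_sha256.lower()
```

Usage, with a hypothetical local path: `verify_checkpoint("models/nai-1.5.ckpt", *EXPECTED["nai-1.5-7g (training)"])`. Checking both digests costs one pass over the file, since both hashers are fed from the same chunks.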

Test Procedure

1. Scrape a set of Yutori Natsu images from booru sites with a web crawler.

2. Process them with Mist v2 and mist-fxxker to obtain the other three groups.

3. Treat all four groups as if they were freshly downloaded originals and feed them into the training workflow (tagging, detection, cropping, and processing), producing four training sets.

4. Train one LoRA on each of the four training sets.

5. Test the LoRAs.
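The cropping in step 3 is where the three-stage method comes in. As I understand it (this description is not taken from this post), each source image is split into a whole-person crop, a half-body crop, and a head crop, so local details, and any perturbation in them, show up at a larger scale in the training set. The sketch below covers only the box arithmetic and assumes a detector has already produced the three boxes; the function names and the 10% margin are hypothetical, not narugo1992's implementation:

```python
from typing import Dict, NamedTuple


class Box(NamedTuple):
    """Pixel box in (left, upper, right, lower) order."""
    left: int
    upper: int
    right: int
    lower: int


def expand_and_clamp(box: Box, margin_pct: int, width: int, height: int) -> Box:
    """Grow `box` by margin_pct percent of its size on every side
    (integer arithmetic), then clamp it to the image bounds."""
    dx = (box.right - box.left) * margin_pct // 100
    dy = (box.lower - box.upper) * margin_pct // 100
    return Box(
        max(0, box.left - dx),
        max(0, box.upper - dy),
        min(width, box.right + dx),
        min(height, box.lower + dy),
    )


def three_stage_boxes(width: int, height: int, person: Box, halfbody: Box,
                      head: Box, margin_pct: int = 10) -> Dict[str, Box]:
    """Return the three crop boxes taken from one source image.
    The detector boxes are inputs; producing them is out of scope here."""
    return {
        stage: expand_and_clamp(box, margin_pct, width, height)
        for stage, box in (("person", person), ("halfbody", halfbody), ("head", head))
    }
```

Because `Box` is a tuple in (left, upper, right, lower) order, each result can be passed straight to Pillow's `Image.crop`, and each crop then becomes a separate training image.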

Results

Note: Judging by the generated images, I believe character fitting and the training goals were essentially achieved by epoch 15, and normal training would not run for more epochs than that. The tests are therefore based on the epoch-15 LoRAs; the other LoRAs and the training sets are available for you to test yourself.

Common test parameters:

DPM++ 2M Karras, 40 steps, 512x768, CFG 7

With Hires. fix:

R-ESRGAN 4x+ Anime6B, 10 steps, denoising strength 0.5

Negative prompt (all tests):

(worst quality, low quality:1.4), (zombie, sketch, interlocked fingers, comic) ,

Trigger words: natsu \(blue archive\)
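To rerun these settings programmatically, one option is the AUTOMATIC1111 web UI API; this is an assumption, since the post does not name its front end, although "Hires. fix" and "R-ESRGAN 4x+ Anime6B" are A1111 terms. The sketch below only builds the request body. The `lora_name` argument and its default weight of 1.0 are hypothetical, because the post does not give the LoRA multiplier, and `hr_scale` is omitted because the post does not state it:

```python
from typing import Optional

# Raw string: the backslashes must reach the web UI so the parentheses
# are read literally instead of as attention/emphasis syntax.
TRIGGER = r"natsu \(blue archive\)"
NEGATIVE = "(worst quality, low quality:1.4), (zombie, sketch, interlocked fingers, comic)"


def txt2img_payload(tags: str, hires: bool = False,
                    lora_name: Optional[str] = None, lora_weight: float = 1.0) -> dict:
    """Build a JSON-serialisable txt2img request body with this post's settings."""
    prompt = f"{TRIGGER}, {tags}"
    if lora_name:
        # A1111 prompt syntax for applying a LoRA: <lora:filename:multiplier>
        prompt += f", <lora:{lora_name}:{lora_weight}>"
    payload = {
        "prompt": prompt,
        "negative_prompt": NEGATIVE,
        "sampler_name": "DPM++ 2M Karras",
        "steps": 40,
        "width": 512,   # 512x768 read as width x height
        "height": 768,
        "cfg_scale": 7,
    }
    if hires:
        # hr_scale is not given in the post, so it is left at the web UI default.
        payload.update({
            "enable_hr": True,
            "hr_upscaler": "R-ESRGAN 4x+ Anime6B",
            "hr_second_pass_steps": 10,
            "denoising_strength": 0.5,
        })
    return payload
```

With the web UI started with `--api`, the dictionary can be POSTed as JSON to `/sdapi/v1/txt2img`. Newer web UI versions list the sampler and the Karras scheduler separately, so the sampler field may need adjusting there.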

Direct Generation Tests

Test 1:

prompts:

natsu \(blue archive\),1girl, halo,solo, side_ponytail, simple_background, white_background, halo, ?, ahoge, hair_ornament, juice_box, looking_at_viewer, milk_carton, drinking_straw, serafuku, blush, long_sleeves, red_neckerchief, upper_body, holding, black_sailor_collar,

Test 2:

prompts:

natsu \(blue archive\),1girl, solo, halo, pleated_skirt, black_sailor_collar, side_ponytail, milk_carton, chibi, black_skirt, puffy_long_sleeves, ahoge, white_cardigan, white_footwear, black_thighhighs, shoes, white_background, v-shaped_eyebrows, full_body, +_+, blush_stickers, standing, sparkle, two-tone_background, holding, twitter_username, :o, red_neckerchief, serafuku, pink_background, open_mouth

Test 3:

prompts:

natsu \(blue archive\),1girl, cherry_blossoms, outdoors, side_ponytail, solo, black_thighhighs, halo, drinking_straw, ahoge, tree, white_cardigan, looking_at_viewer, milk_carton, long_sleeves, pleated_skirt, day, neckerchief, open_mouth, holding, juice_box, black_sailor_collar, blush, black_skirt, serafuku, building, zettai_ryouiki

Summary: The full-size test images are attached so you can compare them yourself.

So far, after just the single-step clean, it is hard to tell by eye whether an image was contaminated by Mist v2, even when zoomed in and inspected carefully.

Even without any processing, the Mist v2 contamination only becomes visible when you zoom in and turn up the monitor brightness, and that is with a training set made up entirely of Mist v2 images.

For now, I cannot reproduce the results shown on the project page.
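The visibility judgments above are made by eye. One way to put a number on them, which this post does not do, is to compare an image with its processed version using PSNR (peak signal-to-noise ratio), and to amplify the per-pixel difference, which is roughly the numeric equivalent of zooming in and raising the brightness. A minimal sketch, assuming both images are the same size and already flattened to grayscale pixel values by whatever image library you use:

```python
import math
from typing import List, Sequence


def psnr(original: Sequence[int], processed: Sequence[int], max_value: int = 255) -> float:
    """PSNR in dB between two equal-length pixel sequences.
    Higher means the difference is harder to see; identical inputs give infinity."""
    if len(original) != len(processed) or not original:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((a - b) ** 2 for a, b in zip(original, processed)) / len(original)
    if mse == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / mse)


def amplified_difference(original: Sequence[int], processed: Sequence[int],
                         gain: int = 10) -> List[int]:
    """|a - b| * gain, capped at 255, so a faint perturbation becomes visible
    when the result is saved as an image."""
    return [min(255, abs(a - b) * gain) for a, b in zip(original, processed)]
```

For example, shifting every pixel by one level gives an MSE of 1, so the PSNR is 20 * log10(255), about 48.13 dB, which is far below what anyone would notice on screen.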

Direct Generation with Hires. fix

Given the previous results, from here on I only test how the LoRA trained directly on the Mist v2 training set performs with Hires. fix.

Hires. fix parameters: R-ESRGAN 4x+ Anime6B, 10 steps, denoising strength 0.5

Cleaning after generation (noise LoRA)

Clean only

Additional test: results without the three-stage method

SD1.5 Supplementary Test

The paper uses SD1.5 as the base model, so I briefly tried to see whether the model-attack performance shown in the paper can be reproduced on SD1.5. The images show that even the attacked dataset did not prevent the LoRA from learning the character features, and the Mist v2 contamination can also be removed with the corresponding LoRA. I can't say much about other aspects, since the SD1.5 base model is itself a disaster for anime characters; see the images for yourself.

Test LoRA and images: https://civitai.com/models/238469?modelVersionId=270388

Summary

When is a LoRA affected by Mist v2?

1. The training set has not gone through the usual preprocessing steps.

2. The three-stage method is used.

3. Mist v2-processed images make up the overwhelming majority of the training set.

If any one of these three conditions is missing, the effect of Mist v2 drops significantly.

What to do if your data is contaminated

1. Before training: the simplest method is enough. Use the first-stage clean of mist-fxxker, about 0.25 s per image (25 s for 106 images on an 8-core Zen 4 CPU).

2. After training: merge my adjustment LoRA at an appropriate negative weight.

https://civitai.com/models/238578

3. At generation time: same as above. Could someone write a small extension for this?
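For items 2 and 3, it may help to recall what a negative LoRA weight does. In the standard LoRA formulation with kohya-style alpha scaling (a general description of LoRA, not taken from this post), applying or merging a LoRA adds a scaled low-rank update to each affected weight matrix, so a negative weight subtracts that update:

$$
W' = W + w \cdot \frac{\alpha}{r}\, B A, \qquad w < 0
$$

Here $W \in \mathbb{R}^{d \times k}$ is the weight the adjustment LoRA is applied to (the base model's, or the base plus the trained LoRA), $B \in \mathbb{R}^{d \times r}$ and $A \in \mathbb{R}^{r \times k}$ are the LoRA's up and down matrices, $r$ is the rank (`network_dim`), $\alpha$ is `network_alpha`, and $w$ is the merge weight. The post does not give a specific value for $w$, so "appropriate" has to be found by trial.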

Evaluation

It is better than nothing.