UPD [May 10 2025]: this text is written for diffusion models. With the advancement of modern flow models like HiDream and SD 3.5, I presume some of the arguments are obsolete. Folks say that flow models (as opposed to diffusion ones) are easier to train [1].

UPD: here is the Flux version of the masked conditioning approach. It seems that the problems discussed here make the workflow fail.

Disclaimer: here we focus on every approach other than inpainting and img2img, i.e. on the case where one doesn't have any references whatsoever (including backgrounds to inpaint on).

UPD2 [Feb. 19 2025]: contrary to what's stated in the disclaimer, I was finally able to achieve the goal set in this article using img2img. The workflow can be found here. I was also given some advice regarding regional prompters, which I might consider adding to this text.

While not in its entirety, the issue of controlling three characters with LoRAs and ControlNET seems to be resolved (at least with Illustrious). Proofs are below.

UPD3 [Feb. 23 2025]: now the above-mentioned workflow supports facial expressions!

The setup

So, you came up with the idea of creating your own amateur comic in SD. While most scenes involve a single character, some scenes may have multiple characters present (possibly interacting).

You have generated the openpose, depth and canny images to turn them into a scene. Once this is done, you wire up a ComfyUI workflow that includes LoRAs, regional prompting, masking and fancy stuff such as GLIGEN, IP adapter or Attention Coupling. Then you launch the generation and get... this:

Was it your fault? Where did you miscalculate? Is it possible to create a frame from scratch if you don't have a sketch or a reference?

In this article I would like to address this issue and discuss the possible workarounds I've come up with.

Why would one need it in the first place?

Making your own manga/comics, of course! Without any significant effort I was able to create a LoRA for all the main characters of a manga I am making. With simple prompting, upscaling, detailing and even openpose, I was able to generate 6 pages of my comic. But then I decided to place two characters at a table...

The State Of The Art

If you google "stable diffusion multiple characters", you will probably immediately stumble upon a random comment on a forum I've never heard of:

But NovelAI and Stable Diffusion both have limitations. It's nearly impossible to generate two different specified characters, much less specify two characters interacting in a certain way.

This issue has been raised and discussed all over Discord, AI forums and elsewhere. SD models, as of now, struggle to capture individual characters with a specified appearance, pose and facial expression and to depict them in an output image. To find out why this is the case, we need to dive a bit into how Stable Diffusion works.

How does Stable Diffusion Work?

The three main ingredients

Stable Diffusion consists of a variational autoencoder (VAE), a text encoder and a UNet.

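The VAE compresses an image into a so-called latent image (and decompresses it back). It can be perceived as a translator from a human language (the visible pixels) to the language of a neural network. One trick is applied, though: each "pixel" of a latent image corresponds to an 8x8 pixel area of the actual image (in Flux, the transformer additionally packs 2x2 latent pixels into one token, so each token covers a 16x16 area). Hence the optimization: the network works on a much smaller grid than the image itself.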
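To make the size reduction concrete, here is a small Python sketch that computes the latent shape for a given image size. It assumes an SD 1.5/SDXL-style VAE with 4 latent channels and 8x downsampling per side; the helper names are made up for illustration.

```python
def latent_shape(width: int, height: int, channels: int = 4, factor: int = 8):
    """(channels, height, width) of the latent for an image of the given size.

    Defaults assume an SD 1.5 / SDXL style VAE: 4 channels, 8x downsampling.
    """
    if width % factor or height % factor:
        raise ValueError(f"image sides must be multiples of {factor}")
    return channels, height // factor, width // factor


def compression_ratio(width: int, height: int, channels: int = 4, factor: int = 8) -> float:
    """How many RGB values of the image correspond to one latent value."""
    c, h, w = latent_shape(width, height, channels, factor)
    return (3 * width * height) / (c * h * w)


print(latent_shape(512, 512))       # (4, 64, 64)
print(latent_shape(1024, 1024))     # (4, 128, 128)
print(compression_ratio(512, 512))  # 48.0
```

Flux's VAE keeps the 8x factor but uses 16 channels, so `latent_shape(1024, 1024, channels=16)` gives `(16, 128, 128)` before the 2x2 packing into tokens.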

The text encoder translates the English prompt into conditioning: a sequence of embedding vectors, one per token. The conditioning can be seen as a "hint" to the denoising algorithm.

As figured out by physicists, most fundamental physical equations are symmetric with respect to the direction of time. A notable exception is thermodynamics, whose irreversible processes (such as diffusion) inspired Stable Diffusion a lot. A good explanation of why there is "Diffusion" in the name of SD can be found here. For our topic we only need to know that SD looks at noise as the "result of an unspecified diffusion" and tries to predict what it "was" (i.e. to denoise the image). This is precisely what the UNet does.
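For readers who like formulas: in the standard DDPM-style formulation (which SD 1.x and SDXL follow with noise, a.k.a. epsilon, prediction), the noisy latent at step t is x_t = sqrt(a_t) * x_0 + sqrt(1 - a_t) * eps, where a_t is the cumulative product of (1 - beta) up to step t, and the UNet is trained to predict eps. The numpy sketch below uses an illustrative linear beta schedule and a perfect "oracle" in place of the UNet, just to show that knowing the noise is enough to recover the clean latent.

```python
import numpy as np

# Linear beta schedule as in the original DDPM paper (illustrative only;
# SD uses a slightly different "scaled linear" schedule).
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas_cumprod = np.cumprod(1.0 - betas)  # a_t in the formula above


def add_noise(x0, noise, t):
    """Forward diffusion: jump straight to step t in closed form."""
    a = alphas_cumprod[t]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * noise


def predict_x0(xt, predicted_noise, t):
    """Invert the forward step, given a prediction of the noise."""
    a = alphas_cumprod[t]
    return (xt - np.sqrt(1.0 - a) * predicted_noise) / np.sqrt(a)


rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 64, 64))  # stands in for a clean latent
noise = rng.standard_normal(x0.shape)
xt = add_noise(x0, noise, t=T - 1)     # almost pure noise

# A perfect noise predictor recovers x0 exactly; the real UNet only approximates it.
x0_hat = predict_x0(xt, noise, t=T - 1)
print(np.allclose(x0_hat, x0))  # True
```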

Controlling the denoising

This subsection is a brief glance at the denoising routine. If you already have an intuition for it, feel free to skip this part and proceed to the next section.

The process of turning the initial noisy image into the resulting one is guided by conditioning. The most popular tools are:

Prompting (CLIP encoding);

Textual inversions (embeddings);

Adaptation models (LoRA, Dreambooth, Lycoris, etc.);

ControlNET (openpose, canny, depth, etc.);

IP adapter;

GLIGEN;

Attention coupling.

All of the mentioned tricks can be applied to a certain area specified by the user, either by setting a conditioning area or by setting a mask. I did not find any difference in quality between area and mask conditioning, but having more tools is always better than having fewer.
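Conceptually, a rectangular conditioning area can be described as a binary mask over the latent grid. The hypothetical helper below builds such a mask from pixel coordinates; it is a numpy illustration of the idea, not ComfyUI's internal code (which may process areas differently), and it assumes the 8x VAE factor discussed above.

```python
import numpy as np


def area_to_latent_mask(width, height, x, y, w, h, factor=8):
    """Binary latent-space mask for a rectangular area given in pixels.

    width/height: full frame size; x, y, w, h: the area. Pixel coordinates are
    divided by the VAE factor because conditioning acts on the latent.
    """
    mask = np.zeros((height // factor, width // factor), dtype=np.float32)
    mask[y // factor:(y + h) // factor, x // factor:(x + w) // factor] = 1.0
    return mask


# Left half of a 1024x512 frame for character A, right half for character B.
mask_a = area_to_latent_mask(1024, 512, x=0, y=0, w=512, h=512)
mask_b = area_to_latent_mask(1024, 512, x=512, y=0, w=512, h=512)
print(mask_a.shape, mask_a.sum(), mask_b.sum())  # (64, 128) 4096.0 4096.0
```

A mask generalizes this to arbitrary shapes (and soft, feathered edges), which is why having both options is handy.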

Another thing that controls the denoising is the sampler itself, the KSampler node (the noise predictor proper is the UNet). It lets the user pick a bunch of parameters such as the denoising strength, the number of steps, CFG and the scheduler.

The denoising strength: make it too small and you'll have a lot of noise left; set it too high and a lot of details (usually including unwanted ones) tend to appear;

The step number: how many denoising rounds are scheduled to be applied;

CFG (classifier-free guidance scale): how closely the sampler follows the conditioning (the prompt). Set it too low and the output appears more random. Set it too high and the generation becomes too constrained in terms of variation, and the images might become roasted (akin to an overtrained LoRA);

The scheduler: defines how the noise level changes from step to step.
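Two of these knobs are easy to write down. CFG combines two noise predictions per step, one with the positive prompt and one with the negative (or empty) prompt, and the scheduler is essentially a list of noise levels (sigmas). Below is a numpy sketch of the CFG formula and of the Karras schedule (Karras et al., 2022); the default sigma range is the one commonly quoted for SD 1.5 and should be treated as an assumption.

```python
import numpy as np


def cfg_combine(noise_uncond, noise_cond, cfg_scale):
    """Classifier-free guidance: move from the unconditional (negative prompt)
    prediction towards, and past, the conditional one."""
    return noise_uncond + cfg_scale * (noise_cond - noise_uncond)


def karras_sigmas(n_steps, sigma_min=0.0292, sigma_max=14.6146, rho=7.0):
    """Decreasing noise levels following Karras et al. (2022).

    Larger rho spends more of the steps at low noise levels (fine details).
    """
    ramp = np.linspace(0.0, 1.0, n_steps)
    min_inv = sigma_min ** (1.0 / rho)
    max_inv = sigma_max ** (1.0 / rho)
    return (max_inv + ramp * (min_inv - max_inv)) ** rho


uncond, cond = np.zeros(3), np.ones(3)
print(cfg_combine(uncond, cond, 1.0))  # [1. 1. 1.] (plain conditional prediction)
print(cfg_combine(uncond, cond, 7.0))  # [7. 7. 7.] (difference exaggerated 7x)

sigmas = karras_sigmas(20)
print(round(sigmas[0], 4), round(sigmas[-1], 4))  # 14.6146 0.0292
```

A very high CFG scale exaggerates the difference between the two predictions far beyond what the model ever saw in training, which is one intuition for the "roasted" look.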

Throughout this article we will use most of the above-mentioned tools to tackle the problem of generating better images with multiple characters (and having control over their content).

Decomposing the problem

Let's restrict the problem to generating two characters. The input conditions may vary:

A character's appearance might be controlled by LoRAs/IP adapter, or one might settle for random characters controlled by the prompt alone;

A character's posture can be controlled by ControlNET or a LoRA, or left to chance;

Characters can either interact with the surroundings or not;

The user might or might not have references to build the resulting image upon.

Obviously, each combination of the above inputs would likely call for a different workflow. Let's go through some of them, proceeding from basic needs to the expectations of picky users.

Just a couple of girls with a fancy background

Arguably, Noisy Latent Composition is the best way to go in that case. It is easy to implement in a workflow and to use, quite stable and fairly resistant to quality deterioration. The mentioned link covers the basics of the approach, so let us proceed further.
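The core of Noisy Latent Composition is a paste in latent space: the background and each subject are sampled only part of the way (so they still contain noise), the subject latents are pasted onto the background latent, and one more sampling pass blends everything together. Below is a numpy sketch of the paste step with made-up shapes and offsets; it mirrors the idea of a latent composite node rather than reproducing ComfyUI's code.

```python
import numpy as np


def latent_composite(dest, src, x, y, factor=8):
    """Paste latent `src` into a copy of latent `dest` at pixel offset (x, y).

    Latents are (channels, height, width); pixel offsets are converted to
    latent cells by dividing by the VAE factor. Whatever falls outside
    `dest` is cropped.
    """
    out = dest.copy()
    lx, ly = x // factor, y // factor
    h = min(src.shape[1], dest.shape[1] - ly)
    w = min(src.shape[2], dest.shape[2] - lx)
    out[:, ly:ly + h, lx:lx + w] = src[:, :h, :w]
    return out


rng = np.random.default_rng(0)
# Placeholders for partially denoised latents (leftover noise still present).
background = rng.standard_normal((4, 64, 128))  # 1024x512 frame
girl_a = rng.standard_normal((4, 64, 48))       # 384x512 subject
girl_b = rng.standard_normal((4, 64, 48))

scene = latent_composite(background, girl_a, x=64, y=0)
scene = latent_composite(scene, girl_b, x=576, y=0)
# `scene` would then go through one more sampling pass to harmonize the seams.
```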

Now let's control them with LoRAs and do something about poses

This is where it gets fancier. The two main competing ideas for this task are inpainting and the same old Latent Noise Composition, but used in a slightly fancier manner.

The Inpainting usage

I have little experience with inpainting, since I rarely have an image for the background. I prefer to automate the process, but some users don't feel the urge to do so.

This approach generates a region using its local context and blends it into the resulting image. Sometimes the generated characters get cropped at the boundaries, since they simply do not fit into the specified area.

Of course, one needs to either generate a background or have one already. Let us go further.

The controlled Latent Noise Composition

The idea here is to generate two latent images of the desired characters controlled by LoRAs and ControlNET, and then blend them like in Photoshop (but in latent space). The resulting latent image is then passed to yet another KSampler, with blended conditioning as well.
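Blending "like in Photoshop, but in latent space" boils down to a per-position weighted average of the two latents. Below is a minimal numpy sketch with a left/right split and a linear feather; the split position and feather width are illustrative assumptions, and an actual workflow may use arbitrary masks.

```python
import numpy as np


def feather_mask_1d(width, split, feather):
    """1 left of `split`, 0 right of it, with a linear ramp `feather` cells wide."""
    xs = np.arange(width, dtype=np.float32)
    if feather <= 0:
        return (xs < split).astype(np.float32)
    return np.clip((split + feather / 2 - xs) / feather, 0.0, 1.0)


def blend_latents(left, right, split, feather):
    """Photoshop-style blend of two same-sized (C, H, W) latents along x."""
    m = feather_mask_1d(left.shape[2], split, feather)  # shape (W,)
    return m * left + (1.0 - m) * right                 # broadcasts over C and H


left = np.ones((4, 64, 128))    # stands in for character A's latent
right = np.zeros((4, 64, 128))  # stands in for character B's latent
blended = blend_latents(left, right, split=64, feather=16)
print(blended[0, 0, 40], blended[0, 0, 64], blended[0, 0, 100])  # 1.0 0.5 0.0
```

In its simplest form, blending two conditionings is the same kind of weighted average applied to the embedding tensors.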

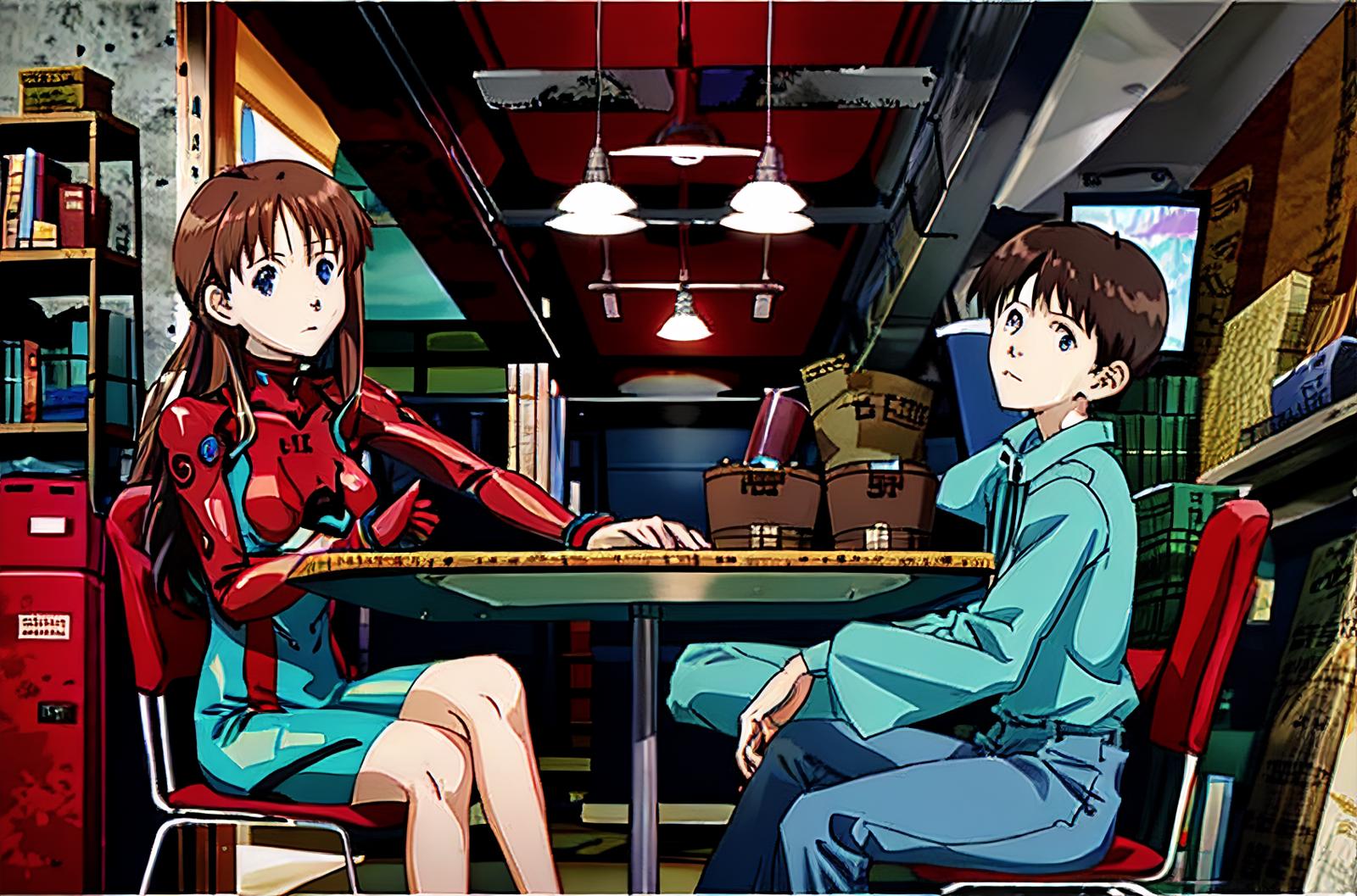

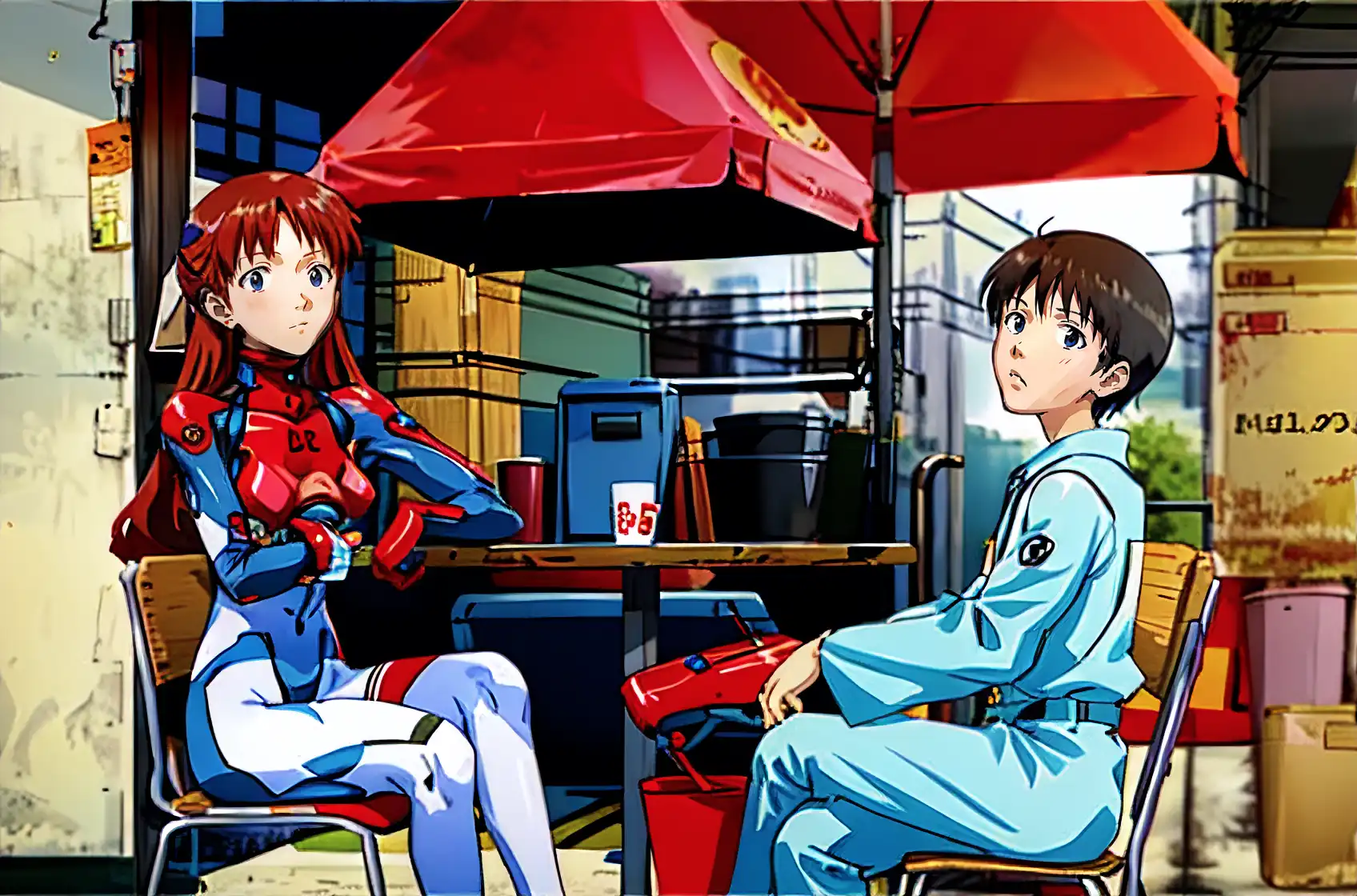

An example of such a workflow can be found here. A quick round of messing around with seeds and settings gives the following picture:

Let's briefly analyze what happened here.

First, Shinji has red spots in his hair. It is easy to see that this artifact comes from Aska. What is happening here is that modern neural networks have a mechanism called attention. To produce a consistent image, Stable Diffusion needs to keep in "mind" that when it draws Aska, it draws Aska. When the area containing Aska ends, SD should more or less forget that she was in the frame. Otherwise she would bleed into Shinji (and into the wall behind: see the red patch and the red stain on the wall). On the other hand, what if there is a reflection? Aska should appear in it, so the network should "remember" that Aska was around.
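The trade-off above can be illustrated with a toy attention computation. In plain self-attention every image token may look at every other one, so features from Aska's area can leak into Shinji's; restricting attention to tokens of the same region removes the leak, but also the shared context that a reflection would need. This numpy sketch does not reproduce any particular node or SD's actual layers; the token count and region split are made up.

```python
import numpy as np


def attention(q, k, v, allowed=None):
    """Scaled dot-product attention over N tokens (rows of q, k, v).

    `allowed` is an optional (N, N) boolean matrix: allowed[i, j] says whether
    query token i may look at key token j. Disallowed pairs get -inf before
    the softmax, i.e. exactly zero weight. Each row needs at least one True.
    """
    scores = q @ k.T / np.sqrt(q.shape[1])
    if allowed is not None:
        scores = np.where(allowed, scores, -np.inf)
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((8, 16)) for _ in range(3))
region = np.array([0, 0, 0, 0, 1, 1, 1, 1])  # 0 = Aska's area, 1 = Shinji's area
same_region = region[:, None] == region[None, :]

_, w_free = attention(q, k, v)
_, w_masked = attention(q, k, v, allowed=same_region)
# How much attention Shinji's tokens pay to Aska's tokens:
print(w_free[4:, :4].sum() > 0)  # True: information leaks across regions
print(w_masked[4:, :4].sum())    # 0.0: no leak, but no shared context either
```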

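Second, the image has little detail. I tend to blame the way conditioning works in SD. In AUTOMATIC1111 and in compel (I am not sure whether that's the case in ComfyUI), a long prompt is split into chunks, each chunk is encoded separately, and the resulting embeddings are concatenated before being fed into the UNet. That way CLIP's 75-token limit (77 including the start and end tokens, in both SD 1.5 and SDXL) is bypassed, but tokens from different chunks never see each other inside the text encoder.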
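The chunking described above can be sketched in a few lines of Python. The BOS/EOS ids are those of the usual CLIP vocabulary, and padding with EOS is an assumption matching the SD 1.x tokenizer; real implementations also handle details such as breaking chunks at commas, which this sketch skips.

```python
BOS, EOS = 49406, 49407  # CLIP start/end-of-text token ids
CHUNK = 75               # usable tokens per CLIP window (77 minus BOS and EOS)


def chunk_prompt_tokens(token_ids, chunk=CHUNK):
    """Split a long list of token ids into 77-token windows.

    Each window is wrapped in BOS/EOS and padded with EOS. Each window would
    then be encoded by CLIP on its own and the embeddings concatenated along
    the sequence axis, so tokens in different windows never attend to each
    other inside the text encoder.
    """
    windows = []
    for start in range(0, max(len(token_ids), 1), chunk):
        body = token_ids[start:start + chunk]
        window = [BOS] + body + [EOS]
        window += [EOS] * (chunk + 2 - len(window))
        windows.append(window)
    return windows


tokens = list(range(1000, 1160))  # 160 fake token ids
windows = chunk_prompt_tokens(tokens)
print(len(windows), [len(w) for w in windows])  # 3 [77, 77, 77]
```

For SD 1.5, whose CLIP text encoder outputs 768-dimensional embeddings, this 160-token prompt would become a conditioning of 3 x 77 = 231 vectors.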

Avoid ControlNET, embrace LoRAs

A character's posture can (to some extent) be controlled by LoRAs, like this one for example. Just keep the prompt clean, sharp and detailed enough, remember to use the activation words, and the result will not disappoint you... unless:

You want the characters to be placed within the corresponding dedicated areas;

You cannot find a LoRA for the postures you need;

The LoRA is not compatible with the model you use;

The characters interact with the background in a way the LoRA does not handle.

The best thing about this approach is that it is the only way to make characters interact with the background and with each other (with one exotic exception for the latter that we will discuss later on).

Now make them interact with the background

I am not aware of anyone else obsessed with placing two well-defined characters in a frame while controlling their poses and their interaction with the background. Except me, of course.

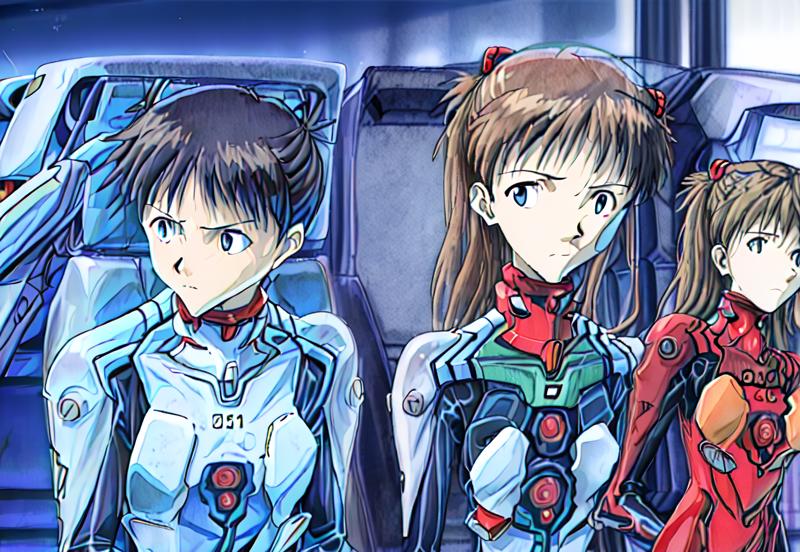

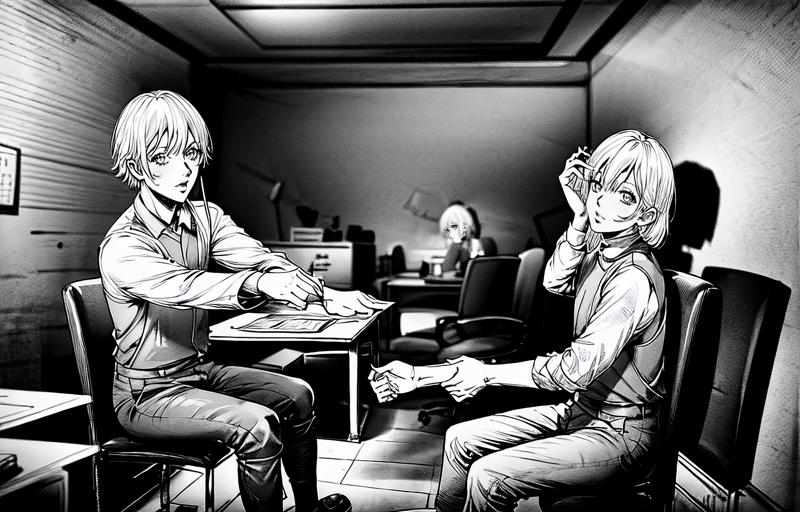

If one is indifferent to the position and the exact pose of the characters, a pose LoRA will do. Otherwise, behold: my solution. Here's an output:

And, of course, here are the input images:

Clumsy? Yes. Blurry? Yes, since I use ControlNET depth for the interaction with the background. Inconsistent? For sure! That's the best one out of 30+ images.

This workflow works roughly as follows:

First, we load all the models (checkpoint, vae, loras, controlnet etc.)

Then we define positive (+) and negative (-) prompts for both characters and the background (6 in total)

We load the images for the controlnet and the corresponding masks

We proceed by applying the controlnet to the conditioning

We apply each character's conditioning (prompt+lora+controlnet)

We assign the mentioned conditionings to the characters and apply them to the dedicated areas, which are feathered (kindly suggested by Moist-Apartment-6904). The background is everything but the characters (thanks to Moist-Apartment-6904 once again)

We combine all the conditionings

We upscale the result and detail the faces!
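The masking arithmetic behind these steps (feathered character areas, background as "everything but the characters") can be sketched in numpy. This is only an illustration under my own assumptions (rectangular areas on the latent grid, a box blur as the feathering); ComfyUI's own mask nodes may feather differently.

```python
import numpy as np


def rect_mask(h, w, top, left, bottom, right):
    """Hard rectangular mask on an (h, w) grid."""
    m = np.zeros((h, w), dtype=np.float32)
    m[top:bottom, left:right] = 1.0
    return m


def box_feather(mask, radius):
    """Soften mask edges with a separable box blur of the given radius."""
    if radius <= 0:
        return mask.copy()
    kernel = np.full(2 * radius + 1, 1.0 / (2 * radius + 1), dtype=np.float32)
    padded = np.pad(mask, radius, mode="edge")

    def blur(a):
        return np.convolve(a, kernel, mode="valid")

    rows = np.apply_along_axis(blur, 1, padded)  # blur horizontally
    return np.apply_along_axis(blur, 0, rows)    # then vertically


def background_mask(char_masks):
    """Background = everything not covered by any character."""
    return np.clip(1.0 - np.maximum.reduce(char_masks), 0.0, 1.0)


h, w = 64, 128  # latent grid of a 1024x512 frame
aaron = box_feather(rect_mask(h, w, 0, 8, 64, 56), radius=4)
heinz = box_feather(rect_mask(h, w, 0, 72, 64, 120), radius=4)
bg = background_mask([aaron, heinz])
print(round(float(bg[32, 32]), 3), round(float(bg[32, 64]), 3))  # 0.0 1.0
```

Each character's conditioning would be applied with its feathered mask, and the background prompt with `bg`.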

Another (realistic) version of this approach by the same Moist-Apartment-6904 can be found here.

In this setting we run into the following issues.

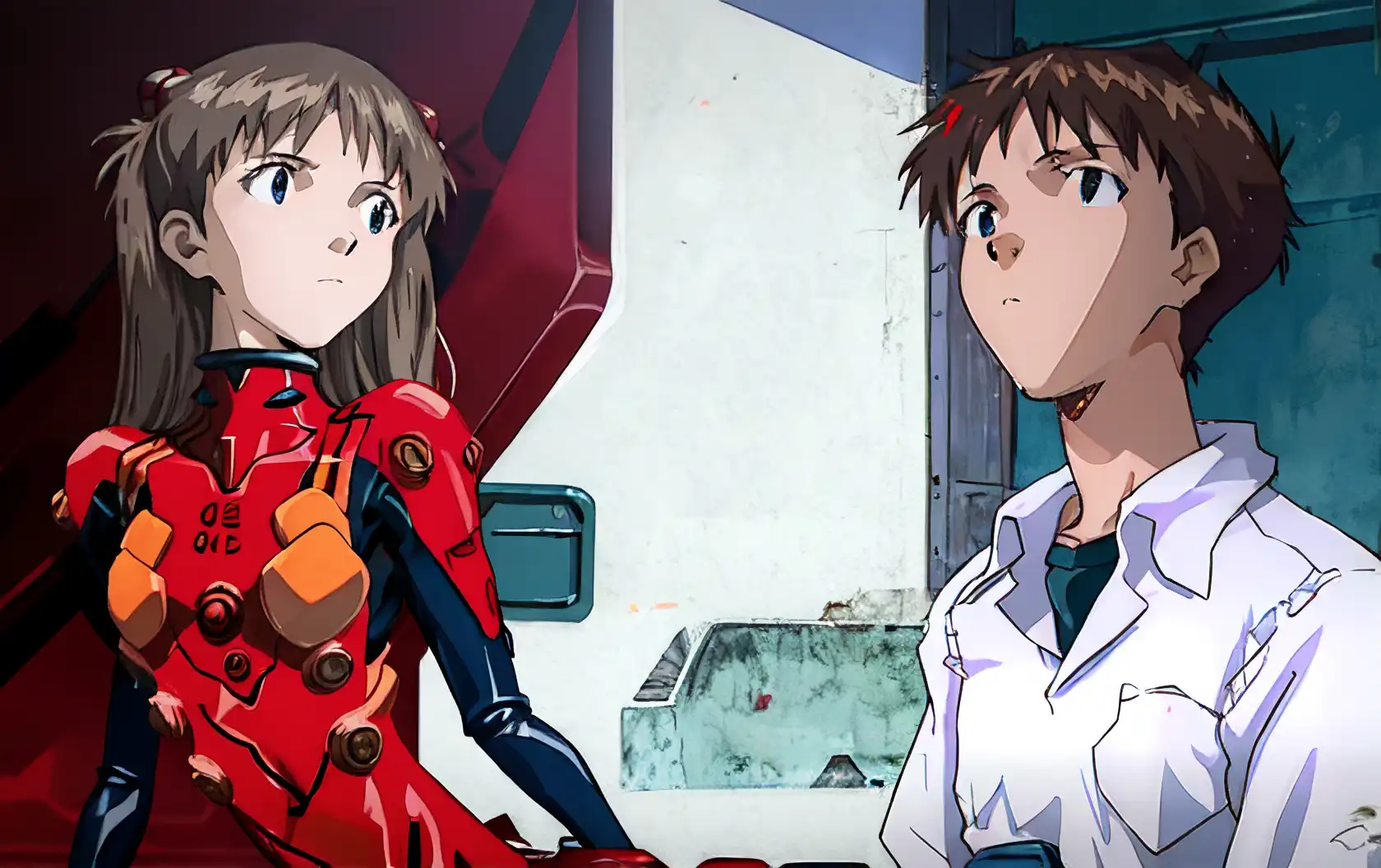

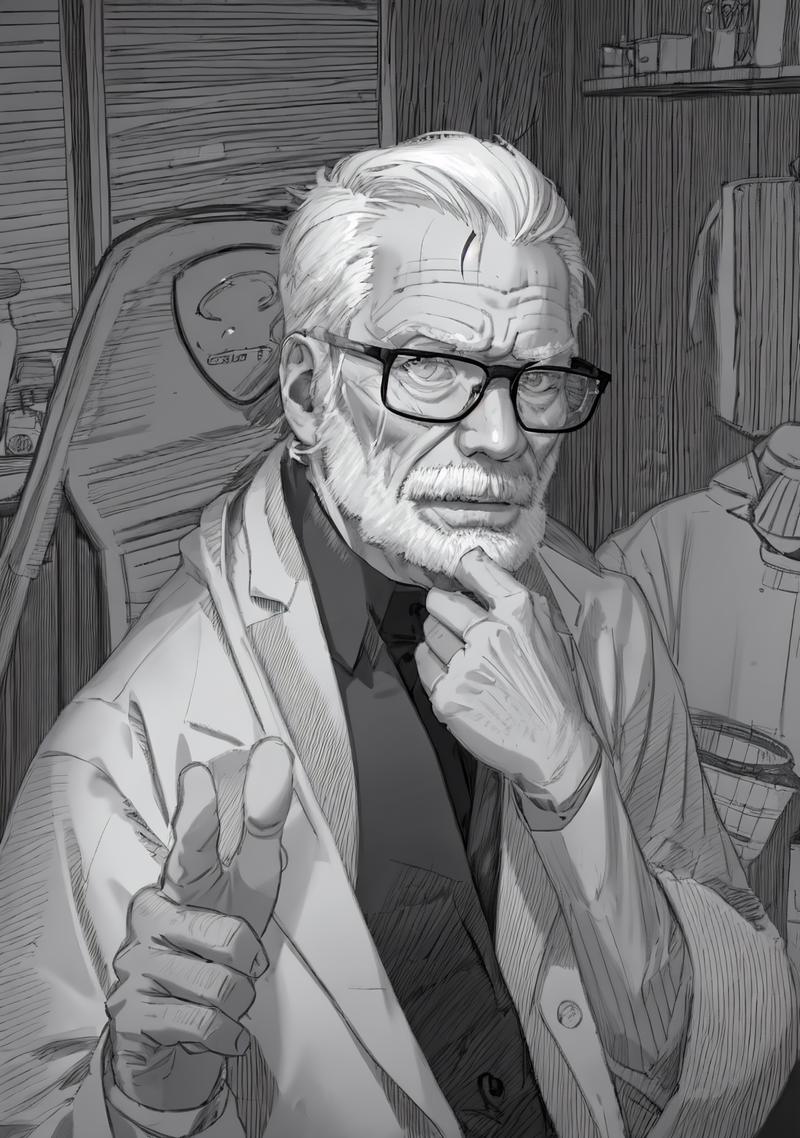

While it works acceptably for Aska and Shinji, let's take a look at how it works for my characters. Character 1, Aaron:

Character 2: Heinz (underrepresented in the dataset)

The Latent Noise Composition approach:

The approach discussed in this section:

That tells us that:

I suck at training LoRAs on characters that don't exist yet (I will probably write another article on how to make a LoRA for characters you have designed without having a dataset in the first place).

The model should have at least a glimpse of the characters you are using. It fails to keep novel characters in mind (or there is a bug in my workflow).

So, fail again.

Characters tend to interact with each other

In the section about LoRAs I mentioned an exotic way to make characters interact. Well, I lied... One can possibly make them at least shake hands.

The trick is to place a ControlNET canny map made from an image of hands precisely where the hands interact (on top of the other conditionings). It would be great if a reader could provide such a workflow (I would include it in the next iteration of this article). It exists, but is lost in the depths of the CivitAI workflows. Hopefully you will come across it someday.

A hand detailer could help too, but I haven't had time to check.
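Preparing such a hint can be sketched as placing a small edge map on an otherwise black, frame-sized control image, plus a mask of that patch so that the ControlNET can be restricted to the hands. The helper below is hypothetical, it assumes the patch is already an edge map (e.g. output of a canny preprocessor), and the sizes and positions are made up.

```python
import numpy as np


def place_hint(frame_h, frame_w, patch, top, left):
    """Place a small control hint (e.g. a canny edge map of two hands) on a
    black frame-sized control image.

    Returns the control image and a mask of the patch area, so that the
    ControlNET can be limited to where the hands are.
    """
    ph, pw = patch.shape
    if top < 0 or left < 0 or top + ph > frame_h or left + pw > frame_w:
        raise ValueError("the hint does not fit inside the frame")
    control = np.zeros((frame_h, frame_w), dtype=patch.dtype)
    control[top:top + ph, left:left + pw] = patch
    mask = np.zeros((frame_h, frame_w), dtype=np.float32)
    mask[top:top + ph, left:left + pw] = 1.0
    return control, mask


# A fake 96x128 edge map standing in for a canny image of a handshake.
hands = np.zeros((96, 128), dtype=np.uint8)
hands[48, :] = 255
control, mask = place_hint(512, 1024, hands, top=300, left=448)
print(control.shape, int(mask.sum()))  # (512, 1024) 12288
```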

Conclusion

Here we have discussed the methods of placing characters within the frame other than inpainting and img2img.

Sadly, having full control over even two characters seems nearly impossible in SD as of now.

In this article we have discussed possible workarounds, but all of them either fail or sacrifice certain aspects one wishes to control. All the comics I know of feature a single character per frame, or several characters that are not controlled (1, 2).

The issues are:

Prompt bleeding, i.e. the attention issue we have discussed.

Probably, conditioning overload: the number of tokens is fairly small.

Lack of a dedicated tool to resolve this issue.

In conclusion, I would like to give my personal opinion on whether this issue will be resolved. I find this problem similar to the one with hands. An (ordinary) hand has five fingers, which is already complex for SD to imitate, since it lacks an understanding of how hands work. The key observation here is that hands are complex: a hand has 5 fingers, 9 joints, and it can hold an object.

Hands are an active area of research, so:

By learning to incorporate the joints of a hand, we could replace those joints with the entities of our image;

By solving the issue of a hand holding something, we would probably resolve the issue of characters interacting with objects.

Once this issue is resolved, we can hope to place whoever we want, wherever we want, in whatever posture we want (and, hopefully, make the characters interact with each other). And since there is a demand for better hands, we should get new tools rather soon.

The possible solutions might be:

Increasing the token limit and adjusting the context window;

Improving how masking and area prompting work;

Enhancing the base models?

I am curious to hear your opinions and observations, so that we can tackle this problem, if possible.