Main problem: decoupling highly correlated concepts and preventing concept leakage.

Note:

I made this an article, but I want it to work more like a forum/discussion board. Instead of an article where I present all the information, I want this to be a community-driven probe into the depths of LoRAs. I will present some of my findings, but I want others to share their own ideas and findings on this topic. I will try to keep all of the examples in this article SFW.

For this article, the baseline/focus is SD1.5 anime models, danbooru-based tags, and solving the problem with LyCORIS or LoRAs (I will only focus on LyCORIS, since it modifies both the attention and conv layers). I assume a well-cleaned dataset, good tagging, and decent LoRA settings as the base standard; these ideas are meant to add some "extra spice" on top of that to elevate the quality.
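For readers less familiar with what a LoRA actually trains, here is the standard LoRA update as a rough reference (this is the general formulation, not something specific to my tests). Each adapted layer keeps the checkpoint weight $W_0$ frozen and learns a low-rank correction:

$$
W = W_0 + \Delta W, \qquad \Delta W = \frac{\alpha}{r}\, B A, \qquad B \in \mathbb{R}^{d_{\text{out}} \times r},\; A \in \mathbb{R}^{r \times d_{\text{in}}}
$$

Here $r$ is the network dimension (rank) and $\alpha$ the network alpha, so the dimension setting roughly caps how much the adapter can store per layer. LyCORIS's LoCon applies the same idea to the convolution layers (the down projection becomes a conv with the original kernel size and the up projection a 1×1 conv), while other LyCORIS algorithms such as LoHa change how $\Delta W$ is factored.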

As an incentive for some, I plan on testing good ideas and tipping the people who share them.

Purpose:

Decoupling is a major problem in many AI applications, and SD/LoRAs are no exception. Many concepts are inherently correlated, and as a creator, the best way to tackle this is to build the LoRA with it in mind from the start. I'm ignoring problems inherent to the checkpoints themselves because I don't have the time or resources to tackle them. My plan is to find a solution that "works" within an imperfect system (SD1.5), which basically means solving as many of these problems as possible with the LoRA alone.

Benefits I see in solving this problem:

Many character outfits could be separated from the character, allowing other characters to wear them. It's easy to make a clothing LoRA (like the heavily meme'd Starbucks apron), but we might also be able to separate a specific piece of clothing that's highly correlated with a specific character.

Similar concepts may be separated into better subsets.

Better object generation with LoRAs (see test results).

Higher sensitivity to tags, without influencing the rest of the generations.

Better control over (intentional) concept leakage.

etc.

Methods considered for tackling this problem:

Better tagging, pruning, and creating new word combinations that leverage the base model's existing knowledge.

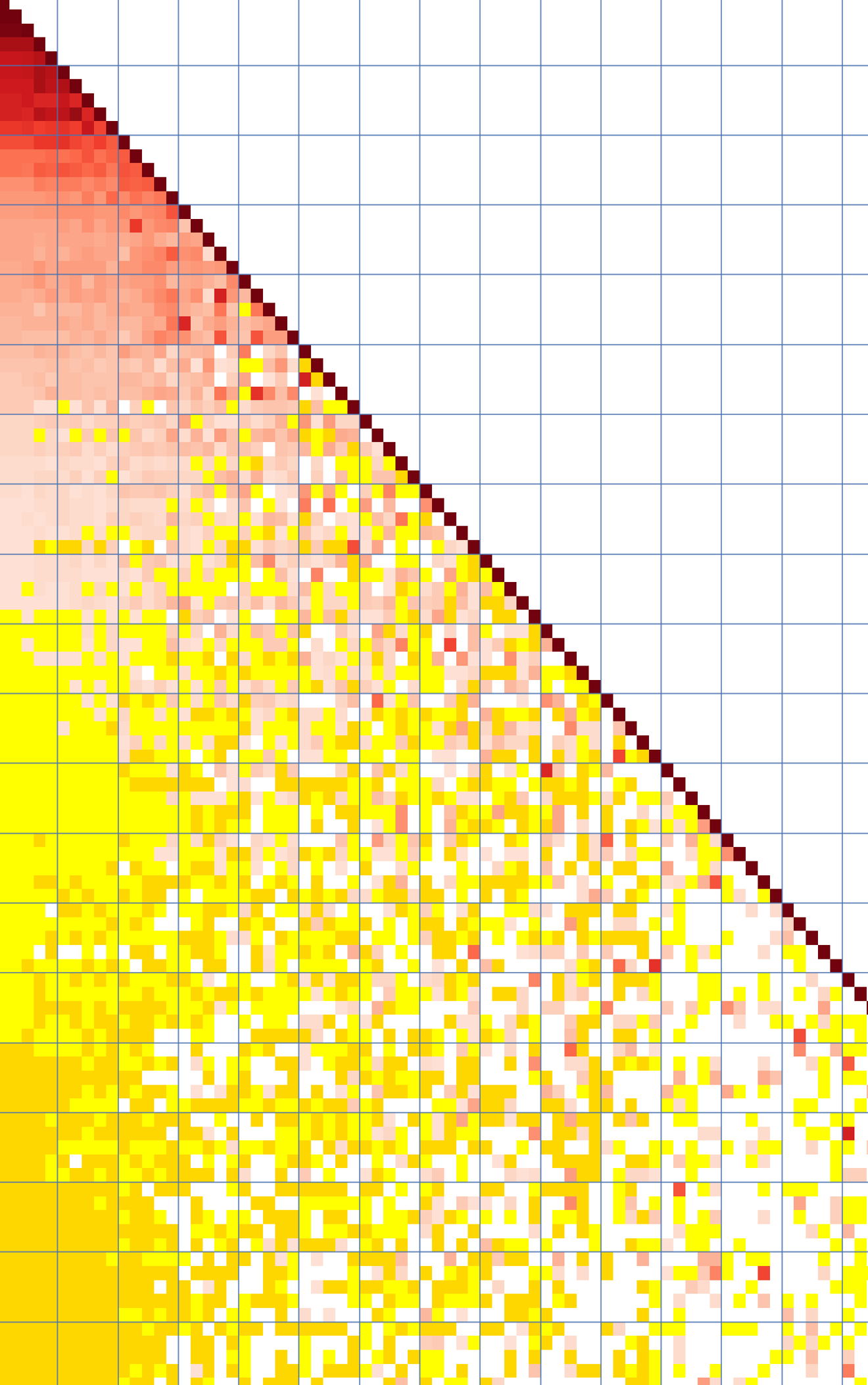

Better understanding of the attention mechanism (I sometimes use the cross-attention extension to visualize the masks, but in general, better knowledge of tags and of where they're generated is beneficial).

Better training settings (modifying memory [dimensions], changing the LR, using multiple trigger words, etc.).

The actual images in the dataset (I'm going to ignore this because sometimes we don't have the luxury of choosing the images in a dataset; basic editing is also assumed for good LoRAs).

Using the hyphen, "-", to make new words. Please check my test results for more info on why I think this might be revolutionary.
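The hyphen item above is easier to reason about once you see how the CLIP text encoder used by SD1.5 splits a tag into pieces before byte-pair encoding (BPE). The sketch below approximates that pre-tokenization step with Python's standard `re` module; the original CLIP tokenizer uses Unicode `\p{L}`/`\p{N}` classes from the third-party `regex` package, so the character classes here are stand-ins. It stops before the BPE merges, so a long word like "mayonnaise" may still be split into several sub-word tokens; real token IDs require the actual tokenizer (e.g. `CLIPTokenizer` from Hugging Face `transformers`). It only shows *what* changes when a hyphen is added, not *why* generation quality improves.

```python
import re

# Approximation of the pre-tokenization regex in CLIP's BPE tokenizer
# (SD1.5's text encoder). CLIP uses \p{L} (letters) and \p{N} (numbers)
# from the third-party `regex` module; stdlib `re` classes stand in here:
#   [^\W\d_]+       -> runs of letters
#   \d              -> a single digit
#   (?:[^\w\s]|_)+  -> runs of punctuation/symbols such as "-"
_CLIP_PRETOKEN = re.compile(
    r"'s|'t|'re|'ve|'m|'ll|'d|[^\W\d_]+|\d|(?:[^\w\s]|_)+",
    re.IGNORECASE,
)


def pre_tokenize(text: str) -> list[str]:
    """Split text into the word-level pieces CLIP hands to BPE (before merges)."""
    cleaned = " ".join(text.split()).lower()  # collapse whitespace, lowercase like CLIP
    return _CLIP_PRETOKEN.findall(cleaned)


for tag in ["mayonnaise bottle", "mayonnaise container", "mayonnaise-container", "cross-arm"]:
    print(f"{tag!r:26} -> {pre_tokenize(tag)}")
```

With this approximation, "mayonnaise container" becomes two pieces while "mayonnaise-container" becomes three, with the hyphen as a piece of its own sitting between the two known words.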

...

Wasabi's test results and memo:

I have some general rules and several case-by-case solutions. I started looking into this in mid-December, so some of my recent LoRAs use these findings, but I keep finding weird/useful results that aren't mentioned anywhere or haven't been well investigated.

General rules that work well: better tagging, separating subsets from larger sets, and intentionally using lesser-known tags to absorb concepts (mostly for clothing/tattoos).
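One way to spot which concepts are likely to be correlated with a trigger before training is to count how often each tag co-occurs with it in the captions. This is a heuristic offered for discussion, not one of the tests in this article. The sketch assumes comma-separated danbooru-style captions; the trigger name `mychar` and the captions themselves are made up. Tags that appear in nearly every caption containing the trigger give the model little evidence to separate them from it, so they are the first candidates for more careful tagging, splitting into subsets, or moving onto a lesser-known tag.

```python
from collections import Counter


def parse_caption(caption: str) -> list[str]:
    """Split a comma-separated danbooru-style caption into lowercase tags."""
    return [t.strip().lower() for t in caption.split(",") if t.strip()]


def co_occurrence(captions: list[str], trigger: str) -> dict[str, float]:
    """Fraction of trigger-tagged captions in which each other tag also appears.

    A ratio near 1.0 means the tag almost never shows up without the trigger
    in this dataset, so the two are hard to tell apart (a leakage candidate).
    """
    trigger = trigger.lower()
    tag_sets = [set(parse_caption(c)) for c in captions]
    with_trigger = [tags for tags in tag_sets if trigger in tags]
    if not with_trigger:
        return {}
    counts = Counter(tag for tags in with_trigger for tag in tags if tag != trigger)
    return {tag: n / len(with_trigger) for tag, n in counts.most_common()}


# Hypothetical captions; "mychar" stands in for a character trigger word.
captions = [
    "mychar, 1boy, black hair, uniform, cigarette",
    "mychar, 1boy, black hair, uniform, mayonnaise-container",
    "mychar, 1boy, black hair, kimono",
    "1boy, brown hair, uniform",
]

for tag, ratio in co_occurrence(captions, "mychar").items():
    print(f"{ratio:.2f}  {tag}")
```

On this toy data, "1boy" and "black hair" score 1.00 and "uniform" about 0.67. The last caption shows "uniform" without the trigger, which is presumably the kind of example that helps an outfit separate from a character.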

I found a very interesting result with the hyphen, "-", starting with my Hijikata LoRA (2 weeks ago). I tried to embed the mayonnaise bottle with the character (because it's his favorite food) and struggled with it. "Mayonnaise bottle" is the proper danbooru tag, but due to the tokenization, the generated object had a wine bottle texture/shape, which makes sense. My next step was to retag "mayonnaise bottle" as "mayonnaise container", which made it better, but it wasn't enough. Out of desperation, I added a hyphen and retagged it as "mayonnaise-container", which improved the generation quality substantially.

I'm still testing the effects of the hyphen, but so far it works well for entangling separate concepts. I won't go into detail, but I'm currently making 2 multi-concept NSFW LoRAs (tentacles and tattoos), and the hyphen plays a huge role in their tags.

For example, if a dataset contains cross tattoos placed on different parts of the body, tagging them as cross-[body part], like "cross-arm", "cross-chest", and "cross-legs", and using those as trigger words works better than other methods. It builds on the base model's existing knowledge of the tags (known tokens), and it can generalize: you might be able to move the tattoo to locations that aren't in the dataset (e.g., "cross-back" or "cross-face").
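The retagging described above can be scripted. This sketch assumes one comma-separated `.txt` caption file per image (a common layout for LoRA trainers); the `RETAG` mapping, the `placement_tag` and `retag_folder` helpers, and the folder layout are hypothetical illustrations of the idea, not the author's actual tooling. It swaps the danbooru tag for the hyphenated word and composes cross-[body part] tags from two known words.

```python
from pathlib import Path

# Hypothetical mapping from an original danbooru tag to a hyphenated "new word".
RETAG = {"mayonnaise bottle": "mayonnaise-container"}


def placement_tag(motif: str, body_part: str) -> str:
    """Join two known words with a hyphen, e.g. ("cross", "arm") -> "cross-arm"."""
    return f"{motif.strip().lower()}-{body_part.strip().lower()}"


def retag_caption(caption: str, retag: dict[str, str], extra: tuple[str, ...] = ()) -> str:
    """Apply the tag mapping, append extra tags, and drop duplicates (order kept)."""
    out: list[str] = []
    tags = [t.strip() for t in caption.split(",") if t.strip()]
    for tag in [retag.get(t.lower(), t) for t in tags] + list(extra):
        if tag not in out:
            out.append(tag)
    return ", ".join(out)


def retag_folder(folder: str, retag: dict[str, str], suffix: str = ".txt") -> None:
    """Hypothetical helper: rewrite every caption file in `folder` in place (UTF-8)."""
    for path in Path(folder).glob(f"*{suffix}"):
        text = path.read_text(encoding="utf-8")
        path.write_text(retag_caption(text, retag) + "\n", encoding="utf-8")


print(retag_caption("1boy, black hair, mayonnaise bottle", RETAG))
# -> 1boy, black hair, mayonnaise-container
print(retag_caption("1girl, tattoo", {}, extra=(placement_tag("cross", "arm"), placement_tag("cross", "chest"))))
# -> 1girl, tattoo, cross-arm, cross-chest
```

Because the hyphenated tags are built from ordinary words, the same helper can name placements that never appear in the dataset (e.g. `placement_tag("cross", "back")`). Whether the model actually renders the tattoo there at inference time is exactly what still needs testing.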

Other results/memos:

NA

I plan on keeping this updated with my notes. Please feel free to add ideas, corrections, test results, or anything else to the discussion.