Many of the online services for AI art and image generation are blazingly fast. Personally, I am happy that I found CivitAI, which provides a decent selection of checkpoints, LoRAs, etc., and the speed is not at all bad for a free server. That made me wonder what kind of hardware one would need to set up, say, a personal Stable Diffusion server. Thus started my journey!

AUTOMATIC1111

(https://github.com/AUTOMATIC1111/stable-diffusion-webui)

After seeing some mentions of this project on CivitAI and other sites, I decided to give it a spin and installed it on my Apple M1 laptop. I was quickly disappointed: not only was the speed poor (about 4 mins for a 512x512 image), but I could neither increase the image size nor run SDXL checkpoints such as StarlightXL.

8 GPU Server:

I decided that the weak GPU in my laptop must be the culprit. I had an unused crypto mining rig with the following specs:

Intel(R) Celeron(R) CPU G530 @ 2.40GHz (2 Cores)

4GB DDR3 RAM

8 x ZOTAC GeForce RTX 3060 12GB RAM

128GB ATA SSD HEORIADY

Hive OS (Linux)

This is a decent machine for mining ETHASH, giving about 400 MH/s. After putting Hive OS into maintenance mode and upgrading the software/drivers, I installed AUTOMATIC1111 on it and had to tinker a bit because the installer for AUTOMATIC1111 was attempting to install an old version of PyTorch. I finally got it running, but I was super disappointed. If my MacBook took 4 mins, this took more than 2 hours to generate a single 512x512 image using SD 1.5 and the Euler Ancestral sampler. Also, only the first GPU was being used.
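One way to confirm which GPUs are actually doing work is to poll `nvidia-smi` while a generation is running. The following is only a minimal sketch, not something taken from this setup: it assumes `nvidia-smi` is on the PATH, and the helper names (`parse_gpu_csv`, `idle_gpus`, `query_gpus`) and the 5% idle threshold are made up for illustration.

```python
import subprocess

# Fields requested from nvidia-smi; with "nounits" the values are plain numbers
# (percent, degrees C, watts, MiB).
QUERY_FIELDS = ["index", "utilization.gpu", "temperature.gpu", "power.draw", "memory.used"]


def _num(field):
    """Convert one CSV field to float; returns None for values like [N/A]."""
    try:
        return float(field)
    except ValueError:
        return None


def parse_gpu_csv(text):
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into a list of dicts."""
    rows = []
    for line in text.strip().splitlines():
        parts = [p.strip() for p in line.split(",")]
        if len(parts) != len(QUERY_FIELDS):
            continue  # skip anything that is not a full data row
        index, util, temp, power, mem = parts
        rows.append({
            "index": int(index),
            "util_pct": _num(util),
            "temp_c": _num(temp),
            "power_w": _num(power),
            "mem_used_mib": _num(mem),
        })
    return rows


def idle_gpus(rows, util_threshold=5.0):
    """Return the indices of GPUs whose utilization is below the (assumed) threshold."""
    return [
        r["index"]
        for r in rows
        if r["util_pct"] is not None and r["util_pct"] < util_threshold
    ]


def query_gpus():
    """Run nvidia-smi once and return the parsed rows (requires an NVIDIA driver)."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return parse_gpu_csv(out)
```

Calling `idle_gpus(query_gpus())` on the rig during a generation would list the cards that are sitting idle; on a setup where only the first GPU is used, it would be expected to return the other seven indices.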

Easy Diffusion

(https://github.com/easydiffusion/easydiffusion)

Further research showed me that trying to get AUTOMATIC1111/stable-diffusion-webui to use more than one GPU is futile at the moment. A forum comment led me to Easy Diffusion, which not only supports multiple GPUs but also has a rich user interface. What I really liked was the ease of installation: download a zip file, unzip it and run start.sh or start.bat. From there, the startup script downloaded everything needed, including the right Python interpreter, NVIDIA drivers, Stable Diffusion models, etc. But my excitement and hope were short-lived, because after all that the server would not start up. I was almost ready to give up until I noticed the minimum memory requirement, which is 8 GB. I ordered an 8 GB RAM module and, while waiting for it, decided to add 32 GB of swap space to see if it would allow me to run the server, even if it would only crawl. Adding swap space did allow me to start the web UI, but I noticed that the server would spend almost 8 mins preparing each GPU before actually running the model. Image generation would then take about 45 mins to complete for only 25 steps. Generation failed more often than not, and creating anything larger than 512x512 was out of the question.

8 GB RAM

The RAM I ordered arrived the next day and I promptly installed it. Replacing the 4 GB RAM with an 8 GB module made a noticeable difference. The server started quickly, and 512x512 image generations took about 56 secs on average for 150 steps when using SD 1.5 or DreamShaper V8. Things are starting to look promising. The best part is that Easy Diffusion uses all eight GPUs. The BIOS on my motherboard says it can support 32 GB (but as 4 modules), yet the motherboard has only one slot. I am not sure if I can put a 16 GB or, better, a 32 GB module in it. I will order a 16 GB and a 32 GB module later to see if I can bump the system's RAM higher.

Here are samples using DreamShaper V8:

Hyperrealistic photo of rooftop: (batman-standing).

Hey! Where is the Batman?

Seed: 3347074776, Dimensions: 512x512, Sampler: euler_a, Inference Steps: 150, Guidance Scale: 7.5

Processed 1 images in 56 seconds.

Could he be Batman?

Seed: 1186799135, Dimensions: 1024x768, Sampler: euler_a, Inference Steps: 150, Guidance Scale: 7.5.

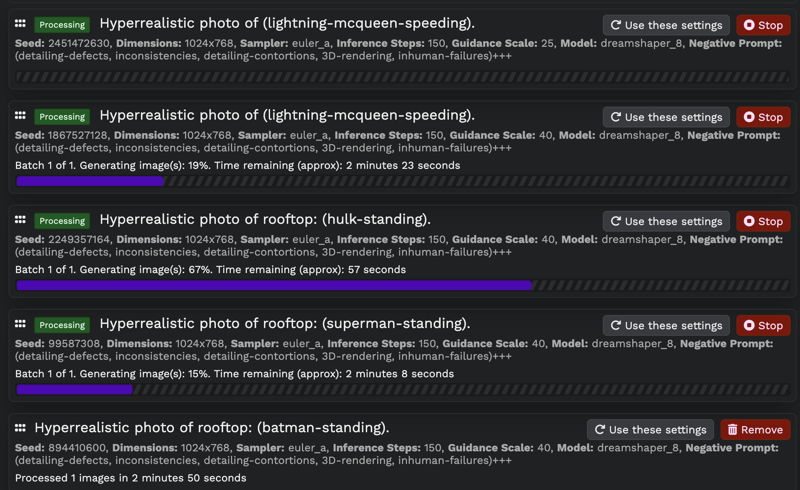

Processed 1 images in 3 minutes 59 seconds

Yay! I can finally generate higher-resolution images. But here is the best part! I can run up to 8 image generations simultaneously.

Finally, my own Stable Diffusion server that is close to usable, if only I could run StarlightXL or Juggernaut XL on it. I tried to run StarlightXL on this setup, but I think the copy of the model I have is corrupted. I will try it again later.

In the meantime, changing the guidance scale started to produce some good images using DreamShaper V8.

prompt: Hyperrealistic photo of (lightning-mcqueen-speeding).

Seed: 2451472630, Dimensions: 1024x768, Sampler: euler_a, Inference Steps: 150, Guidance Scale: 25, Model: dreamshaper_8, Negative Prompt: (detailing-defects, inconsistencies, detailing-contortions, 3D-rendering, inhuman-failures)+++

Performance Tweaks

Now that I have a stable system running, I applied the following changes:

Changed the GPU memory usage setting in Easy Diffusion from "Moderate" to "High", because all the GPUs in my rig have 12 GB each.

I was tempted to tweak the GPU performance parameters, but I noticed that running a 7 GB checkpoint barely put any load on the GPUs. They were sitting at around 21°, drawing only about 30 watts.

XFormers - I had previously removed XFormers because it kept failing miserably, even when generating a 512x512 image using DreamShaper. But I took a deeper look at it again because my more knowledgeable pal stapfschuh pinged me about using it. XFormers can speed up image generation. With XFormers installed, I am able to generate 1024x768 images using DreamShaper in just 14 seconds!

diffusers - Still, I could not get any of my favorite SDXL models to run. There were posts and troubleshooting guides which said that diffusers must be used to run SDXL models. The trouble was that every time I started the system with an SDXL model, the server would take a very long time only to crash at the end, without any kind of error message. Typically this means a memory issue. So I opened htop in another terminal while the Easy Diffusion server was loading. Sure enough, I noticed that the 32 GB of swap space I had added before was close to 100% utilization. So I quickly added another 16 GB of swap space on the fly and boom, the server finally started to run! The first run was slow, close to 3 mins for 1024x768, 25 steps using an SDXL 1.0 checkpoint. But after that it takes only about 10 seconds on average.
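The htop check described above can also be scripted. Below is a rough sketch that reads the standard Linux `/proc/meminfo` file and reports how full the swap is. The function names and the 90% warning threshold are illustrative assumptions, not part of Easy Diffusion or of what was actually run on this rig.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field: value}; sizes are in kB."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue  # not a "Key: value" line
        fields = rest.split()
        if fields and fields[0].isdigit():
            info[key.strip()] = int(fields[0])
    return info


def swap_used_fraction(info):
    """Fraction of swap in use (0.0 to 1.0); 0.0 when no swap is configured."""
    total = info.get("SwapTotal", 0)
    if total == 0:
        return 0.0
    return (total - info.get("SwapFree", 0)) / total


def swap_nearly_full(info, threshold=0.9):
    """True when swap usage is at or above the (assumed) warning threshold."""
    return swap_used_fraction(info) >= threshold


def read_meminfo(path="/proc/meminfo"):
    """Read and parse the live memory statistics (Linux only)."""
    with open(path, "r", encoding="utf-8") as handle:
        return parse_meminfo(handle.read())
```

Running `swap_nearly_full(read_meminfo())` in a loop while a model loads would flag the situation described here, where the swap fills up just before the server crashes silently.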

Recommendations:

The hardware requirements for AUTOMATIC1111 and Easy Diffusion state that a system with 8 GB RAM is sufficient to run Stable Diffusion models. In my experience, this is not accurate. If your system has only 8 GB, then it must be configured with at least 16 GB of virtual memory. However, I would recommend installing as much physical memory as you can before adding virtual memory.

My hardware recommendations

I think that if you use a system with the following minimum specs, you can generate 1024x768 images in about 5 seconds on average using a single GPU.

Intel CPU @ 3 GHz with 4 to 8 cores (Yeah I prefer Intel. But you can use AMD if you want)

16 GB DDR4 RAM

128 to 256 GB SSD.

Any decent RTX GPU with 12GB RAM

I would recommend a total memory of 32 GB, with as much of it as possible coming from physical RAM. If you are going to rely heavily on virtual memory, at least make sure that you are using a high-speed SSD.

What about multiple GPUs?

Well, my setup works very well, but with a few caveats. Since my CPU has only two cores and my system has only 8 GB of DDR3 RAM, when I trigger a batch of 8 jobs (because I have 8 GPUs), it takes about 40 seconds on average for all the jobs to complete. This is because the CPU and memory become the bottleneck when trying to run 8 jobs in parallel. So if you really want to use multiple GPUs, I would recommend a CPU with at least as many cores as GPUs, plus about 8 GB of extra RAM for each additional GPU. Why? Because the diffuser runs mainly on the CPU and main memory, loading and unloading the prepared models into the GPUs.
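The rule of thumb above can be written down as a small calculator. This is only a sketch of my reading of these recommendations: it assumes the single-GPU baseline of 4 cores and 16 GB RAM from the minimum specs, adds 8 GB per additional GPU, and keeps at least one core per GPU. The function names are hypothetical.

```python
BASE_CORES = 4          # assumed baseline: low end of the "4 to 8 cores" suggestion
BASE_RAM_GB = 16        # assumed baseline: single-GPU RAM suggestion
RAM_PER_EXTRA_GPU = 8   # "about 8 GB extra RAM for each additional GPU"


def recommend_host(gpu_count):
    """Suggest CPU cores and RAM (GB) for a host driving `gpu_count` GPUs."""
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return {
        "cpu_cores": max(BASE_CORES, gpu_count),  # at least one core per GPU
        "ram_gb": BASE_RAM_GB + RAM_PER_EXTRA_GPU * (gpu_count - 1),
    }


def effective_seconds_per_image(batch_seconds, jobs):
    """Average wall-clock seconds per image when `jobs` images finish together."""
    if jobs < 1:
        raise ValueError("jobs must be at least 1")
    return batch_seconds / jobs
```

For an 8-GPU rig, `recommend_host(8)` suggests 8 cores and 72 GB of RAM under these assumptions, and `effective_seconds_per_image(40, 8)` works out to 5 seconds per image for the 40-second batch mentioned above.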

Multiple GPUs Enable Workflow Chaining:

I noticed this while playing with Easy Diffusion's face fix and upscale options. With only one GPU enabled, all of these steps happen sequentially on the same GPU. But with more GPUs, a separate GPU is used for each step, freeing up each GPU to perform the same action on the next image.

We all should appreciate CivitAI!

As I was tinkering with my setup, I even looked into using a cloud GPU, which turned out to be costly for my needs. I am not sure what kind of setup CivitAI is using to provide us this service for free, but I am sure it is not cheap or easy, considering the speed at which the image requests are being processed. If you are using CivitAI, I highly encourage you to show your appreciation and support by spending a few dollars every month. Thank you, CivitAI!

I didn't go through the technical stuff in detail in this article, but please feel free to drop a comment if you have suggestions or questions.

![[SOLVED] Stable Diffusion on 8 GPUs](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/a45a6171-5947-45a8-8574-6ebe17b7f4ac/width=1320/a45a6171-5947-45a8-8574-6ebe17b7f4ac.jpeg)