So did you find a solution to the problem I left you with in my previous article? Here is that problem again.

Hyperrealistic side-view photo of three-on-rooftop: (batman-kneeling:1.0), facing (spiderman-standing:1.2), next to (hulk-lying:1.0)

The problem here is that the attributes are bleeding heavily because of the training bias. You may wonder, why I keep calling this training bias. Ideally, to the AI it should not matter who the character is. However, because of the way the models are trained, say with more data on batman and less data about spiderman, or even if we use same amount of data on all three characters, but with one character having more distinct features (for ex. batman), then the model will end up giving higher weights to some.

Let me show what I mean.

Hyperrealistic side-view photo of three-on-rooftop: (man-kneeling), facing (man-kneeling), next to (man-kneeling)

Now the AI does not have any problem giving us the scene we want. Why? Because all are men and they are all doing the same action.

But we want our Batman, Hulk and Spider Man! How can we make the AI to draw a scene with all these three doing exactly what we want? Well, one way is to play the long game of adjusting the inline weights. Here is an example:

Hyperrealistic photo of three-on-rooftop: (batman-kneeling:1.0), facing (spiderman-kneeling:1.2), facing (hulk-kneeling:1.0)

This picture is much better than before. But you can see that Spiderman eyes are bleeding into the other two characters. Hulk is wearing batman's belt and sigil. You may be able to get the scene perfect by tweaking the weights and trying different seeds. But there is a better way. You can use a technique called blend to specify how different characters/objects should be melded into a image.

Here is an example.

Hyperrealistic photo of three-kneeling-on-rooftop: (batman, spiderman, hulk).blend(0.2,0.4,0.2)

Blend is not really an function (like the ones you use in spreadsheets or scripts). But because these LLMs are trained to understand many things, it seems to work very well. What I really think that is happening here is that the keyword "blend" is triggering nodes within checkpoint that are related to training images of blended objects. Of course some UIs such as Easy Diffusion use prompt processor that actually supports this format.

Here are couple simple examples of blend function in action:

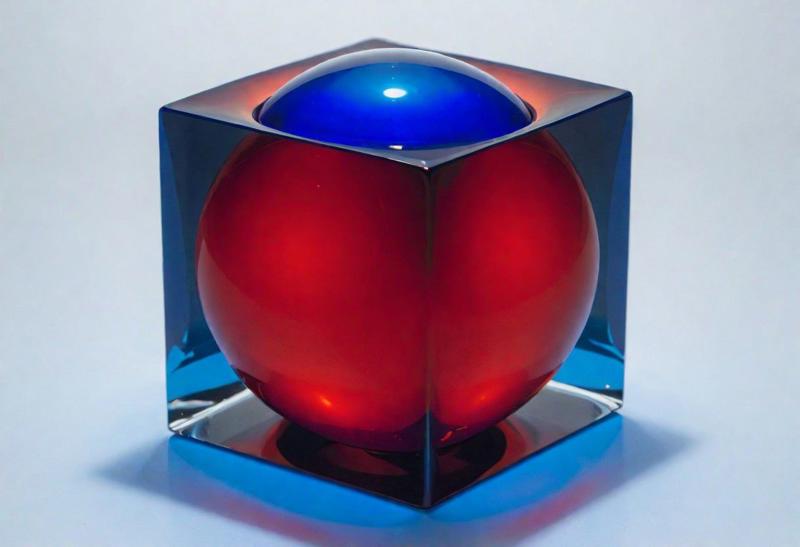

("blue sphere", "red cube").blend(0.5,0.5)

("blue sphere", "red cube").blend(0.7,0.3)

Its good that the blend function seems to work. I am sure that it is not going to work on all checkpoints or UI. So in the next article we will explore some more reliable techniques to build complex scenes quickly.

Hope you found this useful. Do you have other such techniques that works for you? Please drop a comment below.