...Huh?

If you have an NVIDIA GPU with 12 GB of VRAM or more, NVIDIA's TensorRT extension for Automatic1111 is a game-changer. I'm surprised I haven't seen more info about this extension. Below you'll find guidance on installation and tips on how to use it effectively with checkpoints, LoRAs, and hires.fix.

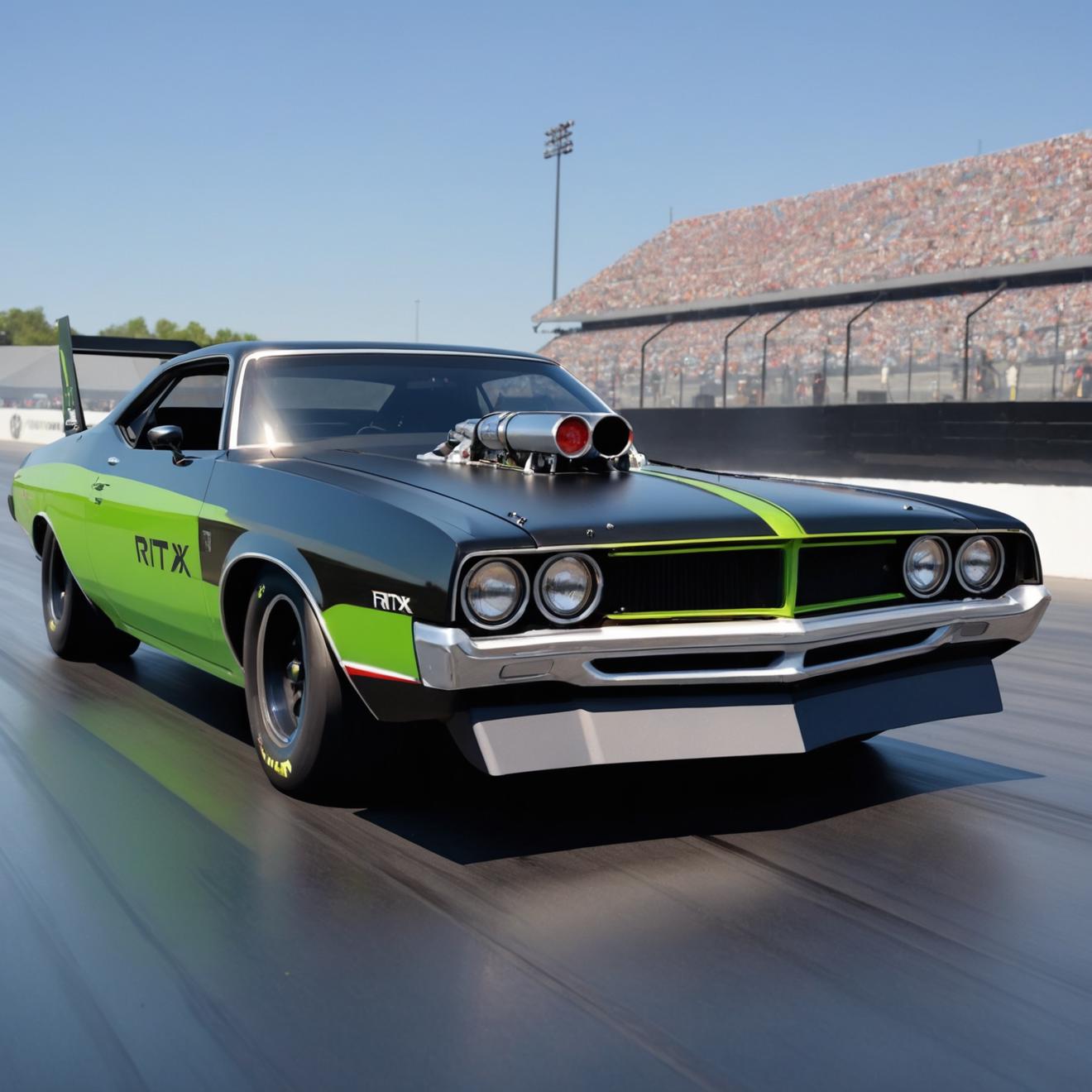

Once you have everything ready, you can generate 1024x1024 SDXL images with 55 steps and hires.fix to 1638x1638 with 15 steps in about 15 seconds on a 4090.

Most of my tests so far have yielded ~50% speed increases, and you can use much higher step counts with barely any impact on generation time. After a few days of messing with it, I can't live without this extension.

It's not very complicated to get up and running, but some additional steps are required to use it with any combination of checkpoints and LoRAs. It also uses a lot of disk space for the engines it needs to create. The instructions on NVIDIA's GitHub repo are a little vague and out of date in some areas, so here's a walk-through!
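Since the engines eat disk space, it can help to check how much they are using. Here's a minimal sketch that sums the size of every file under one or more folders. The function names are my own, and the folder names in the usage note are an assumption about where the extension stores its files, so point it at wherever your engines actually end up.

```python
from pathlib import Path


def dir_size_gb(folder: Path) -> float:
    """Return the total size of all files under `folder`, in gigabytes (10**9 bytes)."""
    total_bytes = sum(p.stat().st_size for p in folder.rglob("*") if p.is_file())
    return total_bytes / 1e9


def report(folders: list[Path]) -> dict[str, float]:
    """Map each existing folder to its size in GB; folders that don't exist are skipped."""
    return {str(f): round(dir_size_gb(f), 2) for f in folders if f.is_dir()}
```

For example, run from the Automatic1111 root, `report([Path("models/Unet-trt"), Path("models/Unet-onnx")])` would return the size of each of those folders if they exist. Both paths are assumed here, not taken from the extension's documentation.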

Make sure to do all steps in the order they appear here, or you'll have a bad time trying to generate custom engines.

Overview:

Install Extension and initial setup

Generate static engine for checkpoint

Generate dynamic engine for checkpoint (requires a static engine first, or you get errors)

Generate TensorRT weights for LoRAs

Installation

In Automatic1111, select the Extensions tab and click on Install from URL.

Copy the link to NVIDIA's repository and paste it into the "URL for extension's git repository" field.

Click the Install button.

Don't touch anything and let it install. When it's done, you'll see a message in Automatic1111.

After the install is complete, click on the Installed tab and hit the Apply and restart UI button to reload Automatic1111.

After Automatic1111 is reloaded, click on the TensorRT tab and hit the Export Default Engine button.

This part takes a while. You can watch Automatic1111's console window to keep track of progress. You might as well go have a snack or something; even on a 4090, I think it took at least ten minutes. Again, it's best not to touch anything or do anything else while it cooks. TensorRT seems to want all the VRAM you can throw at it.
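If you want to see how much VRAM is free before kicking off an export, here's a rough sketch that asks `nvidia-smi` for total and used memory. It assumes `nvidia-smi` is on your PATH and only looks at the first GPU; the function names are mine, not part of the extension.

```python
import subprocess


def parse_vram_mib(csv_line: str) -> tuple[int, int]:
    """Parse one 'total, used' line (values in MiB, no units) into two integers."""
    total, used = (int(field.strip()) for field in csv_line.split(","))
    return total, used


def free_vram_mib() -> int:
    """Query nvidia-smi for the first GPU and return its free memory in MiB."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total,memory.used",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    total, used = parse_vram_mib(out.splitlines()[0])
    return total - used
```

Calling `free_vram_mib()` before an export gives you a quick sanity check. If other programs are already holding a big chunk of VRAM, it's probably worth closing them first.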

Next, go to Automatic1111's Settings tab, type "quicksettings" into the search box at the upper left, and hit Enter.

My quick settings are: sd_model_checkpoint, sd_vae, CLIP_stop_at_last_layers, and sd_unet. The one that's required for TensorRT is sd_unet. Click in the quick settings field, and a drop-down menu will appear. Find sd_unet and select it to add it to your quick settings.

Once it shows up in your quick settings, hit the big Apply settings button above to save it.
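If you'd rather script this than click through the UI, the idea looks roughly like the sketch below. It assumes the web UI keeps its settings in a JSON file with the quick settings stored as a list under a key named `quicksettings_list`. That key name is an assumption on my part and may differ between versions, so check your own config file before trusting it. The UI route above is the safe one.

```python
import json
from pathlib import Path


def add_quicksetting(config: dict, name: str, key: str = "quicksettings_list") -> dict:
    """Return a copy of `config` with `name` appended to the quick settings list if missing.

    The default `key` is an assumed setting name, not a confirmed one.
    """
    updated = dict(config)
    entries = list(updated.get(key, []))
    if name not in entries:
        entries.append(name)
    updated[key] = entries
    return updated


def update_config_file(path: Path, name: str = "sd_unet") -> None:
    """Load a JSON config file, add `name` to the quick settings, and write it back."""
    config = json.loads(path.read_text(encoding="utf-8"))
    path.write_text(json.dumps(add_quicksetting(config, name), indent=4), encoding="utf-8")
```

Back up the config file and close Automatic1111 before running something like `update_config_file(Path("config.json"))`, since the UI may overwrite the file when it saves settings.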

Go to the Extensions tab again, and hit the Apply and restart UI button to reload Automatic1111.

After things have reloaded, set your sd_unet to "Automatic".

Now, for each checkpoint you want to use while TensorRT is active, you need to generate an engine for it. Buckle up, you're about to go twice as fast! First we need to generate a static engine, and then we can create dynamic engines for other resolutions.

Generating static TensorRT Engines for Checkpoints

I seem to get errors about VRAM unless I generate a static engine first, so we'll generate a static 1024x1024 SDXL engine, and then a dynamic SDXL engine for the resolution we'll end up at using hires.fix.

Load a checkpoint how you normally would in Automatic1111. I use SDXL so this example shows you how to create engines for it with the supported batch size of 1. SD1.5 is supported as well and can generate engines that go up to a batch size of 4.

Once your checkpoint is loaded, navigate to the TensorRT tab.

Pick 1024x1024 in the Preset drop-down menu. Click the Export Engine button when done.

Don't touch anything and wait for it to generate a TensorRT engine for your particular checkpoint and resolution settings. This part is VRAM intensive!

Once the engine has generated, you'll get a message on the extension page. Hit the refresh button by your SD Unet quick settings, and you'll see your new engine in the drop-down (I used the fantastic Cheyenne checkpoint for this demonstration).

Note: I'm not sure whether you need to select the active checkpoint's engine or can leave it on Automatic, but I always select the engine matching the checkpoint I'm using.

Generate some stuff and enjoy the massive speed boost! The next section will walk you through generating a dynamic engine, which you need if you want to do upscaling with hires.fix or any custom resolutions instead of 1024x1024.

Generating Dynamic TensorRT Engines for Checkpoints

Load a checkpoint how you normally would in Automatic1111. I use SDXL and hires.fix, so this example shows you how to create a Dynamic TensorRT engine based on generating at 1024x1024 with hires.fix upscaling to 1638x1638.

Once your checkpoint is loaded, navigate to the TensorRT tab.

Pick 1024x1024 in the Preset drop-down menu. Uncheck "Use static shapes" and set the optimal and max height/width fields to 1638x1638. Click the Export Engine button when done.

Don't touch anything and wait for it to generate a TensorRT engine for your particular checkpoint and resolution settings. This part is VRAM intensive!

Note: If you want to use different resolutions, the Min height/width is the resolution your checkpoint generates at. Set Optimal and Max height/width to the resolution hires.fix will be upscaling to.
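To work out those numbers for other resolutions, here's a small sketch of the arithmetic. It assumes the hires.fix target is the base size times the upscale factor, truncated to whole pixels. The 1.6 factor in the usage note is my guess at what produces 1638 from 1024; it isn't stated anywhere above. The function names are mine.

```python
def hires_target(base: int, scale: float) -> int:
    """Upscaled edge length in pixels, truncated to a whole number (assumed rounding)."""
    return int(base * scale)


def dynamic_profile(base_w: int, base_h: int, scale: float) -> dict[str, tuple[int, int]]:
    """Min is the base resolution; optimal and max are both the hires.fix target."""
    target = (hires_target(base_w, scale), hires_target(base_h, scale))
    return {"min": (base_w, base_h), "opt": target, "max": target}
```

With these assumptions, `dynamic_profile(1024, 1024, 1.6)` gives a min of (1024, 1024) and an optimal and max of (1638, 1638), which matches the values used in this walk-through.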

Once the engine has generated, you'll get a message on the extension page. Hit the refresh button by your SD Unet quick settings, and you'll see your new engine in the drop-down (I used the fantastic Cheyenne checkpoint for this demonstration).

Note: I'm not sure whether you need to select the active checkpoint's engine or can leave it on Automatic, but I always select the engine matching the checkpoint I'm using.

Generate some stuff and enjoy the massive speed boost! If you want to use LoRAs as well, check out the next section. If you want to try it with a different checkpoint, load it normally, generate a static engine, then generate dynamic engines based on the resolution you want to use.

Generating TensorRT weights for LoRAs

To use LoRA with TensorRT, you need to export weights so the extension can work with the LoRA (or something like that, based on their documentation). This part's easy, but with one caveat: The extension only sees LoRAs that are in your root models/LoRA folder, not sub-folders. So if you have a ton and have organized them into folders, you'll need to move the LoRA you want to export weights for into the root LoRA folder. The good news is, once the weights are generated, you can move it back to where it belongs!
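If you shuffle LoRAs in and out of the root folder a lot, a tiny helper can save some clicking. This is only a sketch: the function names are mine, and the paths in the usage note are hypothetical examples, so adjust them to your own install.

```python
import shutil
from pathlib import Path


def move_to_root(lora_file: Path, lora_root: Path) -> Path:
    """Move a LoRA file from a sub-folder into the root LoRA folder; return its new path."""
    destination = lora_root / lora_file.name
    if destination.exists():
        raise FileExistsError(f"{destination} already exists")
    shutil.move(str(lora_file), str(destination))
    return destination


def move_back(root_copy: Path, original_path: Path) -> Path:
    """Return the LoRA to its original sub-folder once the weights have been exported."""
    original_path.parent.mkdir(parents=True, exist_ok=True)
    shutil.move(str(root_copy), str(original_path))
    return original_path
```

For example, with a hypothetical file at `models/LoRA/styles/my_style.safetensors`, you'd call `move_to_root(...)` with that path and the `models/LoRA` folder, convert the LoRA in the TensorRT tab, then call `move_back(...)` to put it back where it was.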

For a LoRA sitting in the root LoRA models folder, navigate to the TensorRT tab and pick the TensorRT LoRA tab. Select the LoRA you want to export TensorRT weights for, and hit the Convert to TensorRT button that appears.

Don't touch anything and wait until it says it's done. Once it does, you can use the LoRA like you normally would in a prompt!

Thanks for reading, and I hope you enjoy the warp speed. Please post your experience in the comments, and if you see anything that can be improved in the tutorial, let me know!