Training a LyCORIS for SDXL works much the same way as training a LoRA or LyCORIS for SD1.5. The main difference is the higher image resolution, which will bloat training time if you reuse your SD1.5 training settings.

If you already know how to train a style LoRA, the following post discusses how to train a LoRA in Kohya on 8GB VRAM:

https://www.reddit.com/r/StableDiffusion/comments/15l9onu/its_possible_to_train_xl_lora_on_8gb_in/

I have attached the training settings I use to this guide. They are for a LyCORIS, but I think LoRA and LyCORIS settings are pretty interchangeable now.

There are many guides on how to train a LoRA so I will keep this very short.

Collect a dataset for the style

Clean and tag the dataset for training

Train the LoRA in Kohya

Test the LoRA

Collecting a dataset for the style:

You need to find around 100-200 images for the style you want to train. Fewer can still work, but the result will be more limited and you will want to train for more epochs later in Kohya.

To find the images check:

Kemono.su | e-hentai.org | rule34.xxx | https://danbooru.donmai.us | X/Instagram/reddit/deviantart/artstation/etc.

You can use programs like these to make it easier:

https://github.com/Bionus/imgbrd-grabber

https://github.com/AlphaSlayer1964/kemono-dl

Place all the images into the same folder.

Clean and tag the dataset for training:

First, run dupeGuru in Picture mode on the folder containing all the images.

https://dupeguru.voltaicideas.net

Delete any duplicate images. Also delete any GIFs, MP4s and other non-image files.

Sort the images by date and delete any old images that do not match the style you are attempting to create. Depending on how you got the images, some old images will be mixed in with the newer ones; delete them whenever you find them. This is entirely subjective but will have a large impact on how strong and accurate the resulting style is.
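If you would rather not hunt for the non-image files by eye, a small script can list them for you. This is only a minimal sketch: the extension list and both function names are my own assumptions, and it only reports files, it does not delete anything.

```python
from pathlib import Path

# Extensions treated as still images. This set is an assumption; adjust it to your dataset.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp", ".bmp"}


def split_images(names):
    """Split file names into (keep, discard) lists based on their extension."""
    keep, discard = [], []
    for name in names:
        if Path(name).suffix.lower() in IMAGE_EXTENSIONS:
            keep.append(name)
        else:
            discard.append(name)
    return keep, discard


def list_non_images(folder):
    """Return the names of files in `folder` that are not still images."""
    names = [p.name for p in Path(folder).iterdir() if p.is_file()]
    return split_images(names)[1]
```

Calling `list_non_images` on your dataset folder gives you the GIFs, MP4s and other stray files to review before you delete them by hand.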

(*OPTIONAL - SPEEDS UP LAMA*)

Sort your images into 3 folders:

Square

Vertical

Horizontal

For some images it is obvious which shape they fit. For others, you can use this step to quickly remove a lot of the unwanted parts of the image by cropping it to a different shape. Comic pages with one large section that you want to train on can be used by cropping them down to that section here.
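The first pass of sorting into Square, Vertical and Horizontal can be roughed out from the image dimensions alone. The sketch below is an illustration only: `classify_shape` and its `tolerance` cutoff are hypothetical, and you would still want to move images by hand when a different crop gives a better result.

```python
def classify_shape(width, height, tolerance=0.1):
    """Return 'Square', 'Vertical' or 'Horizontal' for an image size.

    `tolerance` is an assumed cutoff: aspect ratios within 0.1 of 1:1 count as square.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    ratio = width / height
    if abs(ratio - 1.0) <= tolerance:
        return "Square"
    return "Horizontal" if ratio > 1.0 else "Vertical"
```

If you have Pillow installed, `Image.open(path).size` returns `(width, height)`, which you can pass straight into this function.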

Now crop and resize the images from each folder:

Square images are cropped and resized to 1024x1024.

Vertical and horizontal images can be cropped and resized to around 832x1216 or 886x1182 (with width and height swapped for horizontal images).

Save all the output images to the same folder.
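To show what cropping to a target shape means in numbers, here is a minimal sketch that computes the largest centered crop matching a target resolution such as 1024x1024 or 832x1216. The function name is hypothetical and a centered crop is my assumption; in practice you will often want to move the crop window by hand to keep the subject in frame.

```python
def center_crop_box(width, height, target_w, target_h):
    """Return (left, top, right, bottom) of the largest centered crop
    that has the same aspect ratio as target_w x target_h."""
    target_ratio = target_w / target_h
    if width / height > target_ratio:
        # Source is too wide: keep the full height and trim the sides.
        crop_h = height
        crop_w = round(height * target_ratio)
    else:
        # Source is too tall: keep the full width and trim top and bottom.
        crop_w = width
        crop_h = round(width / target_ratio)
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return (left, top, left + crop_w, top + crop_h)
```

With Pillow, the returned box can be passed to `Image.crop(box)`, followed by `Image.resize((target_w, target_h))` to reach the final training size.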

Now you want to sort the images into 3 new folders to clean up any text that might be on them.

Good - Images that are ready for training

BGRem - Images where you want to remove the whole background. This usually removes any speech bubbles too.

Lama - Images that have some small issues like little bits of text over a character or a watermark.

I also make 2 more empty folders:

Bgremd - Images with their backgrounds removed

Lamad - Images that have been cleaned with lama

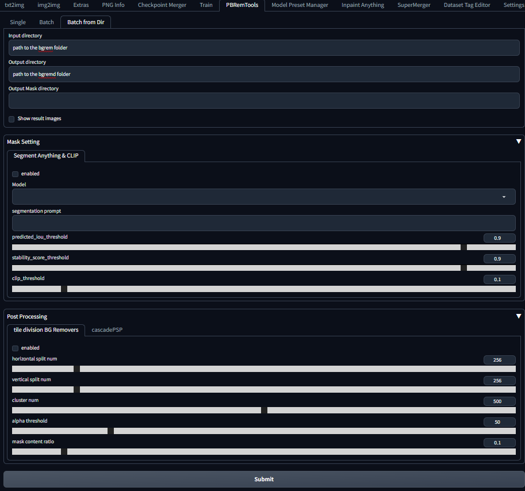

Bgrem A1111:

Hit submit and wait for it to finish. Check the resulting images. If any became very rough looking or had text survive, send the original image (from the bgrem folder) to the lama folder.

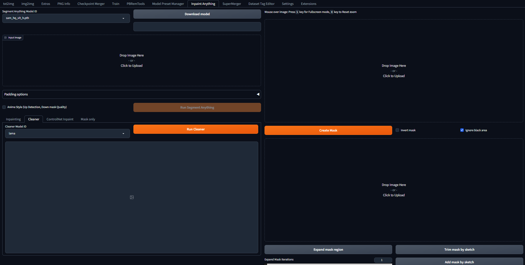

Lama A1111:

Drop an image from the lama folder in the top left. Paint over anything you want to clean in the bottom right and hit 'add mask by sketch'. Then hit 'run cleaner'. Avoid painting over characters. Save the resulting image to the lamad folder.

If it deforms the image too much, just send the original from the lama folder to the good folder or delete it, depending on how much you want it to learn from that image.

Now extract all the images from the bgremd, lamad and good folders and put them into a new folder called 'X_artstyle' where X is the number of repeats you want. This will repeat the entire dataset in that folder when training, so if you have 200 images and title the folder '5_artstyle' you would get 1000 steps per epoch (at a batch size of 1). I usually aim for 2500 steps; more is usually better, it just takes longer. Some styles will need longer in the oven than others, but I wouldn't go over 10 repeats here, just do more epochs later.
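The folder naming and the arithmetic behind it can be sketched like this. Both function names are hypothetical, and the count assumes a batch size of 1 and a single epoch.

```python
def parse_repeat_folder(folder_name):
    """Split a folder name like '5_artstyle' into (repeats, name)."""
    prefix, sep, name = folder_name.partition("_")
    if not sep or not prefix.isdecimal():
        raise ValueError(f"expected '<repeats>_<name>', got {folder_name!r}")
    return int(prefix), name


def steps_per_epoch(num_images, folder_name):
    """Number of image passes in one epoch, assuming a batch size of 1."""
    repeats, _ = parse_repeat_folder(folder_name)
    return num_images * repeats
```

For the example in the text, `steps_per_epoch(200, "5_artstyle")` gives 1000.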

Now you need to tag the images.

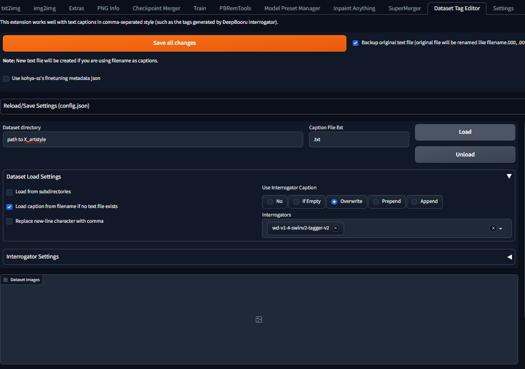

Dataset tag editor:

Hit load on that extension in A1111. I usually just hit save all changes when it finishes and call it a day, but if you want, you can edit the tags manually in that extension, or save the changes first and then edit the tags with:

https://github.com/starik222/BooruDatasetTagManager

The more effort you put into tagging the images for any dataset, the higher the quality of the resulting model. This goes for characters, styles and especially checkpoints, and is probably the reason that ponyXL is so good. It is by far the most time-consuming step if you choose to do it, but if there is a style that you really want to turn out well then you should manually tag it with a long description for each image (e.g. mature businesswoman sitting on chair in office looking at viewer) followed by tags for every element of the image.

You need to leave in any tags describing what is in the image and ideally remove any tags related to the style itself (realistic, 3dcg, sketch etc.). Don't add a trigger word; it is only really worth having for characters. If you are training a character, you should describe their unique outfits with unique tags (e.g. Tsunade's green jacket would be tsunJacket) and include a trigger word that is unique for the character. Do not include tags for things that should not change with the character (e.g. Tsunade's blue diamond on her head would not get a tag, nor would you mention her hair color since it should always be blonde).
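Removing the style tags can also be scripted. The sketch below assumes each caption is a single line of comma-separated tags in a .txt file next to the image; the `STYLE_TAGS` set and the function name are placeholders to extend for your own dataset.

```python
# Style-related tags to drop. Only the three from the text are listed; add your own.
STYLE_TAGS = {"realistic", "3dcg", "sketch"}


def strip_style_tags(caption, style_tags=STYLE_TAGS):
    """Remove style tags from a comma-separated caption, keeping the tag order."""
    tags = [tag.strip() for tag in caption.split(",")]
    kept = [tag for tag in tags if tag and tag.lower() not in style_tags]
    return ", ".join(kept)
```

You would read each caption file, pass its contents through `strip_style_tags`, and write the result back. Keep a backup of the original captions in case you remove too much.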

Now the dataset is ready for training.

Train the LoRA in Kohya:

https://github.com/bmaltais/kohya_ss

Use the attached .json for training. Or use the edgLoRAXL preset in Kohya if you really need to make a LoRA.

You want roughly 3000 or more steps at a batch size of 2, so if you have a dataset of 100 images, use 10 repeats and 6 or more epochs for 3000+ steps. Train for more epochs if testing shows it needs them.

Batch size is going to depend on your VRAM and the image sizes. Lower it if you need to. Don't go above a batch size of 2 even if you can; it will negatively impact accuracy.

Hit start training when ready. It will take a while to finish.
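The step count works out as images x repeats x epochs / batch size. Here is a minimal sketch of that arithmetic with hypothetical function names. Because bucketing groups images into batches per resolution, the number Kohya reports may differ slightly from this estimate.

```python
import math


def total_steps(num_images, repeats, epochs, batch_size):
    """Estimate total optimizer steps for a training run."""
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs


def epochs_needed(num_images, repeats, batch_size, target_steps):
    """Smallest number of epochs that reaches at least `target_steps`."""
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return math.ceil(target_steps / steps_per_epoch)
```

For the example above, `total_steps(100, 10, 6, 2)` is 3000, and `epochs_needed(100, 10, 2, 3000)` is 6.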

Test the LoRA:

Pretty self-explanatory. If the style isn't coming through super strong on PonyXL you might need to put '3d' in the negatives, or retrain with more repeats/epochs.

Here is a style LoRA I trained using this method. I've attached the training data on the model page if you want to see what it looks like before it was curated/after it was cleaned (Pony V1.0).

https://civitai.com/models/211726/incase-style-lora-for-ponyxl-15

If the json isn't working I'll just paste the contents here:

{

"LoRA_type": "LyCORIS/LoCon",

"LyCORIS_preset": "full",

"adaptive_noise_scale": 0,

"additional_parameters": "--max_grad_norm=0",

"block_alphas": "",

"block_dims": "",

"block_lr_zero_threshold": "",

"bucket_no_upscale": true,

"bucket_reso_steps": 64,

"cache_latents": true,

"cache_latents_to_disk": true,

"caption_dropout_every_n_epochs": 0.0,

"caption_dropout_rate": 0,

"caption_extension": ".txt",

"clip_skip": "1",

"color_aug": false,

"constrain": 0.0,

"conv_alpha": 4,

"conv_block_alphas": "",

"conv_block_dims": "",

"conv_dim": 8,

"debiased_estimation_loss": false,

"decompose_both": false,

"dim_from_weights": false,

"down_lr_weight": "",

"enable_bucket": true,

"epoch": 3,

"factor": -1,

"flip_aug": false,

"fp8_base": false,

"full_bf16": false,

"full_fp16": false,

"gpu_ids": "",

"gradient_accumulation_steps": 1,

"gradient_checkpointing": true,

"keep_tokens": "0",

"learning_rate": 1.0,

"logging_dir": "",

"lora_network_weights": "",

"lr_scheduler": "cosine",

"lr_scheduler_args": "",

"lr_scheduler_num_cycles": "",

"lr_scheduler_power": "",

"lr_warmup": 0,

"max_bucket_reso": 2048,

"max_data_loader_n_workers": "0",

"max_grad_norm": 1,

"max_resolution": "1024,1024",

"max_timestep": 1000,

"max_token_length": "225",

"max_train_epochs": "",

"max_train_steps": "",

"mem_eff_attn": false,

"mid_lr_weight": "",

"min_bucket_reso": 256,

"min_snr_gamma": 0,

"min_timestep": 0,

"mixed_precision": "fp16",

"model_list": "custom",

"module_dropout": 0,

"multi_gpu": false,

"multires_noise_discount": 0.3,

"multires_noise_iterations": 6,

"network_alpha": 4,

"network_dim": 8,

"network_dropout": 0,

"noise_offset": 0.0357,

"noise_offset_type": "Multires",

"num_cpu_threads_per_process": 2,

"num_machines": 1,

"num_processes": 1,

"optimizer": "Prodigy",

"optimizer_args": "decouple=True weight_decay=0.5 betas=0.9,0.99 use_bias_correction=False",

"output_dir": "",

"output_name": "",

"persistent_data_loader_workers": false,

"pretrained_model_name_or_path": "",

"prior_loss_weight": 1.0,

"random_crop": false,

"rank_dropout": 0,

"rank_dropout_scale": false,

"reg_data_dir": "",

"rescaled": false,

"resume": "",

"sample_every_n_epochs": 0,

"sample_every_n_steps": 0,

"sample_prompts": "",

"sample_sampler": "euler_a",

"save_every_n_epochs": 1,

"save_every_n_steps": 0,

"save_last_n_steps": 0,

"save_last_n_steps_state": 0,

"save_model_as": "safetensors",

"save_precision": "fp16",

"save_state": false,

"scale_v_pred_loss_like_noise_pred": false,

"scale_weight_norms": 1,

"sdxl": true,

"sdxl_cache_text_encoder_outputs": false,

"sdxl_no_half_vae": true,

"seed": "12345",

"shuffle_caption": true,

"stop_text_encoder_training_pct": 0,

"text_encoder_lr": 1.0,

"train_batch_size": 2,

"train_data_dir": "",

"train_norm": false,

"train_on_input": false,

"training_comment": "",

"unet_lr": 1.0,

"unit": 1,

"up_lr_weight": "",

"use_cp": true,

"use_scalar": false,

"use_tucker": false,

"use_wandb": false,

"v2": false,

"v_parameterization": false,

"v_pred_like_loss": 0,

"vae": "",

"vae_batch_size": 0,

"wandb_api_key": "",

"weighted_captions": false,

"xformers": "xformers"

}
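If you want to tweak a few values, such as the epoch count or the empty path fields, without editing the JSON by hand, something like the following would work. It is a sketch only: the function names are my own, and it assumes you have saved the settings above to a file. Unknown keys are rejected so a typo does not silently add a setting that Kohya ignores.

```python
import json


def apply_overrides(settings, overrides):
    """Return a copy of `settings` with `overrides` applied, rejecting unknown keys."""
    unknown = sorted(set(overrides) - set(settings))
    if unknown:
        raise KeyError(f"keys not present in the settings: {unknown}")
    updated = dict(settings)
    updated.update(overrides)
    return updated


def patch_settings_file(src_path, dst_path, overrides):
    """Load a settings JSON, apply the overrides and write the result to a new file."""
    with open(src_path, encoding="utf-8") as f:
        settings = json.load(f)
    with open(dst_path, "w", encoding="utf-8") as f:
        json.dump(apply_overrides(settings, overrides), f, indent=2)
```

For example, `patch_settings_file("settings.json", "settings_6ep.json", {"epoch": 6})` would write a copy with 6 epochs instead of 3, where both file names are placeholders.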