If you are new to Stable Diffusion and want to learn easily to train it with very best possible results, this article is prepared for this purpose with everything you need.

Most up to date original Source of this article (Public Post) : https://www.patreon.com/posts/full-workflow-sd-98620163

I have developed better workflows recently than that I did with Automatic1111 DreamBooth extension. Much better than that old one. Moreover, Automatic1111 DreamBooth extension is not anymore maintained so do not use it.

I did 120+ trainings for SDXL to find the very best workflow and settings.

I did over 70+ trainings for SD 1.5 based models to find very best workflow and settings.

If you have further questions please leave a comment and hopefully I will keep updating this article.

If you are overwhelmed with all the info, I am giving 1 to 1 private lectures as well

Message me from Discord

Requirements

You need to have Python 3.10.11 installed. I suggest you to install as shown in below video:

Also install ffmpeg, C++ tools & Git.

Don’t forget to install the Visual Studio 2015, 2017, 2019, and 2022 redistributable as well.

Install Python directly into your C drive like shown in above video.

How To Install & Use Kohya SS GUI On Windows & RunPod & Kaggle

The big video I explained how to install and use Kohya GUI on Windows is in below video:

The big video I explained how to install Kohya GUI on RunPod is below:

These 2 above videos shows how to train LoRA but everything same with DreamBooth. Just use DreamBooth tab and our shared best configs.

Our RunPod Kohya GUI SS installer script: https://www.patreon.com/posts/84898806

A quick video where I explained how to quickly use our Kohya GUI configs is below:

If you can’t afford RunPod and don’t have a decent GPU, we have a Free Kaggle account notebook that supports Kohya SS GUI and it is shown in below video:

Kaggle Kohya Script notebook link : https://www.patreon.com/posts/kohya-sdxl-lora-88397937

This notebook has a cell that downloads best SDXL and SD 1.5 configs for Kaggle.

On Kaggle, I suggest you to train SD 1.5 model. It is better since on Kaggle we can’t use BF16 for SDXL training due to GPU model limitation. Kaggle GPUs are old. Also we can’t train with full precision Float (FP32) due to VRAM limitation.

However on Kaggle we can train SD 1.5 with full precision Float (FP32) therefore can obtain amazing results.

Also on this Kaggle video (below link) I have explained my recent 200 repeat and saving every N steps checkpoints strategy which is better:

For example if you have 16 training images, 200 repeat and 1 epoch with reg images means 16x200x1x2 = 6400 steps. The last 2 comes from reg images. So if you want to save 10 checkpoints and compare, save every 641 steps. This will give you 10 checkpoints.

After training completed, do x/y/z checkpoint comparison and decide the best model.

x/y/z checkpoint comparison is shown in below video:

Why 200 repeat and 1 epoch better? Because with 200 repeating, we are able to utilize more ground truth absolute quality regularization / classification images.

Ground Truth Very Best Regularization / Classification Images

You can see our both Man and Woman reg / class images datasets here with all resolutions you may need (all pre-prepared — takes days) : https://www.patreon.com/posts/massive-4k-woman-87700469

These regularization / classification images are manually collected by me and prepared by me from Unsplash and sorted with best quality. The preparatın took over few weeks in total. There are 5200 images for each class Man and Woman.

After Detailer Extension

To improve faces significantly, I use After Detailer (ADetailer) extension. This extension automatically masks the face and inpaint it with the settings you provide. This will improve face significantly. Of course, the results depends on your initial training quality and the quality and resemble and quality of the initially generated image.

In below video how to use ADetailer extension is explained:

Always you can look video chapters : 32:07 How to install after detailer (adetailer) extension to improve faces automatically

After installing ADetailer extension from extensions menu, please restart the Automatic1111 for it to fully and properly install

Specific settings for SD 1.5 and SDXL shown below in their sections

How To Crop And Resize Your Training Images

You can use our state of the art auto crop and resize scripts. They are shared here : https://www.patreon.com/posts/sota-subject-and-88391247

You can watch crop and resize scripts video in below link:

The first cropping script will zoom in the person in the image with as much as trying to match the target aspect ratio without cropping any part of the human subject. It supports may other classes as well such as : ‘person’, ‘bicycle’, ‘car’,… Please check the patreon post for full classes.

Then with resize script, we focus on the face on the image and resize into exact target resolution. This crops the person body parts if necessary with focusing face.

Which Captions / Tokens To Use For Training

For training captions / tagging, if it is a person i just use ohwx man as training token and man as reg images token. You can define them either via folder name or with image_file_name.txt files. If you are training a woman use woman or whatever you are training use that class token. Like ohwx car.

If you want to train a style or other stuff, try ohwx + class + captioning and compare it with just ohwx class.

If you want to learn what is ohwx and why we use such rare token watch below video:

This above is old but it is gem to learn rare tokens and class tokens

You can use our SOTA captioning models for captioning which includes batch captioning for the following models: Clip Interrogator (115 Clip Vision Models), LLaVA (the strongest one), CogVLM, Qwen-VL, Blip2 & Kosmos-2 (even works with 2 GB VRAM GPU best one for weak GPUs).

You can download 1 click installers and batch captioning supporting amazing Gradio web apps having scripts from here : https://www.patreon.com/posts/sota-image-for-2-90744385

Each script has instructions and installers for Windows & RunPod. Hopefully I will add Free Kaggle notebooks as well.

If you are local linux user, just change the paths of RunPod scripts and use them on your local machine.

Each script has default very best image captioning prompts set in the GUI apps.

Once you generated captions with our SOTA captioning tools, you can use Kohya GUI’s amazing caption manipulation screen as shown below.

You can download the full resolution of below image : Kohya Image Caption.png

How To Make Kohya GUI To Use Captions Instead Of Folder Names

Once the Caption Extension is set and enabled, it will read the captions from the folder and ignore the folder names.

The repeating count number will be still valid.

You can download below image full size from : Kohya Enable Image Caption.png

SD 1.5 Based Models Configuration

Download best configuration from here : https://www.patreon.com/posts/very-best-kohya-97379147

For SD 1.5 I used 768x768 training, no bucketing (I crop training images into 768x768 aspect ratio and resize them to 768x768) and generate images in 1024x1024.

Use same resolution of training images resolution for regularization / classification images resolution.

Once you become a more experienced, you can enable bucketing and do bucketing enabled different aspect ratio and resolution including trainings

I use After Detailer (ADetailer) with the following configs for SD 1.5

Everything default except below:

Prompt : photo of ohwx man if realism is targeted

If you are targeting stylization such as 3D you need to try with full prompt or like 3D image of ohwx man

Set ADetailer inpainting resolution to 768x768 : Remember we are generating images in 1024x1024 but when inpainting face, it is best to inpaint with native resolution we trained.

The denoise strength I set is 0.5 and I also set separate number of steps for better quality like 70

Here ADetailer settings for SD 1.5 download image to see : SD 1.5 ADetailer Settings.png

I compared 1024x1024 training vs 768x768 training for SD 1.5 and 768x768 performed better even though we generate images in 1024x1024.

Also not all SD 1.5 models will support 1024x1024 resolution. To find which model is best, I compared 161 SD 1.5 models. You can watch its video below:

The Kohya SS GUI config for SD 1.5 automatically uses the best SD 1.5 realism model that supports 1024x1024 image generation and automatically downloads it into your PC.

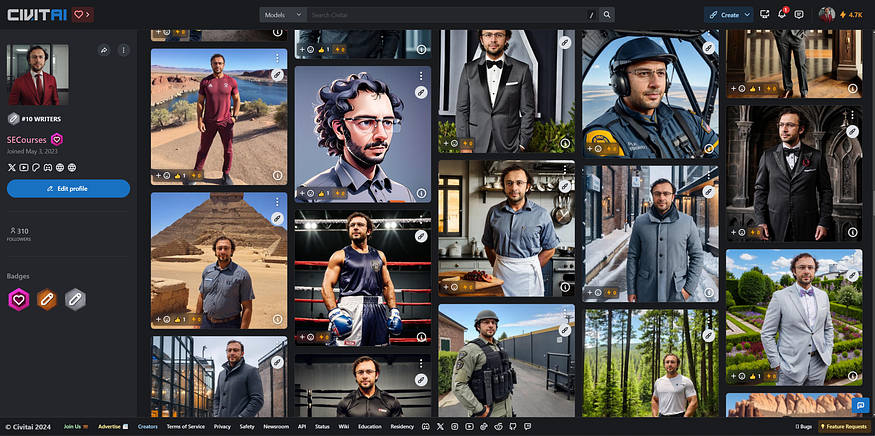

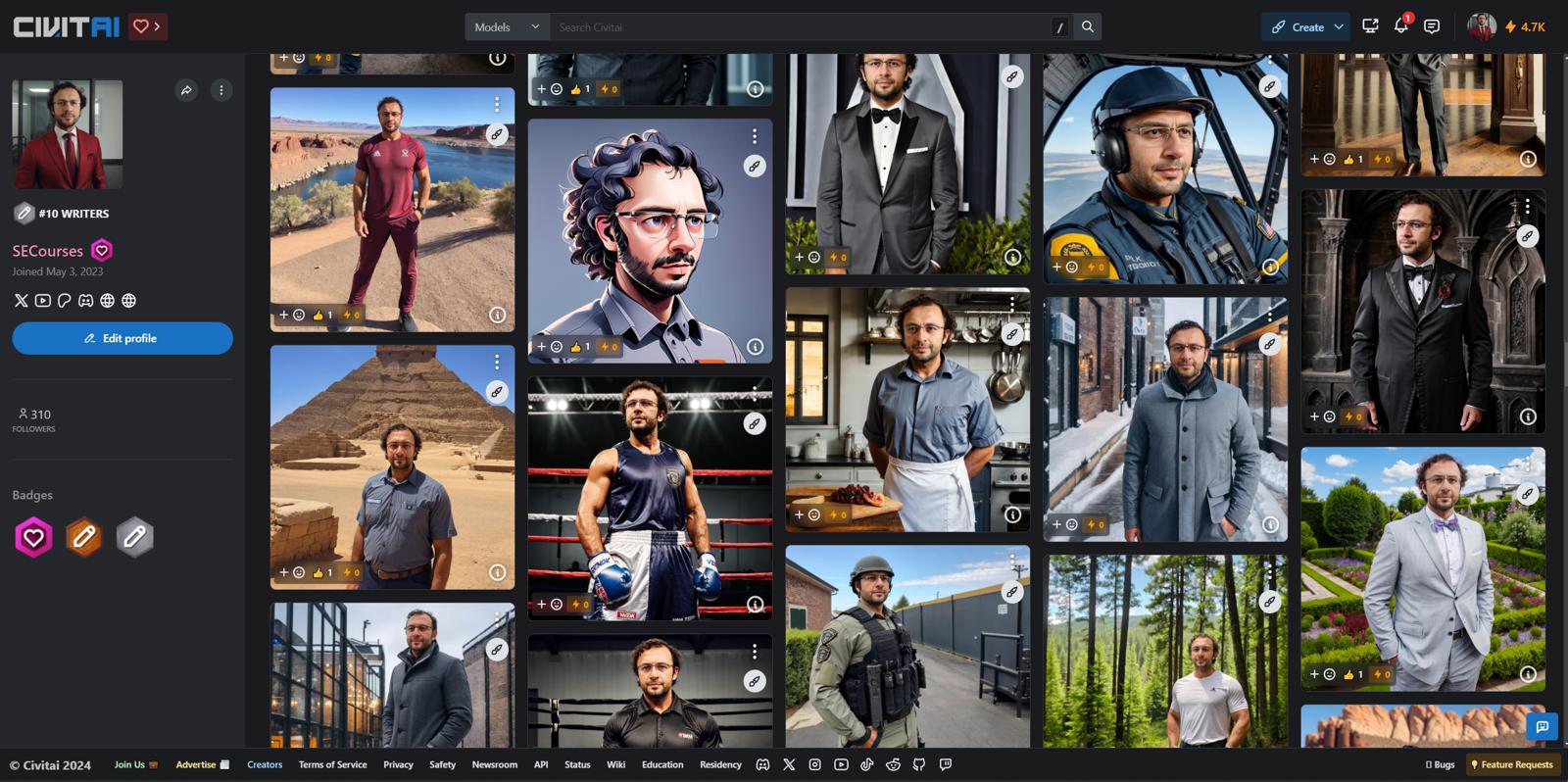

SD 1.5 Based Models Results & Prompts

20 Images : https://civitai.com/posts/1300478

20 Images : https://civitai.com/posts/1300774

20 Images : https://civitai.com/posts/1300759

What If You Want LoRA For SD 1.5 Based Models?

LoRA is optimized version of DreamBooth training. Therefore, no matter what configuration you use, it will be inferior to the DreamBooth training. Moreover, LoRA has infinite number of configuration combination. Therefore, I don’t research LoRA.

Also as mentioned in this paper, https://arxiv.org/abs/2402.09353, LoRA lacks a significant feature : They found that LoRA either increases or decreases magnitude and direction updates proportionally but seems to lack the capability of making only subtle directional changes as found in full fine-tuning. Therefore do full DreamBooth / Fine Tuning and extract LoRA is best.

So what you can do If you need LoRA?

It is so easy. We use Kohya SS GUI LoRA extraction tool. Recently I contacted Kohya for this and he even Improved its logic. Now even low VRAM GPUs can extract LoRA very well.

Open your latest version Kohya SS GUI and extract as shown in below image

The best model that our config auto trains on is Hyper Realism V3 which you can download safetensors file here : https://civitai.com/models/158959/hyper-realism

You need to set your base model whatever you trained on. If you used our default config, you need to download that safetensors file and use it as base.

You can download full resolution of below image : SD 1.5 LoRA Extract.png

SDXL Based Models Configuration

Download best configuration from here : https://www.patreon.com/posts/89213064

For SDXL I used 1024x1024 training, no bucketing (I crop training images into 1024x1024 aspect ratio and resize them to 1024x1024) and generate images in 1024x1024.

Use same resolution of training images resolution for regularization / classification images resolution.

Once you become a more experienced, you can enable bucketing and do bucketing enabled different aspect ratio and resolution including trainings

I use After Detailer (ADetailer) with the following configs for SDXL

Everything default except below:

Prompt : photo of ohwx man if realism is targeted

If you are targeting stylization such as 3D you need to try with full prompt or like 3D image of ohwx man

The denoise strength I set is 0.5 and I also set separate number of steps for better quality like 70

Here ADetailer settings for SDXL download image to see : SDXL ADetailer Settings.png

For realism I still find SDXL 1.0 is best but you can try other models and compare results

Some custom models may require longer training or higher learning rate

SDXL Based Models Results & Prompts

20 Images : https://civitai.com/posts/1238778

20 Images : https://civitai.com/posts/1238769

What If You Want LoRA For SDXL Based Models?

LoRA is optimized version of DreamBooth training. Therefore, no matter what configuration you use, it will be inferior to the DreamBooth training. Moreover, LoRA has infinite number of configuration combination. Therefore, I don’t research LoRA.

So what you can do If you need LoRA?

It is so easy. We use Kohya SS GUI LoRA extraction tool. Recently I contacted Kohya for this and he even Improved its logic. Now even low VRAM GPUs can extract LoRA very well.

Open your latest version Kohya SS GUI and extract as shown in below image

You need to set your base model whatever you trained on.

Using LoRA on custom stylized models yields good stylized images

You can download full resolution of below image : SDXL LoRA Extract.png