THIS IS JUST A SMALL PART OF THE GUIDE

You can find it here as a PDF

https://www.patreon.com/posts/get-your-free-99183367

📖 100+ pages of deep-dive strategies

🌟 18K words & prompts for endless inspiration

🚀 Expert techniques to elevate your art

🎨 Example Images and renderings

Stable Diffusion

Ultimate Workflow Guide

Navigating the currents of creativity with Stable Diffusion requires more than a spark of inspiration; it demands a mastery of workflow. This guide serves as your compass through the nuanced process of transforming a mere idea into a visual masterpiece. Within these pages, you will learn not just to operate AI but to orchestrate it, ensuring that every command flows into a symphony of pixels that captures your vision with precision. Embark on this journey to harness the full potential of Stable Diffusion and become fluent in the language of AI-driven artistry.

1. What Do I Need to Get Started?

Before you begin your journey into the realm of AI-generated imagery, it's important to ensure that you have all the necessary tools and resources. This guide is tailored around the use of Stable Diffusion via the Automatic 1111 interface. Although we will be focusing on this specific UI, the knowledge and skills you acquire here can be applied to other user interfaces like ComfyUI, Vlad Diffusion, Leonardo.AI, AI-Turbo, and many others.

1.1 Automatic 1111: Your First Date with AI

Automatic1111 WebUI stands as a paradigm shift in the creation of AI-generated images. This powerful tool offers a user-friendly interface that streamlines the image generation process, making it accessible to artists and creators without the need for in-depth coding knowledge. Its robust and efficient diffusion process ensures accurate and high-quality results, fostering a space where creativity is only limited by imagination.

While it is not as powerful as ComfyUI, it is certainly easier for beginners to use and understand.

For a detailed walkthrough on installing Automatic 1111 and understanding its basic settings, please refer to my extensive tutorial on YouTube, "Best Free AI Image Generator: Stable Diffusion-Automatic 1111 EASY install guide and basic settings".

To follow this guide, I urge you to update your Automatic 1111 to at least version 1.6.

1.2 Hardware: The Unsung Hero of AI Art

To ensure a smooth and efficient image generation process, the following hardware specifications are recommended:

RAM: At least 16 GB for optimal performance.

GPU: NVIDIA (RTX 20-series or newer) with a minimum of 4 GB VRAM, crucial for running the AI models.

Operating System: Compatible with Linux or Windows 7/8/10/11+, providing flexibility across different platforms.

Disk Space: A minimum of 10 GB available disk space to accommodate the software, models, and your creations.
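The recommendations above can be read as a simple checklist. The sketch below is only an illustration: the `MINIMUMS` table mirrors the numbers listed in this section, while the `check_specs` helper and the example machine are hypothetical and not part of Automatic 1111.

```python
# Minimums copied from the list above; the helper is a hypothetical illustration.
MINIMUMS = {"ram_gb": 16, "vram_gb": 4, "free_disk_gb": 10}


def check_specs(specs):
    """Return the names of all recommendations the given machine misses."""
    return [name for name, minimum in MINIMUMS.items()
            if specs.get(name, 0) < minimum]


# Made-up example machine: plenty of RAM and disk space, too little VRAM.
my_machine = {"ram_gb": 32, "vram_gb": 2, "free_disk_gb": 250}
print(check_specs(my_machine))  # ['vram_gb']
```

An empty list means the machine meets every recommendation in this section.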

1.3 Generating Images: The Prompt Whisperer

At the heart of AI image generation is your topic—what you aim to create. A precise topic translates into a more effective prompt, guiding the AI to realize your vision. For a deep dive into crafting such prompts, refer to my "Ultimate Beginners Guide to AI Image Generation."

Available for a preview in a free demo or in full for my patrons, this guide will elevate your prompt-crafting skills, ensuring your ideas are impeccably translated into AI-generated art.

2. Getting into the Heart of Automatic 1111

AI image generation is not just about technology; it's an extension of your creative self, a digital paintbrush that turns your ideas into visual realities. As we explore Automatic 1111, remember that while settings abound, this guide will focus on the essential ones to get you started, applicable whether you prefer using SDXL or SD 1.5.

2.1 Finding a Model - Is Stable Diffusion Not Enough?

The base model, Stable Diffusion 1.5, is a robust starting point, but the world of AI image generation is vast. To truly refine your artwork, I recommend exploring civitai.com. Here, create an account and navigate to 'Models', sorted by 'Highest rated All Time'.

Choosing a model can be daunting, but let your creative intentions guide you. Whether your goal is anime, semi-realistic, or photorealistic art, pick a model whose demo images resonate with your vision.

My personal top picks include:

1. Dreamshaper 8: For those who are into ethereal and fantastical imagery.

2. RevAnimated: A must-try for enthusiasts looking to infuse a dynamic and lively energy into their creations.

3. CyberRealistic 4.0: Ideal for lifelike images, this model excels in photorealism, capturing the fine details that make digital art indistinguishable from actual photographs.

4. DivineEleganceMix: For art that embodies grace and finesse, this model is unmatched. It's the artist's choice for creating works with a sophisticated and celestial quality.

5. Analog Madness (realistic model): Also very good for realistic images, with a certain raw-photography touch.

2.1.1 Stable Diffusion 1.5: The OG Pixel Chef

Text-to-image generation model using latent diffusion.

Released in October 2022 by Runway ML and Stability AI.

Uses a pre-trained CLIP ViT-L/14 text encoder.

The base model generates images at 512 x 512 resolution.

Models derived from it can handle 768 x 512 or 512 x 768, and some even 768 x 768.

2.1.2 Stable Diffusion 2.1: The SFW Sheriff

High-resolution image synthesis model, also utilizing latent diffusion.

Released in December 2022 by Stability AI.

Supports negative and weighted prompts for finer control over results.

Capable of generating more detailed images at 768 x 768 pixels

2.1.3 Stable Diffusion XL (SDXL): The Big Picture

The latest version as of July 2023, with advancements in AI image generation.

Better understanding of shorter prompts and more accurate replication of human anatomy, reducing "uncanny valley" effects.

Features negative prompts, allowing users to specify elements to exclude from the generated images.

Needs more VRAM.

Conclusion

No one really uses SD 2.1. While this model was promising, the community rejected it because of its SFW content restrictions. SD 1.5 is still the most used model. It needs the least VRAM and, thanks to the community, has a strong toolchain and broad model support.

While Stable Diffusion XL should be the newest and greatest model, it feels like it's not quite there yet.

Images feel incomplete. Still, the community is fast at creating custom models, and I am sure that a few months from now the tide will have shifted towards SDXL.

3. Basic Workflow: The AI Artist's Spellbook

First, you must build a base prompt as you learned in my "Ultimate Beginners Guide to AI Image Generation." Alternatively, you can go to Civitai and grab a prompt you like.

We will use this:

Positive:

cyberpunk, skyscraper,looking at viewer, eye contact, smiling, (bokeh, best quality, masterpiece, highres:1) 1girl, purple hair, ((purple eyeshadow)) , short emo_hairstyle , RAW candid cinema, 16mm, color graded portra 400 film, remarkable color, ultra realistic, textured skin, remarkable detailed pupils, realistic dull skin noise, visible skin detail, skin fuzz, dry skin, shot with cinematic camera

Negative:

nsfw canvas frame, (high contrast:1.2), (over saturated:1.2), (glossy:1.1), cartoon, 3d, ((disfigured)), ((bad art)), ((b&w)), blurry, ((bad anatomy)), (((bad proportions))), ((extra limbs)), cloned face, (((disfigured))), extra limbs, (bad anatomy), gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), Photoshop, video game, ugly, tiling, poorly drawn hands, 3d render

Model: Analog Madness
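The parentheses in these prompts are emphasis markers. As a rough sketch of how they are commonly read in Automatic 1111: each pair of round brackets multiplies a fragment's weight by 1.1, each pair of square brackets divides it by 1.1, and `(text:1.2)` sets the weight explicitly. The `emphasis_weight` helper below is hypothetical and handles one fragment at a time. It is not the WebUI's actual prompt parser.

```python
import re


def emphasis_weight(fragment):
    """Return (text, weight) for a single emphasised prompt fragment."""
    fragment = fragment.strip()
    # Explicit form, for example "(glossy:1.1)".
    explicit = re.fullmatch(r"\(([^()\[\]:]+):\s*([0-9]*\.?[0-9]+)\)", fragment)
    if explicit:
        return explicit.group(1).strip(), float(explicit.group(2))
    weight = 1.0
    # Nested form: every (...) layer multiplies by 1.1, every [...] divides by 1.1.
    while len(fragment) >= 2 and fragment[0] + fragment[-1] in ("()", "[]"):
        weight = weight * 1.1 if fragment[0] == "(" else weight / 1.1
        fragment = fragment[1:-1].strip()
    return fragment, round(weight, 3)


print(emphasis_weight("((purple eyeshadow))"))  # ('purple eyeshadow', 1.21)
print(emphasis_weight("(((long neck)))"))       # ('long neck', 1.331)
print(emphasis_weight("(high contrast:1.2)"))   # ('high contrast', 1.2)
```

Read this way, `((purple eyeshadow))` in the positive prompt is pushed about 21% harder than an unmarked term, and the triple-bracketed entries in the negative prompt about 33% harder.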

3.1 Basic Settings - What Does CFG Scale Do Again?

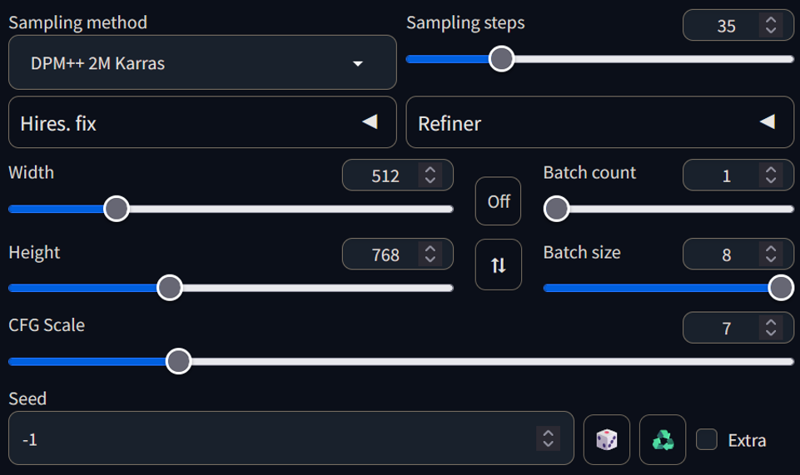

When you open Automatic 1111, this is what you see. It's overwhelming at first, but as soon as you know what you are doing, it gets easy. Let's go through each of these settings.

Note: Most of the Images I show here are enhanced using the workflow I describe in Chapter 5.1 Image 2 Image – Stop using High-res fix!
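For readers who prefer to see the settings as data, here is a hedged sketch that collects the basic settings covered in this chapter into one request body. It assumes the WebUI was started with the `--api` option and that the body would be sent as a POST to `/sdapi/v1/txt2img`. The field names reflect my understanding of that API around version 1.6. The values and the shortened prompts are example choices, not recommendations, and nothing is actually sent here.

```python
import json


def build_txt2img_payload(prompt, negative_prompt, seed=-1):
    """Collect the basic settings from this chapter into one request body."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "sampler_name": "DPM++ 2M Karras",  # 3.1.1 Sampling Method
        "steps": 30,                        # 3.1.2 Sampling Steps
        "width": 512,                       # 3.1.4 Width and Height
        "height": 768,
        "batch_size": 1,                    # 3.1.5 Batch size
        "n_iter": 1,                        # 3.1.5 Batch count
        "cfg_scale": 7,                     # 3.1.6 CFG Scale
        "seed": seed,                       # 3.1.7 Seed (-1 asks for a random one)
    }


payload = build_txt2img_payload(
    "cyberpunk, skyscraper, 1girl, purple hair",
    "cartoon, 3d, blurry",
)
print(json.dumps(payload, indent=2))
```

Each key maps to one of the controls discussed in the following subsections, which makes it a handy cheat sheet even if you never touch the API.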

3.1.1 Sampling Method:

The sampling method in Stable Diffusion, an AI model used for generating images, works like a guide or a decision-maker in the process of creating an image from a textual description. In simple terms, it's like a director who decides how the scene (the image) should be developed based on a script (the text prompt).

Imagine you give an artist a brief description of what you want in a painting. The artist then makes a series of choices about how to interpret your description – what colors to use, where to place objects, what style to paint in, etc. The sampling method in Stable Diffusion does something similar, but in a digital and algorithmic way.

When Stable Diffusion generates an image, it starts with a random pattern or noise. The sampling method then steps in to guide the transformation of this noise into a coherent image that matches your description. It does this by making a series of decisions at each step, based on the information it has learned from seeing many other images and descriptions.

This method helps ensure that the final image is not only a close match to your description but also visually appealing and coherent. It's like having a smart assistant who knows a lot about art and helps the artist (the AI model) make the best choices to bring your idea to life.

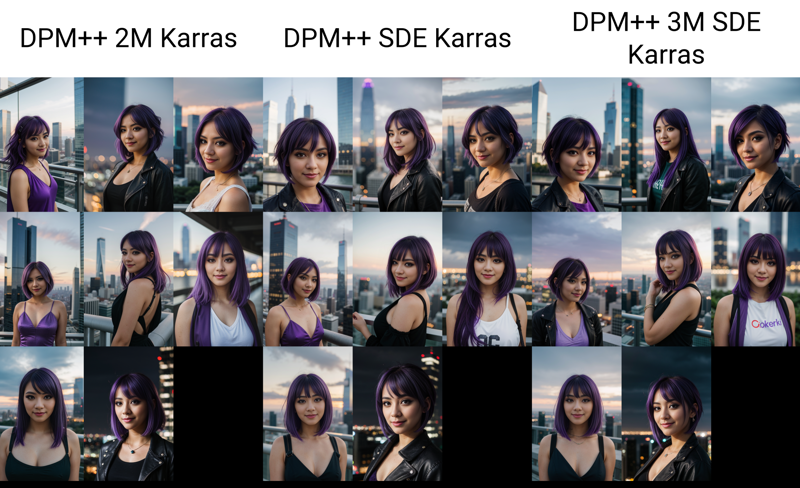

So which sampler should you choose? There is no definitive answer, but the two most used are Euler A and DPM++ 2M Karras. DPM++ 2M Karras is more consistent, but when you go for surreal images or want more variation in your pictures, Euler A is worth a try.

3.1.2 Sampling Steps

Sampling steps is another term that can be confusing if you are new to this.

Image generation works as follows:

Input Prompt: The process begins with a textual prompt that describes the desired image.

Start with Noise: The model initializes with a canvas of random noise, a starting point with no structure.

Iterative Refinement: In each step, the model refines this noise. It makes choices about colors, shapes, and composition based on the input prompt and its trained knowledge of images.

Decision Making: The sampling method helps the model decide how to evolve the image at each step, aiming for a balance between matching the prompt and creating a visually coherent picture.

Progression of Steps: More steps (like 50, 70, or 90) mean more refinement opportunities, but beyond a certain point, improvements might not be noticeable.

Final Image: The process ends when the set number of steps is reached, resulting in the final image that reflects the input prompt.
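The steps above can be sketched as a tiny loop. This is a toy under loud assumptions: the "image" is just a short list of numbers, the noise schedule is linear, and the neural network is replaced by a stand-in that already knows the clean result. A real sampler calls the model at every step, conditioned on the prompt. The update rule is a plain Euler step, and `toy_generate` is a hypothetical name.

```python
import random


def toy_generate(target, steps, seed):
    """Turn random noise into `target` with a plain Euler sampler (toy model)."""
    rng = random.Random(seed)
    sigma_max = 10.0
    # Noise levels from sigma_max down to 0, one value per step boundary.
    sigmas = [sigma_max * (1 - i / steps) for i in range(steps + 1)]
    # Start with noise: a canvas with no structure.
    x = [rng.gauss(0.0, sigma_max) for _ in target]
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        # Stand-in for the neural network: a perfect guess of the clean image.
        denoised = target
        # Euler step: move along the direction that removes noise.
        x = [xi + (xi - di) / sigma * (sigma_next - sigma)
             for xi, di in zip(x, denoised)]
    return x


result = toy_generate([0.2, 0.8, 0.5], steps=20, seed=42)
print([round(value, 6) for value in result])
```

Because the stand-in denoiser is perfect, the loop lands on the target no matter how few steps it takes. With a real model each guess is imperfect, which is why the number of steps matters.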

10 Steps:

Fewer Iterations: With only 10 steps, the model has fewer iterations to refine the image. This means it makes larger, more significant changes at each step.

Rougher Details: The resulting image might be less detailed and more abstract, as there's less opportunity for fine-tuning and adding small details.

Faster Generation: The process is quicker because there are fewer steps for the model to go through.

Potential for Higher Creativity: Sometimes, fewer steps can lead to more creative or stylized interpretations, as the model makes broader strokes.

30 Steps:

More Iterations: With 30 steps, the model goes through more iterations, allowing for gradual and finer adjustments at each step.

Finer Details: This often results in images with more detailed and refined features. The model has more opportunities to correct and enhance small elements.

Slower Generation: The process takes longer due to the increased number of steps.

Higher Fidelity: The final image is typically closer to a realistic or high-fidelity representation, assuming that's what the prompt is guiding towards.

Why don’t we see huge improvements beyond the 50 Step Mark?

Diminishing Returns Beyond a Certain Point: There's often a point of diminishing returns in the number of steps where additional iterations don't lead to noticeable improvements. After a certain number of steps, the model may have already refined the image to a point where further adjustments are either too subtle to notice or don't contribute to a better representation of the prompt.

Model's Limitations: Each AI model has its limitations in terms of how well it can interpret and render an image. Beyond a certain complexity or level of detail, additional steps might not yield significant improvements because the model has reached its capability limit.

Prompt Specificity and Complexity: The nature of the prompt can also play a role. If the prompt is straightforward or if the model finds a 'good enough' solution early in the process, additional steps might not lead to significant changes. Conversely, very complex or abstract prompts might benefit from more steps.

Algorithm Efficiency: Modern samplers converge quickly. The image may stop changing noticeably well before the set number of steps is reached, especially if the prompt is well within the model's training and capabilities.

Subjective Perception: Sometimes, the differences between images generated with different numbers of steps can be subtle and may not be easily noticeable, especially if the changes are in fine details or texture.

Parameter Settings: Other parameters in the image generation process, like the CFG scale or the choice of sampler, can influence the output more significantly than the number of steps alone.

In practical terms, finding the optimal number of steps often involves experimentation. For many prompts and purposes, a moderate number of steps (like 30-50) might be sufficient to achieve a good balance between image quality and generation time. Going much beyond that might not always result in perceptibly better images.
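The diminishing returns can be illustrated with an analogy from numerical integration. The sketch below is not Stable Diffusion. It applies Euler's method, the same kind of step-by-step approximation a sampler uses, to the simple equation dx/dt = -x and measures how far the result is from the exact answer for different step counts.

```python
import math


def euler_error(steps):
    """Error of Euler's method on dx/dt = -x over [0, 1] with `steps` steps."""
    x = 1.0
    dt = 1.0 / steps
    for _ in range(steps):
        x += dt * (-x)
    return abs(x - math.exp(-1.0))


for n in (10, 30, 50, 90):
    print(n, round(euler_error(n), 5))
```

The error keeps shrinking as steps are added, but going from 10 to 30 steps removes far more error than going from 50 to 90, which is the same pattern you see in generated images.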

3.1.3 Refiner

The Refiner is a feature in Automatic 1111 designed specifically for the SDXL (Stable Diffusion XL) model. SDXL was released as two models that share the work of a single generation. The Base Model handles the early, noisy part of the process and lays out composition and content. It works on a compressed 128 x 128 latent, which corresponds to a final image of 1024 x 1024 pixels. The Refiner, acting as a secondary model, then takes over for the last portion of the sampling steps and sharpens fine detail before the image generation is deemed complete. It does not change the resolution. However, this separate refinement step has become less prevalent, because many community-trained XL models are tuned to produce finished images on their own and are commonly used without a Refiner. Skipping it makes image generation more efficient and streamlined, since only one model has to be loaded.
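To make the numbers concrete: Stable Diffusion samples on a latent grid that is 8 times smaller per side than the decoded picture, so a 128 x 128 latent and a 1024 x 1024 image describe the same generation. The sketch below also shows how a switch point could divide the sampling steps between Base Model and Refiner. The `switch_at` parameter is modelled on the "Switch at" slider, and the rounding used here is my assumption, not the WebUI's exact behavior.

```python
def split_steps(total_steps, switch_at):
    """Split the sampling steps between base model and refiner."""
    base_steps = int(total_steps * switch_at)
    return base_steps, total_steps - base_steps


def latent_size(width, height, factor=8):
    """Latent grid the sampler works on for a given pixel size."""
    return width // factor, height // factor


print(split_steps(30, 0.8))     # (24, 6)
print(latent_size(1024, 1024))  # (128, 128)
```

With 30 steps and a switch point of 0.8, the base model would run 24 steps and the refiner the final 6.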

3.1.4 Width and Height

I've covered the ins and outs of width and height in Chapter 2.1, where we delved into the resolution specifics for SD 1.5 and SDXL. To keep things fresh and avoid repetition, I won't go into too much detail here again. Just remember, these dimensions are all about the resolution of your final image – a crucial piece of the puzzle for bringing your creative visions to life!
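As a rough helper for picking dimensions, the sketch below keeps the pixel count close to each model's native resolution while changing the aspect ratio. The native sizes come from this guide (512 for SD 1.5, 768 for SD 2.1, 1024 for SDXL). Snapping to multiples of 8 is an assumption based on the latent grid being 8 times smaller than the image, and `snap_size` is a hypothetical name.

```python
# Native square resolutions as stated in this guide.
NATIVE_SIDE = {"SD 1.5": 512, "SD 2.1": 768, "SDXL": 1024}


def snap_size(model, aspect_ratio, multiple=8):
    """Pick a width and height near the model's native pixel count."""
    side = NATIVE_SIDE[model]
    area = side * side
    width = (area * aspect_ratio) ** 0.5
    height = width / aspect_ratio

    def snap(value):
        # Round to the nearest multiple, never below one multiple.
        return max(multiple, int(round(value / multiple)) * multiple)

    return snap(width), snap(height)


print(snap_size("SD 1.5", 1.0))   # (512, 512)
print(snap_size("SDXL", 16 / 9))  # (1368, 768)
```

Treat the output as a starting point only. Many custom models list their own preferred resolutions on their model pages.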

3.1.5 Batch size and Batch count

Batch size in Automatic 1111 determines the number of images generated in parallel per batch. A larger batch size produces more images at once, offering variety but requiring more VRAM and computing power.

Batch count controls how many times the model generates these batches, one after another. Increasing the batch count results in a greater total number of images (batch size times batch count), which is useful for extensive exploration of a prompt but also increases overall generation time.
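The relationship between the two settings is simple arithmetic: the total number of images is batch size times batch count. The sketch below lists every image in a run. The seed numbering (each image using the previous seed plus one) is my assumption about how the WebUI hands out seeds, and `plan_batches` is a hypothetical helper.

```python
def plan_batches(batch_size, batch_count, first_seed):
    """List (batch number, seed) for every image in a run."""
    plan = []
    for batch in range(batch_count):
        for slot in range(batch_size):
            index = batch * batch_size + slot
            # Assumption: each image uses the previous seed + 1.
            plan.append((batch + 1, first_seed + index))
    return plan


plan = plan_batches(batch_size=4, batch_count=2, first_seed=1000)
print(len(plan))  # 8
print(plan[4])    # (2, 1004)
```

A batch size of 4 with a batch count of 2 yields 8 images, with 4 of them held in VRAM at the same time.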

3.1.6 CFG Scale

CFG Scale, or "Classifier-Free Guidance Scale," is a setting in image generation models like Stable Diffusion. It influences how closely the generated image adheres to the textual prompt. Here's a concise description:

Function:

CFG Scale modulates the influence of the textual prompt on the image generation process. A higher CFG Scale makes the AI pay more attention to the details of the prompt, aiming to generate images that more closely match the input description.

Impact:

Low CFG Scale: Results in more creative, abstract, or loosely interpreted images. The model takes more liberties with the prompt.

High CFG Scale: Produces images that more strictly adhere to the prompt, with greater detail and accuracy as per the input text.

Essentially, CFG Scale is a knob that adjusts the balance between creative freedom and fidelity to the textual prompt in the image generation process.
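Under the hood, classifier-free guidance combines two noise predictions at every step: one made without the prompt and one made with it. The result is the unconditioned prediction plus the CFG scale times the difference between the two. The sketch below applies that formula to made-up numbers. The lists stand in for the model's real outputs, and `apply_cfg` is a hypothetical name.

```python
def apply_cfg(uncond, cond, cfg_scale):
    """Blend two noise predictions the classifier-free-guidance way."""
    return [u + cfg_scale * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.10, 0.40, -0.20]  # made-up prediction without the prompt
cond = [0.30, 0.10, -0.10]    # made-up prediction with the prompt

print(apply_cfg(uncond, cond, 1.0))  # follows the prompt prediction as-is
print(apply_cfg(uncond, cond, 7.0))  # exaggerates what the prompt changed
```

A scale of 1 simply uses the prompted prediction, while higher values push the result further in the direction the prompt suggests, which is why very high settings can look harsh or overcooked.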

Take a look at the contrast between a low and high CFG scale. To highlight the differences more vividly, I've incorporated the 'Blessed Tech - World Morph Lora' into our prompt. This addition enhances the visual distinction we're exploring. The LoRA is loaded at a weight of 0.95, and the prompt is enriched with the keywords 'blessedtech', 'blessed', and 'aura' to demonstrate the impact of CFG scale adjustments more effectively.

So, our positive Prompt looks like this:

long shot scenic professional photograph of cyberpunk, skyscraper,looking at viewer, eye contact, smiling, (bokeh, best quality, masterpiece, highres:1) 1girl, purple hair, ((purple eyeshadow)) , short emo_hairstyle , <lora:BlessedTech:0.95> blessedtech, blessed, aura, perfect viewpoint, highly detailed, wide-angle lens, hyper realistic, with dramatic sky, polarizing filter, natural lighting, vivid colors, everything in sharp focus, HDR, UHD, 64K

What about High-res fix? This is a topic I will cover in chapter 5.

3.1.7 Seed

The "Seed" in image generation models like Stable Diffusion is a setting that plays a crucial role in determining the randomness of the generated images. Here's a brief explanation:

Function:

A seed is a numerical value that initializes the random number generator used in the image creation process. It's like the starting point for all the randomness that follows.

Impact:

Consistency: Using the same seed with the same model, prompt, and settings (sampler, steps, CFG scale, resolution) will produce the same image every time. It ensures reproducibility in the generation process.

Variety: Changing the seed value, even slightly, results in a different set of random calculations, leading to a noticeably different image, even with the same prompt.

In essence, the seed is like a 'recipe number' for the randomness in the image generation process. It allows for control over the reproducibility of results and exploration of variations in generated images.
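The effect of a seed is easy to show with Python's own random number generator. This is only an analogy: Stable Diffusion draws its starting noise from a different generator, and `initial_noise` is a hypothetical helper. The principle is the same, though: the same seed gives the same noise, and a different seed gives different noise.

```python
import random


def initial_noise(seed, size=4):
    """Draw a short list of Gaussian noise values from a seeded generator."""
    rng = random.Random(seed)
    return [round(rng.gauss(0.0, 1.0), 4) for _ in range(size)]


same_a = initial_noise(1234)
same_b = initial_noise(1234)
other = initial_noise(1235)

print(same_a == same_b)  # True
print(same_a == other)   # False
```

Changing the seed by just one is enough to get completely different starting noise, and therefore a different image.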

3.2 Loras, Embeddings, Lycoris: Can I Eat That?

Terms like 'Loras', 'Embeddings', and 'Lycoris' might sound...

As said in the beginning, this is just a small part of the guide.

You can find it here as a PDF

https://www.patreon.com/posts/get-your-free-99183367


These are the chapters you are missing.