Low VRAM Adventures

[🔗link to the series announcement]

ComfyUI Workflow attached!

You will need to download the SVD models from Hugging Face and possibly the RIFE VFI models.

SVD

Stable Video Diffusion (SVD) is a video generation model provided by Stability AI.

The research paper also mentions text-to-video, but I have not seen it in the wild.

There are two versions:

v1.0 released in November 2023.

v1.1 released in February 2024.

SVD is an image-to-video model: it takes a single image as input and does not expose precise generative controls. It generates a fixed number of frames. There are two SVD 1.0 models, generating 14 frames (regular) and 25 frames (XT) respectively, while SVD 1.1 only exists as an XT model. 25 frames equal about 4 seconds of video.
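As a quick check of the "about 4 seconds" figure: clip length is simply the frame count divided by the playback frame rate. The 6 fps used below is an assumption (it matches the default `fps` value on ComfyUI's SVD conditioning node); your workflow may play back at a different rate.

```python
# Clip length = number of generated frames / playback frame rate.
# ASSUMPTION: 6 fps playback (the default fps on ComfyUI's SVD conditioning node).
FPS = 6

SVD_MODELS = {
    "SVD 1.0 (regular)": 14,
    "SVD 1.0 XT": 25,
    "SVD 1.1 XT": 25,
}


def clip_seconds(frames: int, fps: float = FPS) -> float:
    """Return the playback duration of a clip in seconds."""
    return frames / fps


if __name__ == "__main__":
    for name, frames in SVD_MODELS.items():
        print(f"{name}: {frames} frames -> {clip_seconds(frames):.2f} s at {FPS} fps")
```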

SVD offers limited controls to the user:

width and height: the output video size

augmentation_level: how much it will modify the source image

motion_bucket_id: the name can be misleading. You cannot select a precise animation (like panning or zooming), but the higher the value, the more it will animate.
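To make this short list of knobs concrete, here is a hypothetical settings object grouping them. The field names mirror the inputs of ComfyUI's `SVD_img2vid_Conditioning` node; the default values are assumptions based on that node's defaults (1024×576, motion_bucket_id 127, fps 6, augmentation_level 0.0), except `video_frames`, which is set to 25 to match the XT models. Check them against your own ComfyUI version.

```python
from dataclasses import dataclass, asdict


# HYPOTHETICAL settings container: field names mirror ComfyUI's
# SVD_img2vid_Conditioning inputs, default values are assumptions.
@dataclass
class SVDSettings:
    width: int = 1024                # output video width in pixels
    height: int = 576                # output video height in pixels
    video_frames: int = 25           # fixed by the model: 14 (regular) or 25 (XT)
    motion_bucket_id: int = 127      # higher -> more motion, not a specific camera move
    fps: int = 6                     # frame-rate conditioning value
    augmentation_level: float = 0.0  # how strongly the source image is perturbed

    def validate(self) -> None:
        # ASSUMPTION: sizes should be multiples of 8 (the latent is 8x smaller).
        if self.width % 8 or self.height % 8:
            raise ValueError("width and height should be multiples of 8")
        if self.video_frames not in (14, 25):
            raise ValueError("SVD models generate either 14 or 25 frames")
        if self.augmentation_level < 0:
            raise ValueError("augmentation_level must be non-negative")


if __name__ == "__main__":
    settings = SVDSettings(width=512, height=288)
    settings.validate()
    print(asdict(settings))
```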

Stability AI claims that generations take less than 2 minutes.

How does it perform on Low VRAM systems?

Let's find out!

Test setup

Unless specified, data comes from generations on the system described in the announcement article.

Intel Core i5-8400

16 GB RAM @ 2133 MHz

Nvidia GTX 1060 6 GB

Windows 10 64-bit

ComfyUI Portable + run_nvidia_gpu

CUDA 12

Test methodology

I only ran tests on the XT (25-frame) models:

constant motion_bucket_id

constant augmentation_level

constant frames and fps

constant sampler values, except for the seed (I forgot to fix it)

constant video CFG

variable resolution (set by the long side):

480px

512px

600px

768px

1024px
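The tested sizes are defined by their long side only. As a sketch of how the two shapes map to actual dimensions, the function below scales the native 1024×576 (16:9) frame down to a given long side and rounds the short side to a multiple of 8. The rounding rule is an assumption; the exact short side in the attached workflow may differ by a few pixels.

```python
# Map a long-side resolution to (width, height) for the two test shapes.
NATIVE_W, NATIVE_H = 1024, 576  # native SVD XT resolution (16:9)


def round_to_multiple(value: float, multiple: int = 8) -> int:
    """Round to the nearest multiple (ASSUMPTION: sizes snap to 8 px)."""
    return int(round(value / multiple)) * multiple


def wide_size(long_side: int) -> tuple[int, int]:
    """Wide frame proportional to the native 1024x576."""
    short_side = long_side * NATIVE_H / NATIVE_W
    return long_side, round_to_multiple(short_side)


def square_size(long_side: int) -> tuple[int, int]:
    """Square frame: both sides equal the long side."""
    return long_side, long_side


if __name__ == "__main__":
    for side in (480, 512, 600, 768, 1024):
        w, h = wide_size(side)
        print(f"{side}px: wide {w}x{h} ({w * h / 1e6:.2f} MP), "
              f"square {side}x{side} ({side * side / 1e6:.2f} MP)")
```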

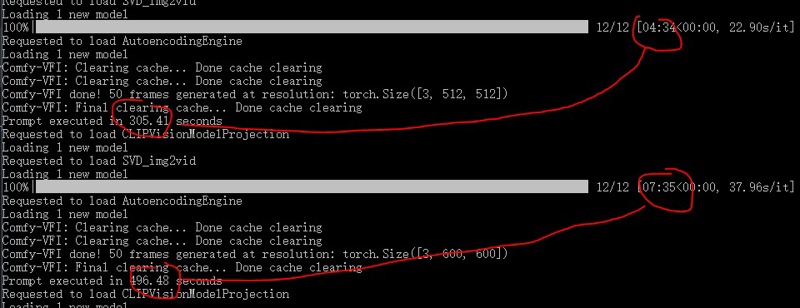

I record the render times self-reported by ComfyUI.

For this article, I did not run hundreds of generations. I have already used SVD for months, and I know that its render times are very consistent for a given resolution. It does occasionally produce a first generation much slower than the rest of a batch; I exclude those data points.
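A minimal sketch of that bookkeeping: drop a first run that is much slower than the rest of its batch, then summarize with the median. The 1.5× threshold and the sample batch are hypothetical, made-up numbers to illustrate the rule, not measurements.

```python
from statistics import median


def summarize(times_s: list[float], slow_factor: float = 1.5) -> float:
    """Median render time, excluding a slow first (warm-up) run.

    ASSUMPTION: the first run counts as an outlier when it is more than
    `slow_factor` times the median of the remaining runs.
    """
    if len(times_s) > 1 and times_s[0] > slow_factor * median(times_s[1:]):
        times_s = times_s[1:]
    return median(times_s)


if __name__ == "__main__":
    # Hypothetical batch: one slow first run, then consistent times.
    batch = [290.0, 151.0, 149.0, 150.0, 152.0]
    print(f"median: {summarize(batch)} s")
```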

I ran the smaller resolutions (480 to 768) up to 20 times, and the bigger ones once or twice.

It would take days just to run the bigger generations 10 times.
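To put "days" into numbers, here is the arithmetic using the 1024px times from the results tables below (25, 105, 29 and 120 minutes). Running each of the four 1024px configurations 10 times adds up to about 46.5 hours, or roughly two days of non-stop rendering.

```python
# 1024px render times in minutes, taken from the results tables.
RUNS_1024_MIN = {
    ("SVD 1.0 XT", "wide"): 25,
    ("SVD 1.0 XT", "square"): 105,
    ("SVD 1.1", "wide"): 29,
    ("SVD 1.1", "square"): 120,
}
REPEATS = 10

# Total time to repeat every 1024px configuration REPEATS times.
total_min = REPEATS * sum(RUNS_1024_MIN.values())
print(f"{total_min} min = {total_min / 60:.1f} h = {total_min / 1440:.1f} days")
```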

Welcome to the Low VRAM world!

Results

Generation Times (on GTX 1060 6 GB)

SVD 1.0 XT

| Long Side (pixels) | Time, wide* | Time, square |
|---|---|---|
| 480 | 2m 10s | 4m 00s |
| 512 | 2m 30s | 4m 50s |
| 600 | 3m 30s | 7m 30s |
| 768 | 6m 30s | 25m |
| 1024 | 25m | 105m |

* wide: proportional to the native 1024×576 resolution.

SVD 1.1

| Long Side (pixels) | Time, wide* | Time, square |
|---|---|---|
| 480 | 2m 20s | 4m 00s |
| 512 | 2m 30s | 4m 30s |
| 600 | 3m 30s | 7m 30s |
| 768 | 7m | 25m |
| 1024 | 29m | 120m |

* wide: proportional to the native 1024×576 resolution.

Interpretation

Essentially, render times for SVD 1.0 XT and SVD 1.1 are identical.

Only the 1024px (long side) generations show a significant difference. This may be due to the small dataset: I'm not running 1000 to 1200 minutes of renders just to test each one 5 times.
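Putting the two tables side by side as a percentage change from SVD 1.0 XT to SVD 1.1 makes this easier to see. The times are transcribed into seconds (e.g. "6m 30s" = 390 s). Sizes up to 768px land between -7% and +8%, while both 1024px shapes are 14 to 16% slower on SVD 1.1.

```python
# Render times in seconds, transcribed from the two results tables.
SIDES = (480, 512, 600, 768, 1024)
TIMES = {
    ("1.0", "wide"): (130, 150, 210, 390, 1500),
    ("1.1", "wide"): (140, 150, 210, 420, 1740),
    ("1.0", "square"): (240, 290, 450, 1500, 6300),
    ("1.1", "square"): (240, 270, 450, 1500, 7200),
}


def pct_diff(shape: str) -> dict[int, float]:
    """Percent change of SVD 1.1 vs SVD 1.0 render time, per long side."""
    old, new = TIMES[("1.0", shape)], TIMES[("1.1", shape)]
    return {side: 100 * (n - o) / o for side, o, n in zip(SIDES, old, new)}


if __name__ == "__main__":
    for shape in ("wide", "square"):
        diffs = ", ".join(f"{s}px {d:+.1f}%" for s, d in pct_diff(shape).items())
        print(f"{shape}: {diffs}")
```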

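We can also tell that render time grows much faster than the pixel count: doubling the long side from 512 to 1024px quadruples the number of pixels, but multiplies render time by roughly 10 to 12 (wide) and 22 to 27 (square).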
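These ratios can be recomputed from the tables with a few lines. The sketch divides each 1024px time by the matching 512px time and sets it against the 4× increase in pixel count.

```python
# 512px and 1024px render times in seconds, transcribed from the results tables.
TIMES_512_1024 = {
    ("SVD 1.0 XT", "wide"): (150, 1500),
    ("SVD 1.0 XT", "square"): (290, 6300),
    ("SVD 1.1", "wide"): (150, 1740),
    ("SVD 1.1", "square"): (270, 7200),
}
# Doubling the long side also doubles the short side (e.g. 512x288 -> 1024x576),
# so the pixel count grows 4x for both shapes.
PIXEL_RATIO = (1024 / 512) ** 2


def growth(model: str, shape: str) -> float:
    """How many times longer a 1024px render takes than a 512px one."""
    t512, t1024 = TIMES_512_1024[(model, shape)]
    return t1024 / t512


if __name__ == "__main__":
    for model, shape in TIMES_512_1024:
        print(f"{model} {shape}: time x{growth(model, shape):.1f} "
              f"vs pixels x{PIXEL_RATIO:.0f}")
```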

Render quality

Unsurprisingly, 480 and 512 renders show no apparent difference in quality and very little difference in render time.

For "fast" square generations, I would recommend 512.

600, 768 and 1024 all show significant quality improvements over lower resolutions.

Due to the random nature of the animations and the high risk of a completely blurry output, I would not want to use any setting whose generations run longer than 10 minutes.
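Applying that 10-minute cut-off to the measured times shows which settings remain usable on this GPU: for both versions, wide frames up to 768px and square frames up to 600px. A short sketch, with times transcribed from the results tables:

```python
# Render times in seconds, transcribed from the results tables.
SIDES = (480, 512, 600, 768, 1024)
TIMES = {
    ("1.0", "wide"): (130, 150, 210, 390, 1500),
    ("1.1", "wide"): (140, 150, 210, 420, 1740),
    ("1.0", "square"): (240, 290, 450, 1500, 6300),
    ("1.1", "square"): (240, 270, 450, 1500, 7200),
}
BUDGET_S = 10 * 60  # 10-minute limit per generation


def within_budget(budget_s: int = BUDGET_S) -> dict[tuple[str, str], list[int]]:
    """Long sides whose render time fits the budget, per model version and shape."""
    return {
        key: [side for side, t in zip(SIDES, times) if t <= budget_s]
        for key, times in TIMES.items()
    }


if __name__ == "__main__":
    for (version, shape), sides in within_budget().items():
        print(f"SVD {version} {shape}: {sides}")
```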

Samples

I'll be honest: I do not think my source images are well suited to comparing SVD 1.0 and 1.1 outputs. In retrospect, I should have gone with common scenes, such as a car on a road, a train, or a cat.

See for yourself:

SVD 1.0 XT Square generations: https://civitai.com/posts/1560464

SVD 1.0 XT Wide generations: https://civitai.com/posts/1560500

SVD 1.1 Square generations: https://civitai.com/posts/1560414

SVD 1.1 Wide generations: https://civitai.com/posts/1560440