首先,Yuno779是我很尊重的模型训练师,之前指责的问题我都对文章进行了修正.但这次对我进行指责,特别是中间还有很多诽谤和根本没有了解清楚就发出的指责,我感到很失望.还有不知道为什么2023年7-8月关于SD模型发展的个人观点及GhostReview框架这两篇文章的事,本来都是自己瞎写点观点,其中不止一次提到自己的业余,然后别人认为正确了,现在都是我的错了,把隔了7个月的文章被拉出来挂....我都奔35的人了,生活工作确实没啥时间,我集中一点一点来回应.

Translated by ChatGPT.

First of all, Yuno779 is a model trainer whom I greatly respect, and I have corrected my articles in response to the issues raised before. But this round of accusations, especially the many defamatory claims and the charges made without first understanding the facts, has left me very disappointed. I also don't understand what is going on with my two articles from July and August 2023, the one giving my personal views on the development of SD models and the one on the GhostReview framework. Both were just me jotting down some opinions, and I said more than once in them that I am an amateur. Other people then took them as correct, and now it is all my fault, with articles from seven months ago being dragged out and pilloried. I am almost 35 and really have little time outside of life and work, so I will respond to everything here, point by point.

1. “我标榜自己的模型质量极好,而又拉踩其他任何模型,作者.”

"I tout my own model as being of extremely high quality while disparaging every other model and author."

首先,关于SD模型的发展的那篇文章是写于AI模型薅羊毛时期,一开头就说明了.当时的语气过激,现在看来是极为不恰当的,在此先道歉.除了在那篇文章因为当时社区充斥着薅羊毛的模型,所以带着情绪化的AOE了风格极其固定,泛化性极其差的模型是垃圾,还有之后GhostReview跑了个分数之外(如果你将这两者视为踩的话),还有某美院那件事之外,我没有踩过其他任何模型,作者!就算发了模型评测框架代码之后,有人问我A某模型是不是不能用,我的回答都是如果你用LoRA比较多就慎用.这里公开悬赏证据,可以在公开平台或群聊,找到我踩别人模型的图片证据,且确切为我本人(为了保证可溯和不可作假),我承诺踩一个模型或作者社交媒体公开道歉并给作者本人转账100,欢迎踊跃提供证据.

First, the article about the development of SD models was written during the period when people in China were farming platform rewards with AI models: many uploaded "trash models" to earn money from platforms such as LiblibAI and Tusi. The article said so at the very beginning. My tone back then was overheated and, looking back, highly inappropriate, and I apologize for that first. In that article, because the community was flooded with reward-farming models, I made an emotional, sweeping attack that called models with an extremely fixed style and extremely poor generalization "garbage". Apart from that, the scores GhostReview later produced (if you count those two as disparagement), and the incident involving a certain art academy, I have never disparaged any other model or author! Even after I released the evaluation framework code, when someone asked me whether a certain model was unusable, my answer was always: use it with caution if you rely heavily on LoRA. I am publicly offering a bounty for evidence: if you can find screenshot evidence, from a public platform or a group chat, of me disparaging someone else's model, and it is verifiably me (so that it is traceable and cannot be faked), then for each model or author I disparaged I will apologize publicly on social media and transfer 100 RMB to that author. Evidence is welcome.

然后,标榜自己质量极好这件事.首先,GhostMix是去年5月做的,23年10月5日登上All Time Highest Rated第一名,作为每一个模型作者来说都是极大的荣耀,我又没有模型来赚钱,作为自我宣传高兴,有什么问题吗?其次,GhostMix真的”极好”吗?哪怕看完GhostReview的结论,GhostMix美学分数和良图率上落后AWpainting,在风格和LoRA兼容性上得分也落后于REV. GhostMix的质量放在当时确实不错,拿着当时我宣传GhostMix的文章来说标榜质量极好?不如拿明朝的剑去斩清朝的官?

Next, the claim that I tout my own model as extremely good. First, GhostMix was made in May of last year, and on October 5, 2023 it reached first place on the All Time Highest Rated list. For any model author that is a great honor. I don't make money from my models, so what is wrong with being happy about it and promoting myself a little? Second, is GhostMix really "extremely good"? Just read the conclusions of GhostReview: GhostMix trails AWpainting in aesthetic score and good-image rate, and it also scores below REV in style and LoRA compatibility. GhostMix's quality was indeed good for its time, but taking the article in which I promoted it back then as proof that I tout it as extremely good? You might as well take a Ming dynasty sword to execute a Qing dynasty official.

2. “一大堆民科理论”

"a bunch of pseudo-scientific theories"

GhostReview提出了一共提出三个方面:1. ckpt出图质量和泛化性分析(Prompt兼容性,画面质量,良图率)2.ckpt风格兼容性分析(画风兼容性)3.ckpt对LoRA兼容性分析.

首先,针对指责最多的一点,ckpt对LoRA兼容性分析我承认错误.事实上去年11月styleloss有争议的时候,我也已经在第一时间针对文章的进行批注.

虽然是错的,但是我想强调,LoRA的兼容性测试并不是随随便便拿一个LoRA就来测,测试的前提是:1.一批风格性LoRA 2.LoRA本身的泛化性不错,所以选了C站好评率最高的风格LoRA,因为前提是足够多的ckpt都能出此风格的结果.而当初做这个测试的目的是在当时真人过拟合模型充斥市面的情况下,此项目作为ckpt画风兼容性测试的后续测试,测试ckpt在风格LoRA下能不能出对应的风格(即检测ckpt画风是否固定).下图就是我原项目的结果,左侧为检测目标,右图上为评分高的模型,右图下为评分低.

为什么解释这个,是因为我做的事情跟LiblibAI在根本上就不一样...我解释过我是怎么做的,给了他们代码,但是他们选择用他们自己的方法...然后说这得怪我?最后我还是承认错误,ckpt对LoRA兼容性分析是个错误,不能这样子做.

GhostReview covers three aspects: 1. ckpt image quality and generalization analysis (prompt compatibility, image quality, good-image rate); 2. ckpt style compatibility analysis; 3. ckpt LoRA compatibility analysis.

First, on the point that has drawn the most criticism: I admit that the ckpt LoRA compatibility analysis was a mistake. In fact, when StyleLoss became controversial last November, I annotated the article right away.

Although it was wrong, I want to stress that the LoRA compatibility test was not run on just any LoRA picked at random. The test had two premises: 1. it uses a batch of style LoRAs; 2. the LoRAs themselves generalize well. That is why I chose the style LoRAs with the highest approval rate on Civitai, since the premise is that enough ckpts can produce results in that style. The test was designed at a time when overfitted photorealistic models flooded the market. It was a follow-up to the ckpt style compatibility test, and its purpose was to check whether a ckpt can produce the corresponding style under a style LoRA, that is, to detect whether the ckpt's style is fixed. The image below shows the results of my original project: the left side is the target being tested, the top right shows models with high scores, and the bottom right shows models with low scores.

Why explain this? Because what I did is fundamentally different from what LiblibAI did... I explained how I did it and gave them the code, but they chose to use their own method... and then this is supposed to be my fault? In the end, I still admit the error: the ckpt LoRA compatibility analysis was a mistake and should not be done that way.

除了LoRA兼容性分析,GhostReview还涉及ckpt出图质量和泛化性分析(Prompt兼容性,画面质量,良图率)和ckpt风格兼容性分析(画风兼容性).这些基本角度多多少少都有之后的paper作为支撑,比如11月的斯坦福的Holistic Evaluation of Text-To-Image Models (HEIM),大量使用LAION-AESTHETICS,ClipScore作为检测工具,而风格相关的则是用MS-COCO (Art styles) 测AlignmentTest.当然从EMU,SVD到HEIM,这些后来的文章都在强调人工评测的重要性,但这些文章都在我做完之后发的

Apart from LoRA compatibility analysis, GhostReview also involves ckpt image quality and generalization analysis (Prompt compatibility, image quality, good image rate) and ckpt style compatibility analysis (style compatibility). These fundamental perspectives are more or less supported by later papers. For example, the November Stanford paper "Holistic Evaluation of Text-To-Image Models (HEIM)" heavily employs LAION-AESTHETICS and ClipScore as evaluation tools, while style-related aspects are measured using MS-COCO (Art styles) for AlignmentTest. Of course, from EMU, SVD to HEIM, these subsequent articles all emphasize the importance of human evaluation, but all of these papers were published after my work was completed.

关于Styleloss.这里有谣言”如之前的 ghostreview 测评工具,lycoris论文[2309.14859]早在23年9月已经提及了相关的东西,但是23年11月的ghostreview仍然使用了相关错误的方法。”首先自己可以上GhostReview的Github看看,2023年8月23日之后就根本没有碰过(见下图),lycoris论文9月发的,我代码8月5日传的,23日的更新是加了HPSV2进去,所以使用Styleloss并不是我看了lycoris论文后才选择的.事实上Styleloss作为著名的风格迁移组件之一,任何DeepLearning相关课程都会学到的,e.g.李沐,吴恩达.其次,我想问什么叫”相关错误的方法”? 首先你图中lycoris文中的结论是虽然使用StyleLoss来衡量风格相似性具有明显优势,但这种度量(Metric)可能仍然无法获得在比较不同模型复制的风格时应考虑的所有细微元素。其次,lycoris文中也是将StyleLoss作为Base Model Preservation部分的研究指标,虽然得出的结论是不太行.但是结合我之前说的对风格LoRA做分析这点,Styleloss在这里依然是较为合理选择的指标来的,如果因为不能绝对准确得出结果就叫”相关错误的方法”,那99%的指标都别用了,事实上LAION-AESTHETICS,ClipScore,FID之类的存在的.

还有我11月初在C站看到这篇文章,为什么没有改代码? 1.确实忙(双11,请假去云栖,之后疯狂加班) 2.一时找不到更好指标+反正代码也没人看+加班加忘了.again,我不是大学生,在现在这样的经济环境下,能有工作就已经开香槟了,哪有时间管没人看的东西. 3.12月被人造谣,就更不能改了,证据就在github,每人都可以看.

Regarding StyleLoss, there is a rumor going around: "As with the earlier ghostreview evaluation tool, the lycoris paper [2309.14859] had already mentioned the relevant issue back in September 2023, yet the November 2023 ghostreview still used the relevant incorrect method." First, you can check GhostReview's GitHub yourself: I have not touched it at all since August 23, 2023 (see the image below). The lycoris paper came out in September, I uploaded my code on August 5, and the update on August 23 only added HPSV2. So I did not choose StyleLoss after reading the lycoris paper. In fact, StyleLoss is one of the best-known components of style transfer and is taught in any deep learning course, e.g. those of Li Mu and Andrew Ng.

Second, I want to ask what "the relevant incorrect method" is supposed to mean. The conclusion in your screenshot of the lycoris paper is that although using StyleLoss to measure style similarity has clear advantages, the metric may still fail to capture all the subtle elements that should be considered when comparing the styles reproduced by different models. The lycoris paper also uses StyleLoss as a study metric in its Base Model Preservation section, although its conclusion there is that it does not work very well. Combined with my earlier point that the analysis was run on style LoRAs, StyleLoss remains a fairly reasonable choice of metric here. If a metric is "the relevant incorrect method" just because it cannot give absolutely accurate results, then 99% of metrics should not be used at all. Yet metrics such as LAION-AESTHETICS, ClipScore, and FID exist and are in use.
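For readers unfamiliar with the metric, StyleLoss in the style-transfer sense compares Gram matrices of feature maps. The following is a minimal sketch of that idea only, not the GhostReview code. It assumes the feature maps are already available and uses random NumPy arrays as stand-ins (in practice they usually come from a pretrained CNN such as VGG); the names `gram_matrix` and `style_loss` are illustrative.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Turn a (C, H, W) feature map into a normalized (C, C) Gram matrix."""
    channels, height, width = features.shape
    flat = features.reshape(channels, height * width)
    # Channel-to-channel correlations, summed over all spatial positions.
    return flat @ flat.T / (channels * height * width)


def style_loss(feats_a, feats_b) -> float:
    """Sum over layers of the mean squared difference between Gram matrices."""
    total = 0.0
    for fa, fb in zip(feats_a, feats_b):
        diff = gram_matrix(fa) - gram_matrix(fb)
        total += float(np.mean(diff ** 2))
    return total


# Stand-in feature maps for two "layers" (assumption: real ones come from a CNN).
rng = np.random.default_rng(0)
reference = [rng.standard_normal((8, 16, 16)), rng.standard_normal((16, 8, 8))]
# Same values shifted horizontally: different layout, same channel statistics.
rolled = [np.roll(f, shift=3, axis=2) for f in reference]
# Features with a different scale, hence different channel statistics.
other = [3.0 * rng.standard_normal(f.shape) for f in reference]

print("reference vs itself:", style_loss(reference, reference))
print("reference vs rolled:", style_loss(reference, rolled))
print("reference vs other: ", style_loss(reference, other))
```

Because the Gram matrix sums over spatial positions, the rolled copy scores essentially zero against the reference even though its layout differs. This is one reason a low StyleLoss on its own does not show that two images match in every aspect of style.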

As for why I didn't change the code after seeing this article on Civitai in early November:

(1) I really was busy (Double Eleven, taking leave to attend the Yunqi Conference, then frantic overtime).

(2) I couldn't find a better metric at the time, nobody was reading the code anyway, and with all the overtime I simply forgot. Again, I am not a college student. In the current economic environment, just having a job is reason to open the champagne; where would I find time for something nobody looks at?

(3) In December rumors were spread about me, which made changing the code even less of an option. The evidence is on GitHub, and anyone can check it.

3. 天天看论文.这点最近确实因为忙没有了,但幸亏之前看的一些东西看完都发了出来自己的想法,知乎和B站动态就有很多,包括很多冷门的工作,放一篇文章,一篇想法自己去看吧...

Reading papers every day. It is true that I haven't done so lately because I have been busy. Fortunately, I posted my own thoughts on much of what I read earlier. There are many such posts on my Zhihu and Bilibili feeds, including ones on quite a few obscure works. Here is one article and one short post; go and read them yourself...

StyleCrafter:利用StyleAdapter进行风格化的文生视频(论文概述3) - 知乎 (zhihu.com)

4. 关于训练 About Training

首先我从来没有说过我训练很强,我从来都说GhostMix是融合模型,没有经过训练,从来没有遮掩.事实上,ghost_test2版本是在我测试参数过程中运气好出来的(要不名字也是不是ghost_test2了),成了效果最好的一版.所以我也犹豫过要不要把这个版本config发出来,因为参数确实很傻逼.下面是ghost_test2的每一个save point的ckpt.

First, I have never claimed to be good at training. I have always said that GhostMix is a merged model that was not trained, and I have never hidden that. In fact, the ghost_test2 version came out of a lucky run while I was testing parameters (otherwise it would not be called ghost_test2), and it turned out to be the best-performing version. I even hesitated over whether to publish the config for this version, because the parameters really are dumb. Below are the ckpts for each save point of ghost_test2.

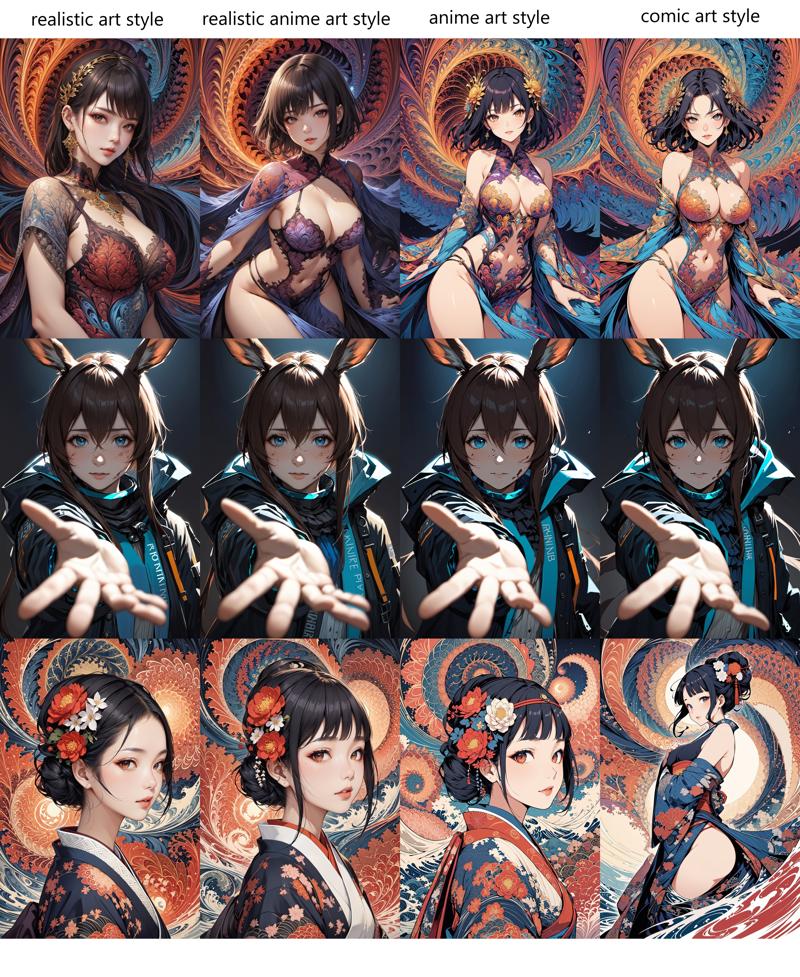

但你说ghost_test2训练之后融合跟你AnimagineV31直接融合两个模型一样,就哈哈哈,首先我发的时候没有AnimagineV31,其次xx art style系列到底那个更有用,我相信没有眼瞎的都看得出答案.图1GhostXL,图2 AnimagineV31融合HelloWorld及Dreamshaper.

But when you say that training ghost_test2 and then merging is the same as directly merging your AnimagineV31 with two models, that is just laughable. First, AnimagineV31 did not exist when I released mine. Second, as for which one is actually more useful for the "xx art style" series, I believe anyone who isn't blind can see the answer. Figure 1 is GhostXL; Figure 2 is AnimagineV31 merged with HelloWorld and Dreamshaper.

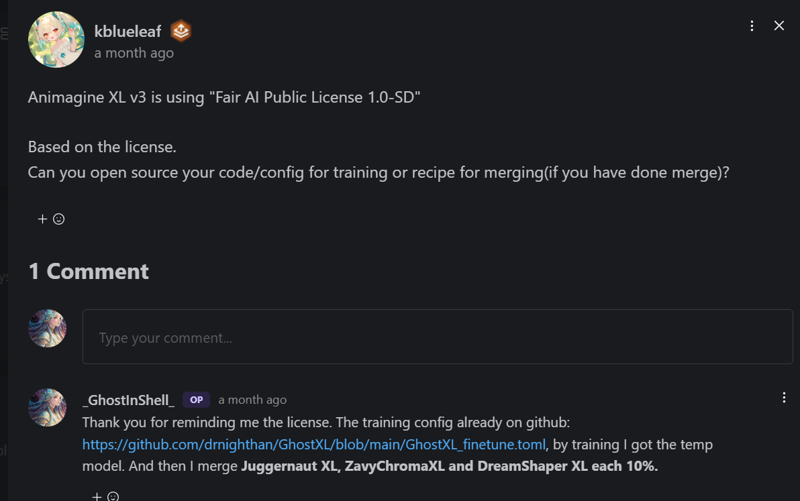

5. “而根据AnimagineXL模型的开源协议,这些东西其实都应该是作者自己公开的。”在GhostXL发布的当天就公开了下图,但merge部分确实是测试融合配方版本多导致版本错误,就不太确定没有写在详情里面,本人已经对Hello World的作者郑重道歉.

"According to the open-source license of the AnimagineXL model, all of these things should in fact have been disclosed by the author." On the day GhostXL was released, I published the image below. As for the merge part, though, I had tested so many merge recipes that I got the versions mixed up; I was not sure of the recipe, so I did not write it in the model details. I have already solemnly apologized to the author of HelloWorld.

6. 关于现在ckpt应该是解决做的到的问题,然后LoRA,controlnet等是解决做的对的问题.

On the statement that ckpts should now solve the problem of what can be done, while LoRA, ControlNet, and the like solve the problem of doing it right.

首先,关于LoRA兼容性问题,我上面已经说过了,我再次为我的无知道歉.再次这篇文章是写于AI模型薅羊毛时期,一开头就说明了.当时的语气过激,现在看来是极为不恰当的,再次道歉.还有这篇文章是纯粹我个人观点并自己很业余,在里面提到好几次,写这篇文章的目的也是希望在当时的时候,让大家多关注模型画风兼容性等问题.

然后,就是这句:关于现在ckpt应该是解决做的到的问题,然后LoRA,controlnet等是解决做的对的问题.但是就这句话,我依然认为这句话是对的,特别是在当时SDXL还没有发布的情况下,你要想画特定风格的特定人物,就应该是ckpt应该是解决能出类似风格的图片之后,然后LoRA,controlnet等去做到精准出特定风格与人物.现在SDXL,比如Juggernaut XL,ZavyChromaXL都是这类模型的代表,我觉得很不错.

First, regarding the LoRA compatibility issue, as I said above, I apologize again for my ignorance. I also repeat that this article was written during the period when people in China were farming platform rewards with AI models: many uploaded "trash models" to earn money from platforms such as LiblibAI and Tusi. The article said so at the very beginning. The tone I used at the time was overheated and, looking back, highly inappropriate, and I apologize again. The article is also purely my personal opinion, and I mentioned several times in it that I am an amateur. My aim in writing it was to get people at the time to pay more attention to issues such as model style compatibility.

Now, about the statement itself: "ckpts should now solve the problem of what can be done, while LoRA, ControlNet, and the like solve the problem of doing it right." I still believe it is correct, especially given that SDXL had not yet been released at the time. If you want to draw a specific character in a specific style, the ckpt should first be able to produce images in a similar style, and then LoRA, ControlNet, and so on make the result precisely match the specific style and character. Today's SDXL models such as Juggernaut XL and ZavyChromaXL are representative of this kind of model, and I think they are very good.

所有回应结束,这也是我想说的一切了,信也好不信也罢,不会再进行任何自证.做模型曾经是给我带来快乐的东西,但这快一个月这一切都没有给我带来任何快乐,除了被骂就是被骂.我需要休息一段时间,可能是一天,一个月,也可能是永远,再见各位.

That concludes all of my responses, and it is everything I want to say. Believe it or not, I will not try to prove myself any further. Making models used to bring me joy, but for almost a month now none of this has brought me any joy, only abuse and more abuse. I need to rest for a while, maybe a day, maybe a month, maybe forever. Goodbye, everyone.