INTRODUCTION

Hello, I’m VegaProxy!

This article shares my limited insights on LoRA creation and dataset preparation. I'll open by saying I'm not a leading expert in this field; I'm just a guy with far too much time on his hands. Still, my experience has led me to develop theories and practices that I'd like to share. I mainly want to contribute to the broader knowledge on Image Training and Workflow management, so you can be better informed going forward (if you don't already know some of what I touch on), and perhaps advance this topic as you apply it in your own way.

This article was inspired by crystalkalem, a fledgling LoRA creator who reached out to me for advice. I appreciate the drive for knowledge, and I'm always happy to share what I know when I can, so that others can grow and improve in this field. I also want to emphasize the importance of a learning mindset, especially in AI and image generation: thinking outside the box, and being comfortable asking questions even when you believe you know the answer, will sometimes yield results you could never expect or predict. This is what separates growth from stagnation.

Hardware

OK, to start out I want to share my hardware. I think it's important to get that out of the way: while I know cloud solutions exist, I primarily train locally at the moment, and my perspective comes from that basis, so knowing the hardware can help you understand what works and how well it works.

GPU: Nvidia RTX 3060 12GB vRAM

CPU: Ryzen 7 5700G

RAM: 16GB

Some may not be aware of the importance of vRAM, especially if they don't generate locally, but it's very important. While you can get away with 8GB of vRAM, at least 12GB is recommended; otherwise you will need to use limiting presets to avoid overloading your PC when training locally in Kohya. In fact, 12GB is not immune to this either: I had to deal with overloading on occasion until I decided to switch to Low vRAM presets. Not many people coming into the field know about this issue, so it needs to be addressed so that they can curb it and make the characters they admire.

I've included my recommended SDXL presets in the attachments, including Low vRAM versions; I recommend using those if you get any errors. These are primarily Character-focused presets, but the creator of Autism Mix XL (Pony) and the Nyantcha Style has also shared their own Style presets: versions that use tag settings if you want to add a tag to your Style (for example, I add an artist name), and versions without any tag settings if you just want a passive Style LoRA. Those are in the attachments as well.

Workflow

I typically start by asking myself whether I want to make a Character, a Concept, or a Style. Each of these takes a vastly different approach, both in obtaining good training images and in describing them via Booru tags, which is my preferred method because I find them easier to break down as a trainer, and easier to mix and play around with as a generator.

Next I check Booru sites, or the anime/show itself if the Character or Style comes from an anime/show. If it's a Style based on an artist, I'll either support their Patreon and get the images from them directly, or use Booru sites if that option isn't available. I usually aim for at least 40 images for a Character (although that isn't always possible), at least 100 for a Concept, and at least 150 for a Style. The amount may increase with complexity, though, so don't be afraid to explore a little if the results don't quite meet your expectations.
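To keep those starting counts in view while collecting, a tiny helper can report how far a folder falls short. This is a minimal Python sketch; the numbers come from the paragraph above, while the names `MIN_IMAGES` and `images_short` are hypothetical. Treat the counts as starting points, not hard rules, since complex subjects may need more and some Characters simply have fewer images available.

```python
# Starting-point image counts from this article (not hard limits).
MIN_IMAGES = {"character": 40, "concept": 100, "style": 150}


def images_short(kind: str, count: int) -> int:
    """Return how many images short of the starting point a dataset is (0 if met)."""
    return max(0, MIN_IMAGES[kind.lower()] - count)


if __name__ == "__main__":
    for kind, count in [("character", 32), ("concept", 120), ("style", 150)]:
        short = images_short(kind, count)
        status = "ok" if short == 0 else f"{short} short"
        print(f"{kind}: {count} images -> {status}")
```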

Also, this is VERY important: I don't crop any of the images, nor do I recommend anyone do so. People used to do this more often back with SD 1.5 LoRAs, but it was plainly a bad idea then, and it still is now. The idea was that because the base model was trained on 512x512 square images, you should do the same for LoRAs, and in recent times that theory has been bumped up to 1024x1024. However, cropping has been shown to remove flexibility from a LoRA/Embedding in certain circumstances, not to mention that most mixes disregard square form factors anyway. So just leave the images as they are, rename each image numerically from 1 to the number you have downloaded, and make the same number of .txt files. This sets up your base dataset in a clean, easily viewable form.
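The rename-and-caption step is easy to script. Below is a minimal Python sketch, assuming all downloaded images sit in one folder and use one of the extensions in `IMAGE_EXTS` (an assumption; adjust it for your files). It orders files by name (also an assumption, since any order works), never crops or resizes anything, and creates an empty .txt caption file per image. The function name `prepare_dataset` is hypothetical.

```python
from pathlib import Path

# Assumed image extensions; adjust to match your dataset.
IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}


def prepare_dataset(folder: str) -> int:
    """Rename images to 1..N (keeping their extensions, no cropping) and
    create a matching empty N.txt caption file for each. Returns N."""
    root = Path(folder)
    images = sorted(p for p in root.iterdir()
                    if p.is_file() and p.suffix.lower() in IMAGE_EXTS)

    # Pass 1: move everything to temporary names so a file that is already
    # called e.g. "2.png" can't be overwritten during the final renames.
    temp = []
    for i, img in enumerate(images, start=1):
        tmp = root / f"__tmp_{i}{img.suffix.lower()}"
        img.rename(tmp)
        temp.append(tmp)

    # Pass 2: final numeric names plus an empty caption file per image.
    for i, tmp in enumerate(temp, start=1):
        tmp.rename(root / f"{i}{tmp.suffix}")
        caption = root / f"{i}.txt"
        if not caption.exists():
            caption.touch()
    return len(temp)
```

Running `prepare_dataset("my_dataset")` on a folder holding `2.png`, `a.jpg`, and `b.png` would leave `1.png`, `2.jpg`, `3.png` and `1.txt` through `3.txt`, ready to open in DataSetTagManager.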

DataSetTagManager

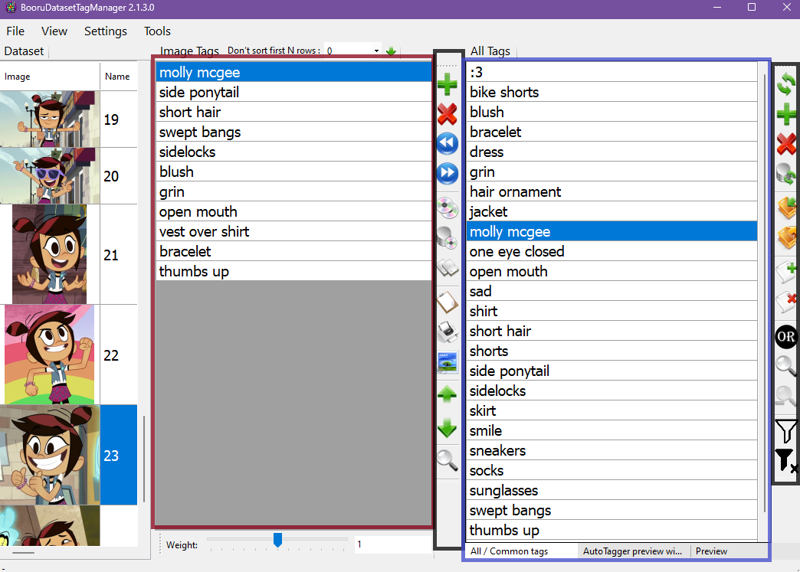

Now let's load DataSetTagManager. I'll show you some of the layout here:

RED = Tag window that displays all tags for the image selected on the left

BLUE = Tag window that displays either All tags or All Common tags across every image

BLACK = Function buttons that affect the tag window directly to their left; they have several functions, so hover over them in the app to learn more

The point of this is to get comfortable with the UI, because this tool will be your friend when quickly tagging 150+ images, which can save you literally HOURS of prep work.

I'll include an example dataset that you can use to see how a fine-tuned dataset looks in the program, so feel free to play around until you understand it and can replicate it. Also keep in mind that I use a scan line tagging method, which takes in visual info from top to bottom.

Some fun backstory: I named it "Scan Line Tagging" after the inspiration I had back in 2022, when I first started using this style of tagging for image generation, and later for training as LoRAs started to release. The inspiration, of course, was CRT TVs/monitors and how they render frames using scan lines, often going from top to bottom. I decided to do this because I thought that if I'm making an AI image, I should also think about how I believe an AI would process images. Of course, AI is much more complex than that, but the habit stuck, and I find it easy to follow and modify. I recommend it to any beginner, or even professional, in the field, as it gets you to think about what you're adding and why you're adding it.
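Scan Line Tagging is really just an ordering rule: list what you see from the top of the image down. As a rough illustration, here is a minimal Python sketch, assuming you jot down an approximate vertical position for each feature by eye (0.0 = top of the image, 1.0 = bottom). Those positions and the helper name `scan_line_caption` are hypothetical; the character name simply leads the caption, as in the Molly McGee example further down.

```python
from typing import List, Optional, Tuple


def scan_line_caption(tagged: List[Tuple[str, float]],
                      name: Optional[str] = None) -> str:
    """Join tags in top-to-bottom order.

    Each entry is (tag, y), where y is a rough, hand-estimated vertical
    position of the feature: 0.0 = top of the image, 1.0 = bottom.
    """
    # sorted() is stable, so tags at the same height keep their input order.
    ordered = [tag for tag, _ in sorted(tagged, key=lambda item: item[1])]
    if name:
        ordered.insert(0, name)  # the character name always leads the caption
    return ", ".join(ordered)


if __name__ == "__main__":
    print(scan_line_caption(
        [("thumbs up", 0.7), ("side ponytail", 0.05), ("grin", 0.3),
         ("swept bangs", 0.1), ("vest over shirt", 0.6)],
        name="molly mcgee"))
```

The positions don't need to be precise; they only exist to force the top-to-bottom habit.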

Also, while tagging with this method is straightforward, I feel Characters, Concepts, and Styles should each be approached completely differently. Here is how I would tackle each one:

Character Tagging

When tagging a character, I focus on the specific features and attributes that define that character. This includes physical characteristics like hair color, facial features, and eye color, plus clothing and accessories, as well as expressions and poses characteristic of their personality. The goal is to capture the essence of the character in the tags. Below is an example I made for the junior creator: Molly McGee from Disney's The Ghost and Molly McGee. Take a look at how I decided to tag her, as well as the Scan Line Tagging method I used. Also keep in mind that I ONLY add tags for things that are fully visible. The way I see it, if you can't fully see it, the AI might as well not be able to see it at all. For instance, she is wearing a skirt and it is partially visible, but I omitted it from the tags.

Think about tagging like this: leaving something out is often better than adding it at all. Or, put another way, sometimes less is more.
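The "only tag what is fully visible" rule is easy to apply mechanically once you've judged each feature by eye. A minimal Python sketch, assuming you note each candidate tag with a visibility flag you set yourself (the flag and the `write_caption` helper are hypothetical, not a DataSetTagManager feature). It writes the kept tags, comma-separated, to the .txt file that shares the image's name.

```python
from pathlib import Path
from typing import Iterable, Tuple


def write_caption(image_path: str, tags: Iterable[Tuple[str, bool]]) -> str:
    """Save only the fully visible tags, comma-separated, to the .txt file
    sharing the image's name (e.g. 1.png -> 1.txt) and return the caption."""
    kept = [tag for tag, fully_visible in tags if fully_visible]
    caption = ", ".join(kept)
    Path(image_path).with_suffix(".txt").write_text(caption, encoding="utf-8")
    return caption


if __name__ == "__main__":
    molly = [("molly mcgee", True), ("side ponytail", True), ("short hair", True),
             ("swept bangs", True), ("sidelocks", True), ("blush", True),
             ("grin", True), ("open mouth", True), ("vest over shirt", True),
             ("bracelet", True), ("skirt", False),  # only partially visible
             ("thumbs up", True)]
    print(write_caption("1.png", molly))
```

The partially visible skirt is dropped, matching the Training Tags below.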

Training Tags

molly mcgee, side ponytail, short hair, swept bangs, sidelocks, blush, grin, open mouth, vest over shirt, bracelet, thumbs up

Concept Tagging:

Concept tagging is about capturing the action or situation depicted in the image. Rather than focusing on the character's attributes (unless those are the focus of the concept you're making), you focus on what the character is doing or experiencing. For instance, if your concept is ‘Eating a Sandwich’, you might use tags like ‘holding sandwich’, ‘taking a bite’, ‘happy expression’, etc.

Style Tagging:

IMO this is the easiest to make: just write the name of the artist, and the AI will interpret the rest. Adding more details will only reduce the flexibility of a Character or Concept used alongside the Style. Remember, the fewer tags you use, the more flexibly the AI can interpret the images given, and you should take the most advantage of that flexibility when making a Style. Less can be more.
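Since every Style caption is the same single tag, there is no need to type it by hand. A minimal Python sketch, assuming your images are in one folder with the extensions listed in `IMAGE_EXTS` (an assumption) and that the artist name is the only tag you want; `write_style_captions` and the example name are hypothetical. It overwrites any existing caption files for those images.

```python
from pathlib import Path

# Assumed image extensions; adjust to match your dataset.
IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}


def write_style_captions(folder: str, artist: str) -> int:
    """Give every image in the folder a caption containing only the artist
    name (overwriting existing captions). Returns the number written."""
    count = 0
    # sorted() builds the full list before any .txt files are created.
    for img in sorted(Path(folder).iterdir()):
        if img.is_file() and img.suffix.lower() in IMAGE_EXTS:
            img.with_suffix(".txt").write_text(artist, encoding="utf-8")
            count += 1
    return count
```

For example, `write_style_captions("style_dataset", "example artist")` would give each image an identical one-tag caption, leaving everything else for the AI to pick up on its own.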

As far as setting up a Kohya preset, I won't be going into detail. Fortunately, there are presets you can load that take a lot of the load off your shoulders; just remember to account for the limits of the hardware you're using. If you have 12GB of vRAM or less, look for Low vRAM presets; if you have 24GB+ of vRAM, just go with whatever preset works best for you.

I've included my presets in the attachments mentioned in the Hardware section, and you're free to use those, but don't be afraid to explore presets made by other creators and see if they work better for you.
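If you're not sure how much vRAM your card has, you can check it and map it to the rule of thumb above. A minimal Python sketch, assuming PyTorch is installed (it usually is alongside Kohya); the function names and tier labels are hypothetical and are not Kohya preset names. The article only covers 12GB or less and 24GB+, so the in-between tier (use a standard preset, fall back to Low vRAM on errors) is an assumption based on the Hardware section's advice.

```python
from typing import Optional


def detect_vram_gb() -> Optional[float]:
    """Total memory of the first CUDA GPU in GiB, or None when PyTorch
    isn't installed or no CUDA device is available."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(0).total_memory / 1024 ** 3


def preset_family(vram_gb: float) -> str:
    """Rough rule of thumb from this article, not a hard limit."""
    if vram_gb <= 12:
        return "low vram"
    if vram_gb >= 24:
        return "any"
    # Assumed middle tier: not covered explicitly in the article.
    return "standard (switch to low vram on errors)"


if __name__ == "__main__":
    vram = detect_vram_gb()
    if vram is None:
        print("No CUDA GPU detected (or PyTorch is missing)")
    else:
        print(f"{vram:.1f} GiB vRAM -> {preset_family(vram)} preset")
```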

Once you pick a base model you prefer, like Autism Mix XL / Animagine XL / Pony Mix XL in my case, you're ready to load it and use it. I generate anime/manga-styled characters, deviating from the realistic meta in favor of more stylized subjects, but you might prefer a base model that leans more toward realism.

Keep in mind, though, that LoRAs work best with the model you trained them on. There are several reasons for this: the weights in the base model are used as a reference for the LoRA during training, and not every base model will interpret those trained weights the same way. So it is always recommended to use a LoRA alongside the base model it was trained on for the best results, and the same goes for combining LoRAs. I can't count how often I've seen generations ruined because someone forgot that the LoRAs they used were trained on different base models and therefore contradicted each other. I know it sometimes works, but the difference between sometimes and always is huge when you want to make a high-quality, memorable generation you're proud enough to use as a wallpaper, for instance.
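If you're unsure which base model a LoRA was trained on, the file itself may tell you. The sketch below reads the metadata of a .safetensors file using only the Python standard library: the format starts with an 8-byte little-endian header length, followed by a JSON header that may contain a `__metadata__` section. It assumes the LoRAs were trained with Kohya's scripts, which commonly record the base model under `ss_sd_model_name`; treat that key as an assumption and check it against your own files, since other trainers may use different keys or none. The function names are hypothetical.

```python
import json
import struct
from pathlib import Path
from typing import Dict, Iterable, Optional

# Assumption: Kohya's training scripts usually store the base model's name
# under this metadata key; verify it against your own files.
BASE_MODEL_KEY = "ss_sd_model_name"


def read_metadata(path: str) -> Dict[str, str]:
    """Return the __metadata__ block of a .safetensors file (may be empty).
    Layout: 8-byte little-endian header length, then a JSON header."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len))
    return header.get("__metadata__", {})


def base_models(paths: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each LoRA file name to its recorded base model (None if absent)."""
    return {Path(p).name: read_metadata(p).get(BASE_MODEL_KEY) for p in paths}


def share_base_model(paths: Iterable[str]) -> bool:
    """True only if every file records the same, non-missing base model."""
    names = set(base_models(paths).values())
    return len(names) == 1 and None not in names
```

The recorded value is just the checkpoint's name at training time, so a renamed copy of the same model would show up as a mismatch; use it as a hint, not proof.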

Thank you for reading. I hope this provided some insight you didn't have before.

Also, if you're curious: crystalkalem agreed to let me publish both the training data here and the LoRA for download on my page. Considering the nature of my work, and how starkly this character differs from the mature subject matter of many of my other LoRAs, I ask everyone who wants this LoRA to please exercise the same restraint with such a young character. Given the internet, this is all I can ask. Please.