General: everything depends on how many photos you have and on their quality. The checkpoint you use as the source model also matters: the results are always different, sometimes worse and sometimes better. I have tried 20 checkpoints with the same photos and the results are often different.

Even if the trained LoRA looks good with source-model-A, the results can be very different with source-model-B.

...........................................................................................

So where do I start... I installed kohya:

https://github.com/bmaltais/kohya_ss#installation

It's standalone software!

There I use "LoRA", which should not be that different from Dreambooth,

so most of the parameters can stay as they are!

Additional notes for SDXL:

It uses much more VRAM because the images should be at 1024 px resolution.

Learning is faster.

Optimizer: "Adafactor".

-> All in all it is more or less the same, with lower learning rates (all three) at around 0.00001.

But first, the pictures:

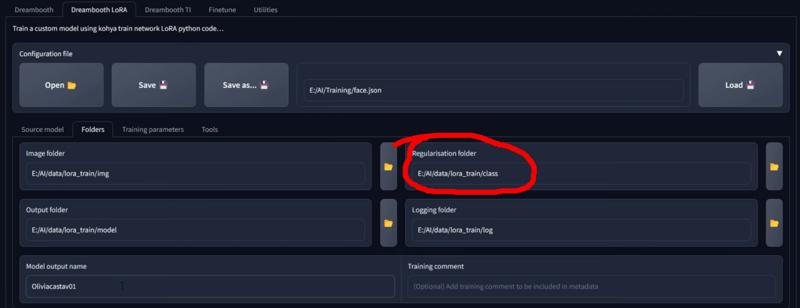

You need a folder; name it whatever you like!

Then create 3 folders inside it: "images", "logs" and "models". I also suggest "reg" (see below).

Inside "images" you need a subfolder named like "010_prompt" (the number can be different).

Here 010 means 10 iterations (each image is repeated 10 times per epoch) and the prompt is your main idea, for example "a portrait photo of a woman", so the folder name is "010_a portrait photo of a woman".

Place your images in that folder: maybe 3, better 10, up to 50.
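The layout above can also be created with a few lines of Python. This is only a minimal sketch: the function name `make_training_folders` and its parameters are made up for this example, and the folder names simply follow the ones used in this post.

```python
from pathlib import Path


def make_training_folders(root, prompt, repeats=10, with_reg=True):
    """Create the folder layout described above and return the image subfolder.

    `root`, `prompt`, `repeats` and `with_reg` are illustrative names,
    not kohya settings.
    """
    root = Path(root)
    # e.g. images/010_a portrait photo of a woman
    image_dir = root / "images" / f"{repeats:03d}_{prompt}"
    image_dir.mkdir(parents=True, exist_ok=True)
    for name in ("logs", "models"):
        (root / name).mkdir(exist_ok=True)
    if with_reg:
        # Optional folder for regularisation images.
        (root / "reg").mkdir(exist_ok=True)
    return image_dir
```

Calling `make_training_folders("my_lora", "a portrait photo of a woman")` would create `my_lora/images/010_a portrait photo of a woman` plus the other folders; the images and caption files still have to be copied in by hand.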

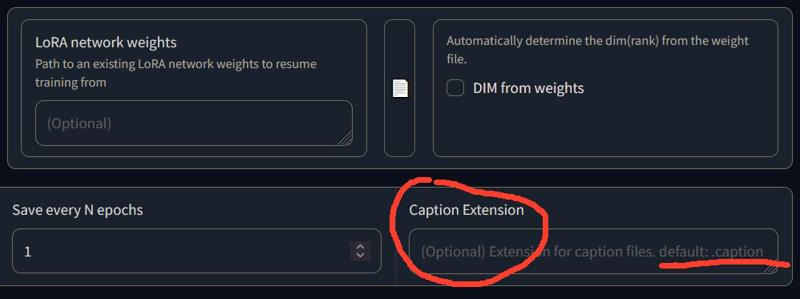

The names of the image files are not important, but every image needs a .txt or .caption file with the same name as the image. The default is .caption, but you can change it to .txt.

In that file you can describe in more detail what you see in the image.

For example: "a face portrait of a woman with a smile". BUT it is better to put a word here that triggers your model later, so maybe "a face portrait of a woman called LJCandle . . .", with a name that is unique. There is an automatic option under "utilities" -> "BLIP to caption"; it mostly does not work that well, but it's a start.

The reg folder: it's optional, but sometimes it reduces overtraining.

Create a folder named 001_reg.

001 = 1: the training uses the images in it one time.

You can think of the images in this folder as a hint or sketch for the training, so you really can put sketches or similar images or styles there: about 5 images, up to twice as many as you have in your images folder.

So that's all for the files and folders; back to "LoRA".
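Before training, it can help to check that every image really has its caption file and that the trigger word is in it. The following is a minimal sketch, not part of kohya: `check_captions` and the list of image extensions are assumptions made for this example.

```python
from pathlib import Path

# Assumption: these are the image types in the training folder.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def check_captions(image_dir, caption_ext=".caption", trigger=None):
    """Return two lists of image names: missing caption, caption without trigger."""
    missing, without_trigger = [], []
    for image in sorted(Path(image_dir).iterdir()):
        if image.suffix.lower() not in IMAGE_EXTENSIONS:
            continue  # skip caption files and anything else
        caption = image.with_suffix(caption_ext)  # same name, other extension
        if not caption.is_file():
            missing.append(image.name)
        elif trigger and trigger not in caption.read_text(encoding="utf-8"):
            without_trigger.append(image.name)
    return missing, without_trigger
```

For example, `check_captions("images/010_a portrait photo of a woman", trigger="LJCandle")` returns the images that still need a caption and the ones whose caption does not mention the trigger word. Pass `caption_ext=".txt"` if you changed the extension.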

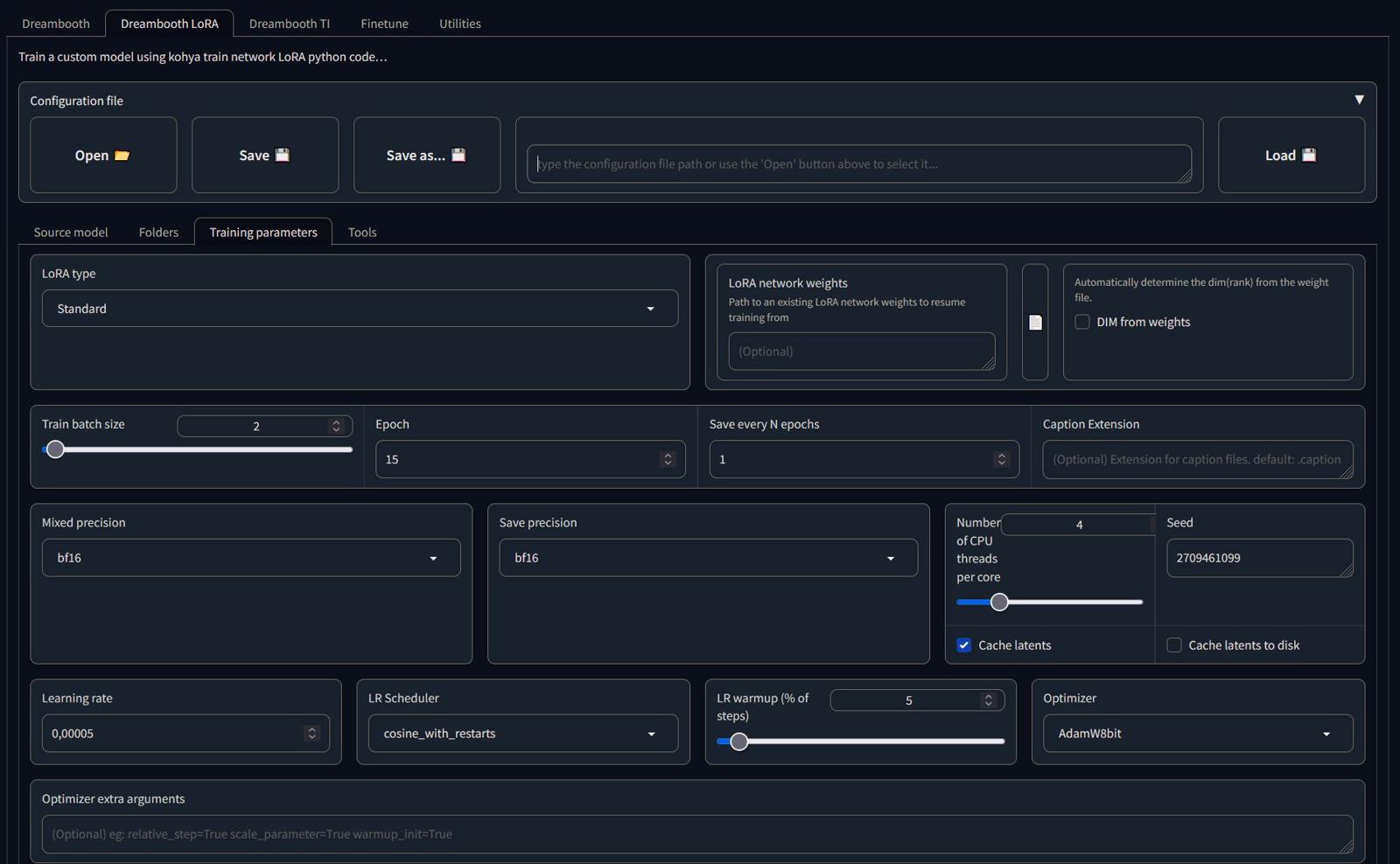

Train batch size: try 1 up to 4 and see what is faster for you (depends on VRAM).

Download GPU-Z to watch the VRAM (if the VRAM is full, you should change your settings).

But at first, only play with "Learning rate".

Default: 0.00001. You can play with values from close to 0 up to 0.0005; for me a low rate is better for very similar pictures and a higher rate for pictures with more varied content.

Every time you change "Learning Rate", change the "Unet learning rate" to half of it and the "Text Encoder learning rate" to around 1/4 of it. (For SDXL all three can be the same.)
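The rule of thumb above is easy to write down as a small helper. This is just a sketch of my own rule, not something kohya does for you; the function name and the dictionary keys are made up for the example.

```python
def derived_learning_rates(learning_rate, sdxl=False):
    """Derive the Unet and Text Encoder rates from the main learning rate.

    Rule of thumb from this post: Unet = 1/2, Text Encoder = 1/4;
    for SDXL all three may stay the same.
    """
    if sdxl:
        unet_lr = text_encoder_lr = learning_rate
    else:
        unet_lr = learning_rate / 2
        text_encoder_lr = learning_rate / 4
    return {
        "learning_rate": learning_rate,
        "unet_lr": unet_lr,
        "text_encoder_lr": text_encoder_lr,
    }
```

So a learning rate of 0.0001 would give 0.00005 for the Unet and 0.000025 for the Text Encoder; the values still have to be typed into the GUI fields by hand.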

Check "Cache latents"; for me it is faster and uses less VRAM.

For Adafactor:

Add in "Optimizer extra arguments": scale_parameter=False relative_step=False warmup_init=False

And in "Additional parameters": --network_train_unet_only

(For Adam or other optimizers this can be different.)

"Epoch": the default is 1. It means more or less the same as the number you give the folder ("010..."), BUT the two are multiplied with each other.

So the folder number and the epoch count are multiplied, and then multiplied by the number of pictures you have.

Example: folder name "010_ . . . ." (10) * epochs (20) * number of pictures (7) = 1400

So that is a good start for the iterations; play with values from 1000 up to 3000.
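The multiplication can be sketched in Python to try out different combinations before training. The function names are made up for this example, and dividing by the batch size (rounded up per epoch) is an assumption that mirrors the batch size hint in this post; the number kohya prints at the start of training is the one that counts.

```python
import math


def repeats_from_folder(folder_name):
    """Read the leading number of a folder name such as '010_a portrait photo'."""
    return int(folder_name.split("_", 1)[0])


def total_steps(folder_name, epochs, num_images, batch_size=1):
    """Estimate the iterations: folder number * pictures per epoch, times epochs.

    Assumption: the steps of one epoch are divided by the batch size
    and rounded up.
    """
    repeats = repeats_from_folder(folder_name)
    steps_per_epoch = math.ceil(repeats * num_images / batch_size)
    return steps_per_epoch * epochs
```

With the example above, `total_steps("010_a portrait photo of a woman", epochs=20, num_images=7)` gives 1400, and 700 with `batch_size=2`.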

(Hint: if you change the train batch size to 2, the iterations are divided by 2.)

"Mixed precision" and "Save precision": "bf16" works for me (RTX graphics card).

"LR Scheduler": start with "constant" or "constant with warm up" at 5%, or "cosine".

With warm up you can choose "LR warmup (% of steps)": 5 up to 10%.
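To get a feeling for what the percentage means in steps, here is a tiny sketch. The function name is made up, and rounding down is an assumption; kohya may round differently.

```python
def warmup_steps(total_steps, warmup_percent):
    """Convert "LR warmup (% of steps)" into a number of steps (rounded down)."""
    if not 0 <= warmup_percent <= 100:
        raise ValueError("warmup_percent must be between 0 and 100")
    return int(total_steps * warmup_percent / 100)
```

For a training run of 1400 steps, 5% corresponds to 70 warm up steps and 10% to 140.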

Network Rank (Dimension): 16, at most 64 (the more complex the subject, the higher).

Network Alpha: 8, at most 32 (half of Network Rank).

(Hint) Save every N steps: e.g. 200 (this way you get the learning stages in between, and you can set "Save every N epochs" to zero).

Max resolution: the maximum resolution of your images (I enter only one number, the largest in my images).

Check "Enable buckets" and set min/max from 62 up to your max resolution.

SDXL Specific Parameters: check only "No half VAE".

Check "Gradient checkpointing".

Try "Memory efficient attention".

And VERY useful:

(Hint) Sample every n steps: e.g. 100 (it generates pictures, so you can see how the model learns).

For this it's useful to have a prompt!

"Sample prompts:" a photo of a young woman, looks at viewer, hyper realistic, intricate texture, film grain, film stock photograph --n painting, drawing, sketch, cartoon, anime, manga, render, CG, 3d, blurry, watermark, signature, label --w 1024 --h 704

--w and --h depend on your initial images (I got some errors when a number was not divisible by 64).
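To avoid such errors, the sizes can be rounded down to the next multiple of 64 before they go into the prompt. A minimal sketch; the function names are made up for this example, and only the `--w` and `--h` options from the sample prompt above are used.

```python
def round_down_to_multiple(value, multiple=64):
    """Round down to a multiple of `multiple`, but never below one multiple."""
    return max(multiple, (value // multiple) * multiple)


def sample_size_args(width, height, multiple=64):
    """Build the --w/--h part of a sample prompt from an image size."""
    w = round_down_to_multiple(width, multiple)
    h = round_down_to_multiple(height, multiple)
    return f"--w {w} --h {h}"
```

An image of 1000 x 700 pixels would give `--w 960 --h 640`, while 1024 x 704 stays unchanged because both numbers are already divisible by 64.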

The images are saved inside your model folder (in "sample").

There you also see your prompt as a txt file; you can change it while training ;)

Choose a source model under "Pretrained model name or path":

.../stable-diffusion-webui/models/Stable-diffusion/

Your choice! I suggest a base model.

Under "Folders", select your folders: "model", "logs" and "reg".

(Hint: don't go into the folder; only click on it and select it.)

Click "Train model".

You can press the "Pause" button at any time; read carefully if user warnings appear, then press "Space" to go on.

During training you see the progress bar and 3 numbers:

Average key norm: should start at 0.001 and should be approx. 0.1 at 20%.

Keys Scaled: should be zero, at most 10; if it is jumping between 20 and 100, I would think the training is not working.

avr_loss: mostly around 0.2 to 0.05. The number should be slowly decreasing; if it is dropping fast, I would think the training is over.

Have I forgotten important steps???

Watch YouTube.

There are also a lot of videos here:

https://github.com/FurkanGozukara/Stable-Diffusion

A hint: if you can merge a LoRA into a checkpoint ... do it!

It's under "kohya" -> "Dreambooth LoRA Tools" -> "Merge LoRA".

Select a model (checkpoint), then select a LoRA; merge percent 0.3 to 1.

It all depends on which checkpoint you select and how strong your LoRA is ...

But all in all ... for me it gives the better results ;)

I get the best results when I merge the LoRA with the checkpoint I chose for training.