This is a repost from my page at OpenArt.ai.

The workflow and assets can be downloaded from Ko-fi.

📽️ Tutorial: https://youtu.be/XO5eNJ1X2rI

SAMPLES

(I cannot upload a larger file! Sorry)

What does this workflow do?

A background animation is created with AnimateDiff version 3 and Juggernaut. The foreground character animation (Vid2Vid) uses DreamShaper with LCM sampling (also with AnimateDiff v3).

The two animations are blended seamlessly with TwoSamplersForMask nodes.

This method lets you combine two different models/samplers in a single video. This example uses two different checkpoints, LoRAs and AnimateDiff models, but the method can also be used for image compositions where you want, for example, a realistic model for the foreground and an artistic drawing model for the background.
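As a rough illustration of the masking idea, here is a minimal Python sketch of a per-pixel masked blend. It is not how the sampler node works internally (that happens with samplers and latents inside ComfyUI); the function name `blend_with_mask` and the tiny nested-list "images" are hypothetical and only show how a mask decides which of two sources ends up at each position.

```python
def blend_with_mask(background, foreground, mask):
    """Per-pixel blend: mask 1.0 keeps the foreground, mask 0.0 keeps the background."""
    return [
        [m * f + (1.0 - m) * b for b, f, m in zip(bg_row, fg_row, m_row)]
        for bg_row, fg_row, m_row in zip(background, foreground, mask)
    ]

# Hypothetical 2x3 grayscale "frames" with values between 0 and 1.
background = [[0.2, 0.2, 0.2], [0.2, 0.2, 0.2]]
foreground = [[0.9, 0.9, 0.9], [0.9, 0.9, 0.9]]
mask = [[0.0, 1.0, 0.5], [0.0, 1.0, 0.0]]

blended = blend_with_mask(background, foreground, mask)
for row in blended:
    print(row)
```

Mask values between 0 and 1 mix both sources, which is what gives a soft edge instead of a hard cut-out.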

The workflow is tested with SD1.5. SDXL or other SD models could be used, but the ControlNet models, LoRAs, etc. would need to be swapped for the corresponding versions.

Tips

Developing workflows and tutorials not only takes up my time, but also consumes resources. Please consider making a donation or using the services behind one of my affiliate links:

☕ Help me with a ko-fi: https://ko-fi.com/koalanation

🚨Use Runpod and I will get credits! https://runpod.io?ref=617ypn0k🚨

Run ComfyUI without installation with:

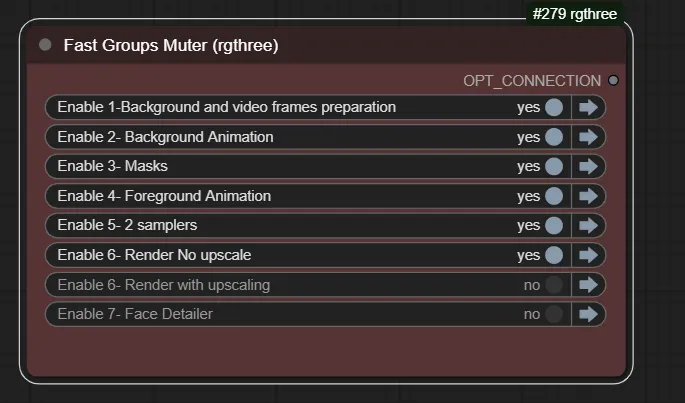

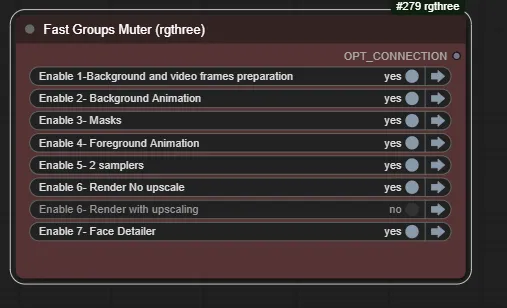

How the workflow works

1- Download resources

Workflow: check out the attachment in the menu on your right

Download the following resources from OpenArt.ai:

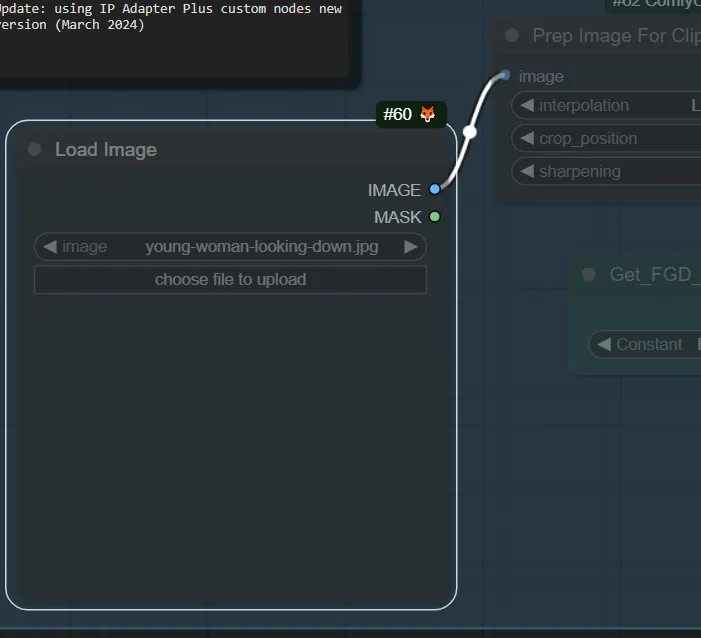

Reference image for IP Adapter

2- Workflow download/start

If you are going to use the runnable workflow feature of OpenArt.ai, click on the green 'Launch on Cloud' button. If you are going to run it elsewhere (locally, on a VM, etc.), click on the 'Download' button, or use the attachment to this article on Civitai.

3- Load the workflow

Drag and drop the workflow file onto the ComfyUI canvas (this happens automatically if you are using the runnable workflow of OpenArt).

4- Prepare resources

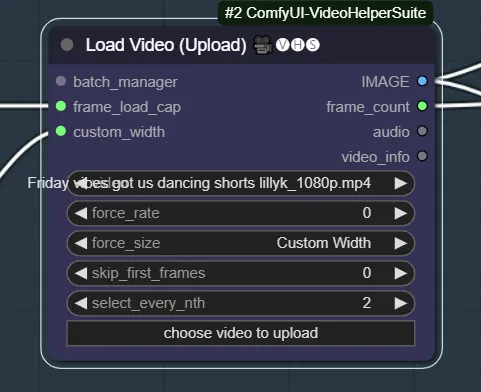

Load the video, the background image and the IP Adapter reference image. Set the width and the frame load cap of the video.
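To get a feel for what the width and frame load cap settings imply, here is a small Python sketch of the arithmetic. The function `plan_video_load`, the rounding of the height to a multiple of 8 (SD latents are 1/8 of the pixel resolution), and the convention that a cap of 0 means "no cap" are assumptions for illustration, not values read from the workflow.

```python
def plan_video_load(src_width, src_height, total_frames,
                    target_width, frame_load_cap, multiple=8):
    """Return (width, height, frames_to_load) for a resized, capped video."""
    scale = target_width / src_width
    # Keep the aspect ratio, then snap the height to a multiple of 8.
    target_height = int(round(src_height * scale / multiple)) * multiple
    # Assumption: a cap of 0 means "load every frame".
    frames = total_frames if frame_load_cap <= 0 else min(total_frames, frame_load_cap)
    return target_width, target_height, frames

# Hypothetical 1080x1920 portrait clip with 300 frames.
plan = plan_video_load(1080, 1920, 300, target_width=512, frame_load_cap=48)
print(plan)  # (512, 912, 48)
```

A smaller width and a lower cap both reduce memory use and render time, which matters most on limited hardware.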

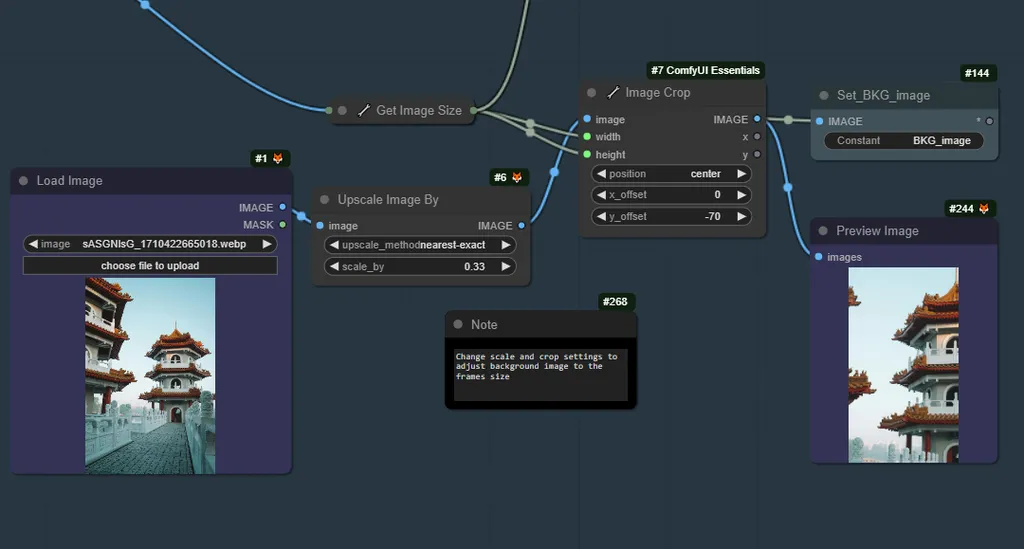

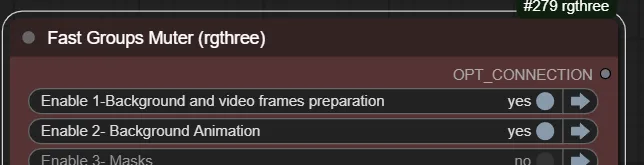

5- Create the Background image:

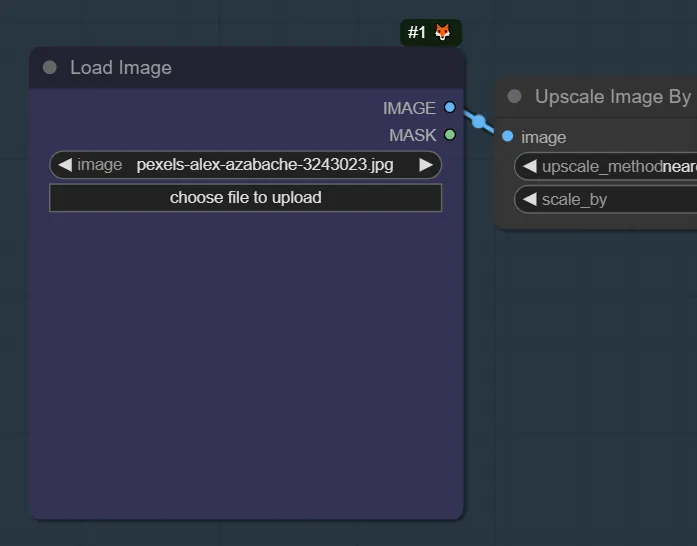

Adjust a background of your choice to the size of the frames of the original video.
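One possible way to fit a background to the frame size is "scale to cover, then center crop". The sketch below only computes the numbers; `cover_fit` is a hypothetical helper, and the nodes in the workflow may resize or crop differently.

```python
def cover_fit(bg_w, bg_h, frame_w, frame_h):
    """Scale the background so it covers the frame, then center-crop to the frame size.

    Returns ((scaled_w, scaled_h), (left, top, right, bottom)).
    """
    scale = max(frame_w / bg_w, frame_h / bg_h)
    # max() guards against rounding leaving the image one pixel too small.
    scaled_w = max(frame_w, round(bg_w * scale))
    scaled_h = max(frame_h, round(bg_h * scale))
    left = (scaled_w - frame_w) // 2
    top = (scaled_h - frame_h) // 2
    return (scaled_w, scaled_h), (left, top, left + frame_w, top + frame_h)

# Hypothetical 1920x1080 landscape background for 512x912 portrait frames.
scaled, crop_box = cover_fit(1920, 1080, 512, 912)
print(scaled, crop_box)  # (1621, 912) (554, 0, 1066, 912)
```

Covering and cropping avoids stretching the picture, at the cost of losing the parts that fall outside the frame.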

6- Background animation:

Create an animation using the background picture.

Activate the Background Animation node. Change the prompt to describe the animation, and run the workflow.

The example uses AnimateDiff version 3. The resulting latent is used later by TwoSamplersForMask. The LooseControl and Tile ControlNets are recommended. LoRAs are optional.

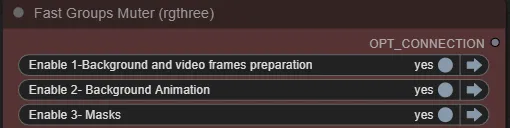

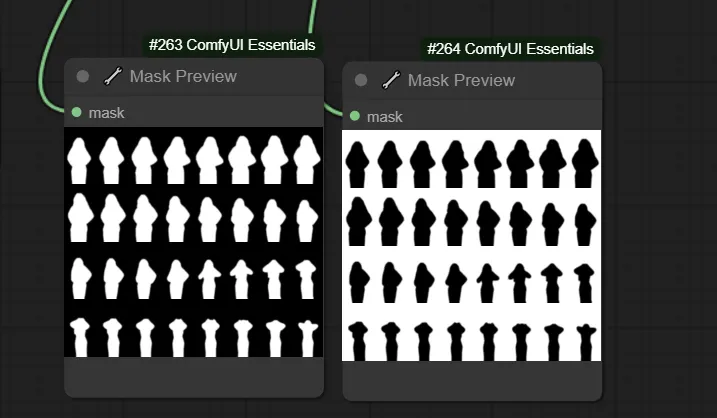

7- Create masks:

Masks for the foreground character are created from the starting video. Change the detection threshold level if needed.
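The effect of the threshold can be sketched in a few lines of Python, assuming the detector produces a per-pixel confidence between 0 and 1 (an assumption for illustration; `threshold_mask` and the sample scores are hypothetical).

```python
def threshold_mask(scores, threshold=0.5):
    """Turn per-pixel confidence scores into a binary mask (1 = foreground)."""
    return [[1 if s >= threshold else 0 for s in row] for row in scores]

# Hypothetical 2x3 confidence map.
scores = [[0.1, 0.4, 0.8],
          [0.2, 0.6, 0.9]]

loose = threshold_mask(scores, threshold=0.5)
strict = threshold_mask(scores, threshold=0.7)
print(loose)   # [[0, 0, 1], [0, 1, 1]]
print(strict)  # [[0, 0, 1], [0, 0, 1]]
```

Raising the threshold shrinks the mask to the most confident pixels; lowering it grows the mask but may pull in parts of the background.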

8- Foreground animation:

Create an animation of the foreground using the frames from the video.

Change the prompt according to the video you are making.

We use AnimateDiff v3 with LCM sampling, together with IP Adapter. The recommended ControlNets are ControlGif, Depth and OpenPose. The foreground mask is applied to the ControlNets.

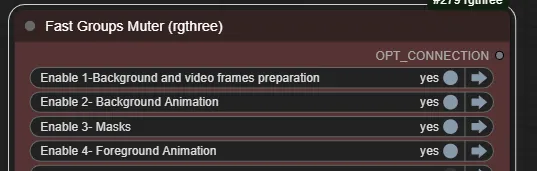

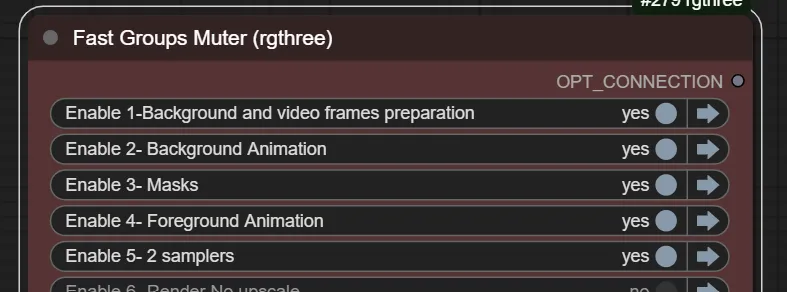

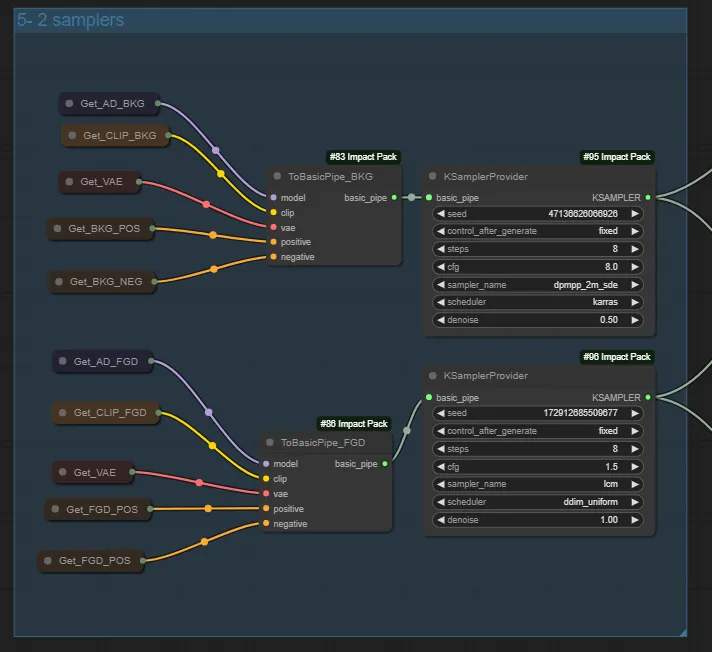

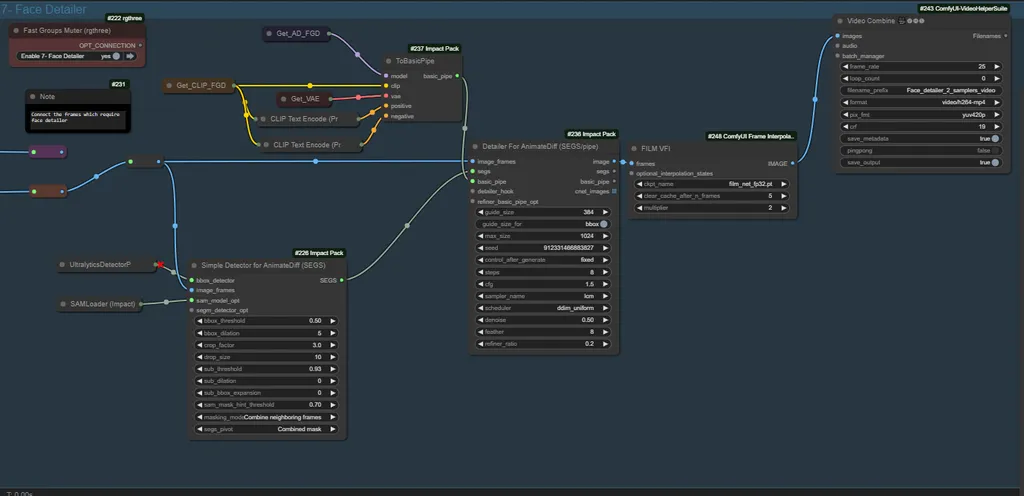

9- TwoSamplers:

This step provides the two different samplers used for rendering (step 10). Sampler 1 (background) uses AnimateDiff version 3 + Juggernaut, while sampler 2 (foreground) uses AnimateDiff version 3 + DreamShaper with the LCM sampler. The settings therefore need to be different: the background has a denoise of 0.5, while the foreground has a denoise of 1.0.
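The two sampler configurations described above can be written down as plain data to make the differences explicit. This is only a notation sketch: `SamplerSettings` is a hypothetical class, not a ComfyUI type, and the background sampler name is left as `None` because the article does not state it.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class SamplerSettings:
    role: str
    checkpoint: str
    motion_model: str
    sampler_name: Optional[str]  # None where the article does not name one
    denoise: float

background = SamplerSettings("background", "Juggernaut", "AnimateDiff v3", None, 0.5)
foreground = SamplerSettings("foreground", "DreamShaper", "AnimateDiff v3", "lcm", 1.0)

for s in (background, foreground):
    print(f"{s.role}: {s.checkpoint} + {s.motion_model}, denoise={s.denoise}")
```

A denoise below 1.0 keeps part of the input latent, so the background stays close to the animation created earlier, while a denoise of 1.0 lets the foreground be generated from scratch under the guidance of the ControlNets.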

10- Rendering:

The first option is to upscale directly (latent upscale to 1.5x): higher resolution, but time consuming. The second option is to use the TwoSamplersForMask node directly, without upscaling. With the foreground mask, the background and foreground are integrated seamlessly.
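To see why the 1.5x latent upscale costs time, here is a small Python sketch of the size arithmetic. It assumes the usual SD layout where the latent is 1/8 of the pixel resolution; `upscaled_size` and the 512x912 frame size are hypothetical examples.

```python
def upscaled_size(width, height, factor=1.5, latent_stride=8):
    """Pixel size after scaling the latent by `factor` (latent is 1/8 of the image)."""
    latent_w, latent_h = width // latent_stride, height // latent_stride
    new_w, new_h = round(latent_w * factor), round(latent_h * factor)
    return new_w * latent_stride, new_h * latent_stride

base = (512, 912)  # hypothetical frame size
up = upscaled_size(*base)
ratio = (up[0] * up[1]) / (base[0] * base[1])
print(up, ratio)  # (768, 1368) 2.25
```

Each frame has 2.25 times as many pixels after the upscale, so every sampling step has considerably more work to do.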

11- Face Detailer and Frame interpolation:

Face detailer and frame interpolation are added to correct face distortion and improve the smoothness of the video. You need to connect either the upscaled or the non-upscaled frames to the detector and the detailer.

Runnable workflow specific instructions

In this version 2.1, I have adjusted the workflow (compared to the original in the tutorial) so that it can be run in OpenArt.ai as a runnable workflow. As a result, the LoRAs are not used, and AD v3 is used in the foreground, too. If you find a way to load the models (e.g. via a Civitai node), it should still be possible to use the original workflow.

Be aware that the frame load cap is limited in the runnable workflow because of machine limitations. OpenArt does you a great favor by letting you use GPU computing for free, and it is nice for demonstration purposes, but for longer generations you will need to run the workflow locally, on a VM, or on a paid SD service with more computing power.

Obviously, you may want to change some of the models, videos, etc.

Additional information and tips

Check out the assets to use in the example

In the runnable workflow, check that the models are all the right ones (see the notes above)

Check notes in the workflow for additional instructions