Warning: This may be quite abstract, or make you question my intention. This is not a guide; it is just a summary of art exploration from a CS research angle.

Change Log

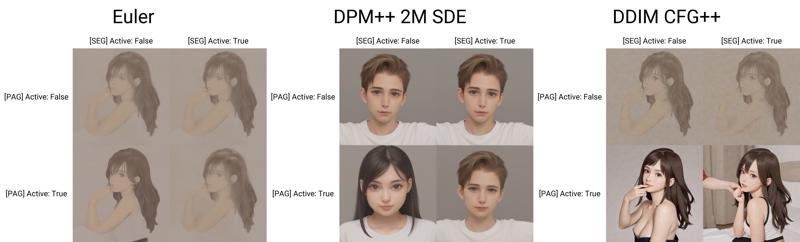

v2.0: I don't know the exact effect, but CFG++ / SEG / PAG works!

v1.5: Added more examples for SDXL / Pony models.

v1.01: Added the post linking to my model!

Main Section

If you have looked into how SD / LDM works, and how CLIP understands the relationship between text and image, you may want to ask: what happens when no prompt is given?

No matter what words you feed it, even gibberish (for example rot42+xor, which looks like a hash), our poor SD model still throws an image out on a "garbage in, garbage out" basis. Given that "no words given", as in "untitled", is somewhat meaningful (or just neutral) compared to purely random and chaotic gibberish, would the model throw out something neutral, or perhaps something with legit content?

It seems this task has been covered for a while under the name "Unconditional Image Generation". Before SD (or LDM), GANs had already been used to generate images without human input, solely from pure noise, forming the content out of thin air. For diffusion models, steering the process was formulated as "Classifier Guidance", which by design needs a condition. With the DDIM sampler and the CLIP conditioner introduced to the LDM model, combining both concepts together...

Ah yes, now we have Classifier-Free Guidance: the number "7", a hyperparameter picked for no reason, my friend. The "AI" secretly makes up the details when the prompt somehow cannot describe the full picture. We can "abuse" the whole mechanism by not providing a prompt at all, letting the "AI" make up the whole picture by itself.
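The guidance step described above can be sketched with toy numbers. This is a minimal illustration of the plain CFG formula only, assuming that with no prompt and no negative prompt both branches receive the same empty conditioning. `cfg_combine` and the sample values are hypothetical stand-ins for real U-Net noise predictions, and the sketch does not model PAG, SEG or CFG++.

```python
def cfg_combine(uncond, cond, scale):
    """Plain classifier-free guidance: start from the unconditional
    prediction and move toward the conditional one by `scale`."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]

# Toy noise predictions (hypothetical numbers, not real model outputs).
eps_uncond = [0.10, -0.20, 0.30]
eps_prompt = [0.40, 0.00, -0.10]

# With a real prompt, the scale changes the result.
guided_1 = cfg_combine(eps_uncond, eps_prompt, 1.0)
guided_7 = cfg_combine(eps_uncond, eps_prompt, 7.0)

# "Untitled": both branches see the same empty conditioning, so the
# difference term is zero and the scale has no effect in this formula.
untitled_1 = cfg_combine(eps_uncond, eps_uncond, 1.0)
untitled_7 = cfg_combine(eps_uncond, eps_uncond, 7.0)

print(guided_1, guided_7)
print(untitled_1 == untitled_7)
```

Under this assumption the image comes entirely from the seed and the model's own prior, which is the "make up the whole picture by itself" case.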

Back when SD1.5 was released (and "the leak" happened), drawing "untitled" was hard, because a noisy image without a text prompt keeps flipping the "guidance", which makes it hard to "converge" to stable image content. Now, with a bigger model, insanely trained / merged over iterations (DGMLA from 216 SDXL models, believe it or not), and a lot of additives such as FreeU, CFG rescaling (Dynamic Thresholding), Manifold-constrained Classifier Free Guidance (CFG++), Smoothed Energy Guidance (SEG) and Perturbed-Attention Guidance (PAG), pure untitled art is achievable. All you need to do is roll the dice and touch grass.

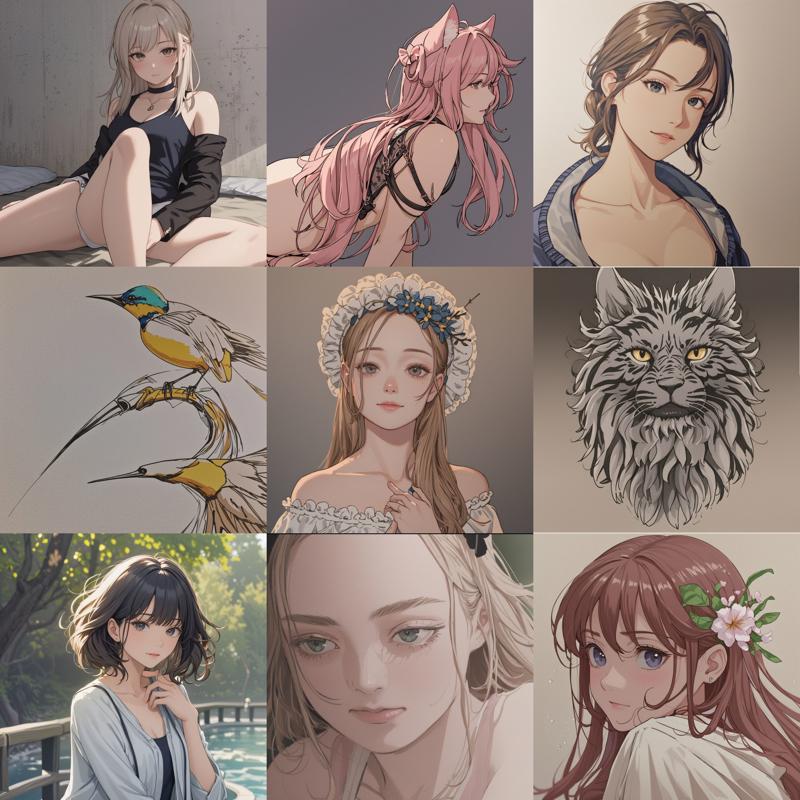

The "untitled" content will be random, sure, but it also reflects the bias and variance of your model, implied by the training material. For example, my model will be "LAION content plus Danbooru images, with a weighted distribution". A special case like this merged model will have a preferred art style, beyond anime itself, because the "AI" will choose to forget contradicting concepts. So it will automatically output masterpiece, best_quality, or a small amount of score_9 in style, or... half of each.

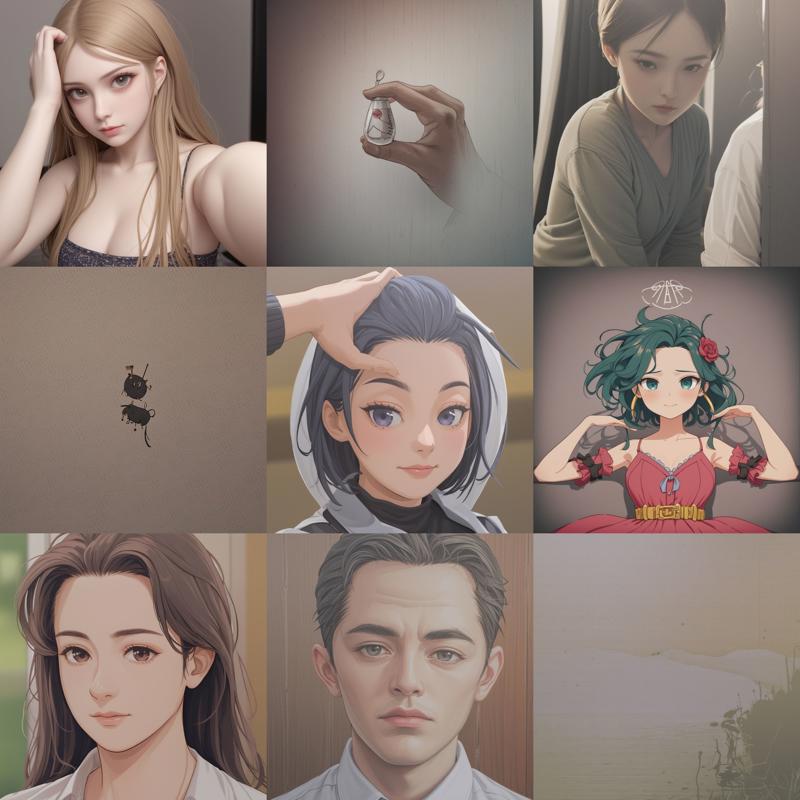

Cherry-picked example

Warning: The model will reflect its own "distribution", so the output can be NSFW very often!

parameters

Steps: 48, Sampler: DDIM CFG++, Schedule type: Automatic, CFG scale: 1, Seed: 3045266444, Size: 1024x1024, Model hash: bdb9f136b6, Model: x215a-AstolfoMix-24101101-6e545a3, VAE hash: 235745af8d, VAE: sdxl-vae-fp16-fix.vae.safetensors, Clip skip: 2, SEG Active: True, SEG Blur Sigma: 11, SEG Start Step: 0, SEG End Step: 2048, PAG Active: True, PAG SANF: True, PAG Scale: 1, PAG Start Step: 0, PAG End Step: 2048, Version: v1.10.1
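The parameters line above is a comma-separated list of `Key: value` pairs, so it can be read back into a dictionary when comparing runs. Below is a minimal sketch, not the WebUI's own parser. `parse_parameters` and the `PAIR` pattern are hypothetical helpers, and the sample line is a shortened copy of the one above.

```python
import re

# Hypothetical pattern: a key, a colon, then either a double-quoted value
# (which may contain commas) or everything up to the next comma.
PAIR = re.compile(r'\s*([^:,]+):\s*("(?:\\.|[^"\\])*"|[^,]*)(?:,|$)')

def parse_parameters(line):
    """Split a `Key: value, Key: value` line into a dict of strings."""
    return {key.strip(): value.strip() for key, value in PAIR.findall(line)}

# Shortened copy of the parameters line above.
line = (
    "Steps: 48, Sampler: DDIM CFG++, Schedule type: Automatic, CFG scale: 1, "
    "Seed: 3045266444, Size: 1024x1024, SEG Active: True, SEG Blur Sigma: 11, "
    "PAG Active: True, PAG Scale: 1"
)
params = parse_parameters(line)
width, height = (int(n) for n in params["Size"].split("x"))

print(params["Sampler"], params["Seed"], width, height)
```

Quoted values, such as the FreeU entries in the other examples, stay in one piece because the quoted alternative is tried before the comma split.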

Now enjoy 2 examples of "untitled" images. The yield depends on the model; mine is around 0.2, meaning about 2 out of 10 images have legit content.

parameters

Steps: 48, Sampler: Euler, Schedule type: Automatic, CFG scale: 3, Seed: 3649863581, Size: 1024x1024, Model hash: e276a52700, Model: x72a-AstolfoMix-240421-feefbf4, VAE hash: 26cc240b77, VAE: sd_xl_base_1.0.vae.safetensors, Clip skip: 2, FreeU Stages: "[{\"backbone_factor\": 1.1, \"skip_factor\": 0.6}, {\"backbone_factor\": 1.2, \"skip_factor\": 0.4}]", FreeU Schedule: "0.0, 1.0, 0.0", FreeU Version: 2, Dynamic thresholding enabled: True, Mimic scale: 1, Separate Feature Channels: False, Scaling Startpoint: MEAN, Variability Measure: AD, Interpolate Phi: 0.3, Threshold percentile: 100, PAG Active: True, PAG Scale: 1, Version: v1.9.3

parameters

Steps: 48, Sampler: Euler, Schedule type: Automatic, CFG scale: 3, Seed: 2373230122, Size: 768x1344, Model hash: e276a52700, Model: x72a-AstolfoMix-240421-feefbf4, VAE hash: 26cc240b77, VAE: sd_xl_base_1.0.vae.safetensors, Clip skip: 2, FreeU Stages: "[{\"backbone_factor\": 1.1, \"skip_factor\": 0.6}, {\"backbone_factor\": 1.2, \"skip_factor\": 0.4}]", FreeU Schedule: "0.0, 1.0, 0.0", FreeU Version: 2, Dynamic thresholding enabled: True, Mimic scale: 1, Separate Feature Channels: False, Scaling Startpoint: MEAN, Variability Measure: AD, Interpolate Phi: 0.3, Threshold percentile: 100, PAG Active: True, PAG Scale: 1, Version: v1.9.3
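Taking the yield of about 0.2 quoted above at face value, a quick bit of arithmetic shows what "roll the dice" means in practice. This is a rough sketch that assumes every seed is an independent draw with the same success rate, which is a simplification. Both function names are hypothetical.

```python
def chance_of_at_least_one(yield_rate, batch_size):
    """Probability that a batch holds at least one usable image,
    assuming each seed succeeds independently with `yield_rate`."""
    return 1.0 - (1.0 - yield_rate) ** batch_size

def expected_rolls_per_keeper(yield_rate):
    """Average number of seeds to try per usable image."""
    return 1.0 / yield_rate

p10 = chance_of_at_least_one(0.2, 10)
rolls = expected_rolls_per_keeper(0.2)

print(f"batch of 10 has a keeper with probability {p10:.2f}")
print(f"about {rolls:.0f} seeds per keeper on average")
```

So a batch of 10 will usually contain something worth keeping, but an empty batch is not rare either.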

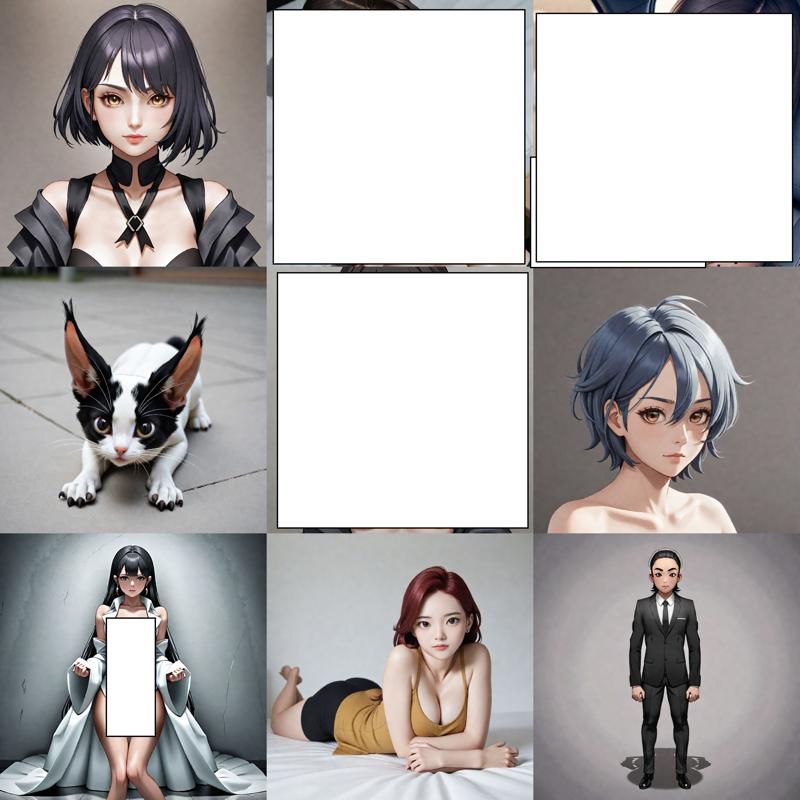

Batched, random examples

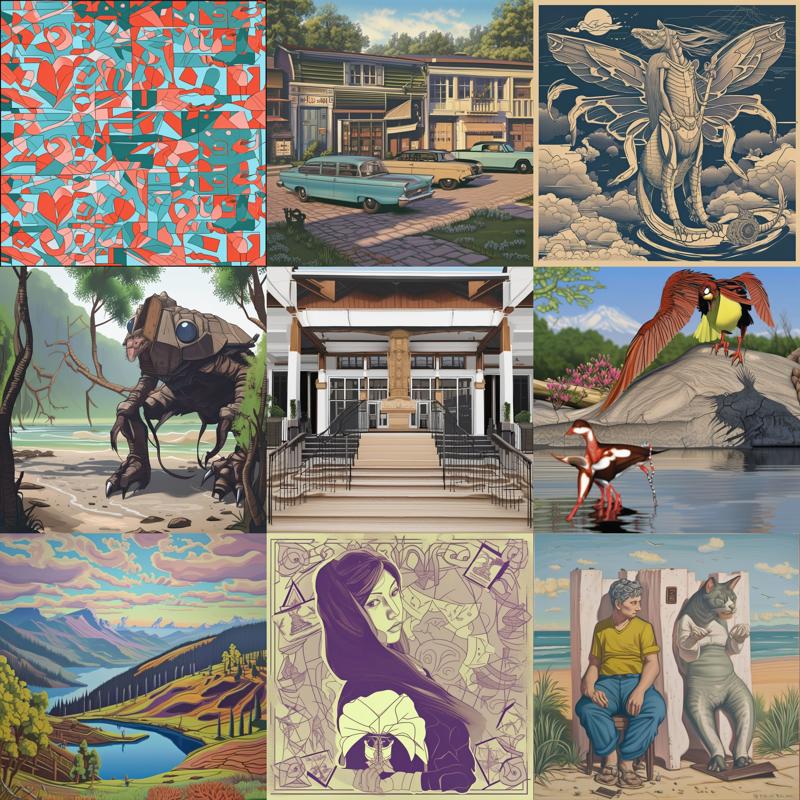

This may help us figure out which finetuning strategy has been applied to a model (now that merging models has faded out).

This is the original SDXL, you may spot some artwork:

Animagine XL 3.1. It is highly colorful but fragmented:

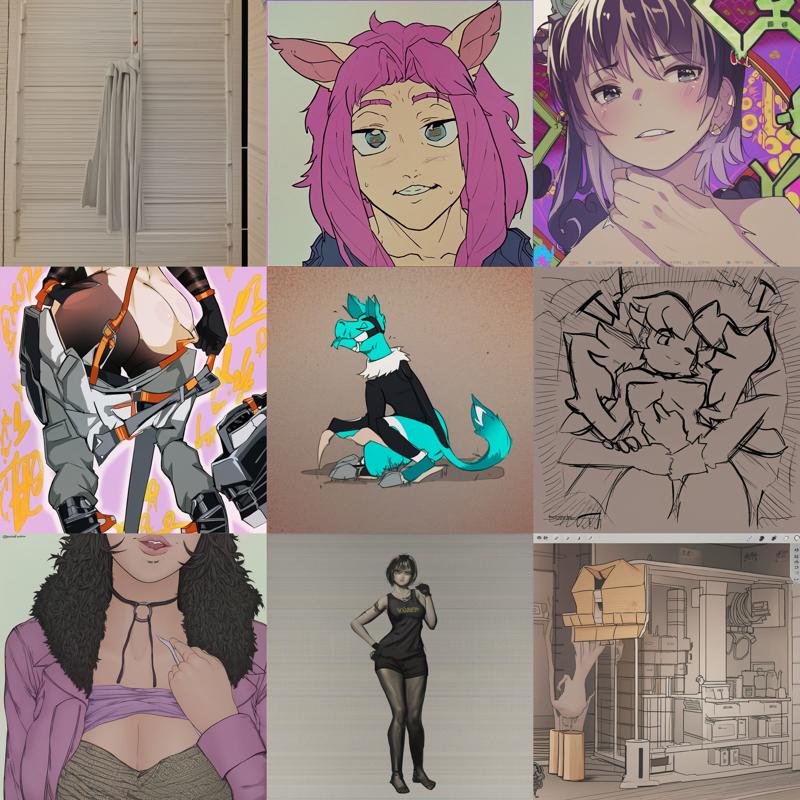

Kohaku XL Epsilon. Notice the graphic design or character design:

Pony Diffusion V6 XL. Obviously there are different species:

Then we get to my merged models. AstolfoMix-XL Baseline. Notice that it doesn't include Pony:

AstolfoMix-XL TGMD. Notice that a merging algorithm has been applied, and there are many models included:

Using my recent SEG / PAG / CFG++ stack with AstolfoMix-XL DGMLA, things escalate quickly:

Important XYZ Plots

I think it is self-explanatory? Trust me: no prompts, just seeds.