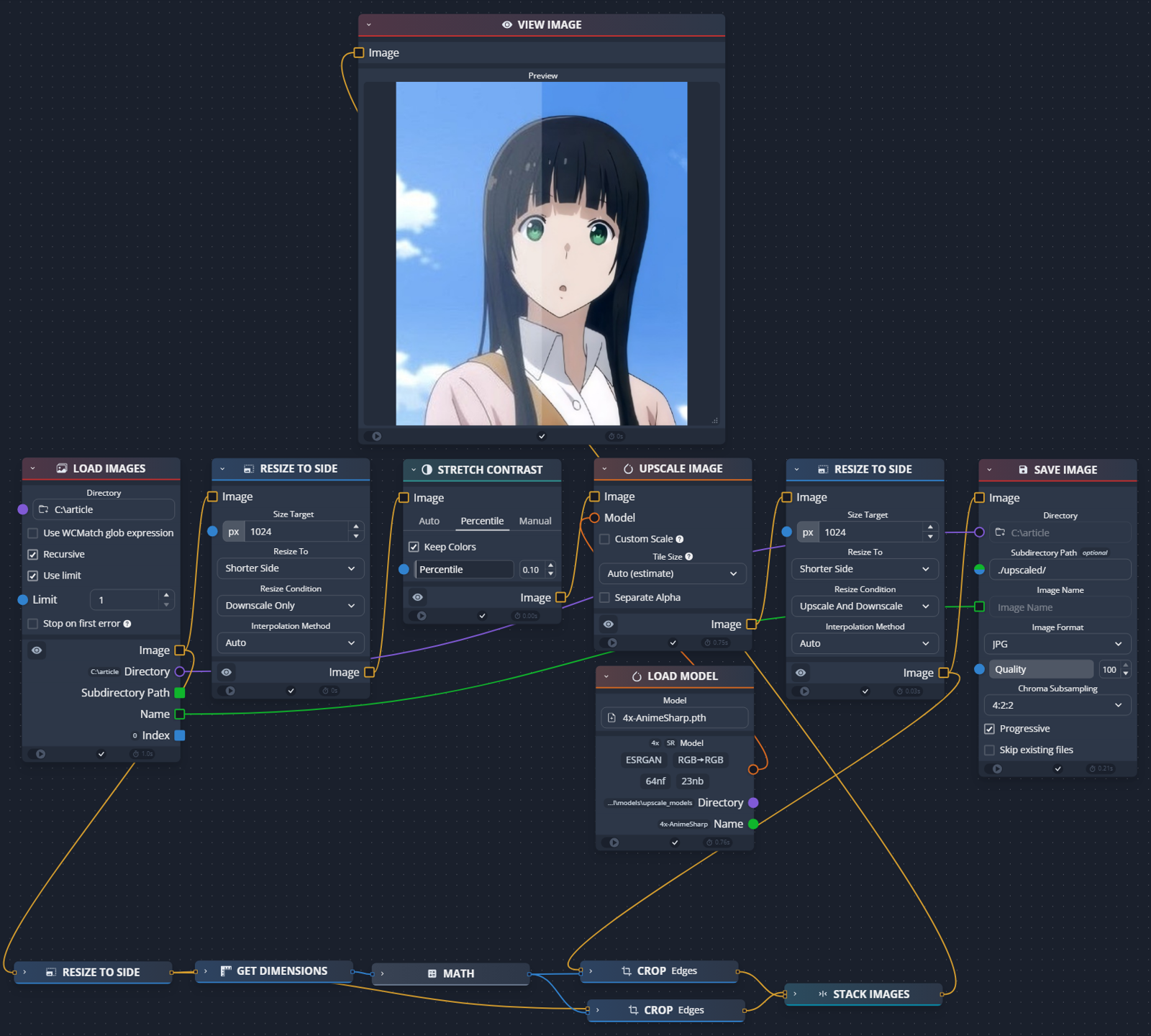

In this short guide I will show how I batch edit images with chaiNNer.

The workflow is attached on the right side, under "Attachments".

Updates

Update 9.08.24

Changed the link in "Download from..." to point to the releases page instead of an older version.

Changed the installation instructions.

Update V3 28.06.24

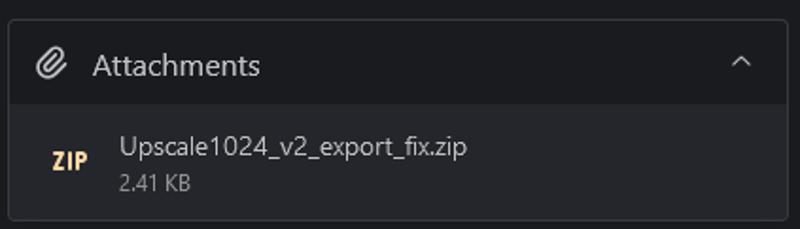

Added extremely bright yellow notes (why can't the notes be a bit darker?) (╯°□°)╯︵ ┻━┻

Lowered the stretch contrast value to 0.01 (should be enough in most cases, with less loss of data).

Updated the images in the guide.

Update 30.05.24

Clarified the need to insert a model before running.

Fix v2: 16.05.24

I forgot to turn "use limit" off after testing. That could cause some confusion, so I updated the workflow on the site with it disabled.

Update V2: 15.05.24

Added a better comparison.

Tuned down the stretch contrast percentile from 1% to 0.1%. I had accidentally left it too high.

(If your images come out too dark, you can lower it further.)

What you need:

chaiNNer:

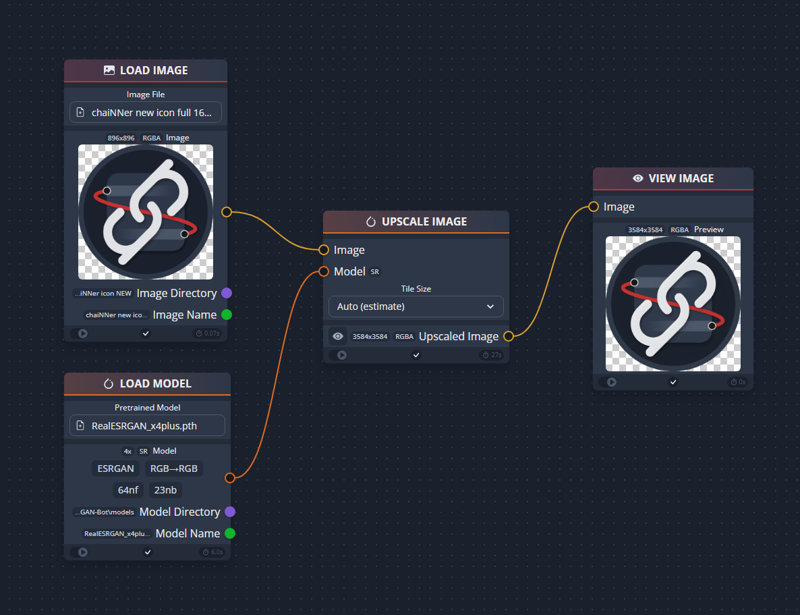

Node-based image processing GUI

https://github.com/chaiNNer-org/chaiNNer

How to install:

Download from the GitHub releases.

Install chaiNNer.

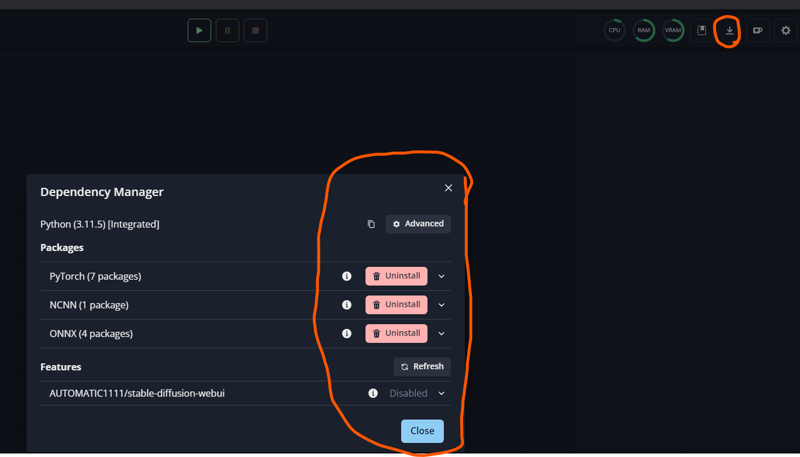

Install the necessary dependencies. I recommend installing them one by one. Clicking "install all" can result in nothing being installed, and in some cases it seems to throw an error.

What can you do with it?

With chaiNNer you build a workflow out of nodes, just like in ComfyUI. It is not as complicated, and it is rather easy to use. All the nodes chaiNNer has are focused on image processing.

For example you can:

Upscale with a model, downscale, resize, etc.

Adjust images: contrast, levels, blurring, sharpening, etc.

And all of this can be done in batch.
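To make "in batch" concrete, here is a minimal Python sketch of the idea: every image in a folder is paired with an output path, and the same steps are then run on each pair. This is not chaiNNer's own code. The function name `plan_batch`, the extension list, and the `upscale` subfolder default are assumptions chosen for illustration (the subfolder mirrors where the workflow described later saves its results).

```python
from pathlib import PurePosixPath

# Assumed set of extensions treated as images; adjust to your dataset.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def plan_batch(image_dir, filenames, out_subfolder="upscale"):
    """Pair each image file with an output path in a subfolder of image_dir.

    Hypothetical helper: it only plans the paths, it does not touch the disk.
    """
    root = PurePosixPath(image_dir)
    pairs = []
    for name in sorted(filenames):
        # Skip anything that does not look like an image (case-insensitive).
        if PurePosixPath(name).suffix.lower() in IMAGE_EXTENSIONS:
            pairs.append((str(root / name), str(root / out_subfolder / name)))
    return pairs


if __name__ == "__main__":
    for src, dst in plan_batch("dataset", ["b.PNG", "notes.txt", "a.jpg"]):
        print(src, "->", dst)
```

In chaiNNer the batch loading and saving nodes do this for you. The sketch only shows why one workflow can handle a whole folder without per-image setup.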

How I use chaiNNer to batch prepare images for training

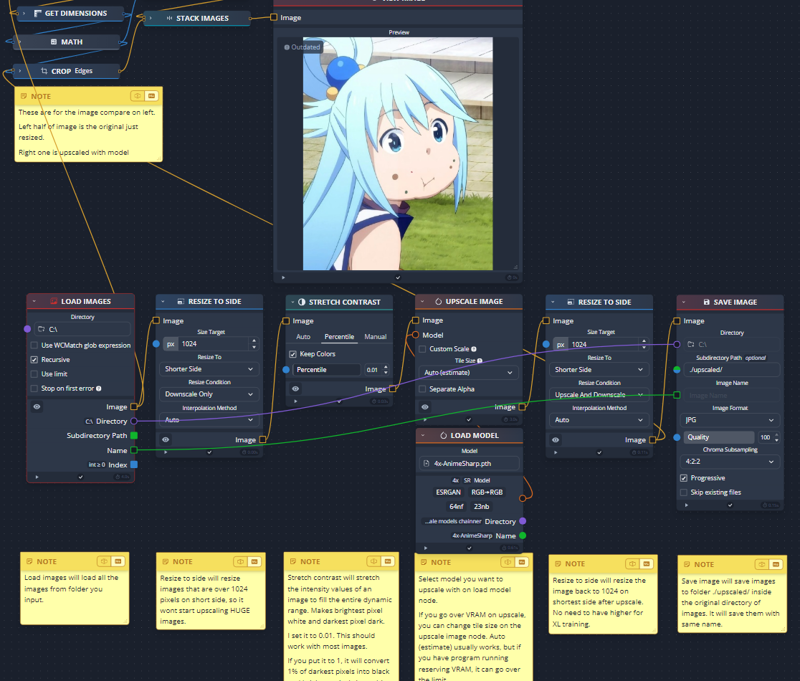

The workflow I use is attached to this post ON THE RIGHT SIDE UNDER "Attachments".

What do you need to do?

Drag and drop the workflow into chaiNNer. The workflow is on the right side of this article ->

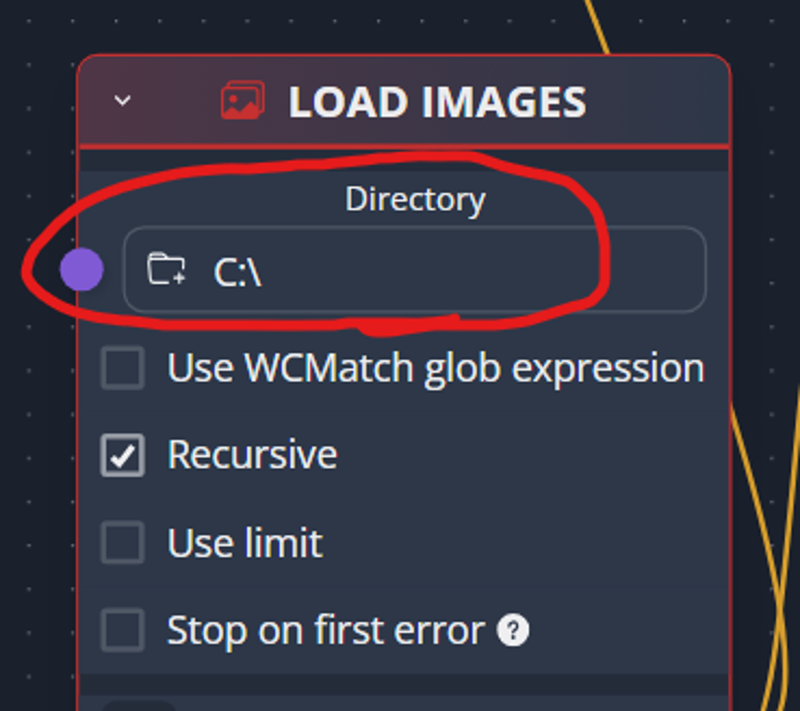

Enter the directory to load images from.

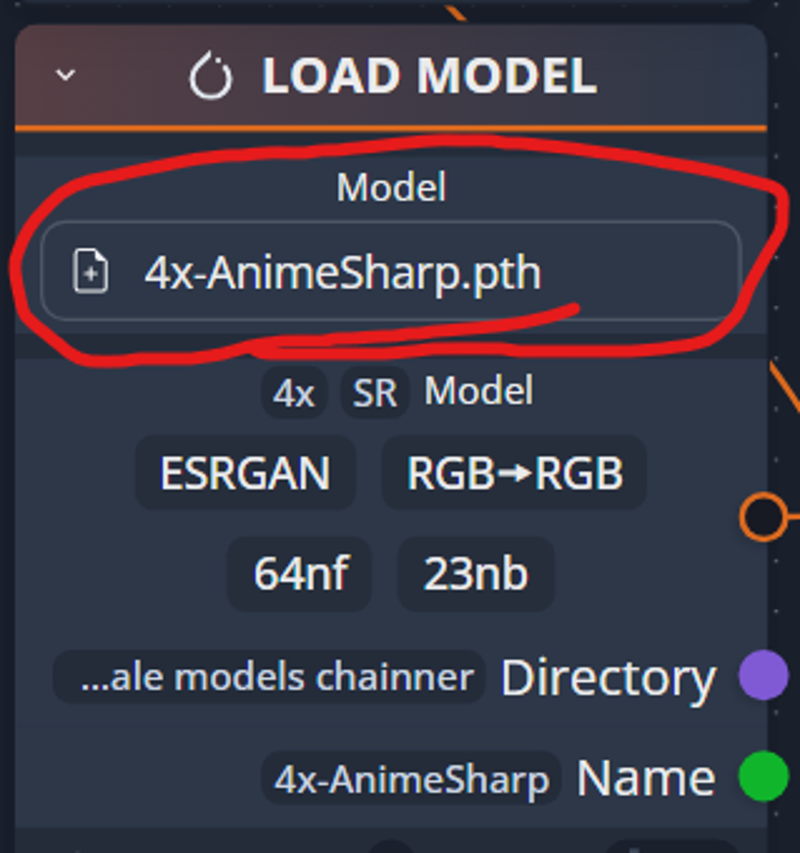

Select a model in the "Load model" node. If you skip this, the workflow will throw an error.

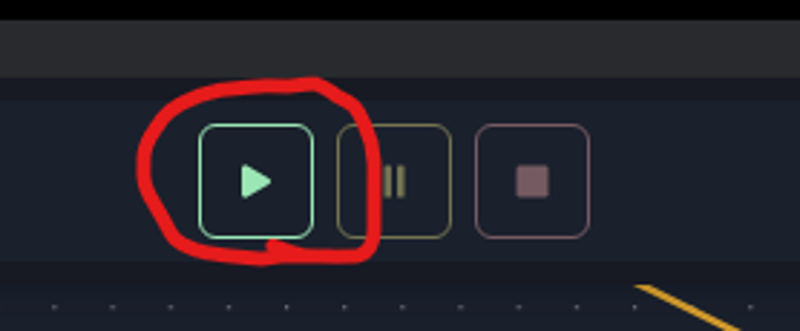

Press the play button.

What does it do?

Resizes the shortest side of the image to 1024, in case some of the images have a very high resolution. (It does not resize images whose shortest side is already below 1024 before upscaling.)

Stretches the contrast of the image. (This makes the darkest pixel black and the brightest pixel white. Good for images captured from anime.)

Upscales the image using a model (you can change which model it uses). If the data has artifacts or compression noise, I recommend a model that removes them, for example RealESRGAN_x4plus_anime_6B. If the dataset is clean, a model that sharpens more is a good idea, for example 4x-AnimeSharp.

Resizes the images back to 1024 on the shortest side. If you train at 1024 resolution for XL, as far as I know there is no reason to keep a higher-resolution image.

Saves the edited images to an "upscale" folder inside the image folder.
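The resize steps in the list above come down to simple arithmetic on image dimensions. The sketch below is plain Python, not chaiNNer code, and it follows one image size through the three size-changing steps. The function names, the 4x upscale factor, the rounding, and the choice to never enlarge in the resize steps are all assumptions made for illustration.

```python
def resize_shortest_side(width, height, target=1024):
    """Scale (width, height) so the shortest side equals target.

    Assumption: images whose shortest side is already at or below
    target are left untouched (downscale only).
    """
    shortest = min(width, height)
    if shortest <= target:
        return width, height
    scale = target / shortest
    return max(1, round(width * scale)), max(1, round(height * scale))


def plan_sizes(width, height, target=1024, factor=4):
    """Return the image size after each size-changing step.

    factor=4 assumes a 4x upscaling model.
    """
    first = resize_shortest_side(width, height, target)
    upscaled = (first[0] * factor, first[1] * factor)
    final = resize_shortest_side(upscaled[0], upscaled[1], target)
    return [first, upscaled, final]


if __name__ == "__main__":
    # A large image is shrunk first, so the model never sees 4000x3000.
    print(plan_sizes(4000, 3000))
    # A small image skips the first resize and is only shrunk after upscaling.
    print(plan_sizes(800, 600))
```

Under these assumptions a 4000x3000 image and an 800x600 image both end up at 1365x1024. The difference is that the small one gained detail from the model on the way there.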

All of these settings can be easily changed in chaiNNer.
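The stretch contrast percentile is the setting most likely to need tuning, so here is a rough sketch of what it controls. This is a simplified pure-Python version working on a flat list of 0-255 values, not chaiNNer's actual implementation. The function name `stretch_contrast` and the nearest-rank way of picking the cut points are assumptions.

```python
def stretch_contrast(values, percentile=0.1):
    """Linearly rescale 0-255 values so the chosen low/high cut points map to 0/255.

    percentile is the share (in percent) of the darkest and brightest
    values that may be clipped. 0 means a pure min/max stretch.
    """
    if not values:
        return []
    ordered = sorted(values)
    n = len(ordered)
    # Simplified nearest-rank cut; real tools may interpolate instead.
    cut = int(n * percentile / 100)
    low = ordered[cut]
    high = ordered[n - 1 - cut]
    if high == low:
        # Flat image: nothing to stretch.
        return list(values)
    out = []
    for v in values:
        scaled = (v - low) * 255 / (high - low)
        # Values outside [low, high] are clipped, which is the data loss
        # that a larger percentile causes.
        out.append(int(min(255, max(0, round(scaled)))))
    return out


if __name__ == "__main__":
    pixels = [50, 100, 150, 200]
    print(stretch_contrast(pixels, percentile=0))   # nothing clipped
    print(stretch_contrast(pixels, percentile=25))  # outer values clipped
```

With a percentile of 0 only the single darkest and brightest values reach pure black and white. A larger percentile pushes more pixels to the extremes, which is why too high a value loses detail in shadows and highlights.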

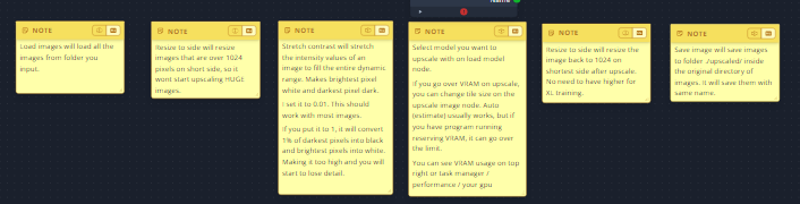

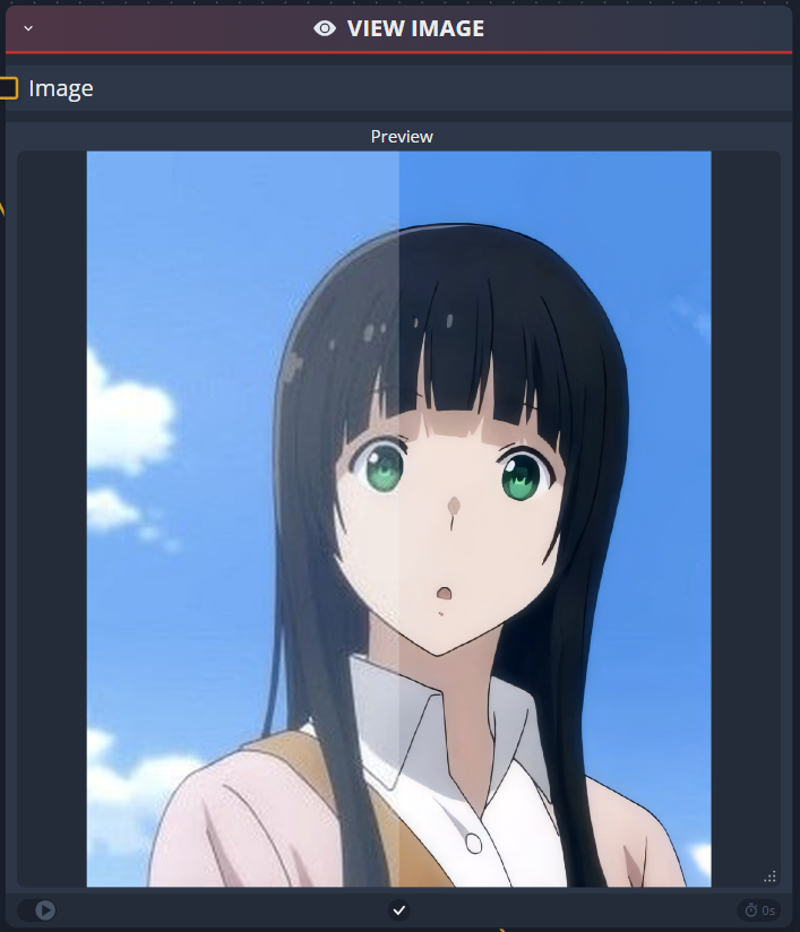

Here are a few before and after examples.

Before

After

Before

After

Before/After