Hello, I am going to explain how to make videos from the frames of other videos.

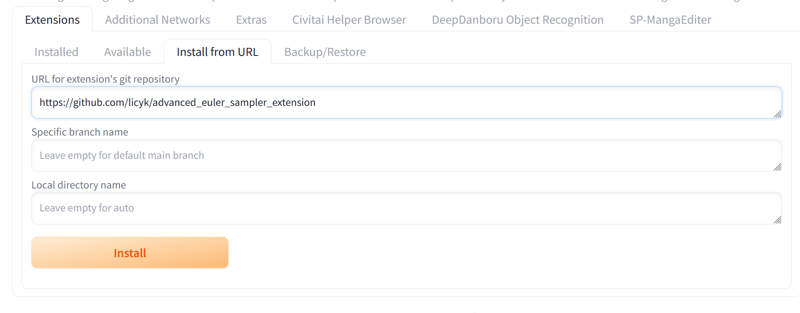

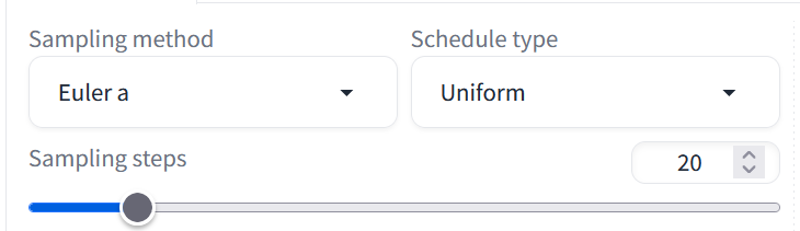

The first step is to install the Euler Smea Dyn sampler. In my experience, this sampling method is faster and makes the videos look better, and the images also come out better because it follows the prompts more closely.

To install it as an extension, copy this link and paste it into Automatic1111 under Extensions -> Install from URL: https://github.com/licyk/advanced_euler_sampler_extension

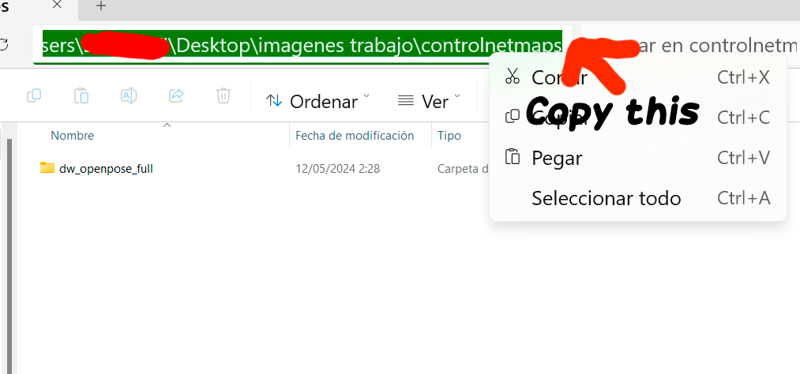

Next, go to Settings and make these changes in the ControlNet section.

Create a folder where you want ControlNet to save the frames it generates, copy the folder path, and paste it into the ControlNet settings in Automatic1111:

This way, the pose of every frame is saved automatically and you can use the poses to make videos. Before I knew this, I had to save the frames one by one!

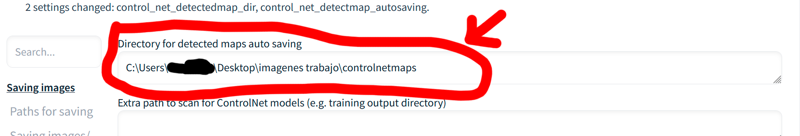

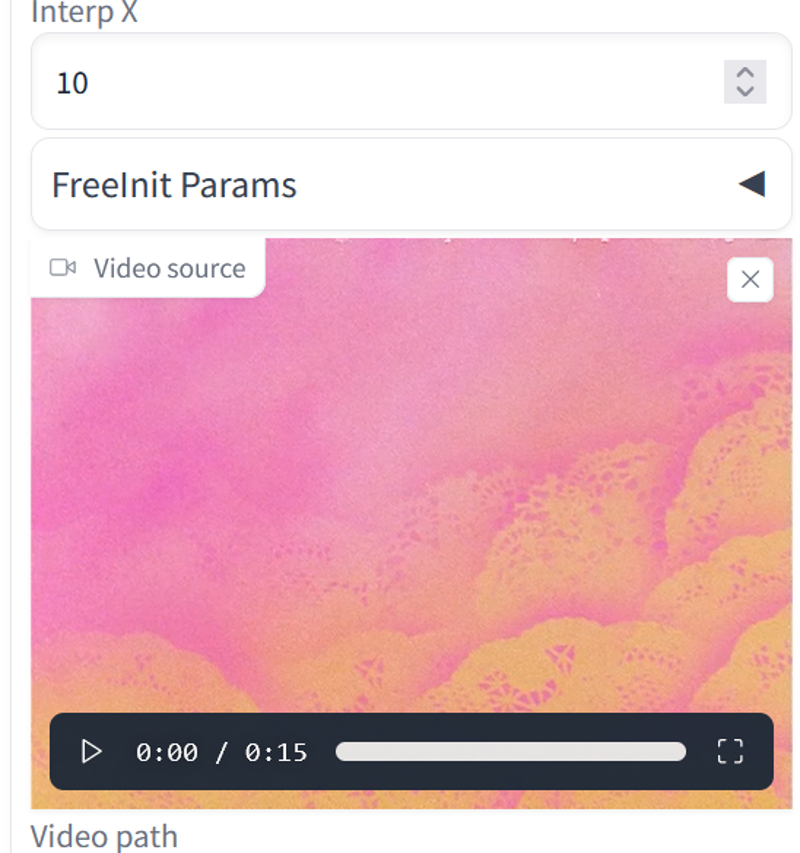

Next, choose the video you want to use. I used this one from Sailor Moon.

I use JDownloader 2 to download YouTube videos: https://jdownloader.org/es/download/index

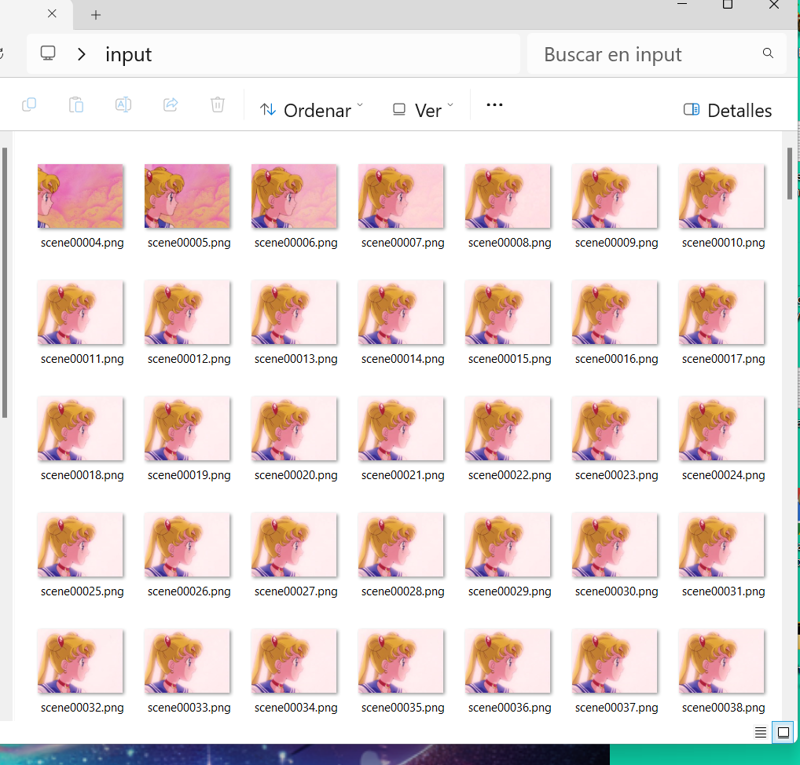

Once the video is chosen, I extract the frames using VLC:

1. Create a folder to store your frames and copy the path to it.

2. Click Tools -> Preferences in VLC.

3. Under “show settings”, click “all”.

4. Under “Video”, select “Filters”. Tick “Scene video filter”.

5. Expand “Filters” and select “Scene filter”.

6. Paste the path from earlier into “directory path prefix”.

7. Decide what proportion of the frames you want to export and enter it in the “recording ratio” box. Enter 1 if you want all the frames.

For example, if you want to export 1 in every 12 frames, type “12” in the “recording ratio” box.

8. Click “save”.

9. Click Media -> Open File and find your video. Be patient and let the whole video play, since the frames are only saved while it is playing.

10. Click Tools -> Preferences. Under “show settings”, click “all”. Under “video”, select “filters”. Uncheck “Scene video filter”. Click “save”. This way, VLC won’t keep saving frames the next time you play a video.

11. Open the folder you created earlier. The extracted frames should be there.
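The “recording ratio” from step 7 also decides how fast the final video has to play to last as long as the original. Here is a minimal Python sketch of that arithmetic. It assumes the scene filter keeps exactly one frame out of every N, and VLC may differ by a frame at the start or end of the clip. The function names and the 24 fps example are illustrative and are not part of VLC.

```python
import math


def exported_frame_count(total_frames: int, recording_ratio: int) -> int:
    """Approximate number of images written when one frame in every
    `recording_ratio` frames is kept (assumption: first frame of each group)."""
    if recording_ratio < 1:
        raise ValueError("recording_ratio must be at least 1")
    return math.ceil(total_frames / recording_ratio)


def playback_fps(source_fps: float, recording_ratio: int) -> float:
    """Frame rate that keeps the new video the same length as the source."""
    return source_fps / recording_ratio
```

For a 10-second clip at 24 fps (240 frames) and a ratio of 12, `exported_frame_count(240, 12)` gives 20 images and `playback_fps(24, 12)` gives 2.0, so the new video would be assembled at 2 fps to last the same 10 seconds.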

You can also extract the poses from the frames using img2img in Automatic1111, but this does not extract every frame; it only extracts the ones that are considered important.

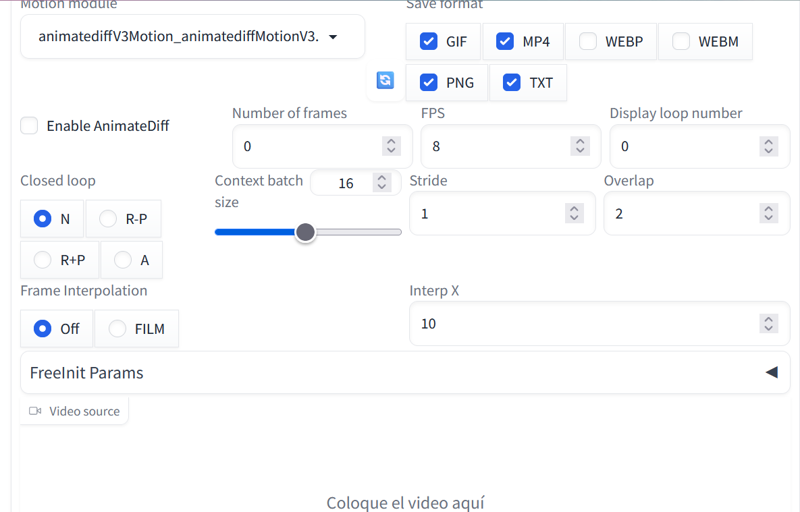

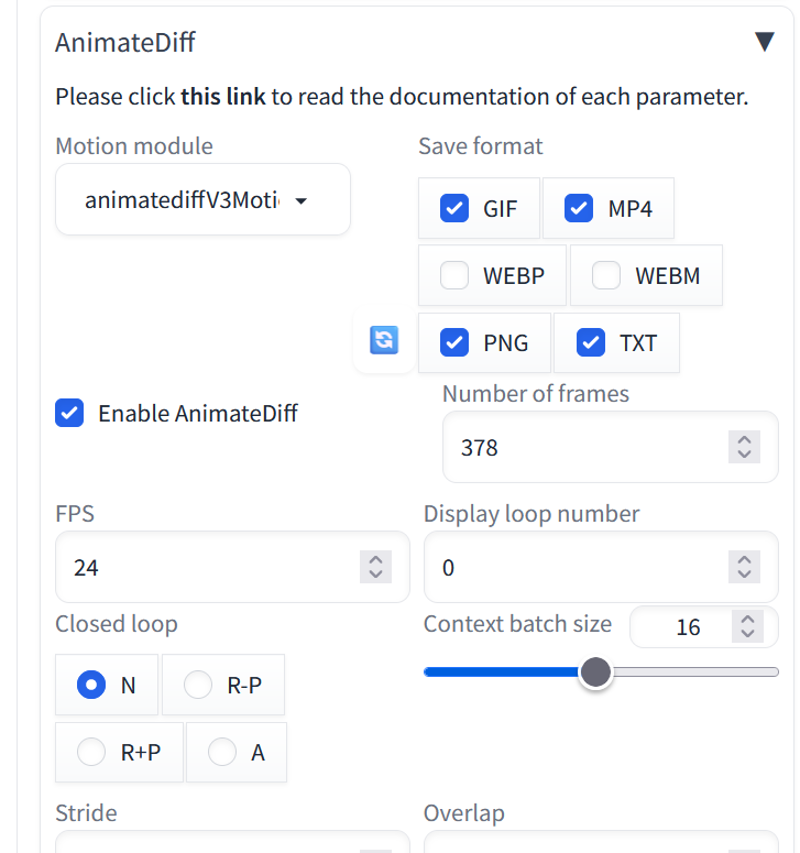

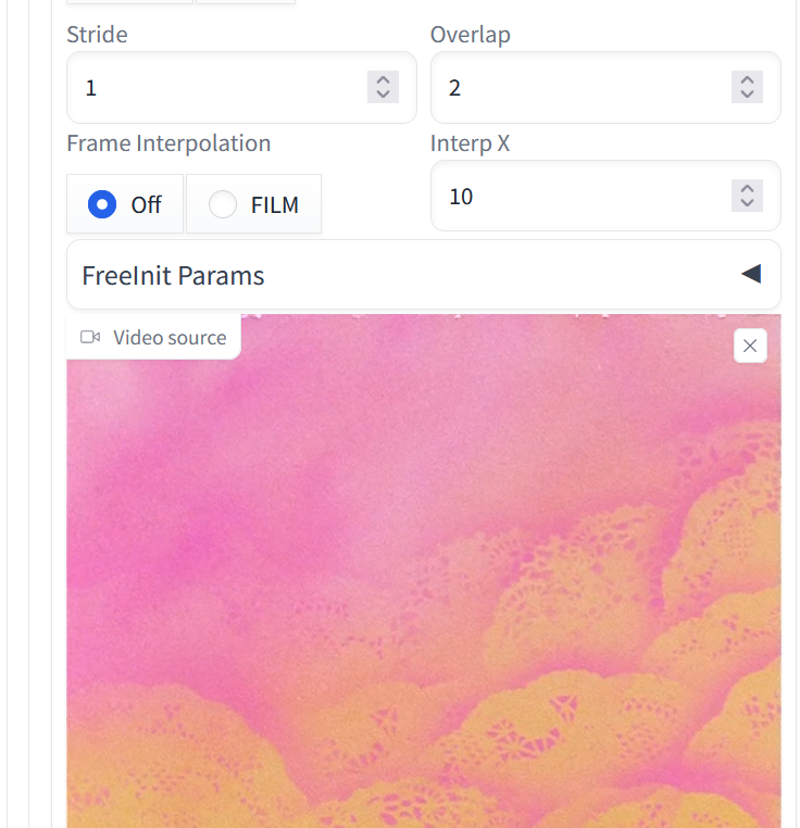

To do that, load the video into AnimateDiff.

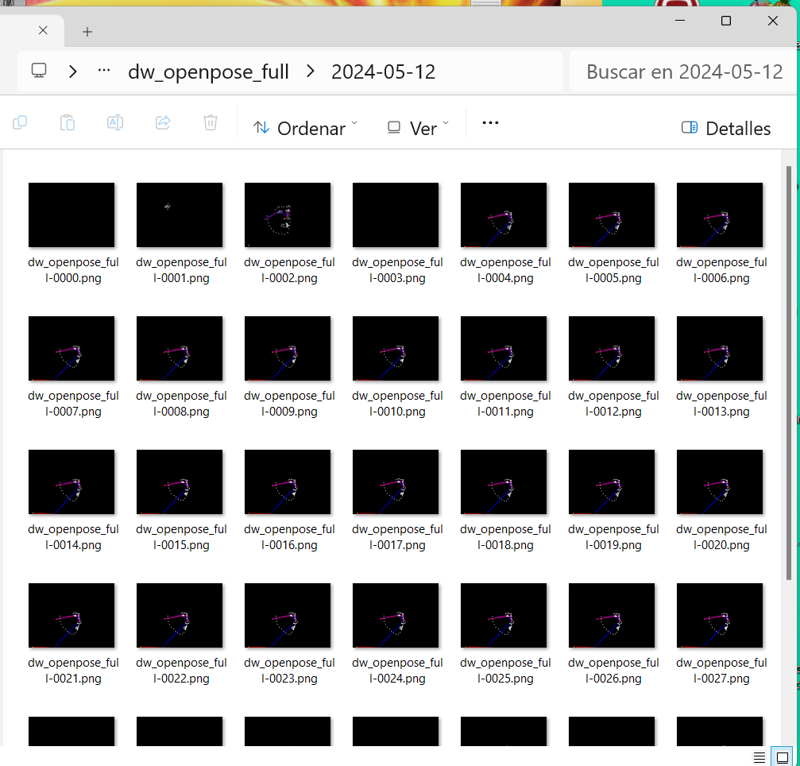

You must enable ControlNet with OpenPose so that it saves the pose frames.

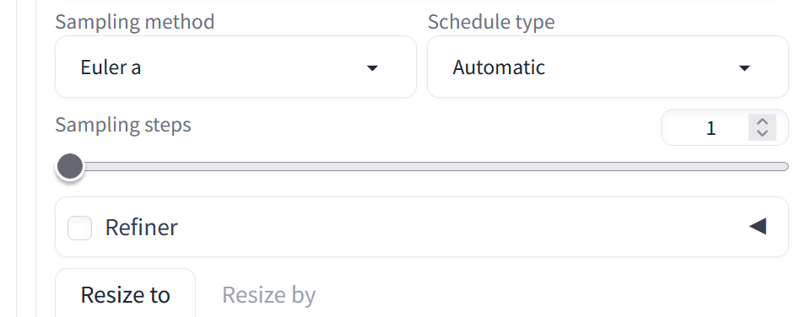

Set the sampling steps to 1: we are only interested in the poses, and this way it takes much less time to process all the frames.

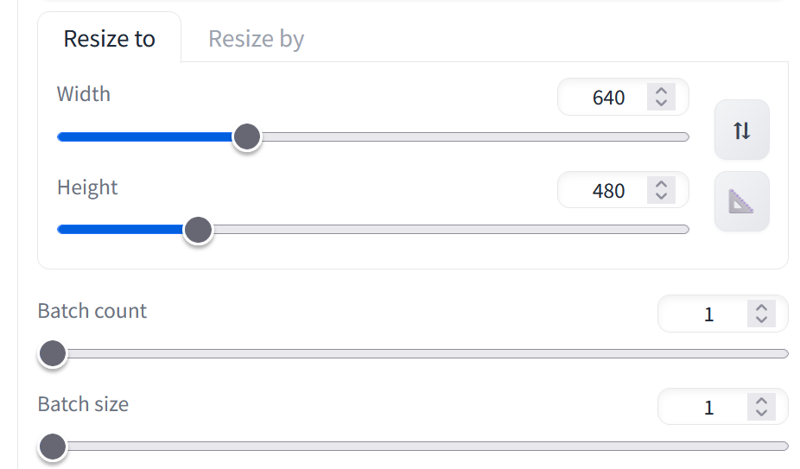

We have to set the same resolution as the video.

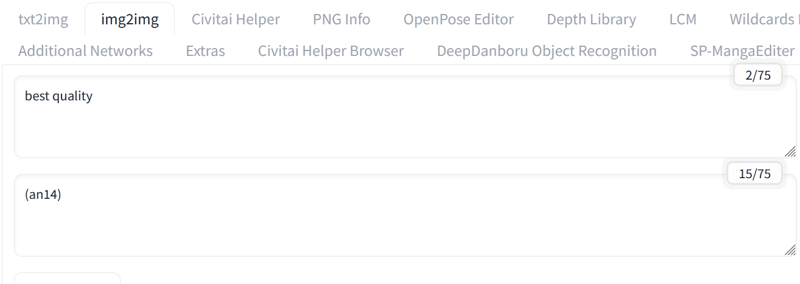
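Stable Diffusion 1.5 works on a latent image 8 times smaller than the output, and Automatic1111's width and height sliders move in steps of 8, so a video resolution that is not a multiple of 8 (for example 854x480) has to be rounded. Here is a minimal sketch, assuming you simply round each side to the nearest multiple of 8. `sd_resolution` is a hypothetical helper, not an Automatic1111 function.

```python
from typing import Tuple


def sd_resolution(width: int, height: int, multiple: int = 8) -> Tuple[int, int]:
    """Round a video resolution to the nearest multiple of `multiple` (halves round up)."""
    def snap(x: int) -> int:
        # Integer round-half-up, never going below one step.
        return max(multiple, (x + multiple // 2) // multiple * multiple)

    return snap(width), snap(height)
```

`sd_resolution(854, 480)` returns `(856, 480)`, while a 640x480 or 1920x1080 video is already a multiple of 8 and comes back unchanged. Rounding changes the aspect ratio very slightly, which is usually not noticeable.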

I entered a prompt so that the Dynamic Prompts extension doesn't complain about the prompt being empty:

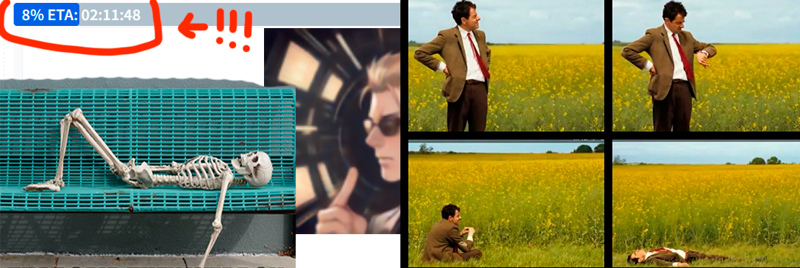

The result will look like this:

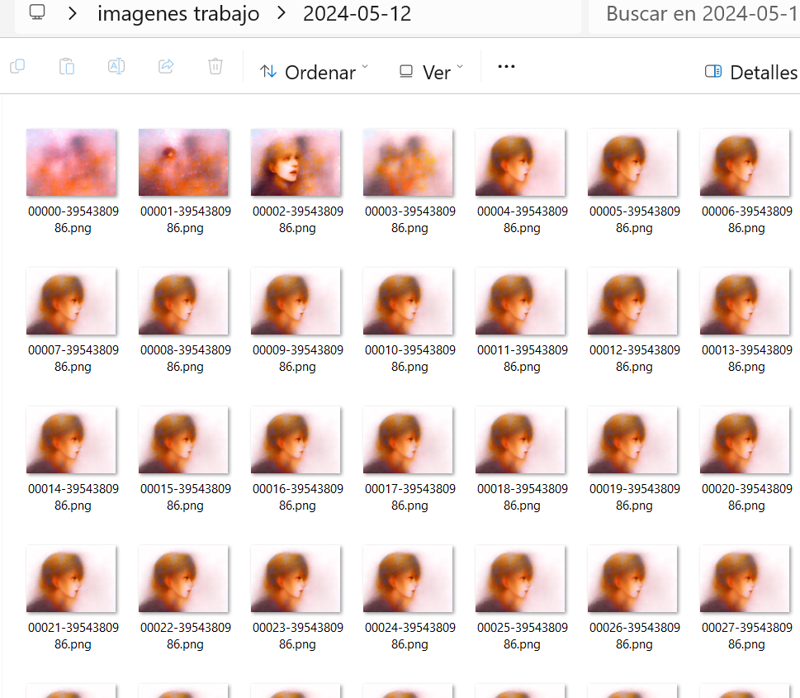

Since we generated the frames with just 1 step, the generated images folder will contain something like this. Those images can be deleted; what we need are the saved poses.
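If there are many of these 1-step images, deleting them by hand is tedious. Here is a minimal Python sketch, assuming the throwaway images are the only image files in the folder you pass in. The function name is hypothetical and the folder path is yours to fill in. Point it at the folder of generated images, never at the ControlNet folder where the poses were saved.

```python
from pathlib import Path
from typing import List

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def delete_throwaway_images(folder: str, dry_run: bool = True) -> List[Path]:
    """Delete image files directly inside `folder` (hypothetical helper).

    With dry_run=True (the default) nothing is deleted: the function only
    returns the files it would remove, so you can check the list first.
    """
    targets = sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    if not dry_run:
        for p in targets:
            p.unlink()
    return targets
```

Running it once with the default `dry_run=True` and reading the returned list is a cheap safeguard before calling it again with `dry_run=False`.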

To generate images from the batch of poses we have obtained, we have to use the normal Euler sampler; I get an error if I use Euler Smea Dyn.

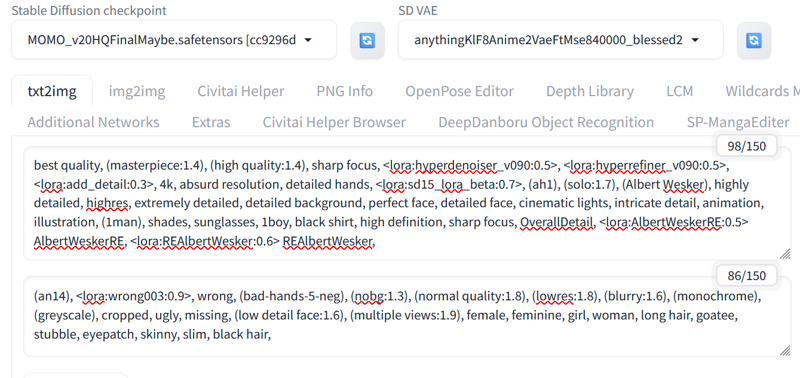

Next, enter the prompts you want. I'm going to make Albert Wesker move like Sailor Moon, so I'll use these prompts:

Prompt:

`best quality, (masterpiece:1.4), (high quality:1.4), sharp focus, <lora:hyperdenoiser_v090:0.5>, <lora:hyperrefiner_v090:0.5>, <lora:add_detail:0.3>, 4k, absurd resolution, detailed hands, <lora:sd15_lora_beta:0.7>, (ah1), (solo:1.7), (Albert Wesker), highly detailed, highres, extremely detailed, detailed background, perfect face, detailed face, cinematic lights, intricate detail, animation, illustration, (1man), shades, sunglasses, 1boy, black shirt, high definition, sharp focus, OverallDetail, <lora:AlbertWeskerRE:0.5> AlbertWeskerRE, <lora:REAlbertWesker:0.6> REAlbertWesker,`

Negative prompt:

`(an14), <lora:wrong003:0.9>, wrong, (bad-hands-5-neg), (nobg:1.3), (normal quality:1.8), (lowres:1.8), (blurry:1.6), (monochrome), (greyscale), cropped, ugly, missing, (low detail face:1.6), (multiple views:1.9), female, feminine, girl, woman, long hair, goatee, stubble, eyepatch, skinny, slim, black hair,`

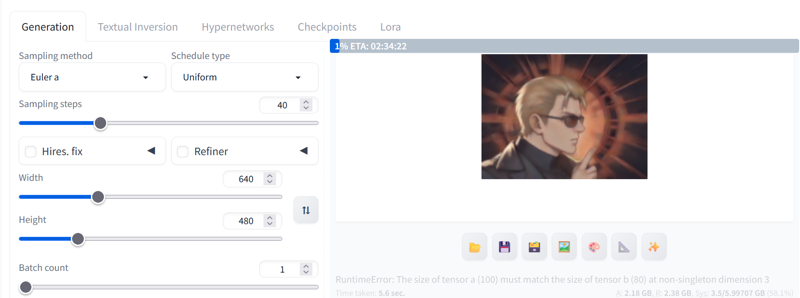
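These prompts use two pieces of Automatic1111 syntax. `<lora:NAME:WEIGHT>` loads a LoRA at that strength, and `(text:1.4)` multiplies the attention given to `text` by 1.4. Every LoRA named in a prompt has to be installed, so it can help to list the tags before generating. Here is a minimal regex sketch. It only reads explicit `(text:number)` weights and ignores plain `(text)`, which Automatic1111 treats as a 1.1 boost. The function names are hypothetical.

```python
import re
from typing import Dict

# <lora:NAME:WEIGHT>, e.g. <lora:add_detail:0.3>
LORA_TAG = re.compile(r"<lora:([^:>]+):([0-9]*\.?[0-9]+)>")
# (text:WEIGHT), e.g. (masterpiece:1.4); plain (text) is not matched
WEIGHTED = re.compile(r"\(([^():]+):([0-9]*\.?[0-9]+)\)")


def lora_tags(prompt: str) -> Dict[str, float]:
    """Map each LoRA name in the prompt to its strength."""
    return {name: float(w) for name, w in LORA_TAG.findall(prompt)}


def weighted_terms(prompt: str) -> Dict[str, float]:
    """Map each explicitly weighted term in the prompt to its attention weight."""
    return {term.strip(): float(w) for term, w in WEIGHTED.findall(prompt)}
```

Run on the full positive prompt above, `lora_tags` lists six LoRAs: hyperdenoiser_v090, hyperrefiner_v090, add_detail, sd15_lora_beta, AlbertWeskerRE and REAlbertWesker.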

Then I set the sampling steps to 40 and the resolution to match the pose frames.

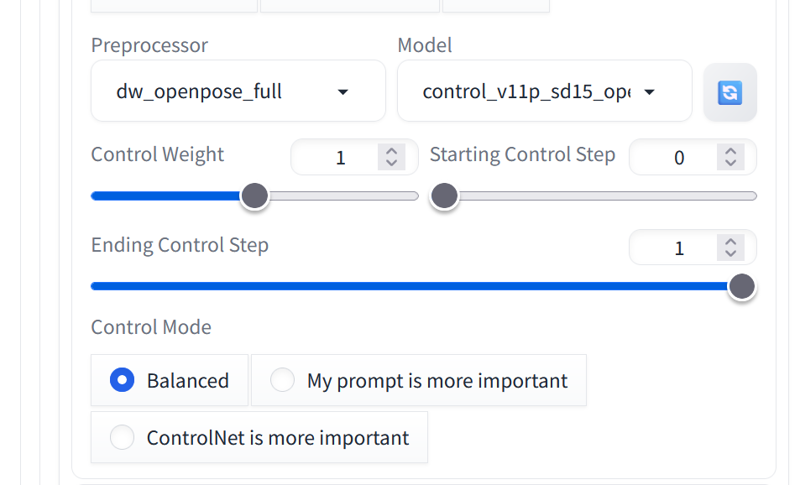

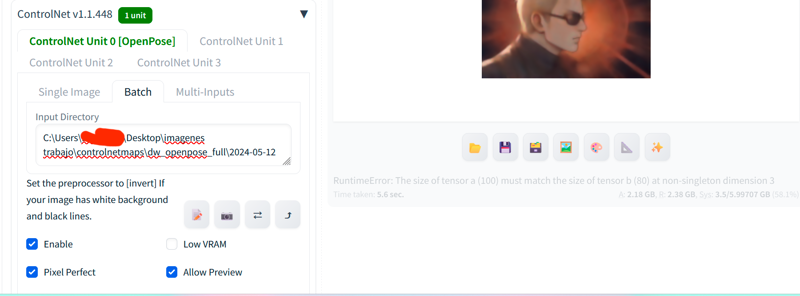

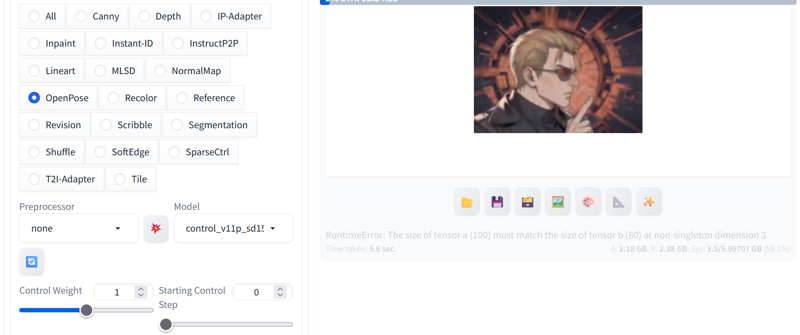
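To enter the right resolution, you need the size of the pose frames, and all of them should be the same size. Here is a minimal Python sketch, assuming the pose frames were saved as PNG files. It reads the width and height straight from each file's PNG header, so no image library is needed. The function names and the folder path are hypothetical.

```python
import struct
from pathlib import Path
from typing import Tuple

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(path: Path) -> Tuple[int, int]:
    """Read (width, height) from a PNG file's IHDR chunk."""
    with open(path, "rb") as f:
        # 8-byte signature + 4-byte chunk length + "IHDR" + width + height
        header = f.read(24)
    if header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError(f"{path} does not look like a PNG file")
    width, height = struct.unpack(">II", header[16:24])  # big-endian unsigned ints
    return width, height


def pose_frames_resolution(folder: str) -> Tuple[int, int]:
    """Return the common size of all PNG pose frames in `folder`."""
    sizes = {png_size(p) for p in sorted(Path(folder).glob("*.png"))}
    if not sizes:
        raise ValueError(f"no PNG frames found in {folder}")
    if len(sizes) > 1:
        raise ValueError(f"pose frames have different sizes: {sorted(sizes)}")
    return sizes.pop()
```

`pose_frames_resolution("path/to/poses")` returns the `(width, height)` to enter in Automatic1111, or raises an error if the folder mixes frame sizes.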

Enable ControlNet and, in the Batch tab, paste the path of the folder with the pose frames:

It is important not to select any preprocessor, because the frames are already pose images.

We only have to wait:

If we want to make a video with AnimateDiff from those frames, we must also use TemporalNet.

ControlNet Unit 0 is used for OpenPose, with the batch folder as we saw before, and again without a preprocessor.

That's all for now. If I learn something new, I will write more articles.

All the best!