Hi there! First post so bear with me lol

I've been asked how to replicate my looping images, so here is the workflow I use to create most of my stuff. It's pretty simple, but it's fast and gives great quality with the LCM LoRAs and AnimateDiff.

You will need to install some plugins from ComfyUI Manager to make it work properly, but in my opinion they're all useful add-ons you might end up getting anyway.

How to use:

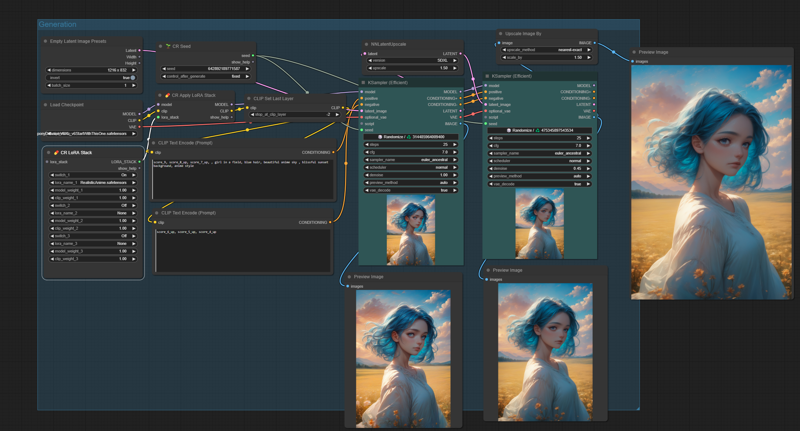

1. You can generate a guide image for the animation with the blue group on the left. I usually use SDXL models, but 1.5 works as well. If you already have a guide image, just set the group to Never.

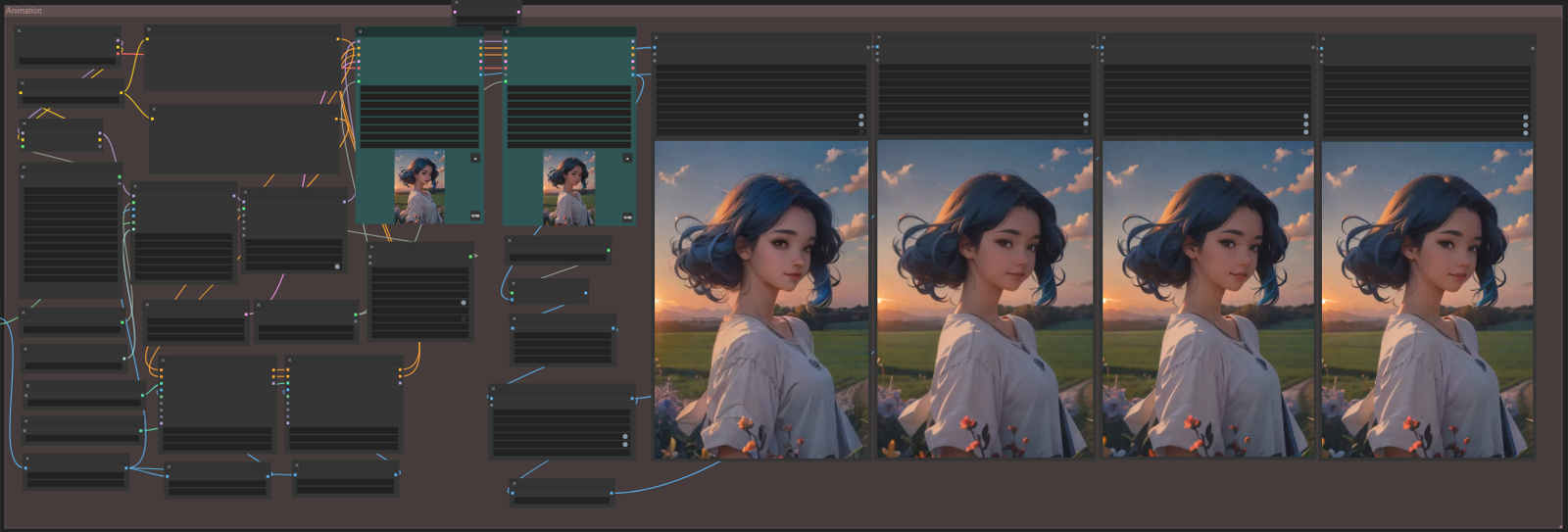

Animation

Load your guide image in the first node on the left.

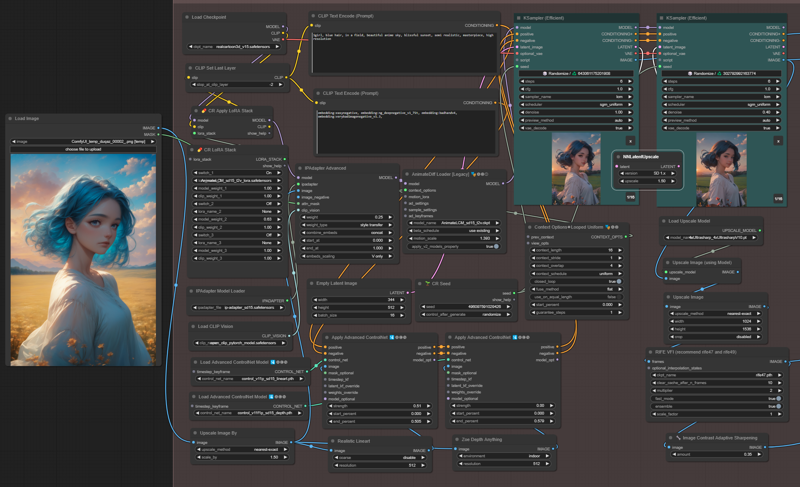

Now, depending on your guide image, you'll need to choose a 1.5 model that works with your animation. I can recommend Aniverse, RealCartoon3D, CyberRealistic and Realistic Vision, and it should work with anything similar to those. I'll explain what everything does for those who don't know:

In the LoRA stack, we use the AnimateLCM LoRA to give detail to our animation, so its model weight should be set to 1 in the stack. You can load 2 extra LoRAs with the current setup, but you can add more if you replace some things.
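For anyone wondering what that "model weight" number actually does: a LoRA stores a small low-rank update to the base model's weights, and the weight is a multiplier on that update. Here is a minimal sketch of the standard merge with toy 2x2 matrices; `apply_lora`, `up` and `down` are hypothetical names for illustration, not ComfyUI's code, and real loaders also fold in an alpha/rank factor that I've lumped into `strength`.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_lora(base, down, up, strength):
    """Return base + strength * (up @ down): the base weight plus the scaled LoRA update."""
    delta = matmul(up, down)
    return [[base[i][j] + strength * delta[i][j] for j in range(len(base[0]))]
            for i in range(len(base))]


base = [[1.0, 0.0], [0.0, 1.0]]  # toy base weight matrix
up = [[1.0], [2.0]]              # 2x1 "up" factor (rank 1)
down = [[0.5, 0.5]]              # 1x2 "down" factor

print(apply_lora(base, down, up, 1.0))  # [[1.5, 0.5], [1.0, 2.0]]: full LoRA effect
print(apply_lora(base, down, up, 0.0))  # [[1.0, 0.0], [0.0, 1.0]]: LoRA switched off
```

So a weight of 1 applies the AnimateLCM update exactly as it was trained, while lower values blend it back toward the base checkpoint.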

We can use IPAdapter to increase likeness or transfer the style from the guide image. You can load different 1.5 IPAdapter models for different results. The Load CLIP Vision node loads the default CLIP Vision model. If you don't want to use IPAdapter, you can plug the model directly from CR Apply LoRA Stack into the AnimateDiff Loader.

In the AnimateDiff Loader node, we load the LCM motion model and set the strength of the movement in the animation. The goal is to balance this with the ControlNets to get something good that stays similar to our guide image.

ControlNets: The amount of movement and likeness is heavily influenced by the ControlNets, so make sure to play around with the end % and strength to get the results you want. I use lineart & depth most of the time, but you can try adding openpose and others as well.
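A rough way to think about end %: it decides how far into sampling the ControlNet keeps steering, and after that the model is free to drift (generally more movement, less likeness). The sketch below assumes progress is simply `step_index / steps`, which is a simplification; ComfyUI maps the percentages onto the sampler's noise schedule, so the real cut-off can land a step earlier or later. The function name is hypothetical.

```python
def controlnet_active_steps(steps, start_percent, end_percent):
    """Approximate which sampler steps a ControlNet influences.

    Assumption: progress at step i is i / steps. The real node converts the
    percentages to points on the noise schedule instead, so treat this as a guide.
    """
    return [i for i in range(steps) if start_percent <= i / steps < end_percent]


# With the 6 steps used in KSampler #1:
print(controlnet_active_steps(6, 0.0, 1.0))  # [0, 1, 2, 3, 4, 5]: guides every step
print(controlnet_active_steps(6, 0.0, 0.5))  # [0, 1, 2]: lets go halfway through
```

With only 6 steps, each step is a big chunk of the generation, so small changes to end % can make a noticeable difference.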

For KSampler #1, we use a 344x512 empty latent with a batch size of 16, which means the animation will be 16 frames long. We use 6 steps and a CFG of 1, which means the negative prompt will be ignored. This makes generation faster, but you can play around with those values and the resolution for more detail at the cost of generation speed. The result is rendered in the first Video Combine node on the right.
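Why does CFG 1 ignore the negative prompt? Classifier-free guidance mixes the positive and negative predictions as `uncond + cfg * (cond - uncond)`. At `cfg = 1` that collapses to just the positive prediction, so the negative has no effect, and a sampler can skip computing it altogether (which is presumably where the speed-up comes from). The numbers below are made-up toy values, not real model outputs.

```python
def cfg_combine(cond, uncond, cfg):
    """Classifier-free guidance, elementwise: uncond + cfg * (cond - uncond)."""
    return [u + cfg * (c - u) for c, u in zip(cond, uncond)]


positive = [0.25, -0.5, 0.75]  # toy noise prediction for the positive prompt
negative = [1.0, 1.0, 1.0]     # toy noise prediction for the negative prompt

print(cfg_combine(positive, negative, 1.0))  # [0.25, -0.5, 0.75]: same as positive
print(cfg_combine(positive, negative, 7.0))  # [-4.25, -9.5, -0.75]: negative now matters
```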

For KSampler #2, we upscale our 16 frames by 1.5x with the NNLatentUpscale node and use them to generate 16 new, higher-quality and higher-resolution frames. We then render those at 12 fps in the second Video Combine on the right.
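Quick math on what the second pass gives you, as a sketch: the nominal size after a 1.5x upscale of 344x512, and how long one loop of 16 frames lasts at 12 fps. One caveat: SD 1.5 latents are 1/8 of the pixel size, so 344x512 is a 43x64 latent and 1.5x of 43 is 64.5. The node has to round that, so the real width lands on a multiple of 8 (512 or 520) rather than exactly 516. The helper names are hypothetical.

```python
def upscale_size(width, height, factor):
    """Nominal pixel size after scaling both sides by `factor` (before latent rounding)."""
    return width * factor, height * factor


def duration_seconds(frames, fps):
    """Length of one pass through the animation, in seconds."""
    return frames / fps


w, h = upscale_size(344, 512, 1.5)
print(w, h)                                # 516.0 768.0
print(round(duration_seconds(16, 12), 2))  # 1.33 seconds per loop
```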

Realistically we could stop there, but NAH. We then load the 4x-UltraSharp model, upscale our animation 4x, and then scale it down to 1024x1536. Pretty sure it increases the quality of the images.
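Here's the size arithmetic for that step, assuming the nominal 516x768 frames from the second pass (the real width may be a few pixels off, as noted for KSampler #2). The 4x model takes us to roughly 2064x3072, and the resize then shrinks each side by about half. The aspect ratio also shifts slightly (344:512 is about 0.672, 1024:1536 is about 0.667), so depending on the resize node's settings the frames get either a tiny stretch or a tiny crop. The function name is hypothetical.

```python
def upscale_then_fit(width, height, model_scale, target_w, target_h):
    """Size after a fixed-ratio upscale model, plus per-axis factors to reach the target."""
    up_w, up_h = width * model_scale, height * model_scale
    return (up_w, up_h), (target_w / up_w, target_h / up_h)


(up_w, up_h), (fx, fy) = upscale_then_fit(516, 768, 4, 1024, 1536)
print(up_w, up_h)                  # 2064 3072
print(round(fx, 3), round(fy, 3))  # 0.496 0.5
```

Upscaling with a model and then downscaling works like supersampling: the downscale averages neighbouring pixels, which tends to smooth out small artifacts while keeping the sharper detail the model added.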

Finally, to make the animation smoother, we use RIFE VFI with the rife47 model to interpolate by 2x. This means the model will create a new frame in between every pair of our frames, doubling the animation to 32 frames. At the same frame rate, that also slows the movement down by a factor of 2.
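To show the idea of 2x interpolation on a loop, here is a sketch that inserts one in-between frame after every frame and wraps the last frame back to the first, which is how 16 frames become 32. Big assumption: plain pixel averaging stands in for RIFE here. RIFE estimates motion (optical flow) between frames instead, which usually avoids the ghosting that simple blending produces. The real node may also handle the loop seam differently, so its exact frame count could differ. Frames are toy lists of pixel values and the function name is hypothetical.

```python
def interpolate_loop(frames):
    """Insert one blended frame after each frame, wrapping last -> first for a seamless loop.

    Linear blending is a stand-in for RIFE, which predicts motion instead of averaging.
    """
    out = []
    n = len(frames)
    for i in range(n):
        a, b = frames[i], frames[(i + 1) % n]          # current frame and the next (wrapping)
        out.append(a)
        out.append([(x + y) / 2 for x, y in zip(a, b)])  # in-between frame
    return out


frames = [[float(i)] for i in range(16)]  # 16 one-pixel toy frames
result = interpolate_loop(frames)
print(len(result))       # 32
print(len(result) / 12)  # loop length in seconds if still played at 12 fps
```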

This gives us a longer and smoother animation in the end, rendered in the third Video Combine. The last Video Combine adds extra sharpening; you can bypass it with Ctrl+B if you don't need it.

That's it! You should have a looping animation similar to your main image (or not, depending on your prompt).

Let me know if you have questions or feedback, and gimme a follow for more stuff like this :)