Research Summary, Character Design - Conception

Where to start?

Coming from 3D, I spent a good amount of time searching for references in common search engines. Every digital painter or 3D sculptor will tell you: NEVER paint from memory. Always have good references!

A big part of one's skill set is mixing that inspiration into something new and exciting; it is the birthplace of new IPs. You find real things close to the idea (surface structures, anatomy, material colors and behavior, etc.) to bring the world over from your brain into visual space.

What if I told you the world you live in is a lie, Neo?!

What if you could CREATE your references EXACTLY how you want them?

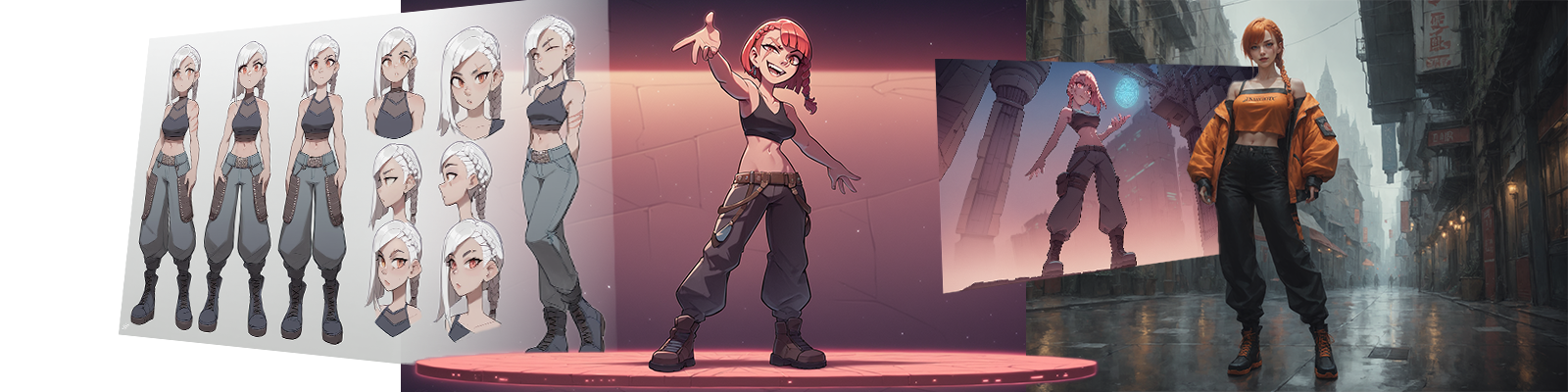

These are some older 3D renders and my first serious attempts with AI (don't look too closely 😅). I used SD 1.5 on a GTX 1070 with ControlNet (OpenPose).

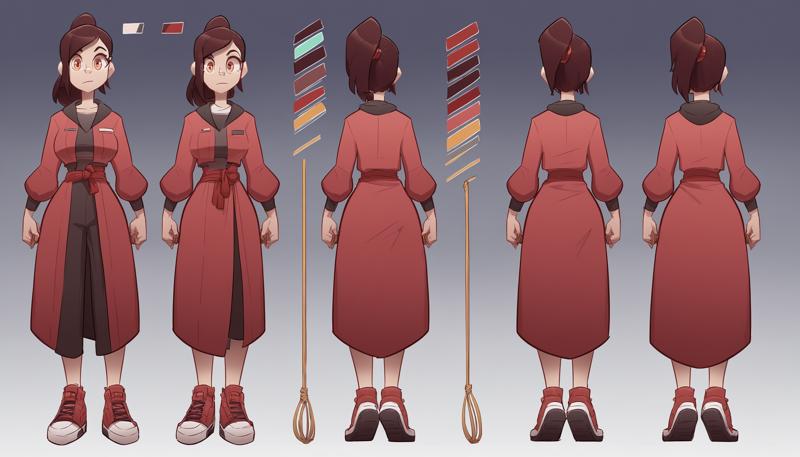

The goal was a character sheet that is sculptable in 3D, meaning it shows multiple angles of the same subject, ideally at the same size. This was the point where AI changed my mind: from an unusable "Midjourney Discord toy" to a new set of amazing tools just waiting to be integrated into established production workflows.

More on that in a later article. I've theorized some elaborate techniques involving attention masking, ControlNets and IP-Adapter that basically work on every SDXL checkpoint. But there is no point in getting all worked up about consistency if the thing you wanna make consistent isn't there yet... your character 😁

The blank canvas

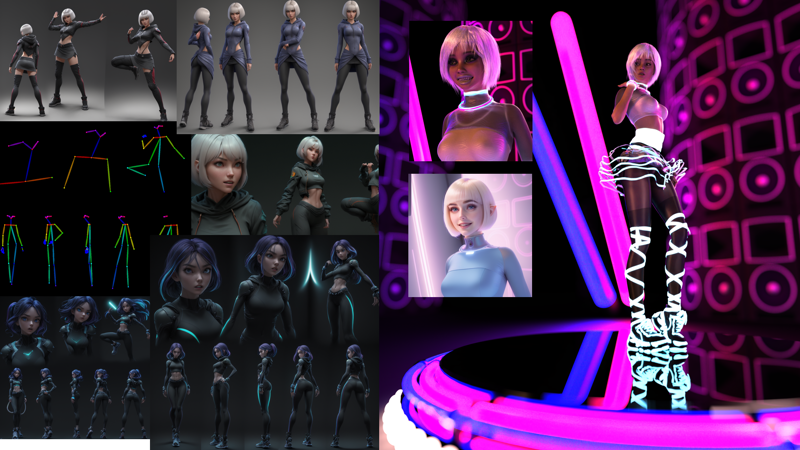

We start off with two prompts: one for the character and one for the design environment, which can later be swapped out for the setting.
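
A minimal sketch of that two-prompt structure, assuming the sub-prompts are simply joined with a comma into one positive prompt (node-based tools can also condition them separately, which is not modeled here). The `compose_prompt` helper and the example tags are hypothetical. The point is that the environment slot can be swapped or dropped without touching the character:

```python
def compose_prompt(character: str, environment: str = "") -> str:
    """Join the character sub-prompt and an optional environment sub-prompt.

    Empty parts are skipped, so the environment can later be dropped entirely.
    """
    parts = [p.strip() for p in (character, environment) if p.strip()]
    return ", ".join(parts)


# Hypothetical sub-prompts, for illustration only.
CHARACTER = "elf ranger, cut elf ears, green cloak, leather armor"
DESIGN_ENV = "character sheet, character turnaround, concept art"

# Design phase: character plus design environment.
design_prompt = compose_prompt(CHARACTER, DESIGN_ENV)
# Later: same character, environment swapped for a simple setting.
setting_prompt = compose_prompt(CHARACTER, "city, low sunlight, vendors")
# Or no environment at all, letting the character traits suggest one.
bare_prompt = compose_prompt(CHARACTER)

if __name__ == "__main__":
    for prompt in (design_prompt, setting_prompt, bare_prompt):
        print(prompt)
```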

Standard fashion prompting includes specific terms like "midriff" (bare belly) or a certain descriptive tag structure like "thigh-highs with pattern \(zebra\)".

Character design prompting can include tags like "character sheet" and "character turnaround"; "character design" itself can help too. The best approach is to test descriptive words that might help the AI understand. It's concept time ^^
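
In AUTOMATIC1111-style prompt syntax, bare parentheses raise attention weight, so literal parentheses inside a tag need a backslash in front of them. Here is a minimal helper, assuming that convention. `escape_literal_parens` is a hypothetical name and not part of any tool:

```python
import re


def escape_literal_parens(tag: str) -> str:
    """Backslash-escape ( and ) so they are read as literal text, not emphasis.

    Parentheses that are already escaped are left untouched.
    """
    # (?<!\\) = not preceded by a backslash; \1 re-inserts the matched paren.
    return re.sub(r"(?<!\\)([()])", r"\\\1", tag)


if __name__ == "__main__":
    print(escape_literal_parens("thigh-highs with pattern (zebra)"))
    # prints: thigh-highs with pattern \(zebra\)
```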

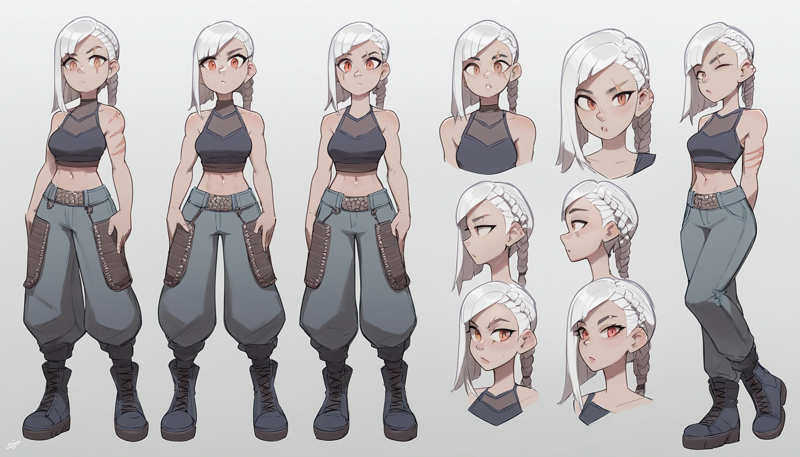

We block out the character.

Then we add details that reflect character traits. It is useful to make batches of 4 or more to see which item or description changes what else in the composition. Remember: everything is in everything. These cross-influences will make your character truly unique. We balance the weights until everything pops. If you are clever with your character composition, it is only REALLY replicable with the prompt. Sure, someone can just prompt what they see and get something similar, but never as consistently unique. If there is an aspect the general model does not get right (the cut elf ears in this case), or gets right in a portrait but not at a smaller scale, we can inpaint. (I didn't ^^)
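
Balancing weights is usually done with the `(tag:weight)` emphasis syntax. Here is a minimal sketch, assuming that A1111-style syntax. It renders hypothetical tag/weight dicts into prompts and pairs every variant with the same fixed batch of four seeds, so any difference between images comes from the weight change and not from the noise:

```python
def weighted_prompt(tags: dict[str, float]) -> str:
    """Render {tag: weight} in A1111-style (tag:weight) syntax; 1.0 stays bare."""
    parts = []
    for tag, weight in tags.items():
        # :g drops trailing zeros, so 1.30 is written as 1.3
        parts.append(tag if weight == 1.0 else f"({tag}:{weight:g})")
    return ", ".join(parts)


def comparison_jobs(variants: list[dict[str, float]], seeds: list[int]) -> list[tuple[str, int]]:
    """Every prompt variant on every seed, so differences come from the prompt alone."""
    return [(weighted_prompt(v), seed) for v in variants for seed in seeds]


# Hypothetical tags: does boosting the ears also change the cloak or the pose?
base = {"elf ranger": 1.0, "cut elf ears": 1.0, "green cloak": 1.0}
boosted = {**base, "cut elf ears": 1.3}
seeds = [1234, 1235, 1236, 1237]  # one batch of 4, reused for every variant

jobs = comparison_jobs([base, boosted], seeds)

if __name__ == "__main__":
    for prompt, seed in jobs:
        print(seed, prompt)
```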

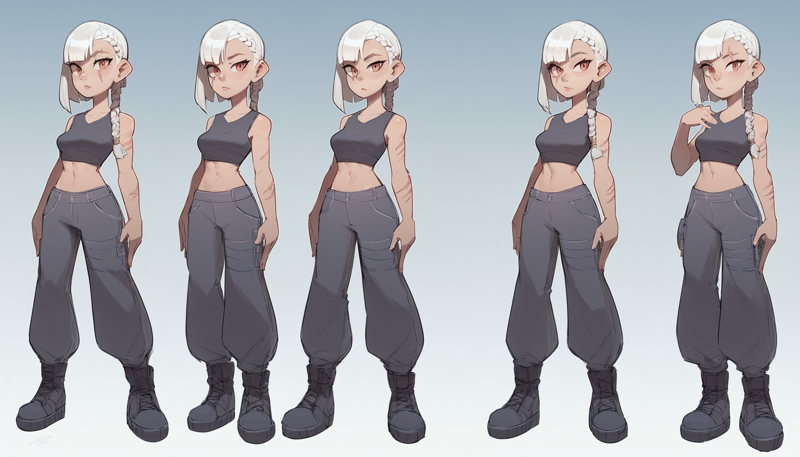

Now we get rid of the design prompt and replace it with a simple background. Character sheets and turnarounds tend to show variations; by removing that randomness, we see what we're actually dealing with.

Sometimes it's nice to try removing the background prompt entirely (or reducing it to the most basic description, like "city", so there are some general structures to latch on to) and letting the attention derive the setting from the character traits... you might see where she came from. ^^ And we refine.

Once you have the character prompt as close to your vision as possible, it's time for some more refinement.

We now start switching models and further refining the character prompt. Some models will not make all the details pop; that is expected and needs to be accounted for. By switching, you also get a feel for which model to use as the native one in the end, which of course depends primarily on the production design.
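
Model switching is easiest to judge as a grid: same prompt, same seeds, one row per checkpoint (roughly what an X/Y plot gives you). Here is a minimal sketch that builds that job list. The checkpoint file names are placeholders, and actually rendering the jobs is left to whatever UI or backend you use:

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Job:
    checkpoint: str
    seed: int
    prompt: str


def model_grid(prompt: str, checkpoints: list[str], seeds: list[int]) -> list[Job]:
    """One job per (checkpoint, seed) pair; the prompt is identical across the grid."""
    return [Job(ckpt, seed, prompt) for ckpt, seed in product(checkpoints, seeds)]


# Placeholder file names; substitute the checkpoints you are comparing.
CHECKPOINTS = ["checkpoint_a.safetensors", "checkpoint_b.safetensors", "checkpoint_c.safetensors"]
PROMPT = "elf ranger, cut elf ears, green cloak, city, low sunlight, vendors"

grid = model_grid(PROMPT, CHECKPOINTS, seeds=[1234, 1235])

if __name__ == "__main__":
    for job in grid:
        print(job.checkpoint, job.seed)
```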

The prompt distillery

Now that we are deep into model switching and the last stages of prompt refinement, we should talk about a controversial thing. A thing SOME people (mode) will get an aneurysm over.

HIGH STEP COUNT ... uuuhhhuhhuhhuhh ... ... say it again! ... hiiigh STEP COUNT .... uuuhuhhuhuuhuuhhhhh :D

Let's get one thing out of the way. Yes, it IS a waste of compute. Yes, you get diminishing returns and the effects are marginal. And yes, you do miss out on dope outfits.

BUT... the outfit wasn't prompted, WAS it? It's exactly that randomness we wanna get rid of for character design. This is with 60 steps.

This is the same seed with 150 steps, and everything got merged beautifully.

I made a comparison that shows how giving the sampler more steps (more tries to get it right) really distills the prompt. It also does not freak out as much on short sub-prompts. Remember, we swapped the design sub-prompt out for a basic background one, "city, low sunlight, vendors" or something like that. That is why you get a merge of every city on earth ever depicted in low sunlight with vendors. You might have noticed the variety of letters and languages displayed (including, but not limited to, the design of the signs themselves), and the blend of architectural eras. High steps can tame that ultimate freakout that you would otherwise have to counter with more specific prompting.

This is all on a (semi-)normal sampler (DPM++ 3M SDE, dynamic eta), mind you. High steps and their blending capabilities REALLY start to shine with ancestral samplers (and other forms of noise injection) and Prompt Control dynamic tags, for example: [frog|(octopus is a:0.2) warrior wearing armor]
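
Why do high steps matter more with alternating tags? In the `[a|b]` alternation syntax, the sampler switches between the options from step to step, so each option only gets a share of the steps. Here is a simplified sketch, assuming plain round-robin alternation on 0-based steps and a single non-nested group. `active_prompt` is a hypothetical helper and not Prompt Control's actual parser. With 60 steps each option is active on 30 of them; with 150 steps, on 75:

```python
import re

# Matches one non-nested [option|option|...] group.
ALTERNATION = re.compile(r"\[([^\[\]]*\|[^\[\]]*)\]")

TAG = "[frog|(octopus is a:0.2) warrior wearing armor]"


def active_prompt(prompt: str, step: int) -> str:
    """Prompt text the sampler sees on a given 0-based step (round-robin)."""
    def pick(match: re.Match) -> str:
        options = match.group(1).split("|")
        return options[step % len(options)]
    return ALTERNATION.sub(pick, prompt)


def steps_per_option(prompt: str, total_steps: int) -> dict[str, int]:
    """Count how many sampling steps each resolved prompt variant receives."""
    counts: dict[str, int] = {}
    for step in range(total_steps):
        text = active_prompt(prompt, step)
        counts[text] = counts.get(text, 0) + 1
    return counts


if __name__ == "__main__":
    for total in (60, 150):
        print(total, steps_per_option(TAG, total))
```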

Why use Pony?

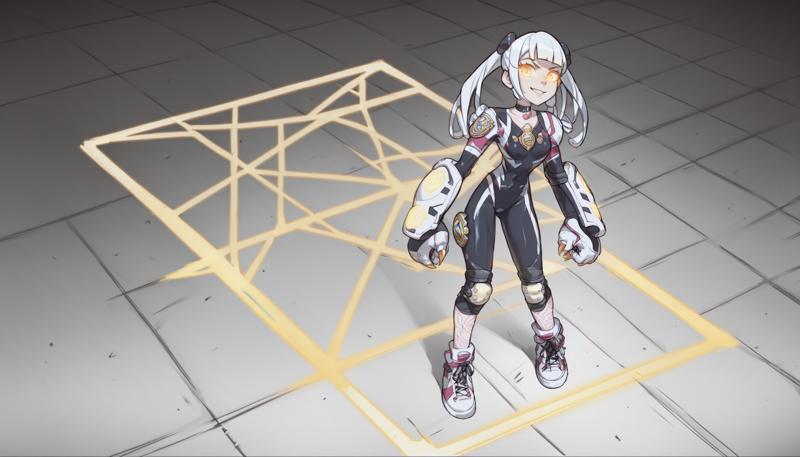

Well, the pony is a naughty one (intentional or not), so you need to put it on a leash (don't prompt that), and you need to learn a new prompting style. But it's a character designer at heart and really damn good at it. It's not your common SD checkpoint, that much is certain.

By now it has many specialized merges that really distill the character sub-prompt.
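
A large part of that new prompting style is the tag convention Pony Diffusion V6 XL was trained with: a score-tag prefix plus `source_*` and `rating_*` tags. Here is a minimal sketch, assuming those V6 conventions. Check the model card of the exact checkpoint or merge you use, since merges may expect something different. The helper and the character tags are hypothetical. In this sketch, the "leash" is a safe rating in the positive prompt and the other ratings in the negative:

```python
# Score prefix as documented for Pony Diffusion V6 XL (assumption: some
# Pony-based merges expect a shorter prefix or none at all).
SCORE_PREFIX = "score_9, score_8_up, score_7_up, score_6_up, score_5_up, score_4_up"


def pony_prompts(character: str, source: str = "source_anime") -> tuple[str, str]:
    """Return (positive, negative) with quality, source and rating tags added."""
    positive = ", ".join([SCORE_PREFIX, source, "rating_safe", character])
    # The leash: push the non-safe ratings into the negative prompt.
    negative = "rating_explicit, rating_questionable"
    return positive, negative


pos, neg = pony_prompts("1girl, elf ranger, cut elf ears, green cloak")

if __name__ == "__main__":
    print("positive:", pos)
    print("negative:", neg)
```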

Yeah, but... WHY, tho?

Because pony is the best tool available for the job. Its anatomical comprehension and merge capabilities are unmatched.

Notice that it put the horse's rainbow symbol in the right place on the human's thigh. The pony knows things. It also makes the best feet. 😅

That's all cool and stuff, but what now?

Now we generate hundreds of images and only keep the ones that are OUR character: the ones that have "the thing", in the best quality possible. We structured the prompt into sub-prompts for a reason. We already switched out the setting; that part can now be wildcarded to generate variety in backgrounds, poses, framing (portrait, full body), outfit variations, and anything else that helps a machine understand who it is you're showing.

With a large set of cherry-picked, diverse super images (mind the limbs, you don't wanna make hands an even worse issue ^^), we can now train a LoRA (a small brain-slug with skillz for the big-brain checkpoint). Then we use it to make the next set of super images to train the next LoRA, and so on. Maybe merge the best epochs and do science. ^^ Repeat until you have a solid character concept LoCon with multiple skins (if you're crazy) :D
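
Wildcarding the setting sub-prompt can be sketched with the `{a|b|c}` variant syntax popularized by the Dynamic Prompts extension. This is a minimal reimplementation for illustration only, not that extension's code. It assumes non-nested groups and uses a seeded RNG so a run can be reproduced. The option lists are hypothetical:

```python
import random
import re

# One non-nested {option|option|...} group.
VARIANT = re.compile(r"\{([^{}]*)\}")

CHARACTER = "elf ranger, cut elf ears, green cloak"
SETTING = (
    "{portrait|cowboy shot|full body}, "
    "{standing|sitting|running}, "
    "{city, low sunlight, vendors|forest clearing|tavern interior}"
)


def expand_wildcards(template: str, rng: random.Random) -> str:
    """Replace every {a|b|c} group with one randomly chosen option."""
    return VARIANT.sub(lambda m: rng.choice(m.group(1).split("|")), template)


def make_prompts(n: int, seed: int = 0) -> list[str]:
    """Fixed character sub-prompt plus n randomly expanded setting sub-prompts."""
    rng = random.Random(seed)
    return [f"{CHARACTER}, {expand_wildcards(SETTING, rng)}" for _ in range(n)]


prompts = make_prompts(8, seed=42)

if __name__ == "__main__":
    for prompt in prompts:
        print(prompt)
```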
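
Merging the best epochs is usually some form of weighted average. For a LoRA, averaging the up and down matrices separately is not the same as averaging the weight deltas they produce. This sketch therefore merges the deltas ΔW = up · down directly, for a single layer. It uses plain Python lists and tiny matrices to stay self-contained. It assumes all epochs share the same alpha and rank, so that scale factor can be ignored. The epochs and weights are hypothetical:

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product, enough for the tiny example below."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora_deltas(epochs: list[tuple[Matrix, Matrix]], weights: list[float]) -> Matrix:
    """Weighted average of each epoch's delta (up @ down) for a single layer.

    Weights are normalized, so they don't have to sum to 1. The alpha/rank
    scale is ignored (assumed identical for every epoch of one run).
    """
    if not epochs or len(epochs) != len(weights) or sum(weights) == 0:
        raise ValueError("need one weight per epoch and a non-zero weight sum")
    deltas = [matmul(up, down) for up, down in epochs]
    total = sum(weights)
    rows, cols = len(deltas[0]), len(deltas[0][0])
    return [
        [sum(w * d[i][j] for d, w in zip(deltas, weights)) / total for j in range(cols)]
        for i in range(rows)
    ]


# Two hypothetical rank-1 epochs of a 2x2 layer, as (up, down) pairs.
epoch_6 = ([[1.0], [0.0]], [[1.0, 0.0]])
epoch_8 = ([[0.0], [1.0]], [[0.0, 1.0]])

merged = merge_lora_deltas([epoch_6, epoch_8], [0.5, 0.5])
# Naive alternative: average up and down separately, then multiply.
naive = matmul([[0.5], [0.5]], [[0.5, 0.5]])

if __name__ == "__main__":
    print("delta merge:", merged)
    print("naive merge:", naive)
```

The merged delta can have a higher rank than the inputs. Turning it back into a LoRA file (for example with an SVD-based resize) is outside this sketch.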

And that's that for now... I will go deeper into this in separate articles. This one is long enough.

Hope you enjoyed it ^^

- Hellrunner