Introduction

Pony Diffusion V6 XL is an excellent model that follows prompts precisely and produces high-quality results. However, some of its training data includes synthetic images generated by other AI models such as Stable Diffusion, Midjourney, and DALL-E 3. Training on such images can introduce artifacts, reduce output diversity, and lower the overall quality of the model.

Synthetic AI-Generated Data: An Overview

Training generative AI models requires vast datasets, traditionally sourced from human-created content. With the proliferation of AI-generated images, these datasets increasingly include synthetic data from other AI models. This practice, while convenient, carries real risks. Research by Boháček (Stanford University) and Farid (UC Berkeley) found that generative AI systems retrained on their own outputs show significant degradation in performance and image quality, a phenomenon known as model collapse.

Why Using Synthetic AI Data Is Problematic

1. Model Collapse and Image Distortion: Generative models like Stable Diffusion, when retrained on AI-generated images, tend to produce distorted outputs. The study showed that even a small proportion (as low as 3%) of synthetic data could lead to significant degradation, reducing image diversity and introducing artifacts.

2. Loss of Diversity: AI-generated data often lacks the variability and nuance found in human-created images. As models ingest more synthetic data, they tend to produce repetitive and less diverse outputs. This loss of diversity diminishes the model's ability to generalize across different prompts and contexts, limiting its applicability and robustness.

3. Amplification of Defects: Synthetic images can contain subtle defects that are not immediately apparent. When used as training data, these defects become amplified in subsequent iterations, compounding errors and reducing the overall quality of the generated images. This issue persists even after retraining on real images, indicating that once a model is poisoned by synthetic data, it is challenging to fully recover.
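The recursive effect described above can be illustrated with a toy model instead of an image generator. The sketch below is a simplified stand-in, not the Boháček and Farid experiment (which retrained Stable Diffusion): each "model" is just a Gaussian fitted to a finite sample drawn from the previous generation's model. The function name `simulate_collapse`, the 20 samples per generation, and the 200 generations are arbitrary, hypothetical choices made for illustration.

```python
import random
import statistics


def simulate_collapse(generations=200, samples_per_gen=20, seed=0):
    """Toy stand-in for model collapse (hypothetical helper, not the study's setup).

    Each 'model' is a Gaussian (mean, std). Every generation is fitted only to
    samples drawn from the previous generation, never to the original data.
    Returns the fitted std of every generation, starting with generation 0.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0 stands in for the human-made data
    history = [std]
    for _ in range(generations):
        # Draw a finite synthetic dataset from the current model...
        data = [rng.gauss(mean, std) for _ in range(samples_per_gen)]
        # ...and fit the next model to that synthetic data alone.
        mean = statistics.fmean(data)
        std = statistics.pstdev(data, mean)
        history.append(std)
    return history


history = simulate_collapse()
print(f"std at generation 0: {history[0]:.3f}")
print(f"std at generation {len(history) - 1}: {history[-1]:.3g}")
```

Because each generation sees only a finite sample of the previous one, the fitted spread tends to drift toward zero over many generations, a one-dimensional analogue of the loss of diversity described in point 2. To experiment further, you could mix a share of fresh samples from the original distribution into each generation and compare how the spread behaves.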

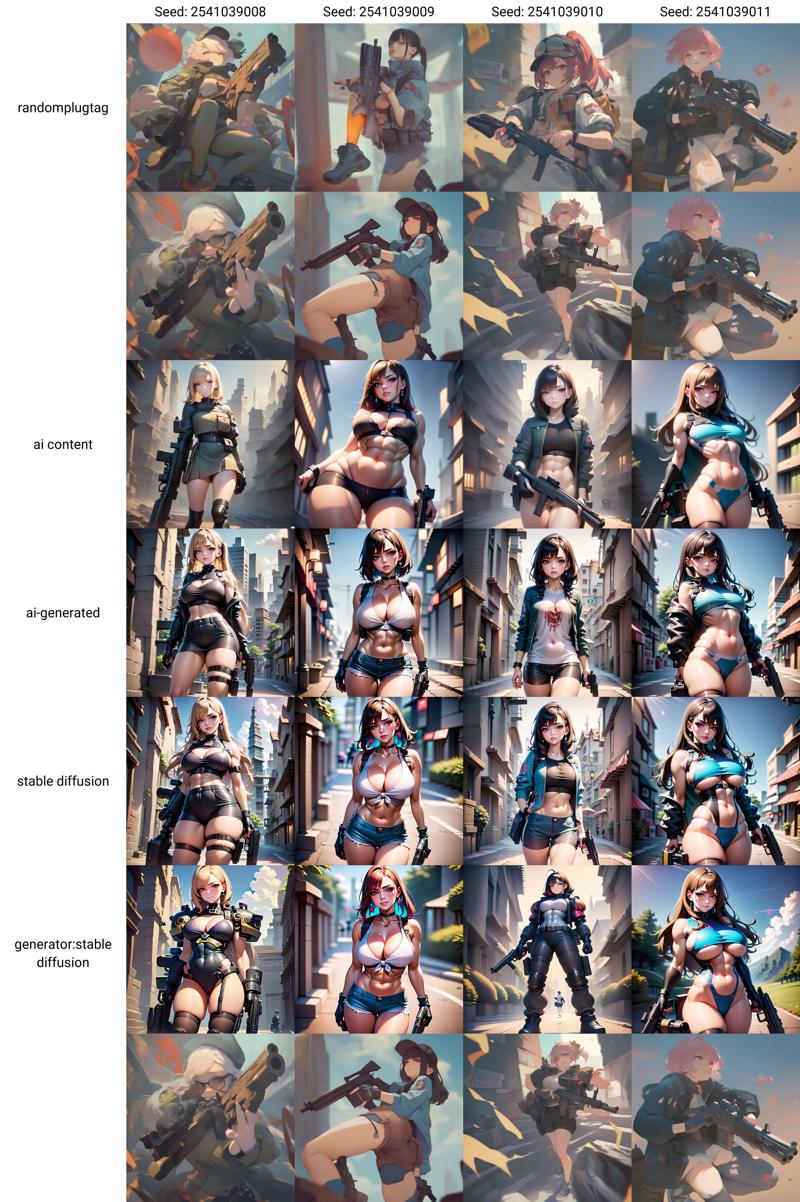

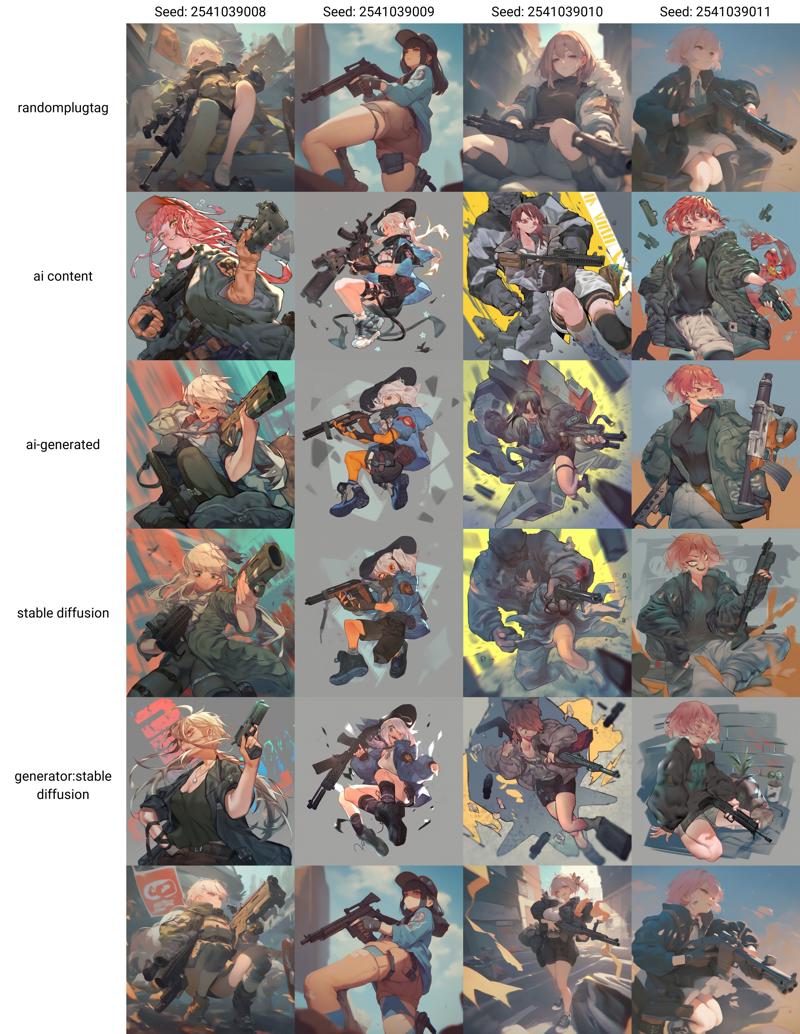

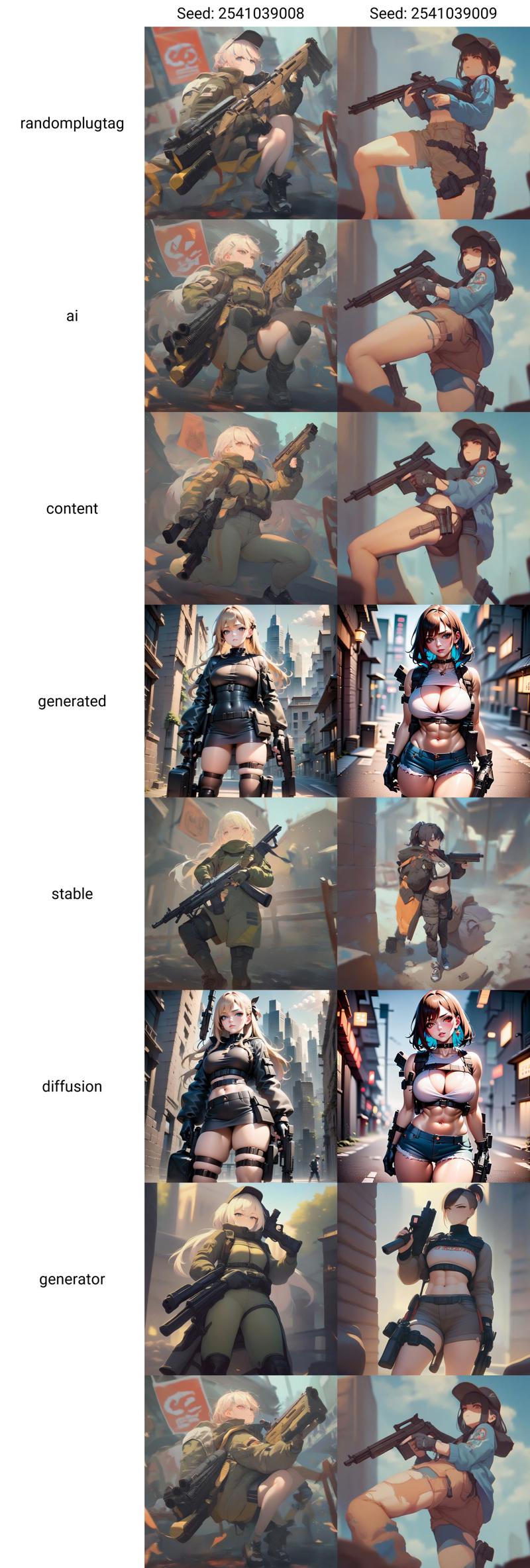

Examples of generation with different tags:

AI tags:

ai content, ai-generated, stable diffusion, generator:stable diffusion,

-->

generated, diffusion

In positive:

In negative:

Main triggers in a positive:

For developers

To mitigate these risks, it is crucial for developers to:

Prioritize Human-Created Content: Ensuring that the majority of training data is sourced from human-created images helps maintain quality and diversity.

Implement Robust Filtering Mechanisms: Using reliable detectors to identify and exclude AI-generated images from training datasets reduces the risk of model poisoning.

Enhance Transparency and Provenance: Clear documentation of the data sources and training processes can help users understand the limitations and strengths of the model.
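Filtering can start with something simpler than a detector: many image sites tag AI-generated uploads, so checking those tags gives a cheap first pass. Below is a minimal sketch assuming each dataset record carries the site's tag list. `ImageRecord`, `is_ai_tagged`, `split_by_provenance`, and the sample records are hypothetical, and the tag set is only the one collected in this article. It does not replace a trained detector, because AI images that were never tagged pass straight through.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Tags listed in this article as markers of AI-generated images.
# This set is incomplete; extend it with tags used by the sites you collect from.
AI_TAGS = frozenset({
    "ai content",
    "ai-generated",
    "stable diffusion",
    "generator:stable diffusion",
})


@dataclass
class ImageRecord:
    """Hypothetical dataset entry: an image path plus its site tags."""
    path: str
    tags: list[str] = field(default_factory=list)


def is_ai_tagged(record: ImageRecord, ai_tags: frozenset[str] = AI_TAGS) -> bool:
    # Normalize case and surrounding whitespace so "AI-Generated " still matches.
    return any(tag.strip().lower() in ai_tags for tag in record.tags)


def split_by_provenance(records, ai_tags=AI_TAGS):
    """Return (kept, dropped): records without AI tags, and records with them."""
    kept, dropped = [], []
    for record in records:
        (dropped if is_ai_tagged(record, ai_tags) else kept).append(record)
    return kept, dropped


# Hypothetical sample data for illustration only.
records = [
    ImageRecord("001.png", ["1girl", "watercolor"]),
    ImageRecord("002.png", ["AI-generated", "1girl"]),
    ImageRecord("003.png", ["generator:stable diffusion"]),
]
kept, dropped = split_by_provenance(records)
share = len(dropped) / len(records)
print([r.path for r in kept], f"{share:.0%} flagged")
```

Reporting the flagged share alongside the kept records makes it easy to see how much tagged synthetic data a dataset would otherwise have contained, which matters given how small a proportion the study found to be harmful.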

P.S.

I searched several sites for tags that mark AI-generated images. I may not have found every tag that could improve the quality of image generation. If you know of others, please share them in the comments.