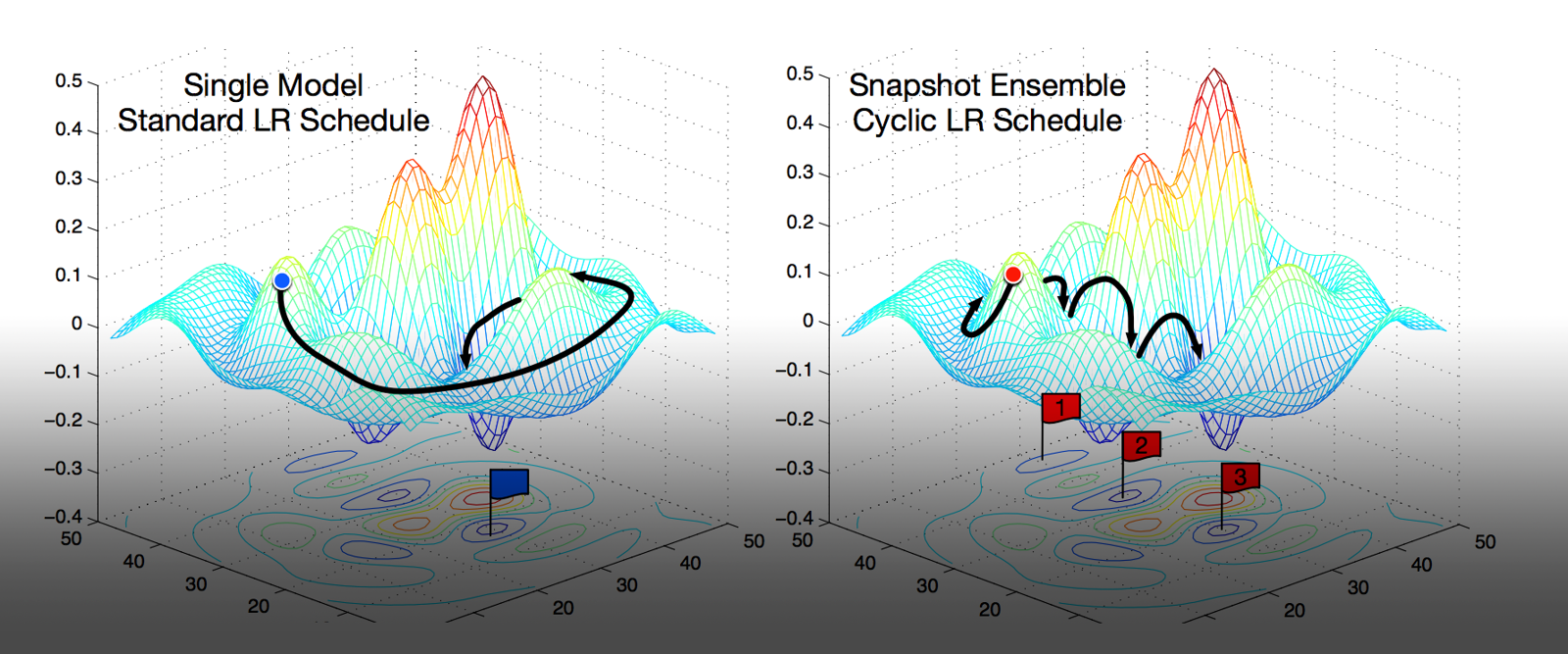

Using the same principle as snapshot ensembles, we can save any number of LoRAs as we train and pick the best-looking one afterwards, without worrying about how many steps we set the training to run for.

Now you may be thinking, "But can't we just use the save every N steps/epochs option for this?" Well, yes and no. If you merely set it to blindly save every once in a while, you will save checkpoints while the learning rate is still high, yielding worse results (mostly bad anatomy, from what I've seen). To prevent that, we just have to use a cyclic scheduler and save at the lowest point of each cycle. For that we can use cosine_with_restarts and set our max train steps to 10000 (set warmup to zero, otherwise the cycles and the saving will de-sync). To save every 500 steps we do 10000/500=20, meaning we set the number of cycles to 20 in the scheduler; if we wanted to save every 1000 steps it would be 10 cycles (10000/1000=10). And that's it: the learning rate will decay correctly and a LoRA will be saved each time it does.
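To make the arithmetic above concrete, here is a minimal sketch in plain Python. It is not the trainer's actual scheduler code: the function names `num_cycles` and `cosine_restart_multiplier` are made up for illustration, and the multiplier assumes a cosine schedule with hard restarts, zero warmup and equal-length cycles.

```python
import math


def num_cycles(max_train_steps: int, save_every: int) -> int:
    """Number of scheduler cycles so that every cycle ends on a save step."""
    if max_train_steps % save_every != 0:
        raise ValueError("max_train_steps should be a multiple of save_every")
    return max_train_steps // save_every


def cosine_restart_multiplier(step: int, max_train_steps: int, cycles: int) -> float:
    """LR multiplier in [0, 1] for cosine with hard restarts (assumes no warmup)."""
    if step >= max_train_steps:
        return 0.0
    cycle_len = max_train_steps // cycles
    # Position inside the current cycle: 0.0 at a restart, close to 1.0 right before the next one.
    pos = (step % cycle_len) / cycle_len
    return 0.5 * (1.0 + math.cos(math.pi * pos))


max_train_steps, save_every = 10000, 500
cycles = num_cycles(max_train_steps, save_every)
# Steps at which a checkpoint would be written when saving every `save_every` steps.
save_steps = list(range(save_every, max_train_steps + 1, save_every))

# Multiplier at a restart, mid-cycle, right before a save, and at the next restart.
for step in (0, 250, 499, 500):
    print(step, round(cosine_restart_multiplier(step, max_train_steps, cycles), 4))
```

The point of the sketch is that the step right before each save (499, 999, ...) sits at the bottom of a cycle, so each saved LoRA has just finished a full decay.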

There's a caveat, though: with adaptive optimizers such as DAdapt and Prodigy this may end up frying the LoRA at the first restart. If the optimizer feels the learning rate is too low too early, it will try to compensate by increasing its learning rate estimate; then, when the scheduler restarts its multiplier back to 1, the learning rate will be too high and ruin it. I don't know exactly what causes this, but you have to watch out for it on TensorBoard.

https://i.imgur.com/x6cUlnV.png

In this example image, only the first purple graph is a good one (the graph to watch is lr/d*lr). The spikes before the learning rate decays in the other ones mean it will blow up when it restarts.

That being said, I prefer to use REX instead of cosine because I feel like cosine decays the LR too early. Since I couldn't find a version of REX with restarts, I made one myself (code attached to this article). I use it by saving it at venv\Lib\site-packages\custom\custom.py, then setting lr_scheduler_type = "custom.custom.RexWithRestarts" and lr_scheduler_args = "first_cycle=1000", so it cycles every 1000 steps (idk if there's anything "wrong" with the scheduler code, it's working for me though).
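For readers who want to see why REX holds the learning rate up longer than cosine, here is a rough sketch of the per-step multiplier. This is not the code attached to the article: the function name `rex_restart_multiplier` is hypothetical, the formula is the REX curve as I understand it from the REX paper, (1 - p) / (0.5 + 0.5 * (1 - p)) with p the progress through the cycle, and it assumes every cycle has the same length `first_cycle`.

```python
import math


def rex_restart_multiplier(step: int, first_cycle: int) -> float:
    """REX-style LR multiplier that restarts every `first_cycle` steps (illustrative only)."""
    pos = (step % first_cycle) / first_cycle  # progress through the current cycle, in [0, 1)
    remaining = 1.0 - pos
    return remaining / (0.5 + 0.5 * remaining)


def cosine_multiplier(step: int, cycle_len: int) -> float:
    """Cosine multiplier with the same restart period, for comparison."""
    pos = (step % cycle_len) / cycle_len
    return 0.5 * (1.0 + math.cos(math.pi * pos))


first_cycle = 1000
# Compare both curves at a restart, a quarter, half, three quarters, and the end of a cycle.
for step in (0, 250, 500, 750, 999, 1000):
    rex = rex_restart_multiplier(step, first_cycle)
    cos = cosine_multiplier(step, first_cycle)
    print(f"step {step:4d}  rex {rex:.3f}  cosine {cos:.3f}")
```

Under these assumptions the REX multiplier is still about two thirds at mid-cycle, where cosine is already down to one half. A function like this could be wrapped in PyTorch's `torch.optim.lr_scheduler.LambdaLR` for experimenting, though the attached scheduler may be structured differently.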