What a day! SD3 just landed and it's already been quite a ride, so gather 'round and take a moment to reflect on where we've been, where we are, and where we're headed.

How Does SD3 Compare to Prior Stability AI Releases?

"This is SD2 All Over Again"

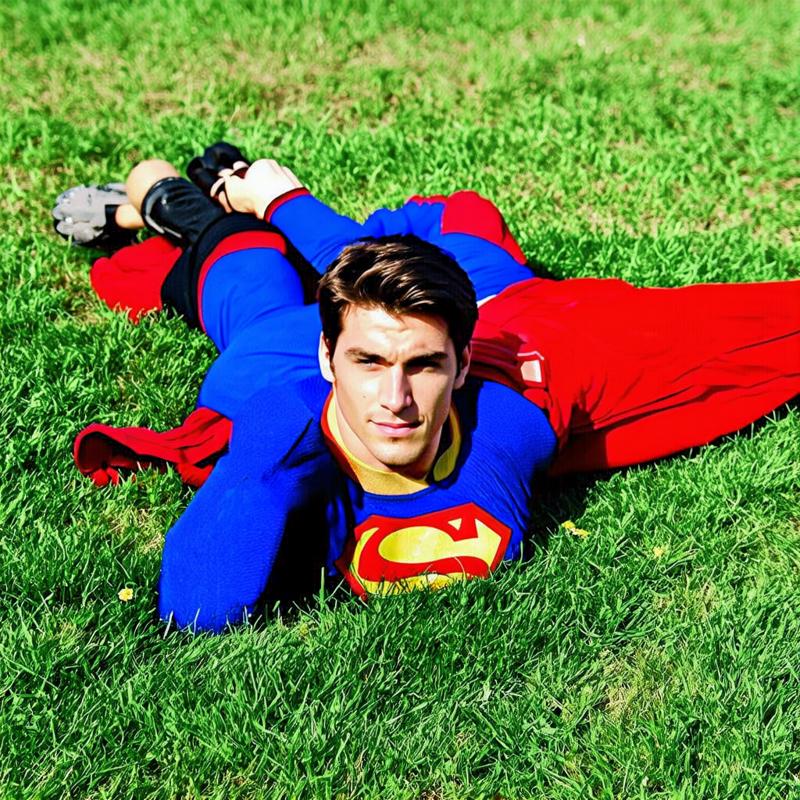

Let's address the elephant in the room: the heavy-handed censoring. It feels like we've been here before, right? Remember SD2? After the release of SD1, Stability rushed to sanitize the dataset and pruned so much data that the resulting model struggled to produce realistic humans. It appears that something similar may have happened in preparation for the public release of SD3 Medium. If you haven't already seen some of the bizarre results, I hope you enjoy this scene of "Superman lying in the grass".

Like SDXL, There Are Good Things, and With Community Love, It Could Be Great

When SDXL dropped in July 2023, the reactions were a mixed bag. Initially, community models based on SD1.5, with a bit of Hi-Res fix magic, outperformed SDXL. But we stuck with it. The solid foundation and higher resolution of SDXL allowed it to evolve and eventually surpass SD1.5 in most areas (except for those folks making animations).

SD3's bones seem promising too:

Text Rendering: I'd give it an 8/10.

Finer Details: Improvements in the VAE mean better detail in distant objects, buildings, and faces—when they're not turning into non-human monstrosities.

Prompt Adherence: This was hyped as a major improvement, but I'm not entirely convinced yet.

Why is SD3 Struggling with Human Anatomy?

The simple answer? Safety. But not safety for us: safety for Stability AI. It seems they're trying to avoid any liability for inappropriate content generated with their model, something they've likely faced significant scrutiny over since the "opening of Pandora's box" with the release of SD1. Months ago, Emad, Stability's then-CEO, mentioned that SD3 might be the last model they release. Initially, we thought this meant it would be the best model and we'd never need anything else. But now, with uncertainty about the future of Stability AI, it seems there might be other reasons:

Financial Constraints: They might not have the financial means to create and release another model.

Reputation Management: They needed to finish wiping away the "mark of shame" from their prior models and demonstrate that they do, in the end, prioritize safety.

Business Viability: Spending millions of dollars to develop a model and then giving it away for free builds an ecosystem, but it doesn't build a sustainable business without a strong community-driven business model.

What Now?

So, what's next for us, the open-source image gen community? We hope that, with fine-tuning, SD3 will follow SDXL's path and evolve with community support. However, with a non-commercial license, that path seems to have been stifled to some degree already. This is also a lesson for us to diversify so we aren't caught off guard in the future.

It's time to broaden our horizons. Other open-source entries deserve our attention:

PixArt Sigma: Released in April, it was overshadowed by the anticipation of SD3, but it supports 4K image gen as well as enhanced prompt adherence.

Lumina Text-to-Image: Released in May, it is a mix-and-match image gen pipeline that supports various text encoders, parameter sizes, and VAEs to generate 2K images.

Hunyuan: Released in May, it is capable of understanding both English and Chinese.

Lastly, maybe it's time we take matters into our own hands. Instead of relying on corporations to generously release models for commercial use, we could organize the best minds in our ecosystem and crowdfund the development of foundational models tailored to our needs. It's a big undertaking, but it might be the best way to ensure high-quality, open-source image models remain accessible for everyone.

Let me know what you're thinking about SD3 and what you predict will happen with the ecosystem over the next few months. Will SD3 get better? Will we adopt PixArt? Or do you wanna be a part of an initiative to build a community foundational model?