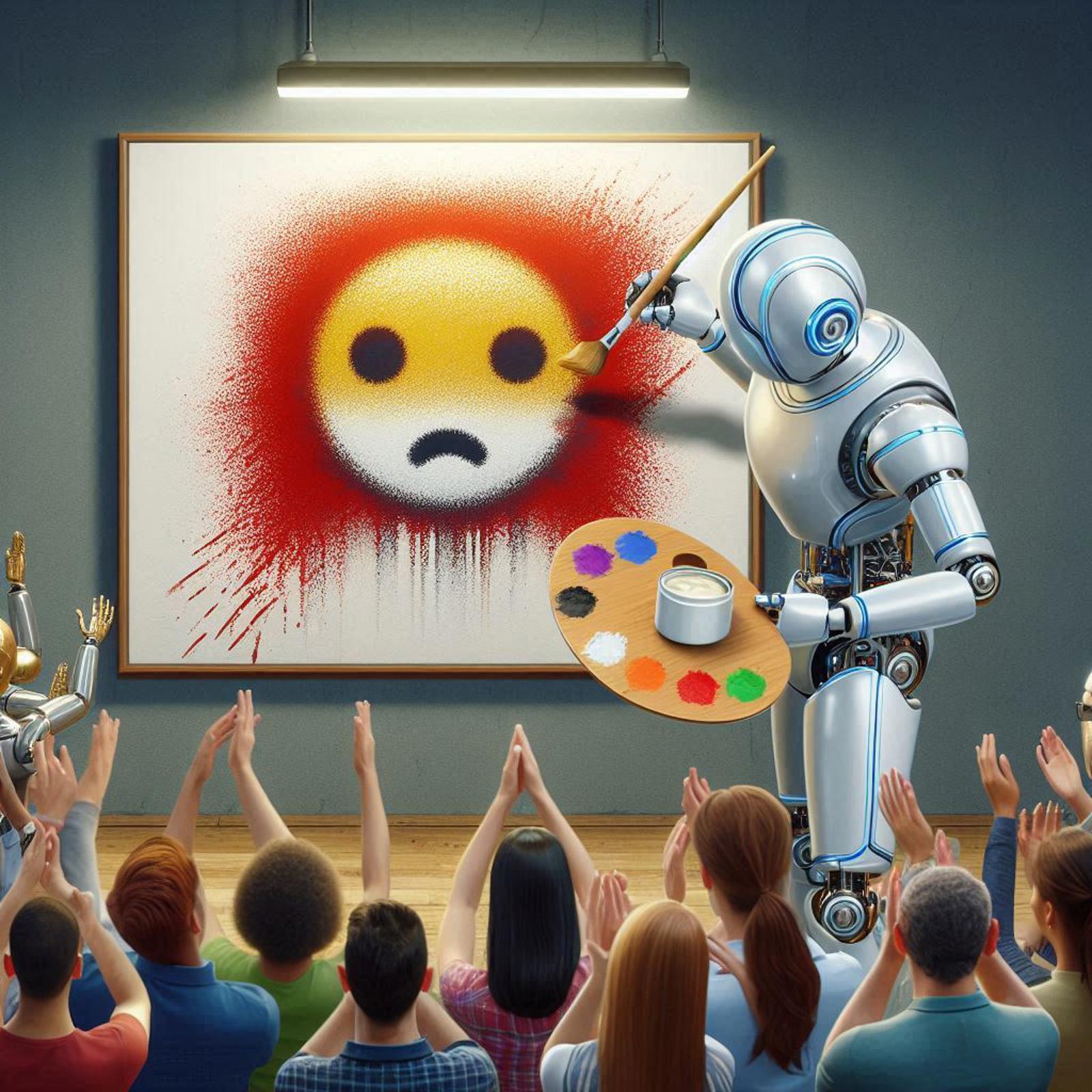

The recent release of Stable Diffusion 3 (SD3) by Stability AI has sparked debate within the AI art community, particularly on platforms like CivitAI. While SD3 has been lauded for its potential, its censored nature raises concerns about the future of open-source image generation.

✨Curating Creativity: A Double-Edged Sword

Stability AI's decision to filter SD3 stems from a desire to limit the generation of potentially harmful content. However, this approach presents a challenge for open-source communities like CivitAI. Unrestricted exploration and experimentation are core tenets of this space, and built-in limitations can stifle artistic expression.

✨Beyond the Filter Bubble: Maintaining Representational Diversity

Censorship in training data can lead to biased outputs. By filtering out certain content categories, SD3 may lose the ability to represent the full spectrum of human imagination. This homogenization can lead to repetitive, uninspired AI art.

✨Open Source, Open Minds? Rethinking Censorship and Control

Stability AI champions open-source models, yet built-in censorship sits uneasily with that principle. The CivitAI community instead advocates user control through prompts and filters, which it sees as a more responsible approach to AI art generation.

✨Beyond the Workaround: Fostering a Collaborative Future

History suggests that censorship filters can be bypassed. The result is a cycle of restrictions and workarounds that diverts attention from exploring the model's true potential. A more collaborative approach, in which Stability AI trusts the CivitAI community to wield its tools responsibly, could be more productive.

✨Moving Forward: A Call for Open Dialogue

The debate surrounding SD3 highlights how hard it is to balance safety with artistic freedom in the young field of AI art. CivitAI, with its commitment to responsible exploration, can be a valuable partner in this discussion. Let's continue the conversation: what are the best practices for ensuring responsible AI art creation in an open-source environment?