Below is a list of extensions for Stable Diffusion (mainly for the Automatic1111 WebUI). I have included the ones that improved my workflow, as well as other highly rated extensions that I haven't fully tested yet. The aim is to build a handy list for everyone. I'll keep learning and expanding this list as I go.

Edit (Aug. 9, 2024): I've added newly discovered extensions for precise control in Stable Diffusion. Happy summer days, folks.

Got any insights to add? Please share them in the comment section, or vote for or suggest other tools in the Poll below (powered by StrawPoll).

How to Install the Extensions?

Using the GitHub link of the text2prompt extension as an example:

• Install from the extension tab on A1111 WebUI

Copy https://github.com/toshiaki1729/stable-diffusion-webui-text2prompt.git into the "Install from URL" tab and hit "Install".

• Install Manually

Clone the repository into the extensions folder and restart the web UI.

git clone https://github.com/toshiaki1729/stable-diffusion-webui-text2prompt.git extensions/text2prompt

You can also download the zip file and unzip it to the extensions folder: \extensions\[extension name]

Part 1. Best Stable Diffusion Extensions for Image Upscaling and Enhancement

1. Tiled Diffusion & VAE

4.6k stars | 330 forks

https://github.com/pkuliyi2015/multidiffusion-upscaler-for-automatic1111

Tiled Diffusion lets you upscale images beyond 2K with lower VRAM usage. It can be slotted into the Stable Diffusion upscaling workflow once highres.fix at 1.5x or 2x runs into CUDA out-of-memory errors.

It supports integrating Regional Prompt Control, ControlNet, and DemoFusion into the workflow, making it flexible and convenient for the production pipeline.

Here is a detailed tutorial (PDF) by PotatCat: https://civitai.com/models/34726

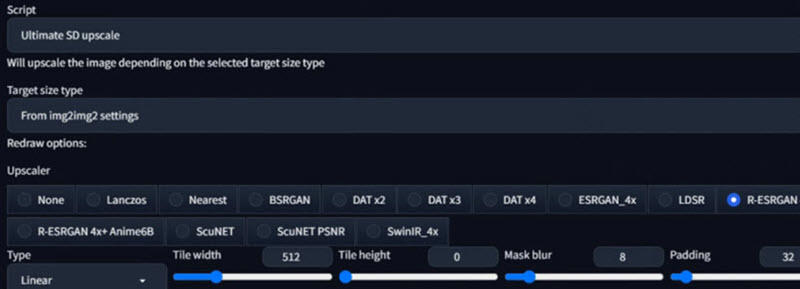

2. Ultimate SD Upscale

1.5k stars | 153 forks

https://github.com/Coyote-A/ultimate-upscale-for-automatic1111

The Ultimate SD Upscale extension helps you reach higher resolutions, especially when highres.fix runs into CUDA out-of-memory errors or cannot reach a larger size. It works best combined with ControlNet, which keeps the composition of the image intact and avoids extra heads or other distortions.

It works by dividing the image into a grid of tiles and inpainting each one. The extension then redraws the seams along the tile edges to fix them. You can tweak the mask blur and padding parameters to make the seams look natural. As for upscaling algorithms, the no-brainer choice is ESRGAN for photorealistic images, and R-ESRGAN 4x+ for the rest. For detailed tutorials and explanations of each parameter, read the Wiki page of this extension.
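To get a feel for how tiling keeps each step small, here is a minimal Python sketch that counts the tiles needed to cover a given output size. The function name and the 512 px default tile size are assumptions for illustration only; this is not the extension's own code, and it ignores padding and seam passes.

```python
import math

def tile_grid(width, height, tile=512):
    """Return (columns, rows, total) tiles needed to cover a width x height image.

    `tile` is a hypothetical square tile size in pixels.
    """
    cols = math.ceil(width / tile)
    rows = math.ceil(height / tile)
    return cols, rows, cols * rows

# A 1024x1536 image upscaled 2x becomes 2048x3072.
cols, rows, total = tile_grid(2048, 3072)
print(cols, rows, total)  # 4 6 24
```

Each tile is processed on its own, so peak memory depends on the tile size rather than on the full output resolution.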

3. Standalone Software

If you have CUDA issues (low VRAM), or you want to batch-upscale lots of images while keeping details, you can also try a standalone AI image upscaler: Aiarty Image Enhancer. See how it handles 512px to 8K/16K upscaling in the video below.

Part 2. Best Extensions for Stable Diffusion Prompts and Tags

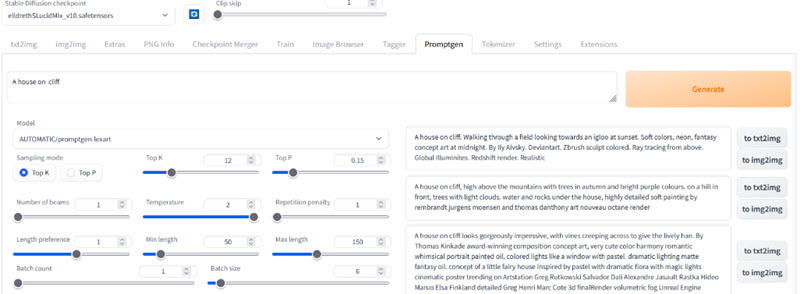

1. Promptgen

448 stars | 85 forks

https://github.com/AUTOMATIC1111/stable-diffusion-webui-promptgen

Promptgen lets you generate prompts right inside the Stable Diffusion WebUI. The author provides GPT-2 models fine-tuned on prompts scraped from lexica.art and majinai.art (with/without NSFW filters). Users can add more models in the settings.

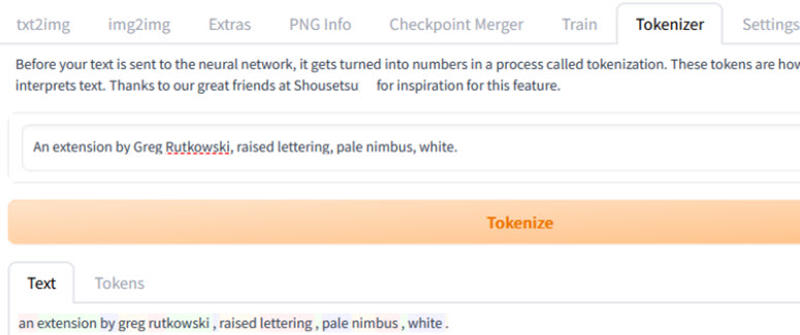

2. Tokenizer

139 stars | 23 forks

https://github.com/AUTOMATIC1111/stable-diffusion-webui-tokenizer

Tokenizer is an extension for stable-diffusion-webui that adds a tab that lets you preview how the CLIP model would tokenize your text.

3. Model-keyword

233 stars | 13 forks

https://github.com/mix1009/model-keyword

Model-keyword is an extension for A1111 WebUI that auto-fills keywords for custom Stable Diffusion models and LoRA models. It works for txt2img and img2img. When prompting, make sure this extension is enabled. It will detect the custom checkpoint model or LoRA you are using, and then insert the matching keyword.

4. Prompt Generator

228 stars | 19 forks

https://github.com/imrayya/stable-diffusion-webui-Prompt_Generator

Prompt Generator works in AUTOMATIC1111 WebUI. It helps you to extend the base prompt into detailed descriptions and styles. The script is based on distilgpt2-stable-diffusion-v2 by FredZhang7 and MagicPrompt-Stable-Diffusion by Gustavosta and it runs locally without internet access.

Fred's script can generate descriptive safebooru and danbooru tags, making it a handy extension for txt2img models focusing on anime styles. The MagicPrompt series are GPT-2 models that generate prompts for AI images.

For more models, you can edit the JSON file at \extensions\stable-diffusion-webui-Prompt_Generator\models.json, as long as the model is hosted on Hugging Face.

5. Text2prompt

159 stars | 15 forks

https://github.com/toshiaki1729/stable-diffusion-webui-text2prompt

It is another prompt generator for A1111 WebUI, mainly used for anime. For more creative prompts, increase the K and P values, or enable the Cutoff and Power option and decrease the Power value. For stricter results, do the opposite.

Note: only prompts that contain some danbooru tags can be generated.
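Assuming K and P here refer to top-k and top-p (nucleus) sampling, which the text does not spell out, the effect of raising them can be sketched in Python. The tag probabilities below are made up for illustration, and this is not the extension's own code.

```python
def top_k(probs, k):
    """Keep the k most likely tags and renormalize their probabilities."""
    kept = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {tag: p / total for tag, p in kept}

def top_p(probs, p):
    """Keep the smallest set of most likely tags whose total probability reaches p."""
    kept, mass = [], 0.0
    for tag, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept.append((tag, prob))
        mass += prob
        if mass >= p:
            break
    return {tag: prob / mass for tag, prob in kept}

# Hypothetical tag probabilities, not real model output.
tags = {"1girl": 0.5, "blue_hair": 0.3, "outdoors": 0.15, "umbrella": 0.05}

print(sorted(top_k(tags, 2)))    # ['1girl', 'blue_hair']
print(sorted(top_p(tags, 0.9)))  # ['1girl', 'blue_hair', 'outdoors']
```

A larger K or P leaves more candidate tags in the pool, which is why the output gets more varied.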

6. Novelai-2-local-prompt

69 stars | 14 forks

https://github.com/animerl/novelai-2-local-prompt

This script helps you convert the NovelAI prompt format into Stable Diffusion-compatible prompts. It also has a button to recall previously used prompts.

7. Ranbooru

61 stars | 7 forks

https://github.com/Inzaniak/sd-webui-ranbooru

This extension picks a random set of tags from booru images (incl. Gelbooru, Rule34, Safebooru, yande.re, konachan, aibooru, danbooru and xbooru) and adds them to your prompts. Recommended by ivragi.

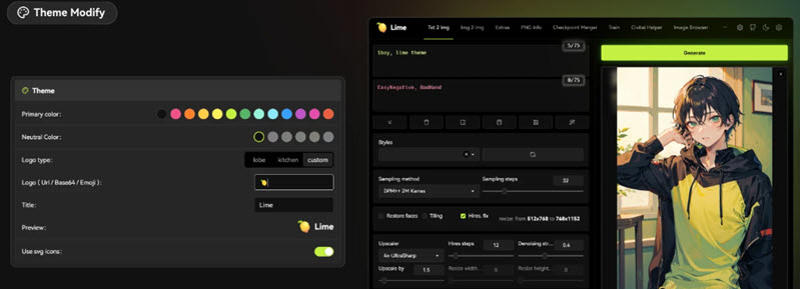

Part 3. UI-related Extensions for Stable Diffusion A1111

1. Stable Diffusion Themes – Lobe Theme

2.3K stars | 215 forks

https://github.com/lobehub/sd-webui-lobe-theme

If you don't like the default Gradio-powered WebUI interface, you can use this extension, which comes with a modern, sleek UI. Its GitHub page has detailed installation and customization instructions.

Note: before installing the theme, please read the compatibility notes carefully. For instance, Lobe Theme v3 only works for SD WebUI v1.6 and above. For older versions, you need to use the legacy themes.

2. A1111 WebUI Image Browser

596 stars | 109 forks

https://github.com/AlUlkesh/stable-diffusion-webui-images-browser

The extension helps you to review previously created images, examine the metadata associated with their generation, and utilize that data for further image generation processes such as text-to-image or image-to-image prompts, directly inside the A1111 WebUI.

It also allows you to curate a collection of your preferred images by adding them to a "favorites" album, and provides the functionality to remove images that are no longer of interest to you.

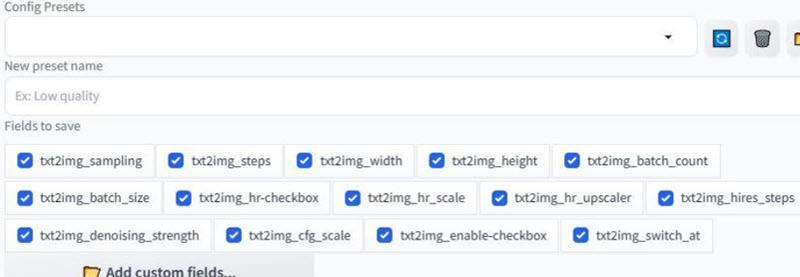

3. Config-Presets

252 stars | 16 forks

https://github.com/Zyin055/Config-Presets

It allows you to configure user-friendly, adjustable dropdown menus to facilitate the modification of parameters, both for the txt2img and the img2img tabs.

You can adjust values to your personal preferences to enhance your workflow efficiency.

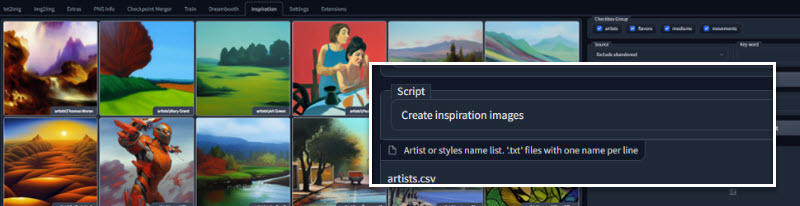

4. Inspiration (Artist Styles and Genre)

114 stars | 22 forks

https://github.com/yfszzx/stable-diffusion-webui-inspiration

The Stable Diffusion WebUI Inspiration extension displays random images in the signature style of a particular artist or artistic genre. Once you select one, more images from that artist or genre are shown, making it easy to visualize the styles you want.

It has a vast collection of approximately 6000 artists and styles, and you can refine your search by using keywords to filter through artists or genres. You can also save preferred styles to your favorites or block those that do not appeal to you.

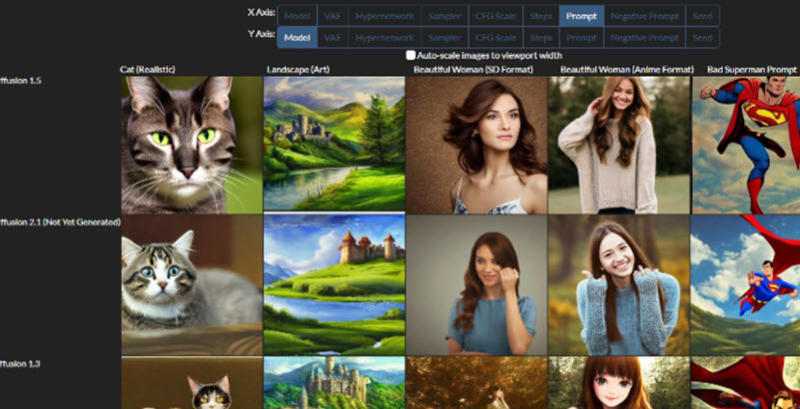

5. Infinity Grid Generator Script

174 stars | 24 forks

https://github.com/mcmonkeyprojects/sd-infinity-grid-generator-script

This extension is designed to generate infinite-dimensional grids. If you are familiar with the X/Y plot grid, this is the "infinite axis grid" that has more axes on it. Utilizing this tool, you can swiftly analyze outcomes from a myriad of parameter combinations.

Note: Please be aware that the number of images in a grid, and therefore the time required to produce it, grows exponentially with the number of axes. For example, four variables with five options each produce a staggering 625 images, calculated as 5 raised to the power of 4.
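The arithmetic above can be checked with a short Python sketch. The axis names and values here are made up for illustration; only the count matters.

```python
from itertools import product
from math import prod

# Four hypothetical axes with five options each.
axes = {
    "sampler": ["Euler a", "DPM++ 2M", "DDIM", "LMS", "Heun"],
    "steps": [10, 20, 30, 40, 50],
    "cfg_scale": [3, 5, 7, 9, 11],
    "seed": [1, 2, 3, 4, 5],
}

def grid_size(axes):
    """Number of images in the grid: the product of the option counts per axis."""
    return prod(len(options) for options in axes.values())

# Enumerating every combination gives the same count.
combos = list(product(*axes.values()))
print(grid_size(axes), len(combos))  # 625 625
```

Adding a fifth axis with five options would multiply the total by five again, to 3125 images.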

Part 4. Best Stable Diffusion Extensions for Animations and Videos

1. Animate Diff

2.9k stars | 246 forks

https://github.com/continue-revolution/sd-webui-animatediff

Animate Diff is one of the most popular ways to generate videos with Stable Diffusion. It is an easy-to-use video toolkit that can also generate GIFs.

The author of Animate Diff also created the Segment Anything extension for the WebUI to enhance inpainting and ControlNet semantic segmentation.

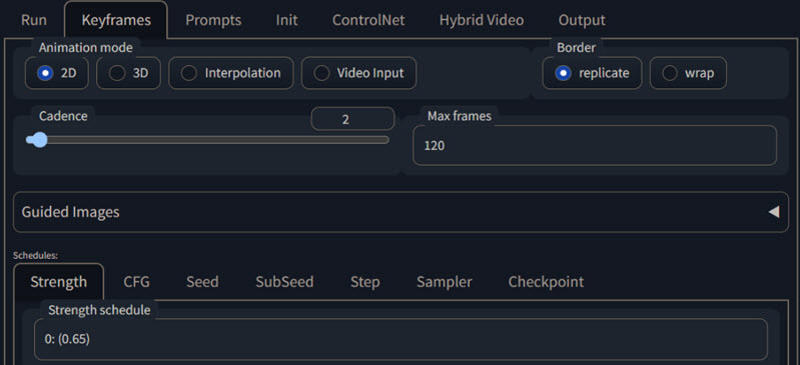

2. Deforum

2.6k stars | 383 forks

https://github.com/deforum-art/sd-webui-deforum

It is the official port of Deforum for Stable Diffusion Automatic1111 WebUI. Using Deforum, you can create animation videos with a list of text prompts and camera movement settings.

The tool offers choices between 2D and 3D motion styles, allowing you to tailor the visual appearance of your animations or videos to your specific preferences. You can also use the interpolation feature.

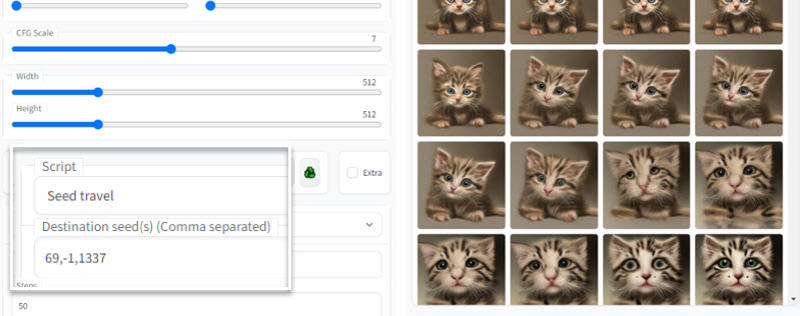

3. Seed Travel

302 stars | 25 forks

https://github.com/yownas/seed_travel

Seed Travel is an extension for the Stable Diffusion WebUI that helps you generate a series of images, based on the range of seeds you designate.

To illustrate, picture all possible noise patterns as a map, with each seed represented as a point on that map. Seed traveling picks two "points" on this map and then "travels" between them, delivering a series of still frames that you can use to create animations.
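The "travel" idea can be sketched in Python as a list of evenly spaced blend factors between two seeds. The sketch assumes each frame is described by a start seed, a destination seed, and a blend strength from 0 to 1, similar to the WebUI's variation seed settings; whether the extension works exactly this way internally is an assumption, and the function name is hypothetical.

```python
def travel_frames(seed_a, seed_b, steps):
    """Return (seed, destination_seed, strength) tuples for each frame.

    strength 0.0 is purely seed_a and 1.0 is purely seed_b.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [(seed_a, seed_b, i / (steps - 1)) for i in range(steps)]

frames = travel_frames(1234, 5678, 5)
for seed, destination, strength in frames:
    print(seed, destination, strength)
```

More steps mean smaller changes between neighboring frames, and a smoother animation.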

4. Prompt Fusion

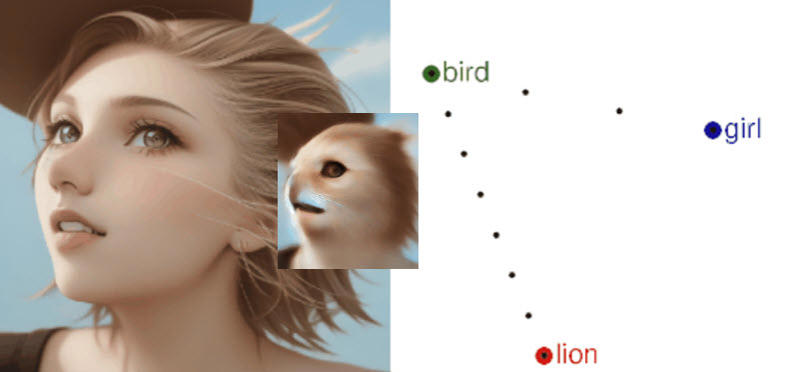

257 stars | 16 forks

https://github.com/ljleb/prompt-fusion-extension

Prompt Fusion allows you to "travel" during the sampling stage of a single image, thus creating consistent and slightly iterated visuals. The prompt interpolation feature allows you to create videos by progressively exploring the latent space to generate a sequence of images iteratively. It also has an attention interpolation feature for generating multiple images with subtle variations.

Other similar extensions:

Prompt Travel: https://github.com/Kahsolt/stable-diffusion-webui-prompt-travel

Part 5. Extensions for Controls, Editing and Fine-tuning

1. ControlNet

16.3k stars | 1.9k forks

https://github.com/Mikubill/sd-webui-controlnet

ControlNet is a neural network that adds extra conditioning to Stable Diffusion models, giving you a level of control over image generation that was previously hard to achieve. The extension lets you guide the diffusion process with specific constraints or conditions, using utilities such as OpenPose, Canny, HED, Scribble, MLSD, Seg, Depth and Normal Map for various scenarios.

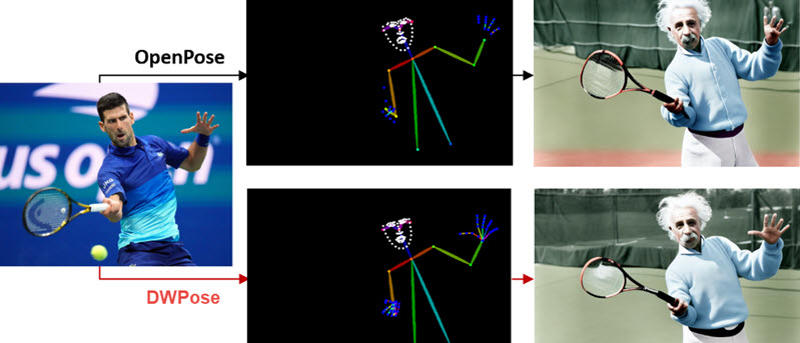

2. DWPose

2k stars | 133 forks

https://github.com/IDEA-Research/DWPose

DWPose claims to output better results than OpenPose for ControlNet. It supports consistent and controllable image-to-video synthesis for character animation. Note: DWPose is implemented as a preprocessor (dw_openpose_full) for sd-webui-controlnet v1.1237 and higher.

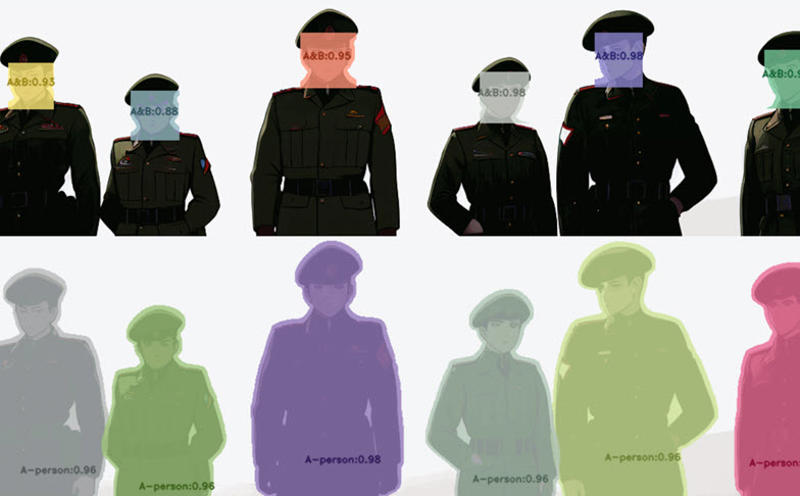

3. ADetailer

3.9k stars | 303 forks

https://github.com/Bing-su/adetailer

ADetailer is one of the most popular auto-masking and inpainting tools for Stable Diffusion WebUI. Once enabled, it can automatically detect, mask and enhance the faces with more details.

Depending on your image, the enhanced result can sometimes look overdone. You can experiment with the parameter settings for better results.

4. Detection Detailer

336 stars | 74 forks

https://github.com/dustysys/ddetailer

This extension is designed for object detection and auto-masking. It can segment people and faces from the background, and automatically add detail to the faces.

5. Reactor (Face Swap)

2.3k stars | 245 forks

https://github.com/Gourieff/sd-webui-reactor

Reactor is a face swap extension for Stable Diffusion WebUI. Before using it, you need to install some dependencies on your system. For instance, for A1111, you need to install Visual Studio, or just the VS C++ Build Tools if you don't need the entire Visual Studio. The author's GitHub page has detailed tutorials on this.

You can index faces or specify the gender. If the generated image has blurry faces, you can enable the "Restore face" option.

You can also use Roop: https://github.com/s0md3v/sd-webui-roop

Disclaimer: It is imperative to exercise caution and respect local regulations when using face swap features. It is advised to seek permission from the concerned individual if the software is being employed to alter the visage of an actual person. It is crucial to explicitly state that the resulting image or video is a deepfake.

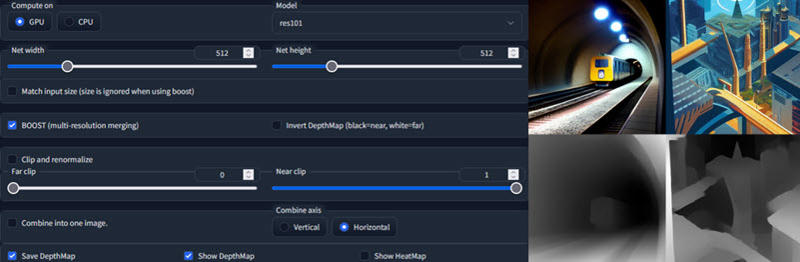

6. Depth Map Script

1.6k stars | 152 forks

https://github.com/thygate/stable-diffusion-webui-depthmap-script

This extension allows you to generate depth maps, 3D meshes and normal maps, and to create 3D stereo image pairs.

For stereo images, you can go with either the "Generate Stereo SBS" or the "Generator Anaglyph" option. "Generate 3D inpainted mesh" produces the mesh needed to generate videos. Besides newly generated images, you can also run the Depth Map script on existing images.

7. Dynamic Thresholding

1.1k stars | 100 forks

https://github.com/mcmonkeyprojects/sd-dynamic-thresholding

Dynamic Thresholding (CFG Scale Fix) is an extension available for several Stable Diffusion front ends, including the WebUI and ComfyUI. It lets you use higher CFG scales without color issues, so you have more freedom when generating images without worrying about the distortions that high CFG scales can introduce.

8. Multi-subject Render

365 stars | 27 forks

https://github.com/Extraltodeus/multi-subject-render

This add-on allows you to generate multiple subjects for a complex scene at once, powered by depth analysis. The usual prompt UI will be used to generate the background only. Therefore, you just need to describe the background/scenes/settings, without adding any token for the foreground subjects.

For the subjects, there is a "Foreground prompt" section. You can include all the subjects in one line, or use multiple lines, each line describing one character. There's a final blend section to configure. The blend width and height will be the final resolution of the image.

9. Composable LoRA

470 stars | 72 forks

https://github.com/opparco/stable-diffusion-webui-composable-lora

This extension can confine the impact of a LoRA to a designated subprompt. The benefit of this approach is that it can effectively mitigate the adverse impacts introduced by the built-in settings, where negative prompts are always affected by LoRA.

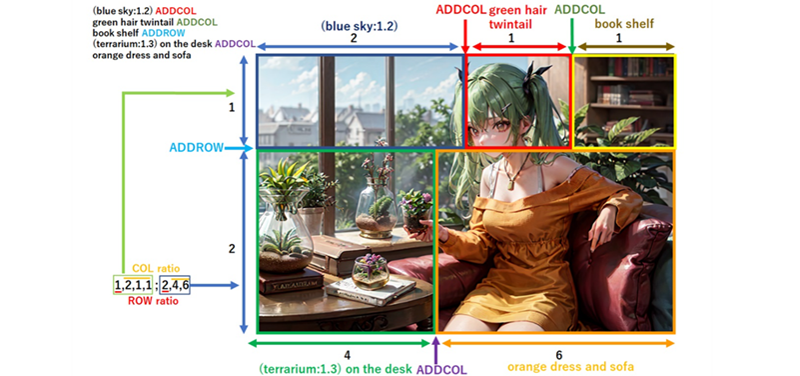

10. Regional Prompter

1.5k stars | 125 forks

https://github.com/hako-mikan/sd-webui-regional-prompter

Regional Prompter allows you to specify prompts for different regions of an image, giving you precise control over content and colors. With a dedicated syntax, you can split the regions by columns and rows, or use masks for more flexible regional control.

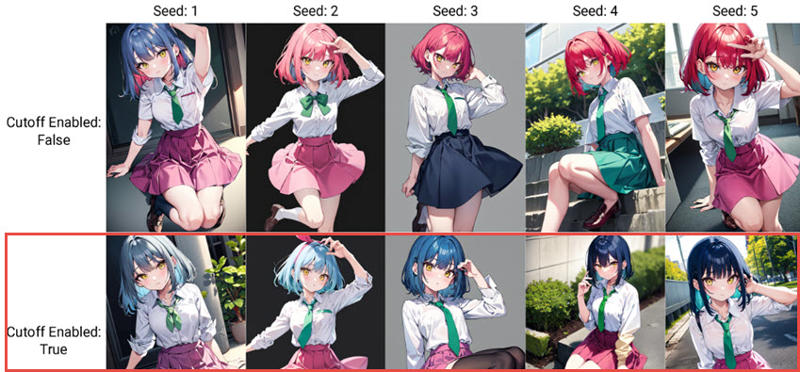

11. Cutoff for SD WebUI

1.2k stars | 85 forks

https://github.com/hnmr293/sd-webui-cutoff

This extension can limit the tokens' influence scope. What does that mean? Let's take a look at the following scenario.

Stable Diffusion often mixes things up when a prompt assigns different colors to different parts of the image. For instance, when we want an anime girl to have blue hair and wear a pink skirt, the generated image may get the skirt wrong or change the hair color to pink.

After enabling the Cutoff extension, we can put the words whose scope we want to limit in "Target tokens".

As you can see, the second row shows images generated with the Cutoff extension turned on. The hair color (blue) and skirt color (pink) are not mixed up.

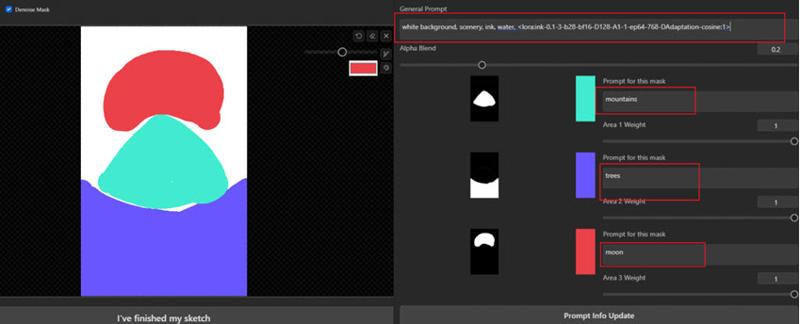

12. Latent Couple (Two Shot Diffusion Port)

704 stars | 105 forks

https://github.com/opparco/stable-diffusion-webui-two-shot

This extension allows you to determine which region of the latent space reflects each of your subprompts. In plain language, you can mask the canvas and control which subject appears in each region. For instance, prompt for mask 1: mountains, for mask 2: trees, for mask 3: moon. The result is more controlled, with the composition and subject matter you want.

Part 6. Other Handy Extensions for Stable Diffusion

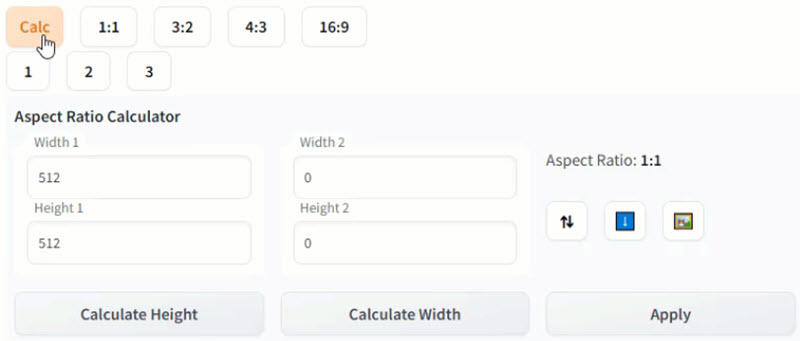

1. A1111 Aspect Ratio Selector

209 stars | 35 forks

https://github.com/alemelis/sd-webui-ar

Aspect Ratio Selector for Stable Diffusion Automatic1111 WebUI saves you from manually doing the math for different aspect ratios.

Install it by clicking the Extensions tab in WebUI > Install from URL > paste the URL https://github.com/alemelis/sd-webui-ar > Install.

Aspect ratio buttons will appear under width and height. For aspect ratios larger than 1 (such as 3:2, 4:3, 16:9), the script keeps the width you set and changes the height. For aspect ratios smaller than 1 (such as 2:3, 3:4, 9:16), it keeps the height you set and changes the width.

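You can edit the preset files in the /sd-webui-ar/ folder to suit your preferences: aspect_ratios.txt defines the aspect ratio buttons, and resolutions.txt defines resolution presets. The format for resolution presets is: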

button-label, width, height, # optional comment.

Example:

1, 512, 512 # 1:1 square

# marks a comment, so lines starting with # will be ignored.
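As a minimal Python sketch of how lines in the format shown above can be read, the function below strips comments, skips blank lines, and returns the label, width and height. The function name is hypothetical, the second sample line is made up for illustration, and this is not the extension's own parser.

```python
def parse_presets(text):
    """Parse 'button-label, width, height # optional comment' lines."""
    presets = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop everything after '#'
        if not line:
            continue  # blank line or comment-only line
        # Ignore empty fields so a trailing comma before the comment is tolerated.
        parts = [p.strip() for p in line.split(",") if p.strip()]
        if len(parts) != 3:
            raise ValueError(f"expected label, width, height in: {raw!r}")
        label, width, height = parts
        presets.append((label, int(width), int(height)))
    return presets

sample = """\
# lines starting with '#' are ignored
1, 512, 512 # 1:1 square
2, 768, 512 # hypothetical landscape preset
"""
print(parse_presets(sample))  # [('1', 512, 512), ('2', 768, 512)]
```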

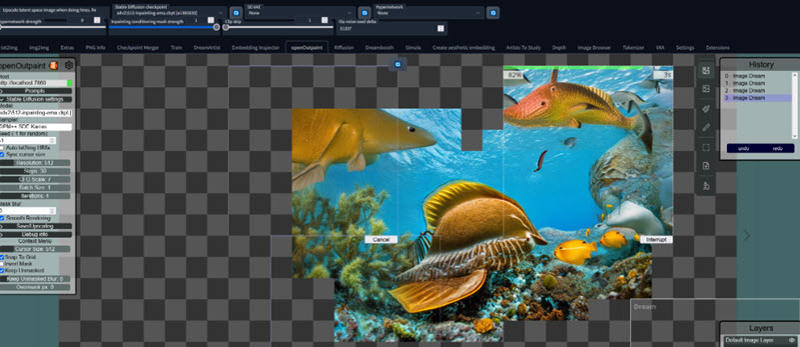

2. OpenOutpaint

395 stars | 24 forks

https://github.com/zero01101/openOutpaint-webUI-extension

It integrates openOutpaint natively into the AUTOMATIC1111 WebUI. You can also send the output of the A1111 WebUI txt2img and img2img tools directly to openOutpaint.

Please note that to activate this extension, the --api flag must be included in your webui-user launch script.

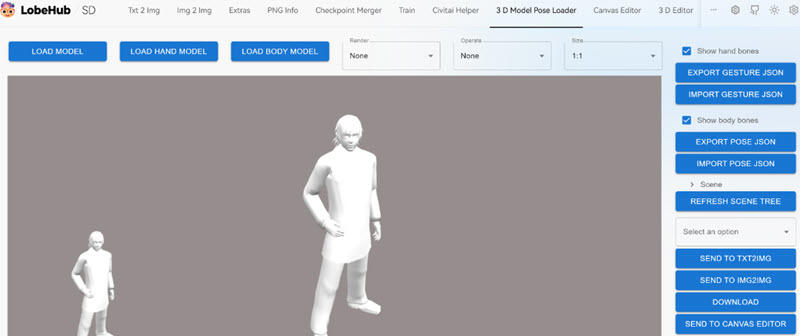

3. 3D Model Loader

228 stars | 22 forks

https://github.com/jtydhr88/sd-3dmodel-loader

Gradio has a Model3D component, but it only supports obj, glb and gltf files, so the author built this extension. You can load your 3D model or animation, edit the model's pose, and send it to txt2img/img2img.

4. Save Intermediate Images

109 stars | 16 forks

https://github.com/AlUlkesh/sd_save_intermediate_images

As its name indicates, this extension will save the intermediate images upon generation. You can also create videos (webm/mp4) and GIFs out of those images to showcase the "drawing process".

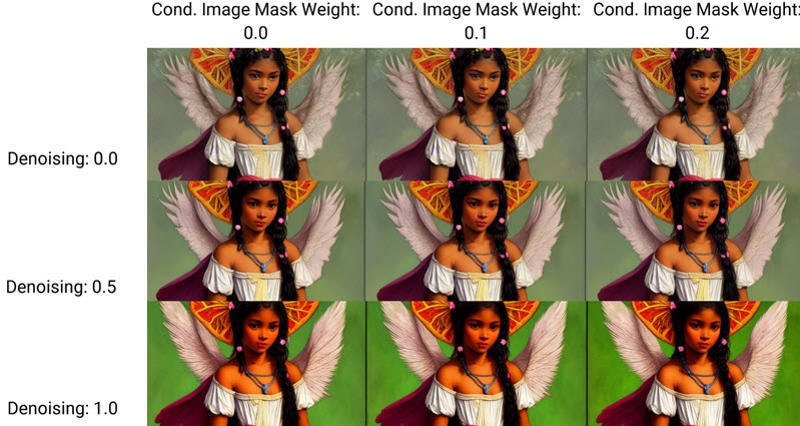

5. Conditioning-highres-fix

46 stars | 8 forks

https://github.com/klimaleksus/stable-diffusion-webui-conditioning-highres-fix

The script improves the highres.fix feature for the SD v1.5 inpainting model. On each generation, it overrides the "Inpainting conditioning mask strength" value, making it relative to "Denoising strength": it is set to half of the latter.

Here is a grid comparison provided by the author of this script. As you can see, the lower the conditioning strength, the sharper and better the image. With a high denoising strength, the image becomes oversaturated and simplified.
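The half-of-denoising rule described above is simple enough to sketch in Python. The function name is hypothetical and the range check is an assumption; the script itself may handle edge cases differently.

```python
def conditioning_mask_strength(denoising_strength):
    """Return half of the denoising strength, as described in the text."""
    if not 0.0 <= denoising_strength <= 1.0:
        raise ValueError("denoising strength is expected to be between 0 and 1")
    return denoising_strength / 2

for denoising in (0.25, 0.5, 1.0):
    print(denoising, conditioning_mask_strength(denoising))
```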

6. SD Scale Calculator

https://preyx.github.io/sd-scale-calc/

This is an online tool rather than an extension for Stable Diffusion, but it truly enhances my workflow, so I bookmarked it here as well.

As its name suggests, SD Scale Calculator helps you calculate the first-pass width and height from the target width and height you enter. It is quite handy for highres.fix and img2img resolution settings.
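For a rough idea of the kind of calculation involved, here is a Python sketch that shrinks a target size to about 512x512 pixels' worth of area while keeping the aspect ratio. The 512 base, the rounding to multiples of 8, and the function name are all assumptions for illustration; the online tool may compute its numbers differently.

```python
import math

def first_pass_size(target_w, target_h, base=512, multiple=8):
    """Return (width, height, upscale_factor) for a first pass near base*base pixels."""
    scale = math.sqrt((base * base) / (target_w * target_h))

    def snap(value):
        # Round to the nearest multiple, never going below one multiple.
        return max(multiple, round(value * scale / multiple) * multiple)

    width, height = snap(target_w), snap(target_h)
    return width, height, target_w / width

width, height, factor = first_pass_size(1920, 1080)
print(width, height, round(factor, 2))
```

Because of the rounding, the first-pass aspect ratio can differ slightly from the target, so treat the result as a starting point.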

Thanks for reading and participating in the ongoing vote. From the current results, it seems that many of you are interested in Stable Diffusion extensions for precise control, editing, fine-tuning, and AI upscaling. Should I focus more on exploring extensions in these areas? Let me know your thoughts in the comments below.

![[35+ Resources] Must-have Extensions for Stable Diffusion](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/07c88a2c-6be7-4419-8c7f-c0d293a8d99f/width=1320/girlanime4.jpeg)