Introduction

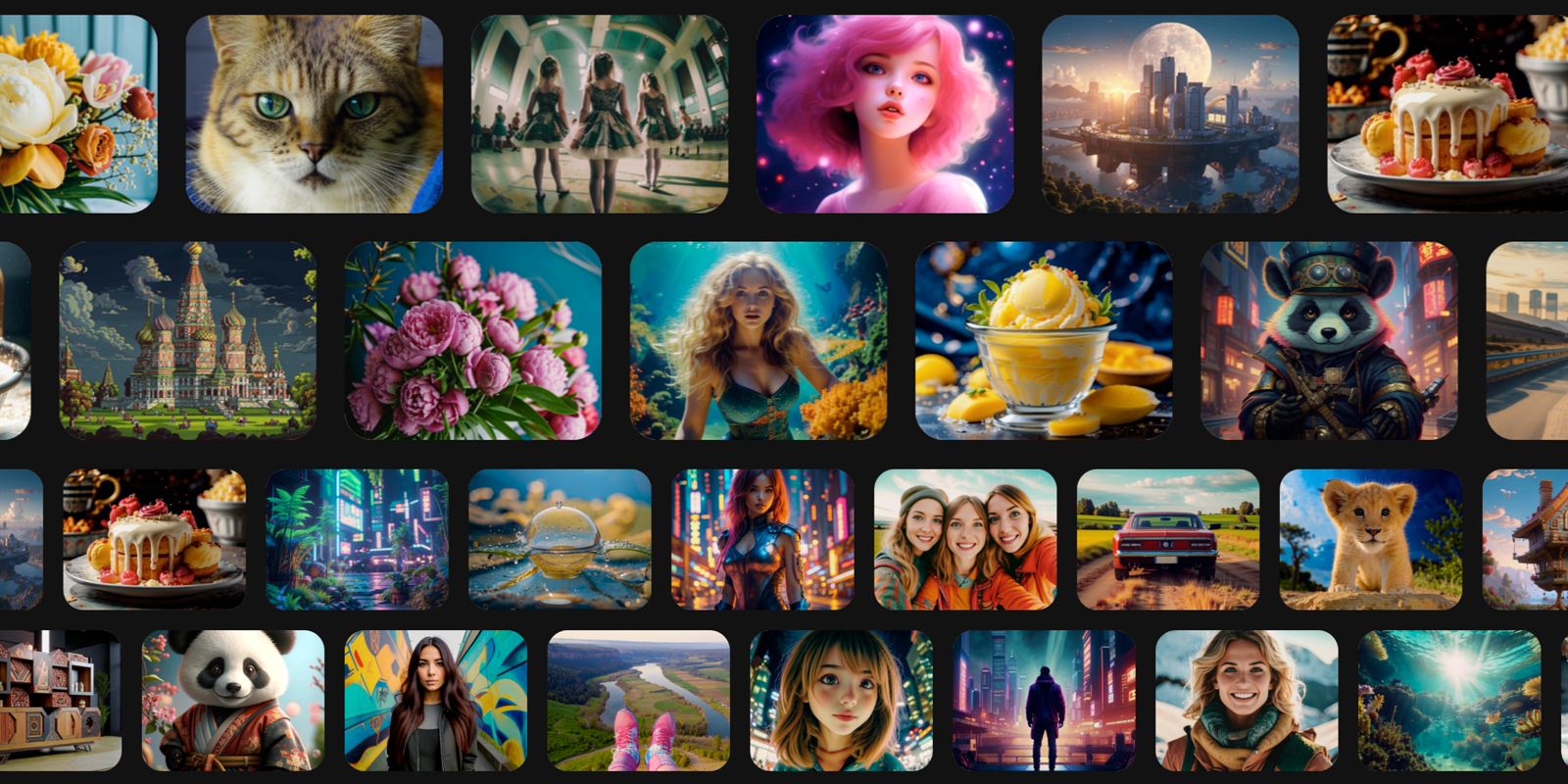

Despite impressive capabilities comparable to the likes of Stable Diffusion and Midjourney, Kandinsky has been undeservedly neglected by the general public. It's time to correct this injustice!

Kandinsky 3.1 is a feature-rich text-to-image model developed by the AI-Forever team. It is capable of generating images of stunning quality and detail based on text queries. The model has a wide range of features including:

Image generation at various resolutions, up to 4K.

Style and composition control: Kandinsky supports ControlNet models built specifically for it.

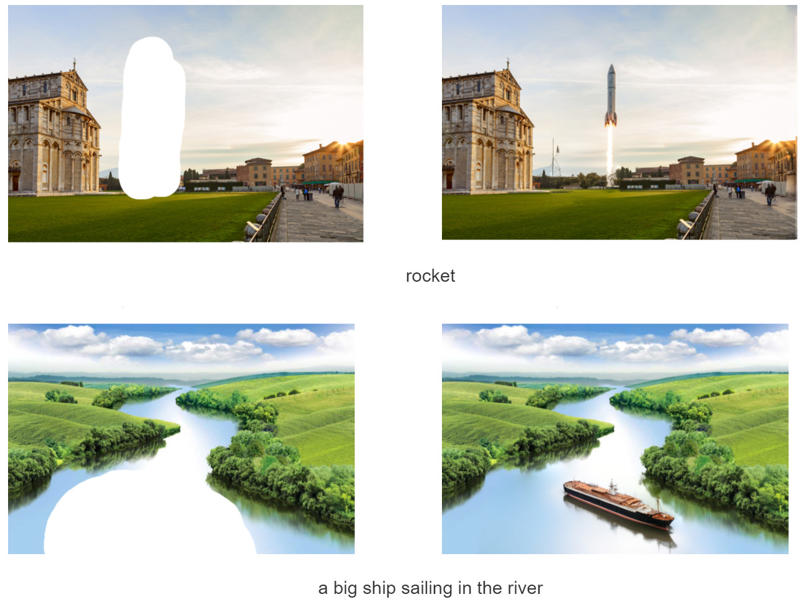

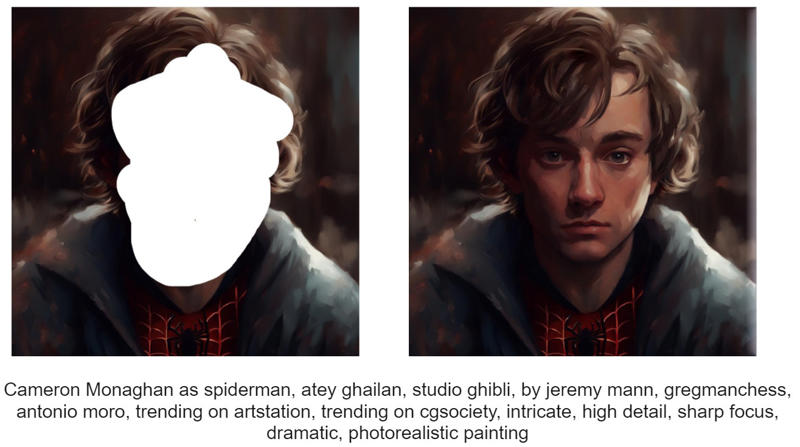

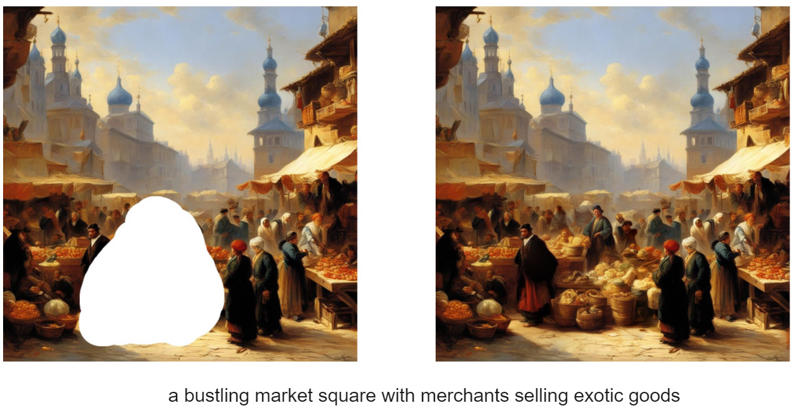

Image Editing by Text Description: Inpainting and Outpainting allow you to add new objects or modify existing objects in an image by simply describing the desired changes with text.

Combining multiple images into one: Image Blending opens the door to creative experimentation, allowing you to create unique compositions from multiple source images.

Kandinsky 3.1 is available for use now:

The source code for the model, released under the Apache 2.0 license, can be found on GitHub: https://github.com/ai-forever/Kandinsky-3

The model is available for download and use on Hugging Face: https://huggingface.co/ai-forever/Kandinsky3.1

Kubin, a web UI that plays the role of AUTOMATIC1111 for Kandinsky, is available for local use: https://github.com/seruva19/kubin

You can test Kandinsky's capabilities on the FusionBrain website: https://www.sberbank.com/promo/kandinsky/

A Telegram bot with extended functionality is also available: @kandinsky21_bot.

In this article, we'll take an in-depth look at the unique features of Kandinsky 3.1, compare it to other popular models, and discuss why it deserves a place on Civitai.

If you don't want to read about the model's development history and just want to see what Kandinsky 3.1 can do, skip to the section "Kandinsky 3.1 is the ideological continuation of the Kandinsky model".

A bit of development history

Kandinsky 2.0, the first model

On Nov 24, 2022, Sber AI released Kandinsky 2.0, the first multilingual text-to-image diffusion model, which understands queries in over 100 languages.

Kandinsky 2.0 generation for the mixed-language query "A teddy bear на Красной площади" ("A teddy bear on Red Square").

Training ran on a supercomputer and used 196 NVIDIA A100 GPUs with 80 GB of memory each. It took 14 days in total, or 65,856 GPU-hours: 5 days at 256×256 resolution, then 6 days at 512×512, and finally 3 days on the cleanest available data.
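The GPU-hour figure follows directly from the numbers quoted above. A quick check, assuming all 196 GPUs ran around the clock for the whole 14 days (the constant and variable names are just for illustration):

```python
# Figures quoted in the text above.
NUM_GPUS = 196
STAGE_DAYS = {"256x256": 5, "512x512": 6, "clean data": 3}

total_days = sum(STAGE_DAYS.values())
gpu_hours = NUM_GPUS * total_days * 24  # 24 hours per day on every GPU

print(total_days)  # 14
print(gpu_hours)   # 65856
```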

Already at that point the model could do inpainting and outpainting.

Examples of prompts in different languages:

Kandinsky 2.1 - Image Blending and Variation Generation

Kandinsky 2.1 Generations

Image Blending

The column on the left is the "style" image that we want to transfer to the original image via diffusion.

Original image

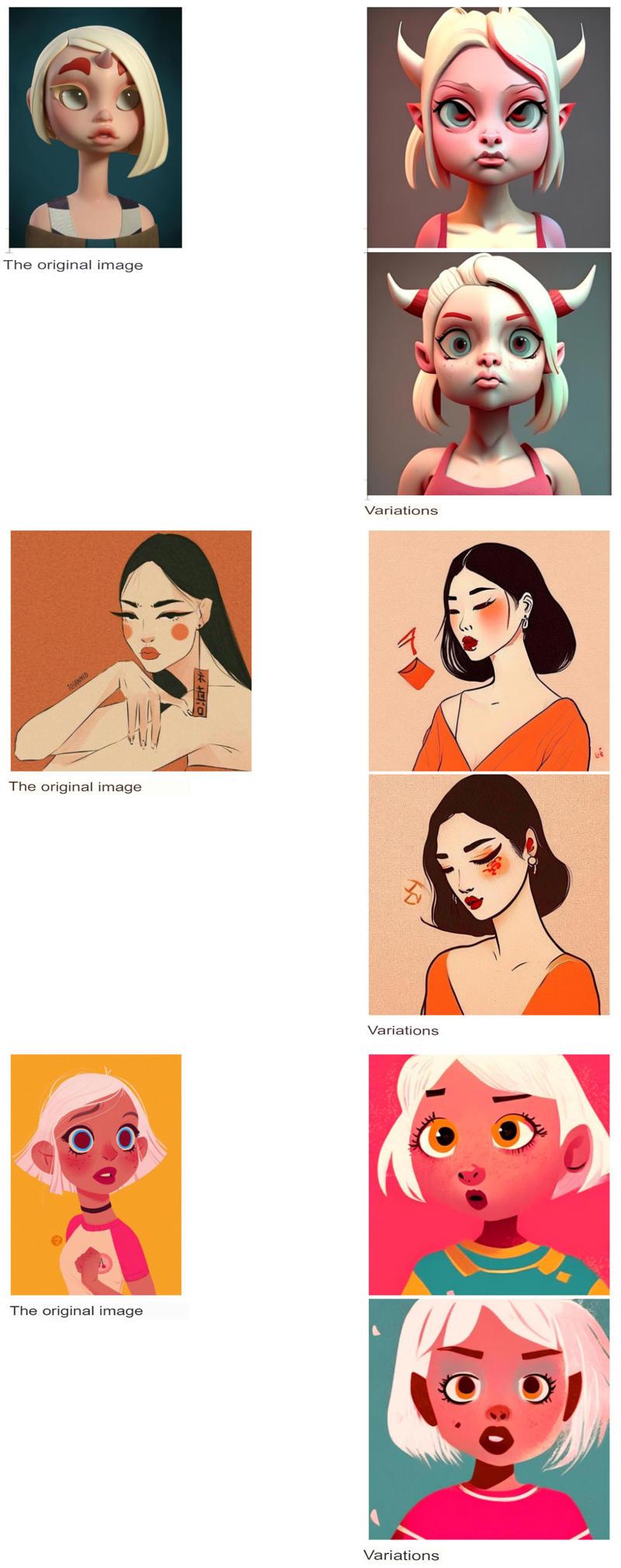

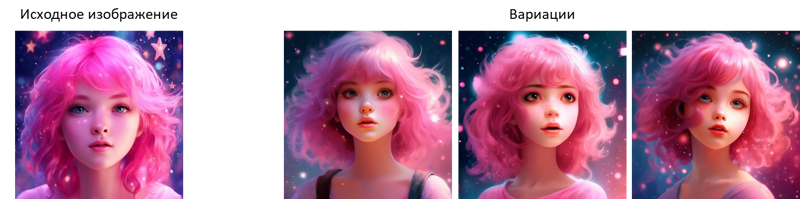

Synthesis of images similar to the reference image

The left column contains the original images; the remaining images are variations of them synthesized by the diffusion model.

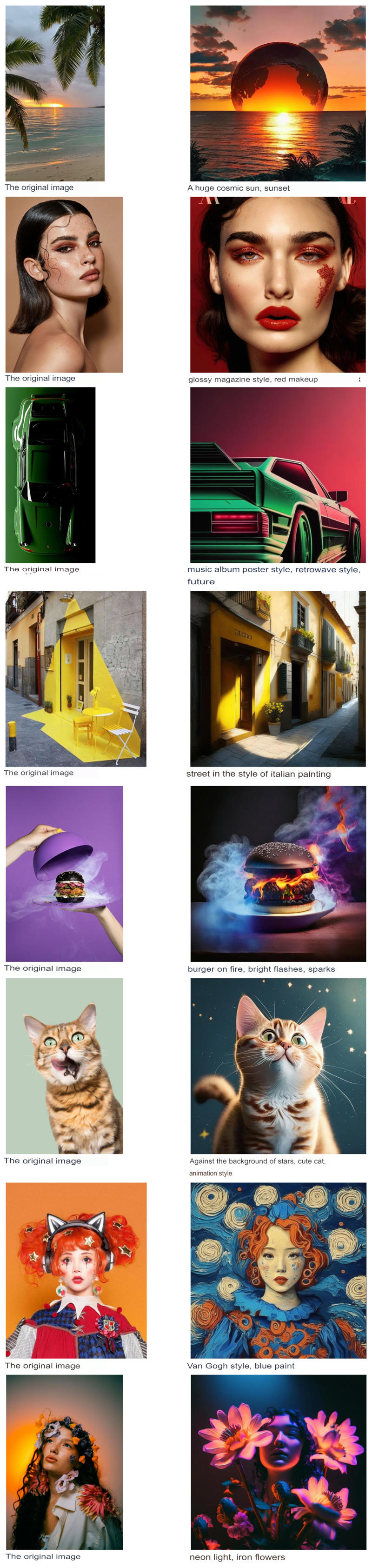

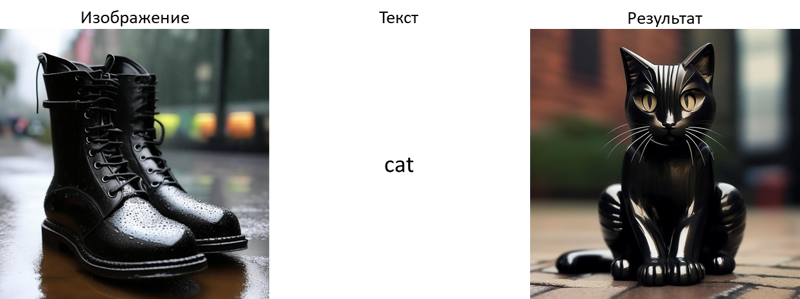

Modifying images by text

The source images are shown on the left, and the text description and the result of modifying the source image by that text are shown on the right.

Kandinsky 2.2 - a new step towards photorealism

generation resolution has now reached 1024 px on each side (in 2.1 it was 768 px);

generation can have any aspect ratio (in 2.1 there were only square generations);

generated images are now more photorealistic;

ControlNet functionality is now available, which adds the ability to make local changes to the image without changing the entire composition of the scene.

The main architectural change is that the visual encoder used to train the Image Prior model was replaced with the larger CLIP-ViT-G, which improved the quality of generated images. Because of the encoder replacement, we had to retrain the Image Prior (Diffusion Mapping) model (1 million iterations) and further fine-tune the diffusion U-Net (200 thousand iterations). Training used data at resolutions from 512 to 1536 pixels and with different aspect ratios.

ControlNet capabilities

An example is shown in the figure below: the authors use edges extracted by the Canny algorithm as an additional input condition on the scene composition.

Examples of ControlNet (Kandinsky 2.2) operation based on a depth map:

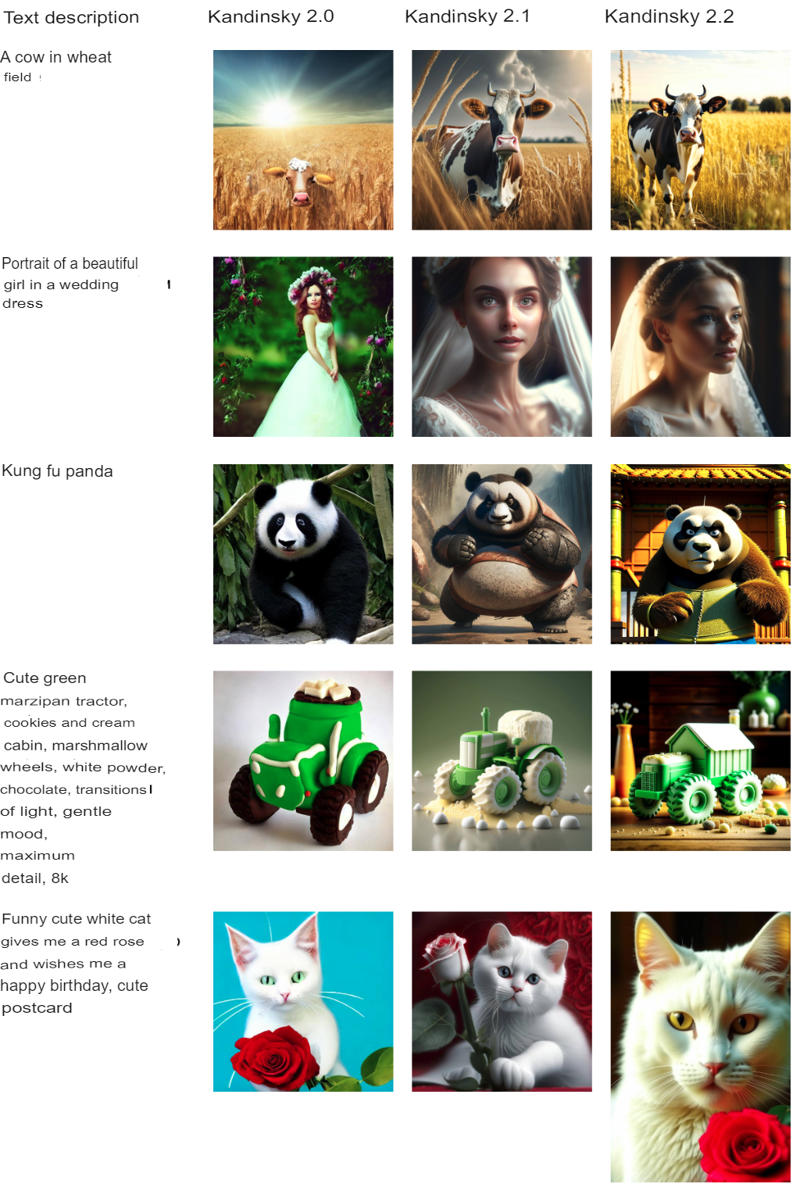
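To give a feel for what an edge-based condition looks like, here is a minimal pure-Python sketch. It is not the authors' preprocessing and not the full Canny algorithm: it only computes a Sobel gradient magnitude and applies a single threshold, whereas Canny also smooths the image, thins edges with non-maximum suppression, and uses two thresholds with hysteresis. The function name `edge_map` and the toy image are made up for illustration.

```python
def edge_map(gray, threshold=1.0):
    """Return a binary edge map (rows of 0/1) for a 2D grayscale image.

    Simplified stand-in for Canny: Sobel gradient magnitude plus one threshold.
    Border pixels are left at 0.
    """
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal and vertical Sobel responses.
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 1
    return edges

# Toy 5x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]
for row in edge_map(image):
    print("".join("#" if v else "." for v in row))
```

The resulting binary map keeps only the outline of the scene, which is the kind of signal a ControlNet can use to constrain composition while the text prompt controls content and style.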

Kandinsky 2.X Version Comparison

Examples of Kandinsky 2.2 Generations

This section shows Kandinsky 2.2 generations from very different domains and in a wide variety of styles: people, landscapes, abstractions, objects, interacting objects, and so on. The prompts for the generations are given in two languages. Best of all, the model can synthesize images from emoticons, and I encourage everyone to experiment with this.

Kandinsky 3.0, better than SDXL?

A year after releasing its first diffusion model, the team introduced a new version of its text-to-image model: Kandinsky 3.0.

The model has many technical changes that greatly improved generation quality; I recommend reading about them in the Kandinsky 3.0 materials listed under Sources at the end of this article.

Comparison of Kandinsky 3.0 model with Kandinsky 2.2 and SDXL models by number of parameters is shown in the table below:

| | Kandinsky 2.2 | SDXL | Kandinsky 3.0 |
| --------------------- | ---------------- | ---------------- | ---------------- |
| Model type | Latent Diffusion | Latent Diffusion | Latent Diffusion |
| Number of parameters | 4.6 B | 3.33 B | 11.9 B |
| Text encoder | 0.62 B | 0.8 B | 8.6 B |
| Diffusion Mapping | 1.0 B | - | - |
| Decoder (U-Net) | 1.2 B | 2.5 B | 3.0 B |
| MoVQGAN | 0.08 B | 0.08 B | 0.27 B |

Kandinsky 3.0 generation results and examples

Below are a few selected examples of Kandinsky 3.0 model generation (from texts in different domains).

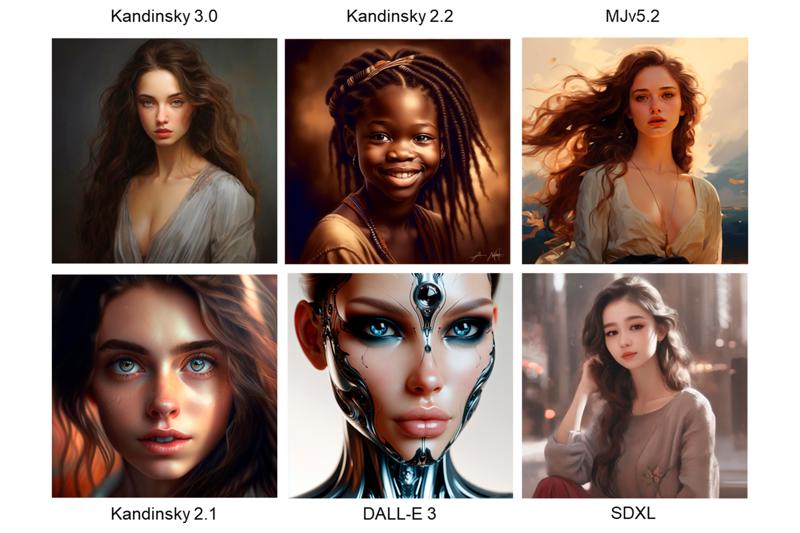

Results comparing Kandinsky 3.0 with other known text-to-image models

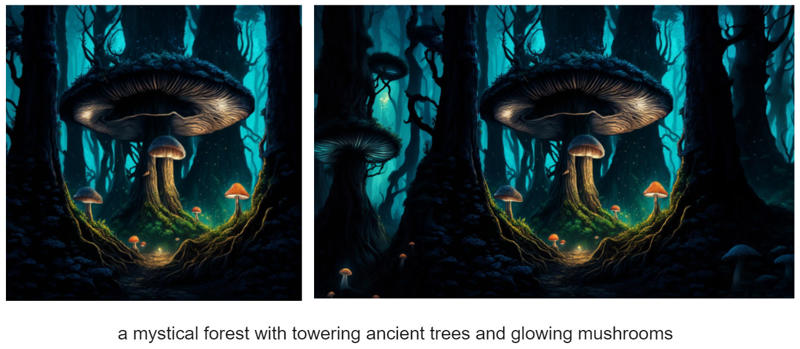

Examples of side-by-side comparison images:

Examples of popular model generation compared to Kandinsky 3.0:

A beautiful girl

A highly detailed digital painting of a portal in a mystic forest with many beautiful trees. A person is standing in front of the portal.

A man with a beard

A 4K dslr photo of a hedgehog sitting in a small boat in the middle of a pond. It is wearing a Hawaiian shirt and a straw hat. It is reading a book. There are a few leaves in the background.

Barbie and Ken are shopping

Extravagant mouthwatering burger, loaded with all the fixings. Highlight layers and texture

A bear in Russian national hat with a balalaika

Examples of Inpainting

Examples of Outpainting

Kandinsky 3.1 is the ideological continuation of the Kandinsky model

Kandinsky 3.1 includes several improvements:

distillation by the number of diffusion steps (Kandinsky 3.0 Flash);

Prompt improvement using the Neural-Chat-v3-1 language model;

IP-Adapter, which adds conditioning on an image in addition to text. Thanks to this, the image blending and image-plus-text mixing modes have returned; they existed in the Kandinsky 2.X versions, but worked there thanks to a dedicated image prior block;

ControlNet, a mechanism for additional conditioning (generation control) based on Canny edges, depth maps, etc.;

Inpainting - modification of the basic model to complete missing parts of the image by text;

SuperRes - a special diffusion model that increases the resolution of the image (so Kandinsky 3.1 can now generate 4K images).

In addition, the team trained a small version of the model, Kandinsky 3.0 Small (1B), which is easier and more convenient to experiment with.

Model acceleration

A serious problem with Kandinsky 3.0, as with all diffusion models, was generation speed. Generating a single image required 50 steps of the reverse diffusion process, that is, 50 passes through the U-Net with a batch size of 2 for classifier-free guidance. To solve this problem, we used the Adversarial Diffusion Distillation approach, first described in a paper from Stability AI, but with a number of significant modifications:

If pre-trained pixel-space models were used as the discriminator, the generated image would have to be decoded with the MoVQ decoder and gradients backpropagated through it, which would cause a huge memory overhead. That cost would not have allowed us to train the model at 1024 × 1024 resolution, and we wanted to keep the ability to generate high-resolution images. Therefore, as the discriminator we used the frozen downsampling part of the Kandinsky 3.0 U-Net, with trainable heads after each resolution-downsampling layer.

We added Cross Attention on text embeddings from FLAN-UL2 to the discriminator heads instead of adding a text CLIP embedding. This improved the understanding of the text by the distilled model.

We used the Wasserstein loss as the loss function. Unlike the hinge loss, it does not saturate, which avoids vanishing gradients in the early stages of training, when the discriminator is stronger than the generator.

We removed the regularization in the form of Distillation Loss, since according to our experiments it did not significantly affect the quality of the model.

We found that rather quickly the generator becomes stronger than the discriminator, which leads to learning instability. To solve this problem, we significantly increased the discriminator's learning rate. The discriminator had a learning rate of 1e-3, while the generator had a learning rate of 1e-5. To prevent divergence, we also used a gradient penalty as in the original work.

Training was performed on an "aesthetically pleasing" (hand-selected) dataset of 100K text-image pairs, which is a sub-set of the Kandinsky 3.0 pretrain dataset.
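The saturation argument behind the choice of loss can be illustrated on plain numbers. The sketch below is not the training code: it evaluates textbook forms of the hinge and Wasserstein discriminator losses on a single pair of scalar scores and estimates the derivative with respect to the fake score by finite differences. All function names are made up for the example.

```python
def hinge_d_loss(d_real, d_fake):
    """Hinge discriminator loss for one real and one fake score."""
    return max(0.0, 1.0 - d_real) + max(0.0, 1.0 + d_fake)

def wasserstein_d_loss(d_real, d_fake):
    """Wasserstein critic loss for one real and one fake score."""
    return d_fake - d_real

def grad_wrt_fake(loss_fn, d_real, d_fake, eps=1e-4):
    """Central finite-difference derivative of the loss w.r.t. the fake score."""
    return (loss_fn(d_real, d_fake + eps) - loss_fn(d_real, d_fake - eps)) / (2 * eps)

# A confident discriminator: real scored well above +1, fake well below -1.
d_real, d_fake = 3.0, -3.0
hinge_grad = grad_wrt_fake(hinge_d_loss, d_real, d_fake)
wass_grad = grad_wrt_fake(wasserstein_d_loss, d_real, d_fake)
print(f"hinge: {hinge_grad:.1f}, wasserstein: {wass_grad:.1f}")
```

Once the scores are beyond the hinge margins, the hinge loss is flat and carries no gradient, while the Wasserstein loss stays linear in the scores. That unbounded linearity is also why such a loss is usually paired with a regularizer like the gradient penalty mentioned above.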

As a result, Kandinsky 3.0 became almost 20 times faster: an image can now be generated in just 4 passes through the U-Net. Dropping classifier-free guidance also contributed to the speedup. In effect, Kandinsky 3.0 turned from a diffusion model into a GAN (Kandinsky 3.0 Flash) trained from a good weight initialization obtained in pretraining.
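A back-of-the-envelope count of U-Net evaluations shows where most of the speedup comes from. This sketch counts only U-Net work per image; the text encoder and the image decoder still run once per image, which is one plausible (assumed, not stated in the source) reason why the reported end-to-end figure is somewhat lower than the ratio computed here.

```python
BASE_STEPS = 50   # Kandinsky 3.0 sampling steps (from the text)
CFG_BATCH = 2     # conditional + unconditional input at every step
FLASH_STEPS = 4   # Kandinsky 3.0 Flash steps (from the text)
FLASH_BATCH = 1   # no classifier-free guidance

base_evals = BASE_STEPS * CFG_BATCH
flash_evals = FLASH_STEPS * FLASH_BATCH
unet_ratio = base_evals / flash_evals

print(base_evals, flash_evals, unet_ratio)  # 100 4 25.0
```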

However, this speedup came at the cost of text understanding quality, as side-by-side (SBS) comparison results show. SBS is conducted on a fixed basket of 2,100 prompts (100 prompts for each of 21 categories). Each generation is evaluated on visual quality (which of the two images you like better) and text relevance (which of the two images better matches the query). You can read more about the SBS methodology in the Kandinsky 3.0 article.
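For readers unfamiliar with side-by-side evaluation, the tallying step is simple. The sketch below assumes each assessor vote is recorded as the name of the preferred model; the vote list is invented purely for illustration and is not real evaluation data.

```python
from collections import Counter

CATEGORIES = 21
PROMPTS_PER_CATEGORY = 100
BASKET_SIZE = CATEGORIES * PROMPTS_PER_CATEGORY  # 2100 prompts

def win_rates(votes):
    """Share of votes won by each model; `votes` is a list of model names."""
    counts = Counter(votes)
    total = sum(counts.values())
    return {model: n / total for model, n in counts.items()}

# Made-up votes for one criterion (e.g. text relevance), for illustration only.
example_votes = ["base", "flash", "base", "base", "flash"]
rates = win_rates(example_votes)
print(BASKET_SIZE)
print(rates)
```

In a real study the same tally would be computed separately for visual quality and for text relevance, since a model can win on one criterion and lose on the other.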

Examples of images generated by the Kandinsky 3.0 Flash model:

Query Beautification

If you've used past versions of Kandinsky or other text-to-image models, you've probably noticed that the more detailed the query, the more beautiful and detailed the image. This is because the training set mostly contained fairly detailed image descriptions. In practice, however, most user queries are very short and say little about the objects to generate (understandably: not every user has time to craft the right prompt).

To solve this problem, Kandinsky 3.1 has a built-in query beautification option: a way of improving a user's query (adding details to it) with a large language model (LLM). Beautification works very simply: the language model receives an instruction asking it to improve the query, and its response is then fed to Kandinsky for generation.

We used Intel's neural-chat-7b-v3-1 (a fine-tuned Mistral-7B model) as the LLM, with the following system prompt:

### System:\nYou are a prompt engineer. Your mission is to expand prompts written by user. You should provide the best prompt for text to image generation in English. \n### User:\n{prompt}\n### Assistant:\n

Here {prompt} is the query that the user wrote.
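Filling the template is plain string formatting. A minimal sketch, assuming the `\n` sequences in the quoted template stand for real newline characters; the names `BEAUTIFY_TEMPLATE` and `build_llm_input` are hypothetical, and the call to the language model itself is left out.

```python
BEAUTIFY_TEMPLATE = (
    "### System:\n"
    "You are a prompt engineer. Your mission is to expand prompts written by user. "
    "You should provide the best prompt for text to image generation in English. \n"
    "### User:\n"
    "{prompt}\n"
    "### Assistant:\n"
)

def build_llm_input(user_query: str) -> str:
    """Insert the user's query into the template quoted above."""
    return BEAUTIFY_TEMPLATE.format(prompt=user_query.strip())

llm_input = build_llm_input("A hut on chicken legs")
print(llm_input)
```

The text the LLM generates after `### Assistant:` is then what gets passed to Kandinsky in place of the short original query.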

Examples of generations for the same query, with and without beautification, are shown below (using the Kandinsky 3.0 model).

"Lego figure at the waterfall": without LLM / with LLM (Kandinsky 3.0)

"Close-up photo of a beautiful oriental woman, elegant hijab-adorned with hints of modern vintage style": without LLM / with LLM (Kandinsky 3.0)

"A hut on chicken legs": without LLM / with LLM (Kandinsky 3.0)

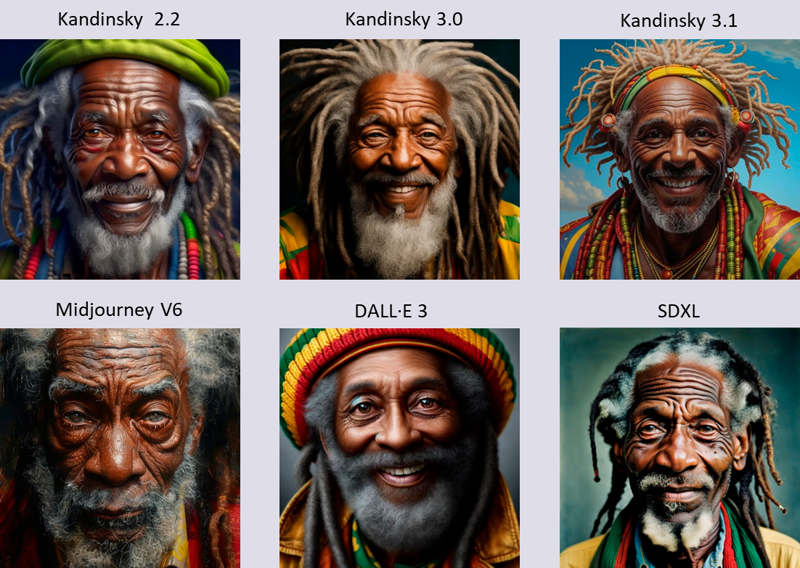

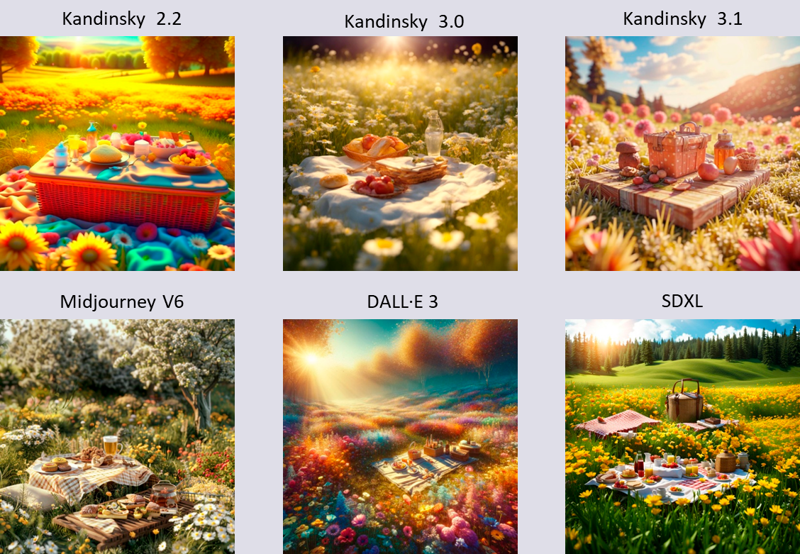

Comparison of Kandinsky 3.1 (Flash + Prompt improvement) with other models:

"Maximally realistic portrait of a jolly old gray-haired Negro Rastaman with wrinkles around his eyes and a crooked nose in motley clothes"

SD3:

"3D model, picnic on a bright flowering meadow, flooded with sun, depth of field, covered with glaze, bird's eye view, matte painting style"

SD3:

"Funny cute wet kitten sitting in a basin with soap foam, soap bubbles around, photography"

SD3:

"Tomatoes on a table, against the backdrop of nature, a still life painting depicted in a hyper realistic style"

SD3:

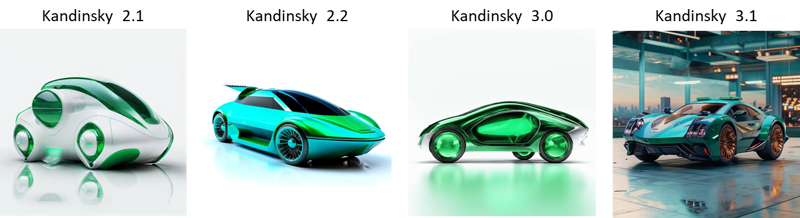

It is also interesting to trace the evolution of the Kandinsky models, starting with Kandinsky 2.1:

Image Editing and Text-Image Guidance

Unlike Kandinsky 3.0, Kandinsky 3.1 can generate an image not only from a text query, but also from visual guidance in the form of an input image, used alone or together with text. This allows you to edit an existing image, change its style, and add new objects to it. For this purpose, we used IP-Adapter, an approach that has demonstrated good results compared to traditional fine-tuning.

Supported inference options:

Image Variation. To make an image variation, we simply compute the image embedding with CLIP-ViT-L/14 and feed it into the model.

Image Blending. Here we compute an embedding for each image and sum them with specified weights, after which the result is fed into the model.

Mixing image and text. We compute image embeddings and feed them into the model together with the text, since we have kept the standard cross-attention on text.

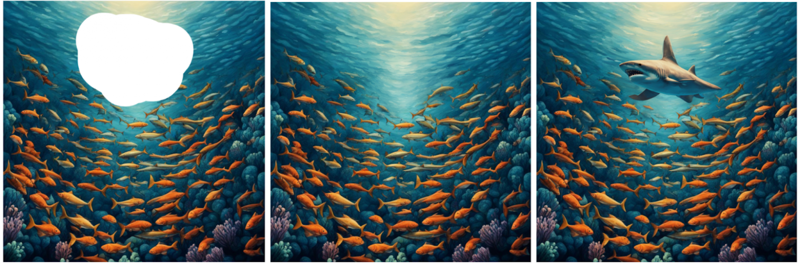
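The Image Blending option boils down to a weighted sum of embedding vectors. Here is a minimal sketch with toy 4-dimensional lists standing in for real CLIP image embeddings; `blend_embeddings` is a hypothetical helper, not part of the Kandinsky code base.

```python
def blend_embeddings(embeddings, weights):
    """Weighted sum of equally sized embedding vectors."""
    if len(embeddings) != len(weights):
        raise ValueError("need exactly one weight per embedding")
    dim = len(embeddings[0])
    if any(len(e) != dim for e in embeddings):
        raise ValueError("all embeddings must have the same length")
    return [sum(w * e[i] for e, w in zip(embeddings, weights)) for i in range(dim)]

# Toy vectors standing in for the embeddings of two source images.
cat = [1.0, 0.0, 2.0, 4.0]
dog = [3.0, 2.0, 0.0, 0.0]
mixed = blend_embeddings([cat, dog], [0.5, 0.5])
print(mixed)  # [2.0, 1.0, 1.0, 2.0]
```

Shifting the weights toward one image (for example 0.8 and 0.2) pulls the blended embedding, and so the generated picture, toward that source.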

Kandinsky 3.1 Inpainting

In Kandinsky 3.1 Inpainting, we focused on improving the quality of object generation. In Kandinsky 3.0, we trained the model to reconstruct an image from its original description. Because of this, the model reconstructs the original image very well, but it can fail when asked to create a different object in place of the old one. One way to fix this is to train on masks from object detection or segmentation datasets (as in Paint by Example or SmartBrush). With bounding-box masks, the model learns to generate from a text query describing the masked object rather than from a description of the whole picture. This improves quality in real use, because this is exactly how the inpainting model is used at inference. To make sure the model does not lose its ability to do "classical" inpainting (using full image descriptions), we balanced the training set: 50% of masks come from bounding boxes, and the remaining 50% are randomly selected, as in Kandinsky 3.0 Inpainting.

A white bear sitting on the ice (masked_image / Kandinsky 3.0 / Kandinsky 3.1)

A shark (masked_image / Kandinsky 3.0 / Kandinsky 3.1)
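The 50/50 balance between bounding-box masks and random masks can be sketched as a simple sampling rule. This is only an illustration: the source does not say what shape the random masks have, so a random rectangle is assumed here, and `sample_training_mask` and the example boxes are hypothetical.

```python
import random

def sample_training_mask(image_size, object_boxes, rng, bbox_prob=0.5):
    """Return (kind, box), where box is (x0, y0, x1, y1) in pixels.

    With probability `bbox_prob` an annotated object box is used,
    otherwise a random rectangle inside the image (an assumption).
    """
    width, height = image_size
    if object_boxes and rng.random() < bbox_prob:
        return "bbox", rng.choice(object_boxes)
    x0 = rng.randrange(0, width - 1)
    y0 = rng.randrange(0, height - 1)
    x1 = rng.randrange(x0 + 1, width + 1)
    y1 = rng.randrange(y0 + 1, height + 1)
    return "random", (x0, y0, x1, y1)

rng = random.Random(0)
boxes = [(10, 20, 110, 220), (300, 40, 420, 180)]  # made-up annotations
kinds = [sample_training_mask((512, 512), boxes, rng)[0] for _ in range(10_000)]
bbox_share = kinds.count("bbox") / len(kinds)
print(bbox_share)  # close to 0.5
```

For a bounding-box sample the text query would describe only the masked object; for a random mask it would be the description of the whole image, as in "classical" inpainting.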

Since only class names are used as text queries for such masks, the model may lose its ability to generate images from long queries. Therefore, we decided to enrich our dataset using LLaVA 1.5: after selecting the bounding box used as a mask, we fed the cropped image into LLaVA to get a textual description of that part of the image. This description was then used as the text query.

A mexican hat (masked_image / Kandinsky 3.0 / Kandinsky 3.1)

A fox is sitting in front of the fox and drinking cup of tea and talking about the love (masked_image / Kandinsky 3.0 / Kandinsky 3.1)

We also compared our new inpainting method with other models to quantify its quality. To do this, we randomly selected 1,000 images from the COCO dataset and regenerated one object in each image. Next, we ran the YOLO-X detector on the resulting images and computed its detection quality metrics. If a detector trained on real images can detect the generated object, we can conclude that the object looks natural enough. The metrics are as follows:

| Model / Metric | AP50↑ | AP small↑ | AP large↑ |
| -------------------- | ----- | --------- | --------- |
| SD | 0.276 | 0.033 | 0.253 |
| SDXL | 0.272 | 0.032 | 0.245 |
| SD2 | 0.238 | 0.032 | 0.205 |
| Kandinsky 3.0 (Ours) | 0.290 | 0.028 | 0.275 |
| Kandinsky 3.1 (Ours) | 0.306 | 0.027 | 0.296 |

KandiSuperRes

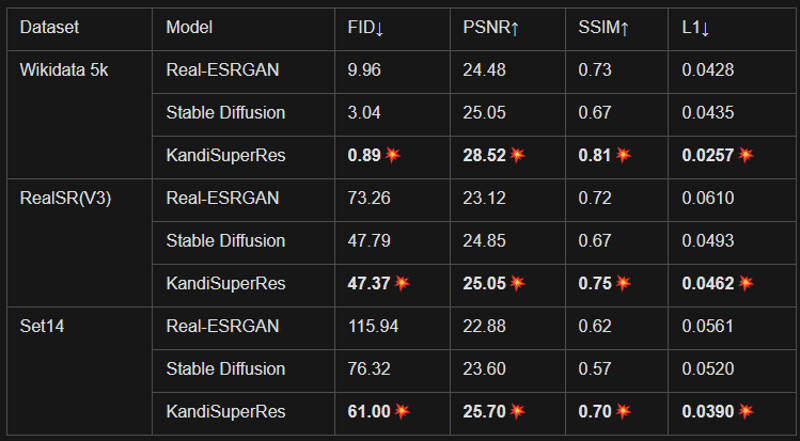

In the new Kandinsky 3.1 version, it is now possible to generate images in 4K resolution. The KandiSuperRes diffusion-based resolution enhancement model has been trained for this purpose.

Comparison of the Real-ESRGAN, Stable Diffusion and KandiSuperRes models:

Figure 1 shows examples produced by the KandiSuperRes, Stable Diffusion and Real-ESRGAN models at 1024 resolution. Figure 2 shows examples of KandiSuperRes generation at 4K resolution.

Comparison of the KandiSuperRes, Stable Diffusion and Real-ESRGAN models when increasing resolution from 256 to 1024

Example of KandiSuperRes generation when increasing resolution from 1024 to 4096

Conclusion

While other models such as Stable Diffusion are getting the recognition they deserve, Kandinsky unfortunately remains in the shadows. This article is a call to action to change that.

Kandinsky deserves a place on Civitai and to be recognized by a wide range of users. Its potential is enormous.

With open source code and an active community, Kandinsky has a good chance of becoming the new Stable Diffusion. Join the Kandinsky 3.1 movement, try, create, share your work, and together we will help this model reach its full potential!

Sources:

https://arxiv.org/abs/2310.03502

https://arxiv.org/abs/2312.03511

https://habr.com/ru/companies/sberbank/articles/701162/

https://habr.com/ru/companies/sberbank/articles/725282/

https://habr.com/ru/companies/sberbank/articles/747446/