Introduction

Latent noise is the basis for everything we do with Stable Diffusion. It is amazing to step back and consider what we are able to accomplish with it. Generally speaking, however, we are forced to generate that noise from a random number. What if we could control it?

I am not the first to use unsampling. It has been around for a long time and has been used in several different ways. Until now, however, I have generally not been satisfied with the results. I have spent several months finding the best settings, and I hope you enjoy this guide.

By running the sampling process in reverse (unsampling) with AnimateDiff/Hotshot, we can find noise that represents our original video. That noise makes any sort of style transfer easier. It is especially helpful for keeping Hotshot consistent, given its 8-frame context window.

This guide assumes you have installed AnimateDiff and/or Hotshot. The installation guides are available here:

AnimateDiff: https://civitai.com/articles/2379

Hotshot XL guide: https://civitai.com/articles/2601/

WORKFLOWS ARE ATTACHED TO THIS POST: DOWNLOAD THEM UNDER ATTACHMENTS IN THE TOP RIGHT CORNER

Link to the resource, if you want to post videos made with this workflow on Civitai: https://civitai.com/models/544534

Changelog

July 1, 2024: Updated the sampler suggestions based on input from @spacepxl on Reddit.

System Requirements

A Windows computer with an NVIDIA graphics card with at least 12GB of VRAM. This method requires no more VRAM than a normal AnimateDiff/Hotshot workflow, though it takes slightly less than twice as long.

Node Explanations and Settings Guide

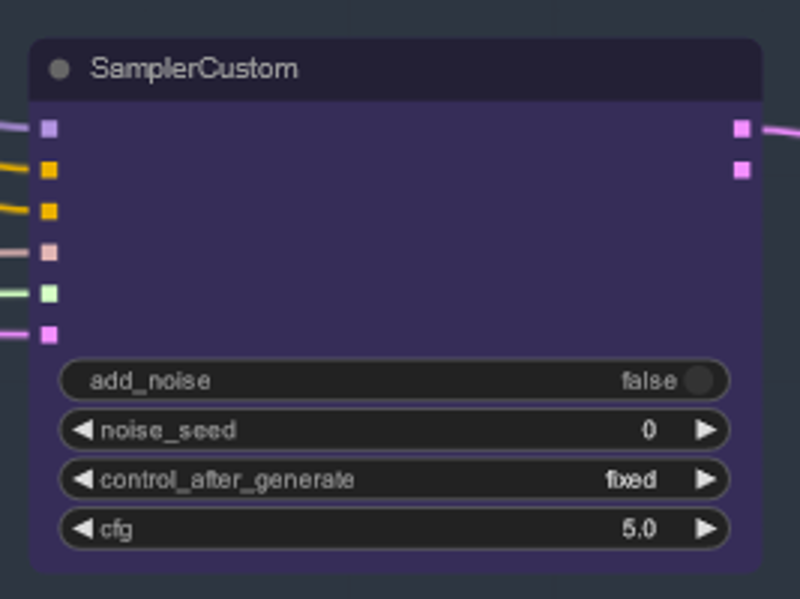

The core of this workflow is the Custom Sampler, which splits the settings you usually see in the regular k-sampler into separate pieces:

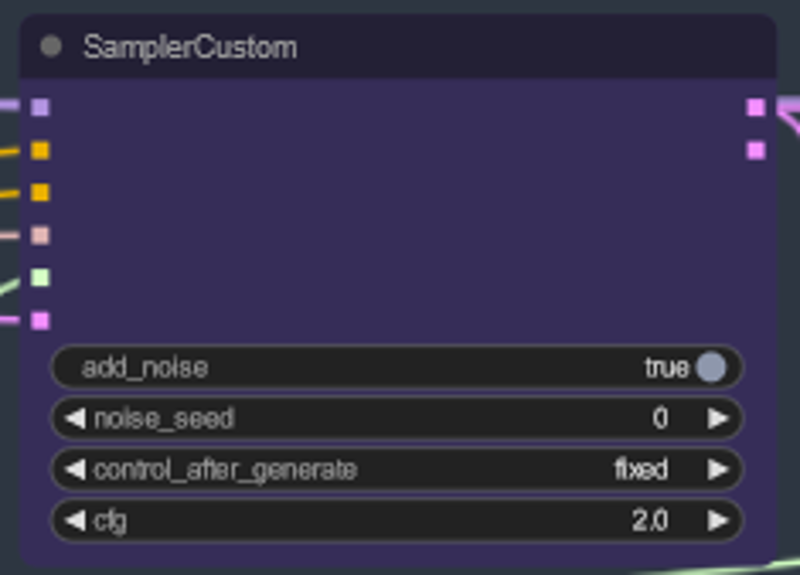

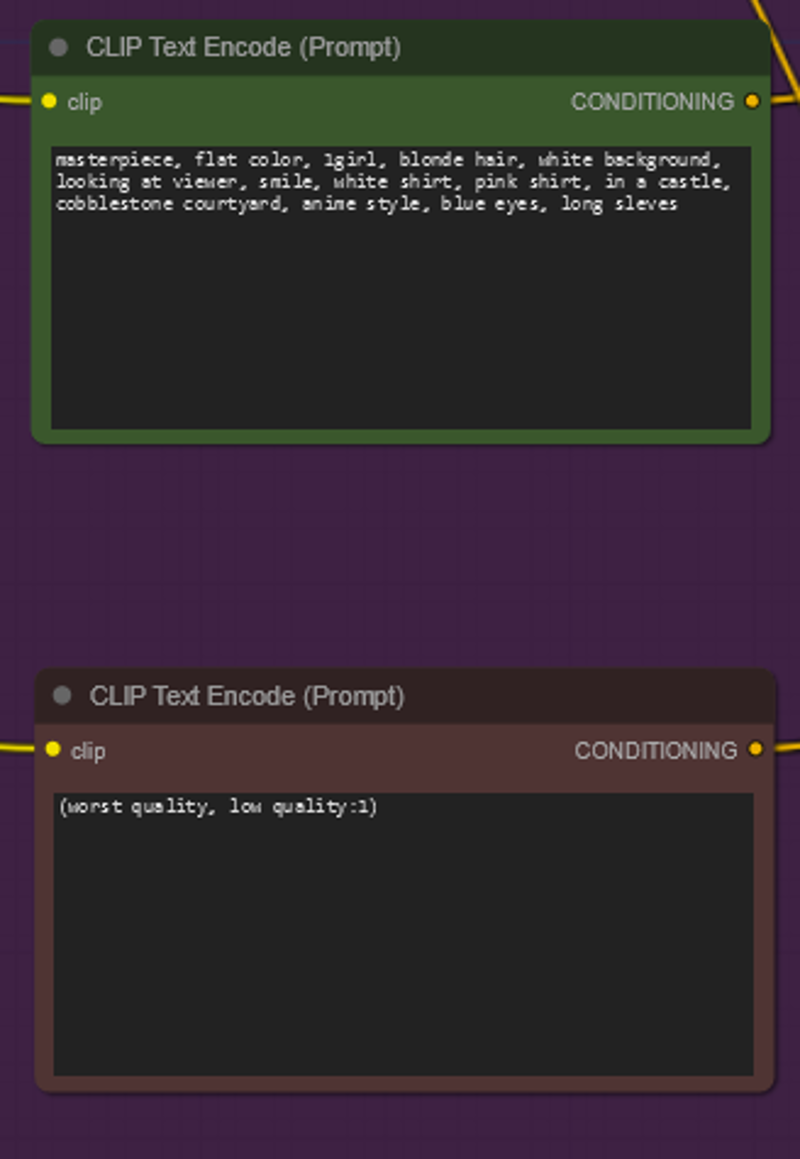

This is the main k-sampler node. For unsampling, the add noise and seed settings have no effect (as far as I am aware). CFG does matter: generally speaking, the higher the CFG on this step, the closer the video will look to your original.
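For reference, the CFG value is applied through the standard classifier-free guidance formula. That formula combines the model's prediction without the prompt (`uncond`) and its prediction with the prompt (`cond`). Below is a simplified sketch that uses plain Python lists in place of latents. `cfg_combine` is a hypothetical helper, not a ComfyUI function. At `cfg = 1` it returns the conditioned prediction, and larger values push further along the prompt direction.

```python
from typing import List


def cfg_combine(uncond: List[float], cond: List[float], cfg: float) -> List[float]:
    # Classifier-free guidance, applied elementwise: start from the
    # unconditional prediction and move cfg times along (cond - uncond).
    return [u + cfg * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.0, 1.0]   # toy "prediction without the prompt"
cond = [1.0, 1.5]     # toy "prediction with the prompt"
for cfg in (1.0, 2.0, 4.0):
    print(cfg, cfg_combine(uncond, cond, cfg))
```

Where the two predictions agree, CFG changes nothing. Where they disagree, higher CFG amplifies the difference.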

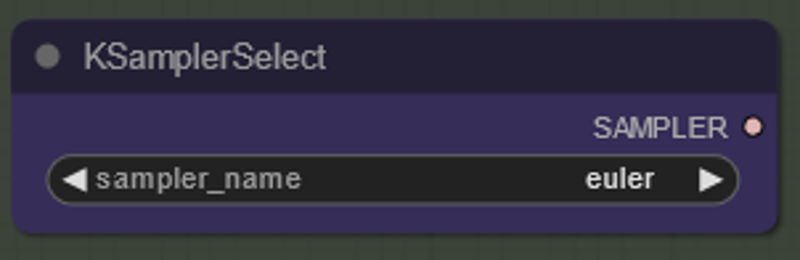

The most important thing is to use a sampler that converges! This is why we use euler rather than euler a (euler ancestral). The latter injects fresh noise at every step, which results in more randomness and instability. If you want to read more about this, I have always found this article useful. @spacepxl on Reddit suggests that DPM++ 2M may be the more accurate sampler, depending on the use case.
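To illustrate why convergence matters, the sketch below compares the plain Euler update with the Euler ancestral update on a toy one-dimensional problem. The following are assumptions for illustration only:

- The "model" is a hypothetical closed-form denoiser for Gaussian data (`toy_denoiser`).
- The schedule is a simple linear ramp.
- The update rules follow the general form used by k-diffusion-style samplers, not any specific ComfyUI code.

From the same starting noise, Euler gives the same result every time. Euler ancestral's output also depends on the random seed, which is what you want to avoid when mapping a video to noise and back.

```python
import math
import random

MU, S = 0.5, 1.0  # hypothetical toy data: Gaussian with mean MU and std S


def toy_denoiser(x: float, sigma: float) -> float:
    # Closed-form ideal denoiser for the toy Gaussian data (stand-in for the model).
    return MU + (S * S / (S * S + sigma * sigma)) * (x - MU)


def euler_step(x: float, sigma: float, sigma_next: float) -> float:
    # Deterministic: the same x always maps to the same next x.
    d = (x - toy_denoiser(x, sigma)) / sigma
    return x + d * (sigma_next - sigma)


def euler_ancestral_step(x: float, sigma: float, sigma_next: float,
                         rng: random.Random) -> float:
    # Step down to sigma_down deterministically, then inject fresh noise of
    # size sigma_up (the eta = 1 split used by k-diffusion-style samplers).
    sigma_up = min(sigma_next,
                   math.sqrt(sigma_next**2 * (sigma**2 - sigma_next**2) / sigma**2))
    sigma_down = math.sqrt(max(sigma_next**2 - sigma_up**2, 0.0))
    d = (x - toy_denoiser(x, sigma)) / sigma
    x = x + d * (sigma_down - sigma)
    return x + rng.gauss(0.0, 1.0) * sigma_up


SIGMAS = [10.0 * (1 - i / 20) for i in range(21)]  # linear 10 to 0, 20 steps


def run_euler(x: float) -> float:
    for s, s_next in zip(SIGMAS, SIGMAS[1:]):
        x = euler_step(x, s, s_next)
    return x


def run_euler_ancestral(x: float, seed: int) -> float:
    rng = random.Random(seed)
    for s, s_next in zip(SIGMAS, SIGMAS[1:]):
        x = euler_ancestral_step(x, s, s_next, rng)
    return x


start = 3.0  # the same starting noise for every run
print("euler (twice):   ", run_euler(start), run_euler(start))
print("euler a (seed 1):", run_euler_ancestral(start, 1))
print("euler a (seed 2):", run_euler_ancestral(start, 2))
```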

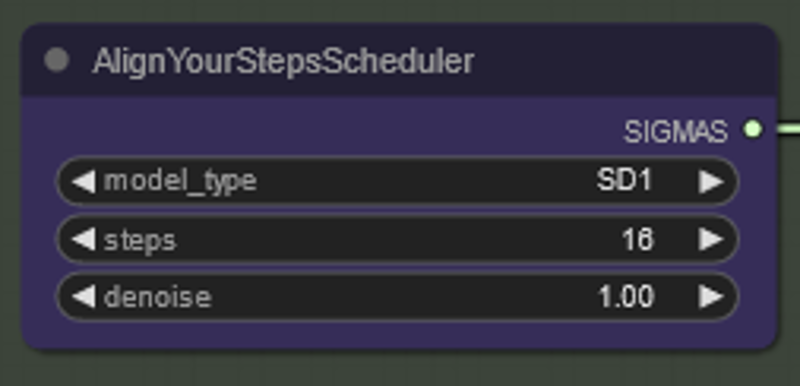

Any scheduler will work fine here. AYS (Align Your Steps), however, gets good results with only 16 steps, so I have opted to use it to reduce compute time.

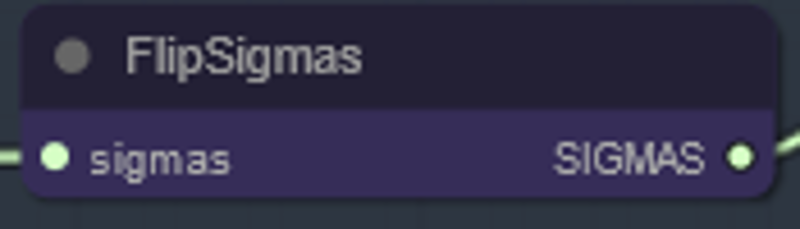

This is the Magic node that causes unsampling to occur!
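Here is a toy, one-dimensional sketch of unsampling. The following are assumptions for illustration only:

- The "model" is the closed-form ideal denoiser for Gaussian data (hypothetical `toy_denoiser`).
- The schedule is a linear ramp from 10 to 0 rather than AYS.
- `flip_sigmas` is a hypothetical helper, loosely modeled on a sigma-reversing node such as ComfyUI's FlipSigmas. I am assuming that reversal is the role of the node pictured above.

Running Euler along the reversed schedule carries the clean latent up to high noise. Running Euler back down the normal schedule, with no extra noise added, lands close to where we started.

```python
MU, S = 0.5, 1.0  # hypothetical 1-D "dataset": Gaussian with mean MU, std S


def toy_denoiser(x: float, sigma: float) -> float:
    # Ideal denoiser for the Gaussian toy data (stand-in for the real model).
    return MU + (S * S / (S * S + sigma * sigma)) * (x - MU)


def flip_sigmas(sigmas):
    # Reverse the schedule so it runs from low noise to high noise. A sigma of
    # exactly 0 would be divided by, so nudge it to a tiny positive value.
    flipped = list(reversed(sigmas))
    if flipped[0] == 0.0:
        flipped[0] = 1e-4
    return flipped


def euler(x: float, sigmas) -> float:
    # Plain (non-ancestral) Euler: deterministic, works in either direction.
    for s, s_next in zip(sigmas, sigmas[1:]):
        d = (x - toy_denoiser(x, s)) / s
        x = x + d * (s_next - s)
    return x


steps = 1000
sigmas = [10.0 * (1 - i / steps) for i in range(steps + 1)]  # 10 down to 0

original = 2.0                                # "clean latent" of a source frame
noise = euler(original, flip_sigmas(sigmas))  # unsample: clean to noisy
restored = euler(noise, sigmas)               # resample, no extra noise added
print(f"unsampled latent: {noise:.3f}")
print(f"restored: {restored:.3f} (original {original})")
```

The small leftover error comes from Euler being a first-order method. In this toy it shrinks as the step count increases.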

Prompting matters quite a bit in this method, for reasons I don't fully understand. A good prompt can really improve the coherence of the video, especially the more you want to push the transformation. For this example, I have fed the same conditioning to the unsampler as to the sampler. This generally works well, but nothing stops you from using blank conditioning here instead. I find blank conditioning helps improve the style transfer, perhaps at the cost of a bit of consistency.

For resampling, it is important to have add noise turned off. Setting empty noise in the AnimateDiff sample settings has the same effect; I have done both in my workflow. If you leave add noise on, you will get a static result (at least with default settings). Beyond that, I suggest starting with a fairly low CFG combined with weak ControlNet settings, as that seems to give the most consistent results.
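Here is one plausible explanation for the static result, offered as an assumption rather than a verified account of ComfyUI internals. When add noise is on, a k-diffusion-style sampler roughly adds fresh seeded noise, scaled by the first sigma, to the input latent. If that input is already unsampled noise at about that level, the total noise ends up about √2 times what the schedule expects. That plausibly leaves residual noise the sampler never removes. The sketch below only checks that arithmetic with Gaussian stand-ins; `sigma_max = 14.6` is a hypothetical value.

```python
import random
import statistics

rng = random.Random(0)
sigma_max = 14.6  # hypothetical first sigma of the schedule
n = 100_000

# Assumption: the unsampled latent behaves roughly like Gaussian noise with
# standard deviation sigma_max (the level the sampler expects to start from).
unsampled = [rng.gauss(0.0, sigma_max) for _ in range(n)]

# With "add noise" on, fresh noise scaled by the first sigma is added on top.
with_added_noise = [x + rng.gauss(0.0, 1.0) * sigma_max for x in unsampled]

ratio = statistics.pstdev(with_added_noise) / statistics.pstdev(unsampled)
print(f"noise level relative to what the schedule expects: {ratio:.3f}")
```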

The rest of my settings are personal preference. I have simplified this workflow as much as I think is possible.

Workflow Information

I have provided both an SD1.5 and an SDXL workflow. Both are prompted to work with the provided sample video (from Pexels).

Important Notes/Issues

I will put common issues in this section:

Flashing - In my workflows, I have decoded and previewed the latents created by unsampling. If you look at those previews, you will see some with obvious color abnormalities. I am unsure of the exact cause, and generally they do not affect the results. They are especially apparent with SDXL. However, they can sometimes cause flashing in your video. I think the main cause is the ControlNets, so reducing their strength can help. So can changing the prompt or even altering the scheduler a bit. I still struggle with it at times; if you have a solution, please let me know!

DPM++ 2M can sometimes reduce flashing.

Where to Go From Here?

This feels like a whole new way to control video consistency, so there is a lot to explore. If you want my suggestions:

Combining/masking noise from several source videos.

Adding IPAdapter for consistent character transformation.
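As a starting point for combining noise from several source videos, here is a hypothetical sketch of compositing two unsampled noise latents with a mask. Flat Python lists stand in for latent tensors. `masked_noise_blend` and its `preserve_variance` option are illustrative helpers, not nodes from the workflow. The rescaling assumes the two noises are independent and unit-variance, which real unsampled latents may not be, so treat it as an experiment rather than a recommendation.

```python
import math
from typing import List


def masked_noise_blend(noise_a: List[float], noise_b: List[float],
                       mask: List[float],
                       preserve_variance: bool = False) -> List[float]:
    # Hypothetical helper: mask = 1 keeps source A's noise, mask = 0 keeps B's.
    if not (len(noise_a) == len(noise_b) == len(mask)):
        raise ValueError("noise_a, noise_b and mask must have the same length")
    out = []
    for a, b, m in zip(noise_a, noise_b, mask):
        mixed = m * a + (1.0 - m) * b
        if preserve_variance:
            # For independent unit-variance noise, a linear mix has variance
            # m^2 + (1 - m)^2 < 1 at soft mask values; rescale it back to 1.
            mixed /= math.sqrt(m * m + (1.0 - m) * (1.0 - m))
        out.append(mixed)
    return out


source_a = [0.8, -1.2, 0.3, 1.5]  # stand-ins for two unsampled latents
source_b = [-0.4, 0.9, -1.1, 0.2]
mask = [1.0, 1.0, 0.5, 0.0]       # A, A, soft seam, then B
print(masked_noise_blend(source_a, source_b, mask))
print(masked_noise_blend(source_a, source_b, mask, preserve_variance=True))
```

With a hard 0/1 mask, the blend is a plain per-element selection, and the variance question only arises along soft edges.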

In Closing

I hope you enjoyed this tutorial. If you did, please consider subscribing to my YouTube/Instagram/TikTok/Twitter (https://linktr.ee/Inner_Reflections)

If you are a commercial entity and want some presets or training, please contact me on Reddit or on my social accounts (Instagram and Twitter seem to have the best messaging, so I mostly use those).

If you’re going deep into AnimateDiff, you’re welcome to join this Discord for people who are building workflows, tinkering with the models, creating art, etc.

(If you come to the Discord with issues, please find the adsupport channel and post there so that the discussion is in the right place.)

Special Thanks

Kosinkadink - for making the nodes that make AnimateDiff possible

Aakash and the Hotshot-XL team

The Banodoco Discord - for the support and technical knowledge to push this space forward

![[GUIDE] Unsampling for AnimateDiff/Hotshot - An Inner-Reflections Guide](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/d398be3e-8344-4c87-afb6-c2ef8ec4f1b3/width=1320/Video_00013.jpeg)