Hello all,

My name is Wolfgang Black. I’m a lead AI Engineer here at Civitai, working in the background with other devs on building a safe and exciting experience for all our users. I’m a scientist at heart, having worked in a few different industries: High Energy Density Physics, consumer electronics and the vape industry, and Machine Learning in the banking industry. Because of my background and experience focusing on fundamentals, when I came to Civitai I knew I wanted to share our machine learning methodologies with the larger community. So this is the start of the ML/AI team sharing our projects, goals, challenges, successes, and failures with you. I’d like these articles to be informative and helpful on your own machine learning journeys, as well as serve as examples of real-world problems beyond image generation.

In this series of articles I’ll be discussing building a part of our moderation pipeline, the machine learning models we’ve implemented, and I’ll share out the data so anyone can experiment on their own!

What are we trying to solve?

At Civitai we receive hundreds of thousands of images, either from our generation pipeline, where users experiment with different checkpoints and LoRAs, or through ingestion when users share their locally generated images. We also receive a ton of data from users in training datasets, which they can use to create their own LoRAs onsite. When users want to make this data public and share it with the community, we at Civitai have to make sure we serve those images to the appropriate audience. One way we do this is with image ratings that mimic movie ratings. Users can control the nature of the content they’ll see by toggling specific ratings on or off.

To keep our users’ browsing experience pleasant, we want to make sure our ratings are accurate and representative of the content. Currently we use a system of tags: each tag is assigned a numeric value, and each rating has a maximum allowable threshold. If the tags push the numeric value over a rating’s threshold, the rating graduates to a more restrictive level. However, we’re interested in seeing whether we can run a smaller or simpler architecture than a tagger to determine the rating. As such, I’ve tried a few different architectures, modalities, and strategies to build an end-to-end pipeline that correctly classifies images with the movie ratings.
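To make the tag-and-threshold idea concrete, here is a minimal sketch. The tag names, scores, and thresholds are all hypothetical, and summing the tag scores is an assumption on my part; the article only says that tags carry numeric values and that crossing a threshold moves the image to a more restrictive rating.

```python
# Hypothetical tag scores and thresholds (illustrative only, not production values).
TAG_SCORES = {"landscape": 0, "swimsuit": 2, "blood": 3, "nudity": 6}

# (rating, maximum allowable score), ordered from least to most restrictive.
RATING_THRESHOLDS = [("PG", 1), ("PG13", 3), ("R", 5), ("X", 8)]
MOST_RESTRICTIVE = "XXX"


def rate_from_tags(tags):
    """Return the least restrictive rating whose threshold the total score fits under.

    Assumption: the image score is the sum of its tag scores, and
    unknown tags contribute nothing.
    """
    total = sum(TAG_SCORES.get(tag, 0) for tag in tags)
    for rating, max_score in RATING_THRESHOLDS:
        if total <= max_score:
            return rating
    # The score exceeded every threshold, so use the most restrictive rating.
    return MOST_RESTRICTIVE


print(rate_from_tags(["swimsuit", "blood"]))
```

With these made-up numbers, a single borderline tag can stay in a mild rating while a combination of tags pushes the image up a level, which is the "graduating" behavior described above.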

What is Data?

Every machine learning model is a function of its data. The adage is “Garbage in, Machine Learning Model out” after all. In an ideal project, the machine learning (ML) practitioners would be able to view and confirm the label of each piece of data the models are trained on. They'd also make sure the data is fully representative of the type of data the model will see and act on in a production environment. However, today ML models are trained on millions of pieces of data. Even foundational Computer Vision datasets like COCO or ImageNet have been found to contain surprising rates of mislabeled data. Models are also expected to generalize well to unseen data, or data that is only indirectly related to the training datasets. In an effort to build models for realistic use cases, practitioners do their best to ensure good labels and clean, clear data.

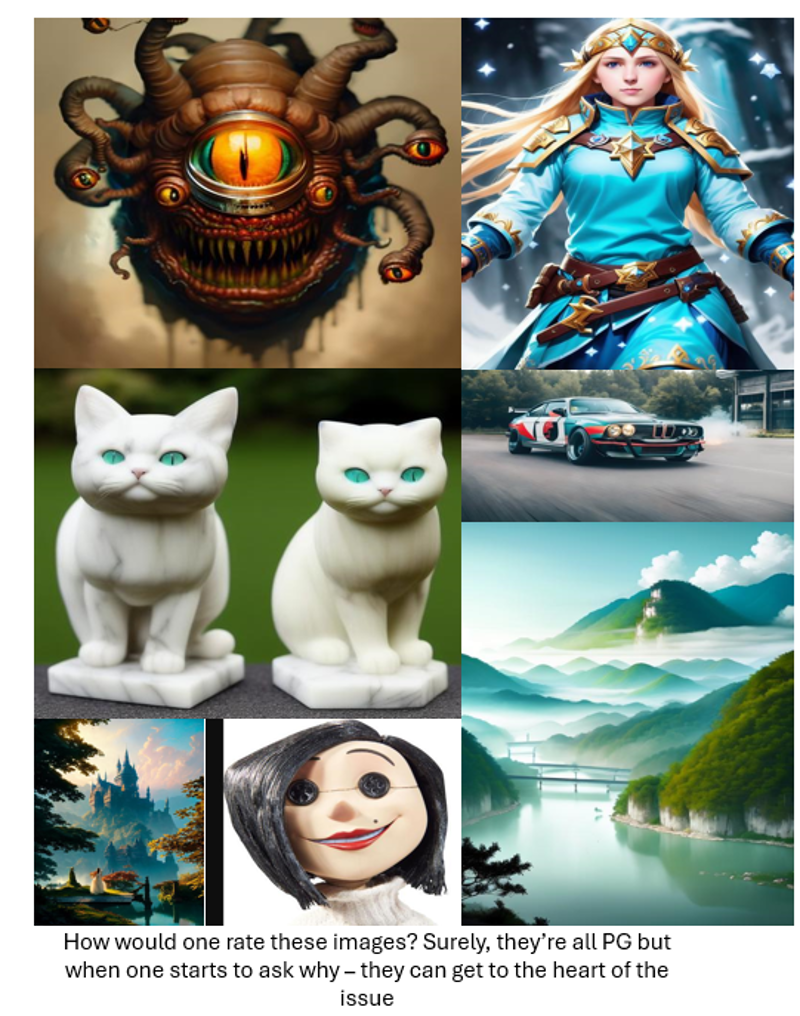

What does this mean? It means we want as many well-labeled examples as possible that represent the data the model will see. However, labels for things like ratings can be nebulous and complicated. For our project we decided to try to put images into movie-like ratings, so an image would have a single label of PG, PG13, R, X, or XXX. However, finding these labels can prove to be very challenging!

What's in a rating?

One may think: well, if you need labeled data, why not use the labels that currently exist? For one, there are thousands of images a day flagged by users or moderators which need rating reassignment, so we know the current method isn’t perfect. Also, our user base is creative! As they create never-before-seen images, the output of the tagging model we’re using can drift further and further from the true tags.

So does this mean machine learning can tackle this problem if a widely used tagger has challenges? Of course. After all, our users and moderators can see an image and acknowledge that the rating is correct or needs to be changed. We just need a model we can train and update based on the correct data, while keeping the training distribution representative of the actual data we’re going to be labeling!

If we can’t trust our current method, can we trust our moderators? We did try this: our fine moderators offered up a few thousand images. However, they hadn’t been labeling images originally, and as one can imagine, one man's smut is another man's prime time television. We found that our moderators were conservative in some places and very flexible in others! An interesting observation was that men’s abs tended to lean toward an “R” rating, whereas women’s abs and stomachs could be “PG” or “PG13”! This difference in rating levels can depend heavily on the person, the time of day, what images/rating levels they’ve been looking at recently, gender, kinks, etc. So while we had enough data to demo a model, we quickly learned that different moderators had different opinions of the ratings, and that the model would typically disagree with any one moderator on different kinds of images.

Again, we had to ask ourselves: For whom are we rating these images? Our community should help determine what category an image falls into, since they’ll be heavily impacted by what they see. To enlist the help of our community we opened up a data rating game to our users, and y’all put in some heavy work! We had hundreds of thousands of labels in just a few short months.

To create the dataset we’ve used so far for these classifications, we looked at all the reviewed data and applied a majority rule. A rating was considered the ‘true label’ if the image had been seen 3 or more times and that rating had been selected >=50% of the time. This left us with some 35 thousand images (as of May 2024). The dataset consisted of the images, the prompts (where available), the tags from our tagger, and the current rating. From here, we could decide what kind of modeling we wanted to do.
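The majority rule above can be sketched in a few lines. This is a minimal illustration rather than our production code: the function and constant names are made up, and treating an exact tie for the top spot as "no label" is an assumption, since a 50% cutoff alone does not say what to do when two ratings each get half the votes.

```python
from collections import Counter

MIN_VOTES = 3    # an image must have been seen at least this many times
MIN_SHARE = 0.5  # the winning rating needs at least this share of the votes


def majority_label(votes):
    """Return the majority rating for one image, or None if no label qualifies."""
    if len(votes) < MIN_VOTES:
        return None
    ranked = Counter(votes).most_common(2)
    top_rating, top_count = ranked[0]
    # Assumption: an exact tie for first place yields no label.
    if len(ranked) > 1 and ranked[1][1] == top_count:
        return None
    if top_count / len(votes) >= MIN_SHARE:
        return top_rating
    return None


print(majority_label(["PG", "PG", "R"]))
print(majority_label(["PG", "R", "X"]))
```

Images that return None simply stay out of the training set until they collect more votes or a clearer consensus.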

Modality? I hardly know thee…

What is modality for data? Well, modality just means the kind of data we're going to use for our ML model. I mentioned the dataset contained the prompt and tags, which are natural language text data. What's interesting is that in the research rater game users vote ONLY on the image itself. Anyone familiar with diffusion models and text-to-image generation knows that a seemingly innocent prompt can generate a range of images from tame to racy depending on seed, steps, LoRAs, or the checkpoint. Even on the same checkpoint, the same prompt can take someone from appropriately clothed to scantily clad given the right generation settings.

This makes labeling the prompts difficult. After all, “1girl solo” doesn’t seem like it warrants an “X” rating, or even an “R” rating. But if the generation suddenly reveals a woman in her underwear or even less, that definitely changes the rating. There are also different prompting styles: some users prompt with tags, while others prompt with natural language or story-like prompts. The latter are typically easier to label, though there is still a level of stochasticity in the generation. This again becomes more complicated with trigger words in LoRAs, or popular quality phrases in models like Pony. However, any prompter knows that you can sort of control the level of content through semantic control. That is, by specifying a woman a few different times you can control the generation of a woman, or by prompting specific scenes through multiple details you can encourage more of those types of details in the final product. Thus, while there is randomness across the language and the actual latent space, these models WERE trained on some semantic understanding… Does this indicate that there is a reasonable signal in the prompt?

Similarly, how about the tags? The tags are generated by a few different models that take in the images and output text. We then take that text and algorithmically process it into a score. Can we instead use a machine learning algorithm, as opposed to a rule-based algorithm, to take advantage of this modality? As such, when exploring modeling efforts we wanted to explore all available modalities: image, text, and the combination of them.

To consider the different modalities, we need to acknowledge the challenges associated with each one. I've already mentioned that the text input can depart from the generated image. We may be able to account for this by using some coherence score, that is, a metric of similarity between the text latents and the image latents. However, the goal is to use all available data to classify the image/media, and by focusing only on related or similar text/images we could end up neglecting a large portion of the data distribution the model will face in production. For our case, we didn't exclude any text based on its similarity to the image. The benefit of using text is that there are tons of incredible lightweight transformers and recurrent nets, already trained on large corpora of text and carrying a high level of semantic understanding, that can be used easily and at little computational cost.
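As an illustration of what such a coherence score could look like, here is a minimal sketch using cosine similarity between two embedding vectors. It assumes the text and image embeddings come from some joint text-image encoder (a CLIP-style model would be one option) and live in the same space; the toy vectors below are made up, and this is not a description of anything we run in production.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors, in [-1, 1]."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # A zero vector has no direction; treat it as "no similarity".
        return 0.0
    return dot / (norm_a * norm_b)


# Toy embeddings standing in for encoder outputs (hypothetical values).
text_embedding = [0.2, 0.9, 0.1]
image_embedding = [0.25, 0.8, 0.05]
print(cosine_similarity(text_embedding, image_embedding))
```

A score near 1 would suggest the prompt describes the image well, while a low score would flag pairs where the generation drifted from the text. As noted above, we chose not to filter on a score like this.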

The challenge with the tag data is that it is the output of a widely used model, trained on data unknown to us, at a time well before our own image generations, and inherently controlled outside our ecosystem. This isn't uncommon, but it is worth mentioning. As our community sees new trends, new styles of generation and art, and new subjects, the tags may be outdated or may misrepresent specific concepts within the images. However, if we wanted to build our own tagger we'd be doing essentially the same project, except instead of 5 unique classes we'd have thousands of interrelated (multilabel) classes. The benefit of the tags is that they form a smaller latent space, we're already using them for rating levels (albeit abstracted through a different algorithm), and the community is already aware of the tag-based system.
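To show the difference between the two label spaces, here is a small sketch of how tags and ratings might be encoded for a model. The tag vocabulary is hypothetical and tiny; a real tagger vocabulary would have thousands of entries. The tags become a multi-hot input vector, while the rating is a single class index out of five.

```python
RATINGS = ["PG", "PG13", "R", "X", "XXX"]

# Hypothetical mini vocabulary, for illustration only.
TAG_VOCAB = ["1girl", "solo", "outdoors", "swimsuit"]
TAG_INDEX = {tag: i for i, tag in enumerate(TAG_VOCAB)}


def encode_tags(tags):
    """Multi-hot vector: 1 where a known tag is present, 0 elsewhere."""
    vector = [0] * len(TAG_VOCAB)
    for tag in tags:
        index = TAG_INDEX.get(tag)
        if index is not None:  # tags outside the vocabulary are ignored
            vector[index] = 1
    return vector


def encode_rating(rating):
    """Single class index for the five-way rating problem."""
    return RATINGS.index(rating)


print(encode_tags(["solo", "swimsuit"]), encode_rating("PG13"))
```

Note how a tag the vocabulary has never seen is silently dropped here, which is a small-scale version of the drift problem described above.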

Is there a single modality that can be used to classify these images? If so, it's likely not prompts, as not all images have prompts associated with them. That is, users can share prompts with us when they upload images or decide to keep them to themselves. So then, do we use only images or tags? Can we mix modalities in different ways to determine the best way to get at a rating?

Conclusion

In this article we mostly discussed the different challenges associated with the data collection phase of a typical ML problem. The main takeaways can be summarized as:

The importance of high-quality, representative data cannot be overstated. This is a challenge that can be seen throughout the history of machine learning and persists today. As The Economist famously put it, "The world's most valuable resource is no longer oil, but data."

Data labeling for content rating is inherently subjective and context-dependent, requiring careful consideration of majority opinions and consistent labeling criteria.

To solve this, we implemented the rating game to get a majority vote on the ratings for images, and through this we were able to get around 35k unique voted-upon labels for images. This data is also more in line with our community's expectations.

We also talked about the various modalities (images, prompts, and tags), their sources, and the challenges with using them.

In the next article we'll focus on how to utilize the different modalities, model selection, and the training procedure. In the meantime, please share your experiences with data capture or challenges you've had in early ML projects. Have you found errors in a dataset that were causing you grief? Are you interested in combining different modalities or trying to use the outputs of one model as the inputs of another?

Together, by solving these challenges and sharing our experiences, we can shape the future of safe and enjoyable content across Civitai and the Gen-AI community!

Glossary:

checkpoint - A text-to-image generative model. This can be a base model like SD1.5, or a finetuned checkpoint like Pony.

LoRA - Stands for Low-Rank Adaptation. Can be thought of as very small finetuned layers for a base model that allow for easier control towards generating specific styles or generations.

Modality - The way data is represented. Can be text, image, audio, video, etc. For this series of articles, we'll focus on Text and Image modalities.

Update: Part 2 of this series is up now!