Why?

To say Kohya Dreambooth TI training is finicky would be the understatement of the year.

This said, I have been playing around with it for the past week or so for four reasons:

I use Kohya SS to create LoRAs all the time and it works really well. So I had a feeling that the Dreambooth TI creation would produce similarly higher quality outputs. It does, especially for the same number of steps. More on this later (see Note # 02).

Kohya SS is FAST. For ~1500 steps the TI creation took under 10 min on my 3060.

Kohya SS produces safetensors files, rather than ckpt, which is safer for downloading from a random dude on the internet.

The challenge. Isn't that what we're all here for? ;)

Who?

For this example, I'm using the same data set that I used for training this LoRA: https://civitai.com/models/91607/shraddha-kapoor

(Shraddha Kapoor is an Indian celebrity and all images were sourced from the Internet.)

TL;DR Version?

Don't use regularization images for TI creation - they screw up the output big time

Captions don't matter in Kohya SS TI generation - don't waste your time

The number of reps does matter, especially with a small dataset like the one I had (15 images) - aim for ~1500-2500 total steps (reps * number of images, so in my case 100 reps gives 1500 steps)

Resizing images to 512x512 has some positive impact so if it's not too painful, do that.
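To make the step arithmetic above concrete, here is a minimal sketch in Python. It assumes the simple relationship used in this post (total steps = reps * number of images) with a single epoch and a batch size of 1. The `epochs` and `batch_size` parameters and both function names are my own additions for illustration, not Kohya SS settings taken from this study.

```python
import math


def total_steps(num_images, reps, epochs=1, batch_size=1):
    """Approximate training steps: images * reps * epochs / batch size.

    epochs and batch_size default to 1 (an assumption), which reduces
    this to the "reps * number of images" rule of thumb.
    """
    return math.ceil(num_images * reps * epochs / batch_size)


def reps_range(num_images, min_steps, max_steps):
    """Smallest and largest rep counts that land inside a target step range."""
    low = math.ceil(min_steps / num_images)
    high = math.floor(max_steps / num_images)
    return low, high


# 15 training images at 100 reps, as in this study
print(total_steps(15, 100))

# Rep counts that keep 15 images within ~1500-2500 total steps
print(reps_range(15, 1500, 2500))
```

With 15 images, anything from 100 to 166 reps stays inside the suggested range, so 100 reps sits at the low end.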

This is NOT a tutorial on Kohya Dreambooth TI generation. Will follow up on that soon.

First, some caution

Note 01: I don't recommend Textual Inversions for generating a person because while small, they are quirky and very quickly lock onto specific aspects of a person's looks. I'm using a person in this case to make it easy to compare what the real person looks like to the output to see the delta. Here's the output of the best TI vs that generated by this LoRA, using the same training images. For persons I'd always recommend LoRAs. Also see Note 04.

TI output:

LoRA output:

Real person:

Note 02: To build on the note above, I compared the TI generated by standard SD training scripts (https://civitai.com/models/59634/desigirl-nsfw) to the one generated by Kohya SS. The Kohya SS output is of a much higher quality since it allows the use of regularization images. However, since the output is NSFW, I am not currently putting up that comparative analysis. May do that in the future.

Note 03: This study doesn't get into the steps for training. That will be a separate guide that will follow.

What's being compared?

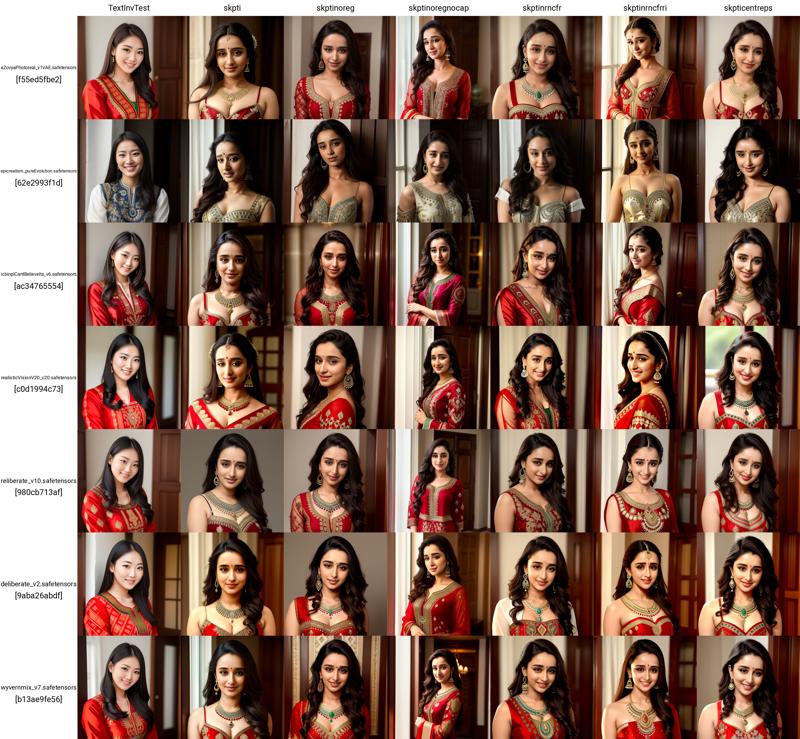

As you can see, the cover image has several columns that show how the TI evolved as I developed it. The rows compare the output on various models.

Link to larger image: https://civitai.com/images/1381178?postId=355147

Column 1: No TI used

Column 2: Original TI created with regularization images (TI: skpti)

Column 3: Regularization images dropped (TI: skptinoreg)

Column 4: Column 3 + Caption files deleted (TI: skptinoregnocap)

Column 5: Column 4 + 50 reps (TI: skptinrncfr)

Column 6: Column 5 + images resized to 512x512 (TI: skptinrncfrri)

Column 7: Column 6 with 100 reps (TI: skpticentreps)

Attachment has all the TIs in case you want to play with them.

Note 04: One more reason not to use TIs for generating a person's likeness is that the output can differ significantly between models, even if they are all derived from SD 1.5, as you can see by comparing the rows within any one column.

Findings

#1 - Don't use regularization images when the TI is focusing on a person

I always use regularization images when I'm creating a LoRA, so I thought they'd work in this case as well. Clearly not. My understanding of reg images is that they tell the AI what not to train on. But using them in this case was churning out images like the one below in the samples, telling me something was off.

#2 - Use the 'Object' prompt template instead of captions

Don't know if this is specific to TI training or an issue with Kohya in general, but as you can see by comparing columns 3 and 4, deleting the caption files and using the 'Object' template actually produced better images.

Note 05: Before deleting the caption files, I tried changing the caption extension from .caption to .txt, since that is what BLIP produces, but that had no impact either.

#3 - Number of reps matter

I started with 75 reps and saw that the output was too saturated. Dropping it to 50 helped.

However, the training images in Columns 2-5 were much larger than 512x512. When I cropped them to the smaller size, I needed to raise the reps to 100, as seen in column 7. See point #5 below.

#4 - Crop your images when training for TI

Typically this is a step I ignore for LoRA training, but as you can see in the comparison between columns 5 and 6, cropping the images to 512x512 helps produce a better likeness.

I use birme.net to crop images. Highly recommend since it's free and everything is processed locally on your machine through scripts.
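If you'd rather script the cropping, here is a rough sketch of the same idea in Python. It is not what birme.net does internally: it just takes the largest centered square and scales it to 512x512. The function names and paths are hypothetical, and `crop_to_square` assumes the third-party Pillow library is installed.

```python
def center_square_box(width, height):
    """Return (left, top, right, bottom) of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def crop_to_square(src_path, dst_path, size=512):
    """Center-crop an image to a square and resize it to size x size.

    Requires Pillow (pip install Pillow); imported here so the box
    helper above works without it.
    """
    from PIL import Image

    with Image.open(src_path) as img:
        box = center_square_box(*img.size)
        # Convert to RGB so the result can be saved as JPEG or PNG
        square = img.convert("RGB").crop(box)
        square.resize((size, size), Image.LANCZOS).save(dst_path)


# A 1024x768 landscape image keeps its middle 768x768 region
print(center_square_box(1024, 768))
```

A plain center crop can cut off a face that sits near the edge of the frame, so check the results by eye, or pick the crop region manually as you would in birme.net.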

#5 - Increase the reps if your images are 512x512

Increasing the number of repetitions to 100 improved the output a lot. I was concerned the TI would be overbaked and produce deep-fried images, but it did not, as seen in column 7.

Comments and suggestions welcome.

Attached:

TIs explored in this comparative study

Training image set - cropped as well as original