The cover image for this article is kind of amazing.

Not the image itself, that sucks. But how it was made.

I am generating a prompt using one AI and generating an image with that prompt on a different AI on another machine, all from a third machine, using my agent workflow tool. Everything runs on my local network using Ollama and AUTOMATIC1111. That was the first image I made.

Sure, today I have to run the output of my script through | base64 -d > filename.png, but darn it, it works!!!

I plan on this tool becoming a Swiss Army knife for data processing, and AI was integrated into it from the start.

General overview

The dynamic_workflows_agents_py file is a Python command-line (CLI) tool that implements a flexible AI agent workflow execution system based on the configuration in config.json. Here are its key features:

It loads the configuration file, with an option to specify a custom config file path.

It can execute both predefined workflows and individual agents.

It supports dynamic creation of workflows from individual agents.

It handles API calls to various AI services, including different input/output formats.

It implements a logging system with configurable verbosity and remote logging.

It provides a command-line interface to list available workflows and agents, and to execute them only when the required inputs are supplied.

The program is designed to be flexible and extensible, allowing users to define new agents, workflows, hosts, services, and endpoints in the configuration file without changing the core code. It also includes error handling and detailed logging to aid in troubleshooting.

Some notable aspects of the implementation:

It builds its argparse command-line parser dynamically, adjusting the required parameters depending on the agent or workflow selected.

It implements a system to resolve aliases for hosts and models.

It can extract specific data from API responses using highly configurable endpoint selectors.

It supports different input formats (JSON, XML, HTML) for API calls.

It can create temporary workflows from individual agents using a singleton template.
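To make the dynamic argparse idea concrete, here is a minimal sketch of one way it could work. This is not the tool's actual code: the `AGENTS` table, its `inputs` field, and the `--agent` flag are all assumptions made up for illustration. The parser runs twice, first to find out which agent was picked, then again with that agent's inputs added as required options.

```python
import argparse

# Hypothetical agent definitions; the real config.json layout may differ.
AGENTS = {
    "brainstorm": {"inputs": ["topic"]},
    "image": {"inputs": ["prompt", "steps"]},
}


def parse_args(argv, agents):
    """Two-pass parse: pick the agent first, then require its inputs."""
    # Pass 1: only look for --agent and ignore everything else for now.
    first = argparse.ArgumentParser(add_help=False)
    first.add_argument("--agent", choices=sorted(agents), required=True)
    known, _ = first.parse_known_args(argv)

    # Pass 2: add one required option per input the chosen agent declares.
    parser = argparse.ArgumentParser(parents=[first])
    for name in agents[known.agent]["inputs"]:
        parser.add_argument(f"--{name}", required=True)
    return parser.parse_args(argv)


args = parse_args(
    ["--agent", "image", "--prompt", "a red fox", "--steps", "20"], AGENTS
)
print(args.agent, args.prompt, args.steps)
```

With this approach, leaving out an input that the selected agent needs makes argparse exit with its usual "required" error, so the core code never has to know the agents ahead of time.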

The config.json file contains a complex configuration for an AI workflow system. It includes:

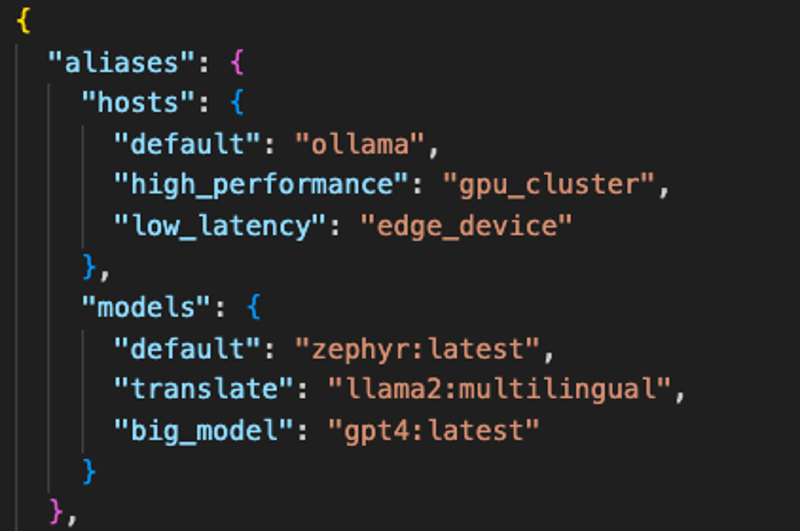

Aliases for hosts and models

Host configurations

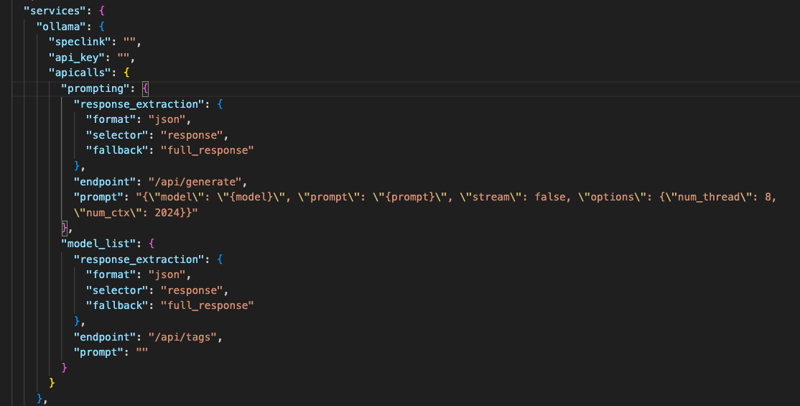

Service configurations for different AI services (e.g., Ollama and Stable Diffusion right now)

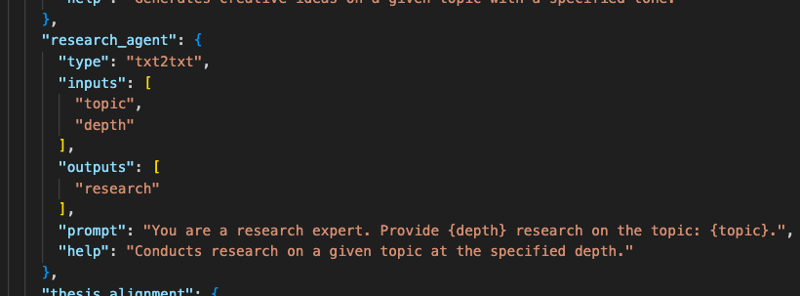

Agent definitions for various tasks (e.g., brainstorming, research, image generation)

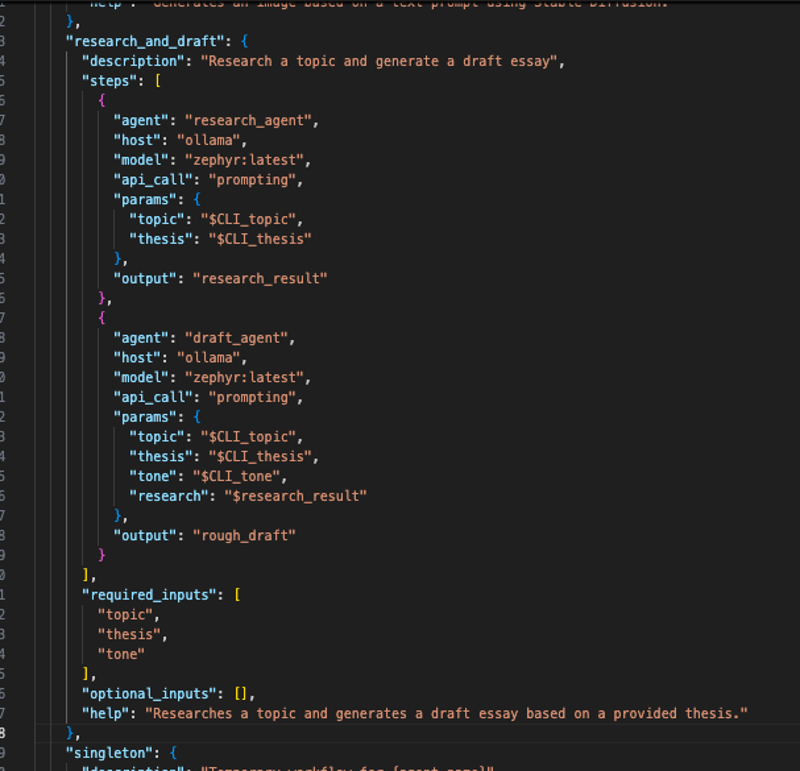

Workflow definitions that combine multiple agents

And new agents, workflows, hosts, services and endpoints can be defined in the configuration file without needing to be programmed.

Overall, this system is a powerful and flexible framework for defining and executing AI workflows, capable of integrating with various AI services and handling complex multi-step processes. I plan on making it a general-purpose way to connect to various web servers and AI services while having access to the full power of Python data manipulation.

Overview of the configuration file

I am just really amazed at how quickly this Swiss Army knife of AI is coming together. I got tired of everyone else making it look hard.

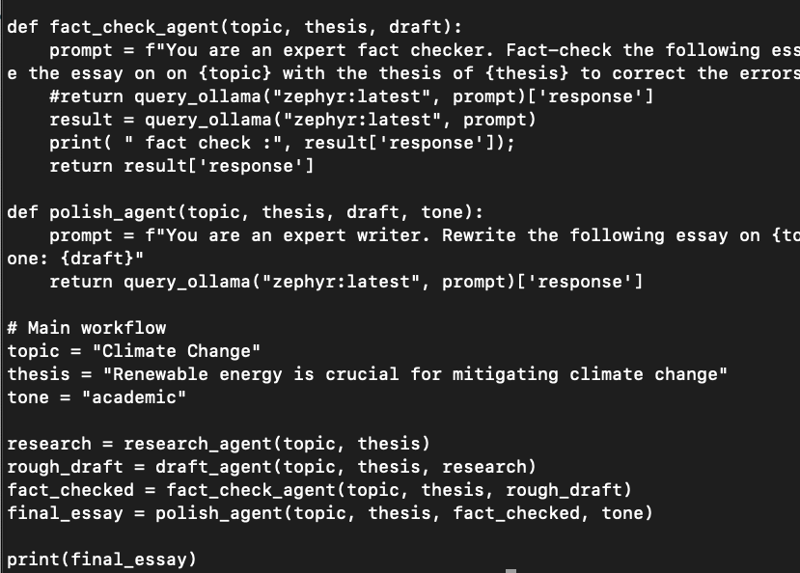

A week ago I scripted my first agent and an agent workflow quickly followed. This echoed how I would manually put chatbots into different modes and send the data through them repeatedly, but automated.

I started implementing a large number of agents and saw that the only differences between agents were the string that defined the agent and the parameters it took. So I put the agents into a config file.

Then I saw that I could replicate the workflow as an entry in the same config file.

The workflow entry lists the order in which the agents run and maps inputs to outputs. The new processing agents will act identically to API workflows.
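Here is a rough sketch of what running a workflow entry like that could look like. The field names (`steps`, `inputs`, `output`) and the two stand-in agents are hypothetical, not the real schema, and the agents are plain functions so the example runs without any network calls.

```python
# Hypothetical workflow entry; field names are assumptions, not the real schema.
WORKFLOW = {
    "steps": [
        {"agent": "make_prompt", "inputs": {"topic": "user_topic"}, "output": "prompt"},
        {"agent": "make_image", "inputs": {"prompt": "prompt"}, "output": "image"},
    ]
}

# Stand-in agents so the sketch runs without calling any AI service.
AGENTS = {
    "make_prompt": lambda topic: f"A detailed painting of {topic}",
    "make_image": lambda prompt: f"<image for: {prompt}>",
}


def run_workflow(workflow, agents, initial):
    """Run steps in order, storing each output under its mapped name."""
    data = dict(initial)
    for step in workflow["steps"]:
        # Map the agent's parameter names to values already in the data store.
        kwargs = {param: data[source] for param, source in step["inputs"].items()}
        data[step["output"]] = agents[step["agent"]](**kwargs)
    return data


result = run_workflow(WORKFLOW, AGENTS, {"user_topic": "a lighthouse"})
print(result["image"])
```

Because every step only reads from and writes to the shared data store, an API agent and a local processing agent can look the same to the runner.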

I had the Ollama connection and endpoint behavior all hardcoded because that was the one interface I had and could get to work. I moved parts over to the config file one at a time, hosts first.

Some of these hosts are local on my network; others are placeholders I added as I ran across information about other services.

See how great this concept is for organizing and collecting information in one place? My plan is to accumulate as much knowledge as possible about available services, especially services that run locally. I don't have access to any of the big model APIs, but I plan on having the info here.

The next thing I did was move the services to the configuration file:

I moved the sending half of the endpoint first, because I needed to package up the prompt to send to Ollama. Then, in order to also connect to the Stable Diffusion API in AUTOMATIC1111, I had to move the hardcoded handling of data received from Ollama over into the config file as well.

And now I support two different interfaces in a generic way. I also have primitive web scraping built in with the tool as it stands right now.

This gives a clean separation of concerns: the endpoints package and unpack the data for communicating with remote APIs, and the agents work with that data.
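A minimal sketch of that split, assuming a config format I made up for this example (a `request` template and a `selector` path per endpoint). The only parts taken from the real services are the two paths and the response fields: Ollama's /api/generate returns its text under "response", and the AUTOMATIC1111 txt2img API returns base64 images in an "images" list. The model name is a placeholder.

```python
import json

# Hypothetical endpoint definitions; the template and selector format are
# assumptions for illustration, not the tool's actual config schema.
ENDPOINTS = {
    "ollama_generate": {
        "path": "/api/generate",
        "request": {"model": "{model}", "prompt": "{prompt}", "stream": False},
        "selector": ["response"],
    },
    "sd_txt2img": {
        "path": "/sdapi/v1/txt2img",
        "request": {"prompt": "{prompt}"},
        "selector": ["images", 0],
    },
}


def package(endpoint, values):
    """Fill the request template with the agent's values."""
    return {
        key: template.format(**values) if isinstance(template, str) else template
        for key, template in endpoint["request"].items()
    }


def unpack(endpoint, response):
    """Walk the selector path (dict keys or list indexes) into the response."""
    node = response
    for step in endpoint["selector"]:
        node = node[step]
    return node


body = package(ENDPOINTS["ollama_generate"], {"model": "llama3", "prompt": "Hi"})
print(json.dumps(body))
print(unpack(ENDPOINTS["sd_txt2img"], {"images": ["aGVsbG8="]}))
```

The agent never sees the raw request or response shape. It hands over values and gets back the one piece of data it asked for, which is what lets a new API be added by editing only the config.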

I noticed that if you have hundreds of workflows for different tasks and you change models or hosts, it would suck to go through the file and replace all those strings, so I added aliases. Aliases also support the concept of roles: you could have a model you use for translation, and just use the keyword translate in your workflows, and that would map to the AI you use for that task. If you find a better model, you just have to change it in one place.
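Alias resolution along those lines can be sketched in a few lines. The table layout, the names, and the address below are placeholders, and letting one alias point at another (so a role maps to a nickname that maps to a real model) is my assumption for the example rather than something the tool is confirmed to do.

```python
# Hypothetical alias table; names and addresses are placeholders.
ALIASES = {
    "models": {"translate": "fast_chat", "fast_chat": "llama3:8b"},
    "hosts": {"gpu_box": "http://192.168.1.50:11434"},
}


def resolve(kind, name, aliases, max_depth=10):
    """Follow alias chains until the name is no longer an alias."""
    table = aliases.get(kind, {})
    for _ in range(max_depth):
        if name not in table:
            return name
        name = table[name]
    # Give up instead of looping forever on a circular alias.
    raise ValueError(f"alias loop while resolving {kind} name")


print(resolve("models", "translate", ALIASES))
print(resolve("hosts", "gpu_box", ALIASES))
print(resolve("models", "mistral", ALIASES))
```

A name that is not in the table passes through unchanged, so workflows can mix aliases and literal model names.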

So this brings us up to date on the development to this point.

Future plans

In the words of Claude 3.5

Strengths:

Flexibility: Your system is highly flexible, allowing for the definition of various agents and workflows in a configuration file.

Modularity: The design separates concerns well, with distinct components for API calls, processing tasks, and workflow execution.

Extensibility: The system can easily accommodate new agents, workflows, hosts, and services without significant changes to the core code.

Consistency: API and processing agents are treated similarly in workflow definitions, providing a uniform interface.

Configuration-driven: Most of the system's behavior is defined in the configuration file, allowing for easy modifications and additions. Including brand new APIs.

Error handling: You've implemented error handling and logging mechanisms.

Versatility: The tool can handle various tasks, from API interactions to local data processing.

Command-line interface: The tool provides a user-friendly CLI for executing workflows and agents.

Weaknesses:

Complexity: As the system grows, managing the configuration file might become challenging.

Limited data flow control: The current design might struggle with complex data flows or conditional executions.

Lack of built-in parallelism: There's no native support for parallel execution of independent steps.

Potential performance issues: For large workflows or data-intensive tasks, the sequential execution might be a bottleneck.

Limited type checking: There's no strong type checking between agent inputs and outputs, which could lead to runtime errors.

Debugging challenges: While there's logging, debugging complex workflows might be difficult without additional tools.

Limited visualization: There's no built-in way to visualize workflows, which could be helpful for complex scenarios.

Dependency management: The system doesn't have a built-in way to manage and validate dependencies between agents.

Overall, you've made significant progress in creating a flexible and powerful command-line tool for executing AI workflows. The system's strengths in flexibility, modularity, and extensibility provide a solid foundation for future enhancements. Addressing some of the weaknesses, particularly in areas like data flow control, parallelism, and type checking, could be good next steps in the tool's evolution.

Tomorrow I add the ability to load and save files and to encode and decode base64 chunks of data. This way I can save generated images directly from my CLI by adding the base64 decoder agent and the save file agent. In the workflow these new nodes will look exactly like agents: they will be configured like them, accept arguments, and have data mapped to them just like the API agents. Once those tools are working I will add agents that can modify XML, JSON, and text and convert between them.
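As a sketch of what those two processing agents might boil down to, here is a base64 decoder and a file saver written as plain Python functions. The function names and the way they chain are hypothetical; the eight bytes used as test data are just the PNG file signature standing in for a real image string from an API agent.

```python
import base64
import tempfile
from pathlib import Path


def decode_base64(data):
    """Processing agent: turn a base64 string into raw bytes."""
    return base64.b64decode(data)


def save_file(content, path):
    """Processing agent: write bytes to disk and return the path written."""
    target = Path(path)
    target.write_bytes(content)
    return str(target)


# Stand-in for the base64 image string an API agent would return.
encoded = base64.b64encode(b"\x89PNG\r\n\x1a\n").decode("ascii")

# Chain the two agents the way a workflow would: decode, then save.
out_path = Path(tempfile.gettempdir()) / "cover.png"
saved = save_file(decode_base64(encoded), out_path)
print(saved)
```

Chaining these two after the image agent would replace the manual base64 -d step on the command line.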

Once the configuration file stabilizes in a week or two I will start building a GUI to run on top of the configuration file and this tool to make it easy to use.

RAG agents would also be very powerful in a workflow, so I am looking into adding them to the program.

I plan on posting it on GitHub as an open source program in a month. I will wait until I have 99% of the features I want this tool to have before I post it.