Introduction

This RAVE workflow, combined with AnimateDiff, enables you to transform a primary subject character into something entirely different. It is a powerful process that lets your imagination run wild.

RAVE is a zero-shot video editing approach that uses pre-trained text-to-image diffusion models without any additional training. It takes an input video and a text prompt and generates a high-quality video that retains the original motion and semantic structure. It employs a noise shuffling strategy that exploits spatio-temporal interactions between frames, producing temporally consistent videos faster than existing methods. It is also memory efficient, which allows it to handle longer videos.
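To make the shuffling idea a little more concrete, here is a minimal sketch. It assumes frames are grouped into fixed-size grids and regrouped in a new random order at each denoising step, so every frame ends up sharing a grid with different neighbours over time. The function names are hypothetical and no diffusion model is involved; this only illustrates the bookkeeping and is not RAVE's actual implementation.

```python
import random


def shuffled_grids(num_frames, grid_size, seed=None):
    """Randomly assign frame indices to fixed-size grids for one denoising step."""
    if num_frames % grid_size != 0:
        raise ValueError("num_frames must be a multiple of grid_size")
    rng = random.Random(seed)
    order = list(range(num_frames))
    rng.shuffle(order)
    # Consecutive slices of the shuffled order form the grids for this step.
    return [order[i:i + grid_size] for i in range(0, num_frames, grid_size)]


def frame_positions(grids):
    """Map each frame index to its (grid, slot) so the shuffle can be undone."""
    return {
        frame: (g, s)
        for g, grid in enumerate(grids)
        for s, frame in enumerate(grid)
    }


if __name__ == "__main__":
    # A different seed per step stands in for reshuffling at every denoising step.
    for step in range(3):
        print(step, shuffled_grids(num_frames=8, grid_size=4, seed=step))
```

Because the grouping changes from step to step, information can spread across the whole clip rather than staying inside one fixed group of frames.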

Below is an example of what can be achieved with this ComfyUI RAVE workflow.

Pretty cool, right?! Let's get everything set up so you can make these animations too.

How to install & set up this ComfyUI RAVE workflow with AnimateDiff

Loading your JSON file

If you are using ThinkDiffusion, it is recommended to use the TURBO machine for this workflow as it is quite demanding on the GPU.

Setting up custom nodes

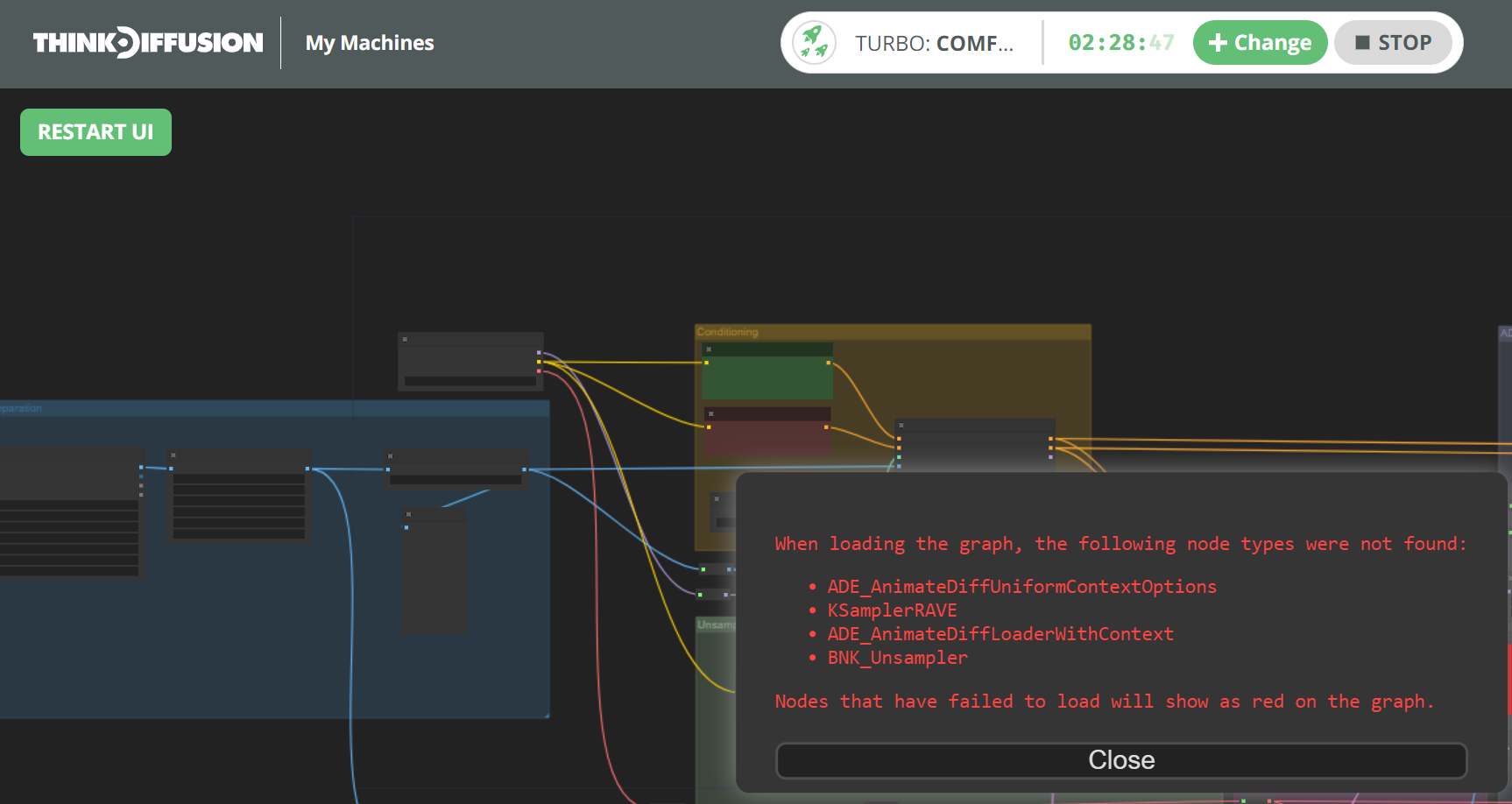

Once you load the workflow into your instance of ThinkDiffusion, you may have some missing nodes.

In my case, when I loaded this workflow, the following custom nodes were missing:

ADE_AnimateDiffUniformContextOptions

KSamplerRAVE

ADE_AnimateDiffLoaderWithContext

BNK_Unsampler
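If you would like to check a workflow file for node types before loading it, a short script can list them. This is a minimal sketch that assumes the workflow was exported from the ComfyUI interface and stores its nodes in a top-level "nodes" list, each with a "type" field. The helper names and the sample data are hypothetical, and the list of installed node types has to be supplied by you.

```python
import json


def node_types(workflow):
    """Return the set of node type names used in a workflow dict."""
    # Assumption: UI-exported workflows keep nodes in a "nodes" list with a "type" field.
    return {node["type"] for node in workflow.get("nodes", []) if "type" in node}


def missing_nodes(workflow, installed):
    """Return the node types the workflow uses that are not in `installed`."""
    return sorted(node_types(workflow) - set(installed))


if __name__ == "__main__":
    # Tiny stand-in for a real workflow file; with a real one you would use
    # json.load(open("workflow.json")) instead.
    sample = json.loads(
        '{"nodes": [{"id": 1, "type": "KSamplerRAVE"},'
        ' {"id": 2, "type": "BNK_Unsampler"},'
        ' {"id": 3, "type": "KSampler"}]}'
    )
    print(missing_nodes(sample, installed=["KSampler"]))
```

The ComfyUI manager does this detection for you, so the script is only useful as a quick sanity check outside the UI.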

Missing ComfyUI Custom Nodes

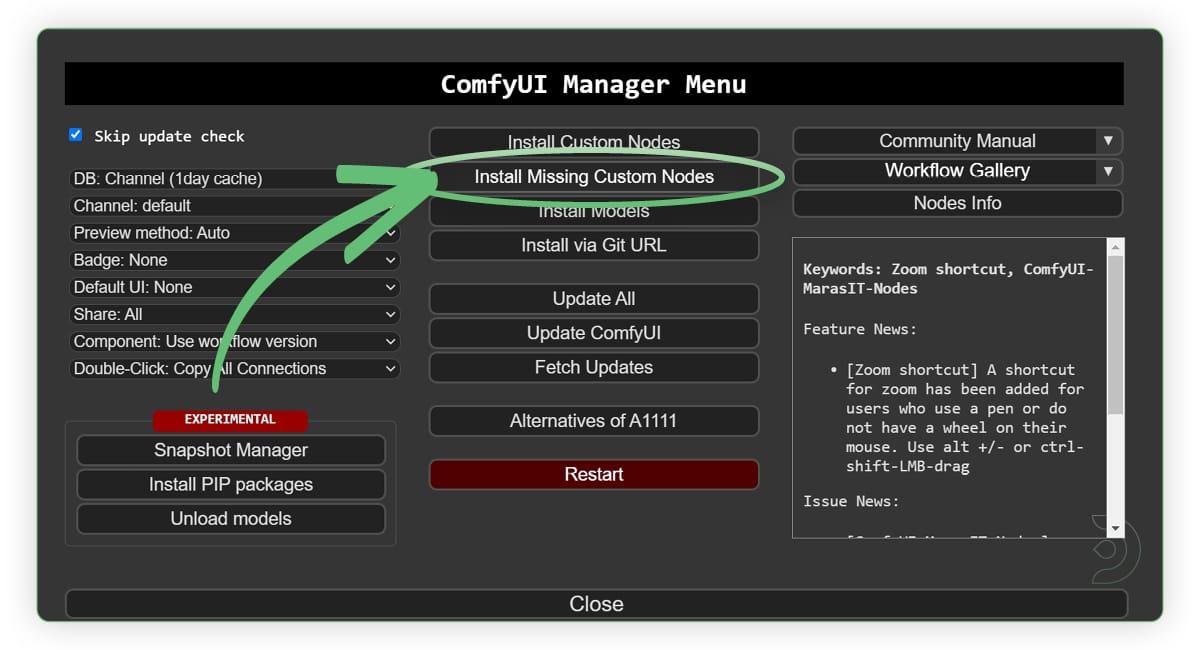

To fix this, go to the ComfyUI manager and click on Install missing custom nodes.

ComfyUI manager

Install each of the missing custom nodes. Once done, restart the UI and refresh the browser. If the nodes are still coloured red, close down your instance of ComfyUI and launch a new machine.

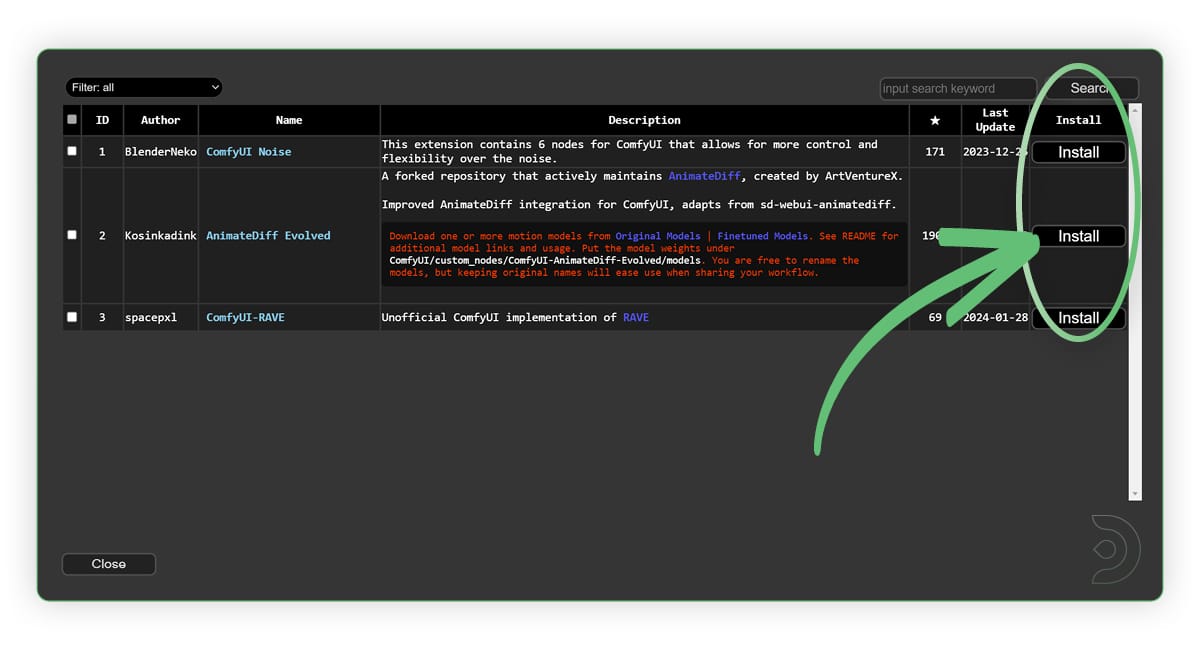

Install missing nodes for the ComfyUI AnimateDiff RAVE workflow

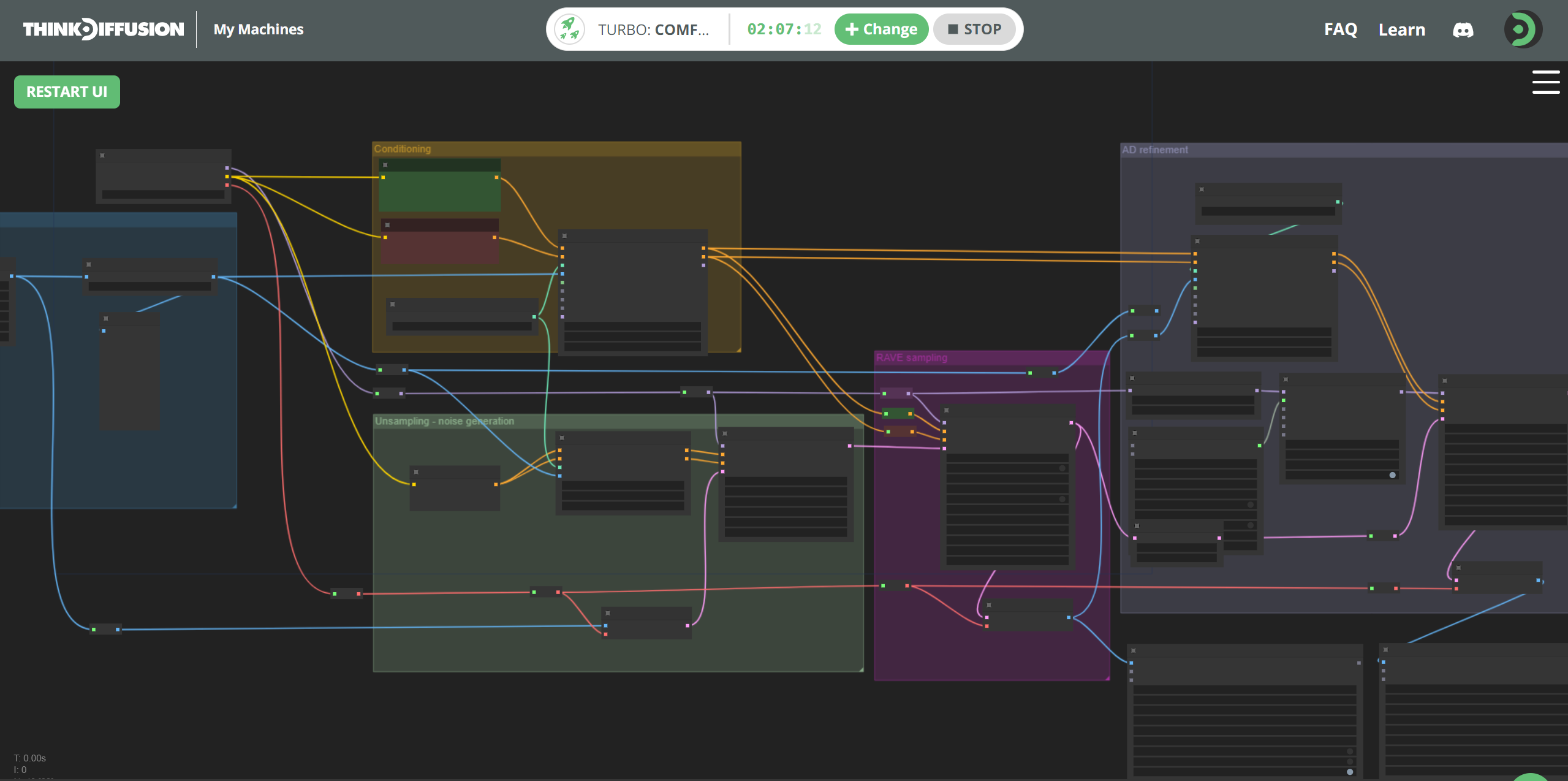

Once restarted, we can now see that we do not have any missing custom nodes.

ComfyUI AnimateDiff RAVE workflow with no missing nodes

Downloading all AnimateDiff models and AnimateDiff motion modules

We now need to download 4 files, starting with the following:

A loosecontrol model:

Original author and address: shariqfarooq/loose-control-3dbox https://shariqfarooq123.github.io/loose-control/ I only combined it with the same lice…

Upload it to the following folder within ThinkDiffusion: ../user_data/comfyui/models/ControlNet/

Uploading the ControlNet loosecontrolUseTheBoxDepth model

We now need to download the controlnet_checkpoint.ckpt model from the URL below:

crishhh/animatediff_controlnet at main

Once downloaded we will need to upload it to the following folder within ThinkDiffusion:

../user_data/comfyui/models/ControlNet/

We now need to download the following v3_sd15_adapter.ckpt model from the URL below.

V3_sd15_adapter LoRA

Once downloaded we will need to upload it to the following folder within ThinkDiffusion:

../user_data/comfyui/models/Lora/v3_sd15_adapter.ckpt

The final model to download is the motion model v3_sd15_mm.ckpt.

v3_sd15_mm.ckpt · guoyww/animatediff at main

Animatediff motion model

Once downloaded, you will need to upload it to your instance of ThinkDiffusion in the following folder:

../user_data/comfyui/custom_nodes/ComfyUI-AnimateDiff-Evolved/models/
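Before moving on, it can help to confirm that the files landed in the right folders. The sketch below checks the three files whose names are given above, relative to a ComfyUI root folder that you pass in. The loosecontrol model is left out because its exact filename is not stated here, and the helper name `missing_files` is hypothetical.

```python
from pathlib import Path

# Folder and file names taken from the steps above. The loosecontrol model is
# omitted because its exact filename is not given in this guide.
EXPECTED = [
    ("models/ControlNet", "controlnet_checkpoint.ckpt"),
    ("models/Lora", "v3_sd15_adapter.ckpt"),
    ("custom_nodes/ComfyUI-AnimateDiff-Evolved/models", "v3_sd15_mm.ckpt"),
]


def missing_files(comfyui_root, expected=EXPECTED):
    """Return the expected files that are not present under comfyui_root."""
    root = Path(comfyui_root)
    return [
        f"{folder}/{name}"
        for folder, name in expected
        if not (root / folder / name).is_file()
    ]


if __name__ == "__main__":
    # Assumption: the script is run from a folder where this relative path resolves.
    for path in missing_files("../user_data/comfyui"):
        print("missing:", path)
```

An empty result means all three files were found; anything printed is a file to upload again.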

Let's get creating!

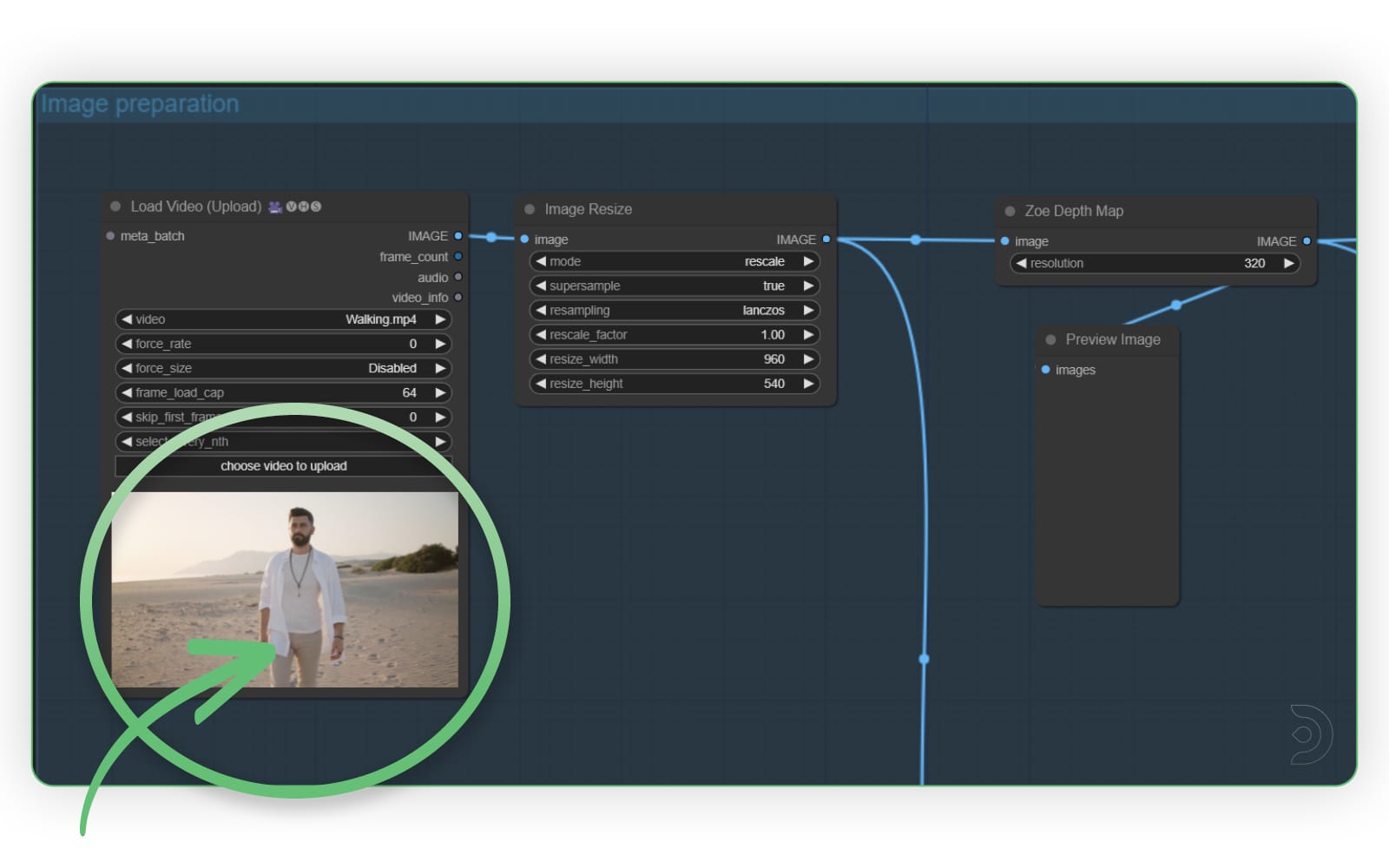

Phew! Now that the setup is complete, let's get creating with the ComfyUI RAVE workflow. Firstly, you will need to upload your video into the Load Video node. You can leave the other settings as the default.

I am using the following video as my input video.

Adding your input video to the Load Video ComfyUI node

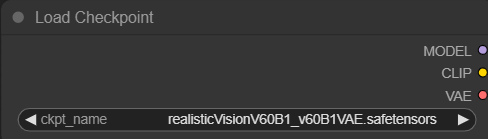

I have selected realisticVision as my checkpoint model, but feel free to choose whatever model you want.

Selecting realisticVision as your checkpoint model in the ComfyUI Load Checkpoint node

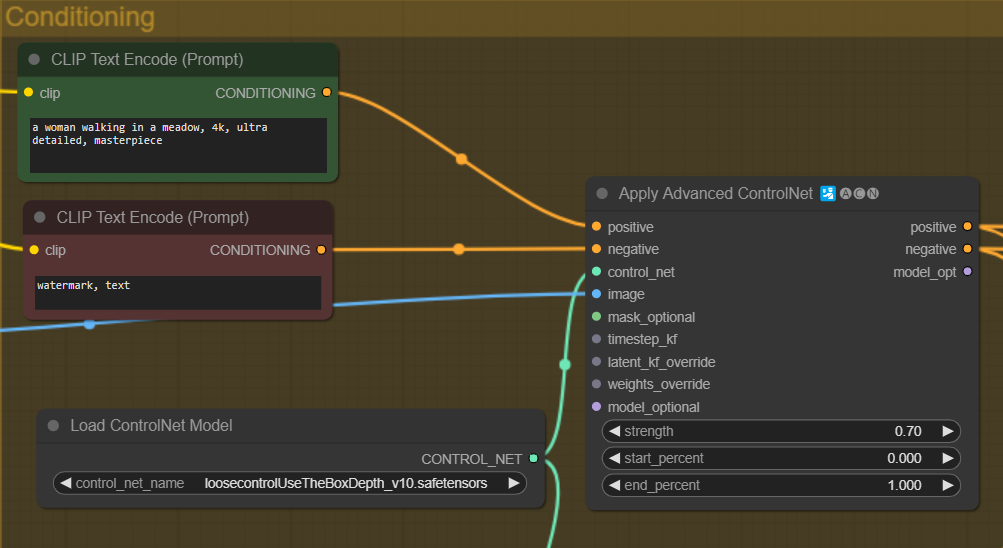

Within the conditioning tab, enter your positive and negative prompts. I have added the following positive prompt:

'a woman walking in a meadow, 4k, ultra detailed, masterpiece'

and the following negative prompt:

'watermark, text'

You will also need to select the LoosecontrolUseTheBoxDepth ControlNet model that we downloaded earlier in the Load ControlNet Model node.

The Apply Advanced ControlNet node can be left with the default settings.

Using the Apply Advanced ControlNet node in ComfyUI

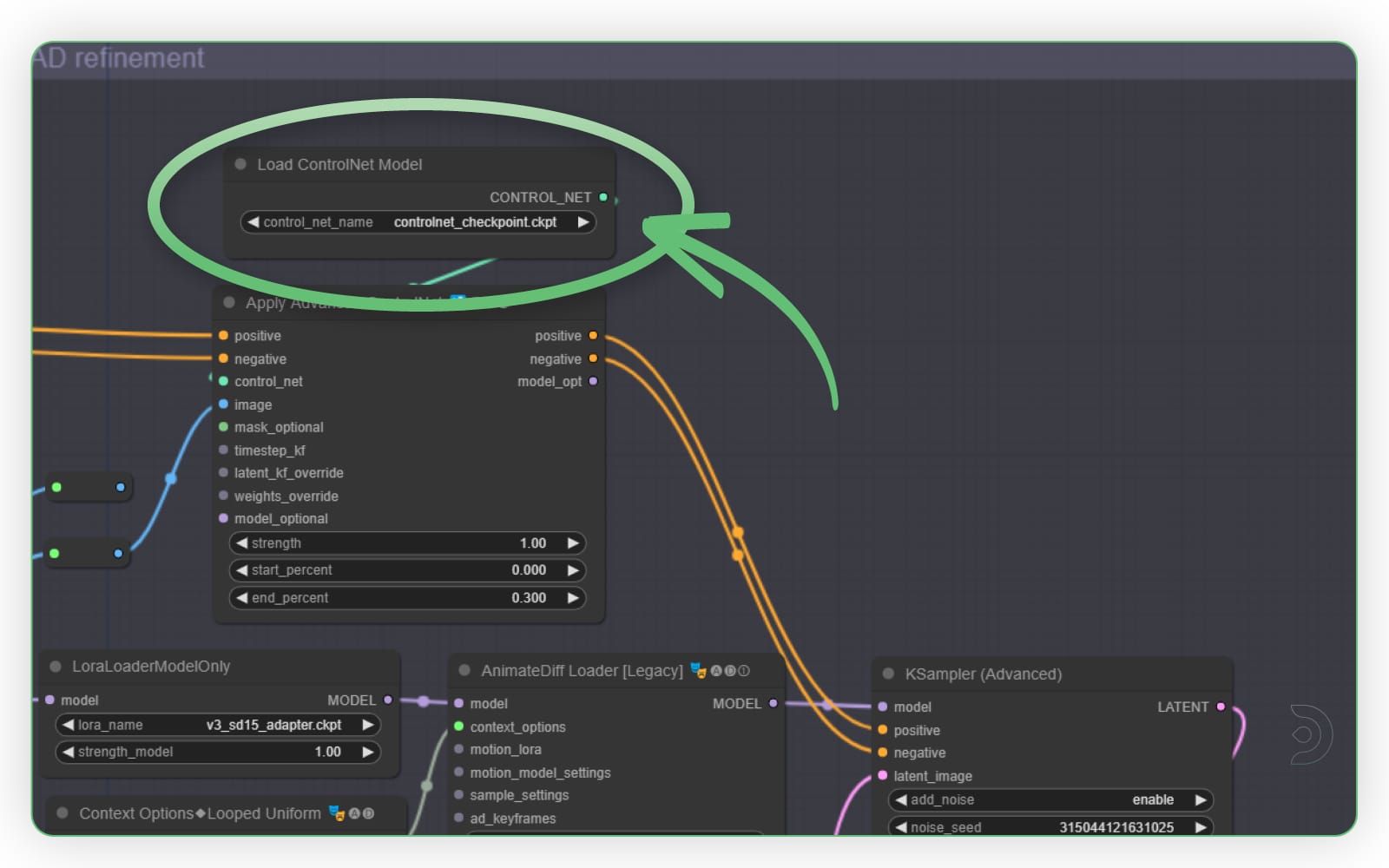

Within the AD Refinement tab, use the Load ControlNet Model node to select the controlnet_checkpoint.ckpt model that we downloaded earlier.

Using the LoraLoadModelOnly in ComfyUI

The remaining values can be left as the default but you can also adjust the number of steps and the cfg scale to suit your workflow.
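For a feel of what the cfg scale does, here is a minimal sketch of the usual classifier-free guidance blend. It assumes the standard formulation, in which the prediction for the negative (or empty) prompt is pushed towards the prediction for the positive prompt by the cfg scale. The function name and the toy numbers are hypothetical, and the sampler nodes in ComfyUI may differ in detail.

```python
def apply_cfg(uncond, cond, cfg_scale):
    """Blend negative-prompt and positive-prompt noise predictions.

    uncond and cond are equal-length lists of floats standing in for the
    model's two noise predictions at one denoising step.
    """
    return [u + cfg_scale * (c - u) for u, c in zip(uncond, cond)]


if __name__ == "__main__":
    uncond = [0.0, 1.0]  # toy prediction for the negative prompt
    cond = [1.0, 3.0]    # toy prediction for the positive prompt
    for scale in (1.0, 2.0, 7.0):
        print(scale, apply_cfg(uncond, cond, scale))
```

A scale of 1 reproduces the positive-prompt prediction unchanged, while larger values follow the prompt more strongly, usually at some cost to naturalness.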

Now we can hit generate!

Transforming a subject character with the RAVE ComfyUI workflow

Let's run exactly the same settings as before but we will change our prompt to:

'a gorilla walking on a road, 4k, ultra detailed, masterpiece'

Pretty cool, right?!

Transforming a subject character into a gorilla with the ComfyUI RAVE workflow

More Examples

Transforming a subject character into an alien with the ComfyUI RAVE workflow

Transforming a subject character into a dinosaur with the ComfyUI RAVE workflow

Frequently asked questions

What is ComfyUI?

ComfyUI is a node-based web application with a visual editor that lets users configure Stable Diffusion pipelines without writing any code.

What is AnimateDiff?

AnimateDiff operates in conjunction with a MotionAdapter checkpoint and a Stable Diffusion model checkpoint. The MotionAdapter comprises Motion Modules, which are tasked with integrating consistent motion throughout image frames. These modules come into play following the Resnet and Attention blocks within the Stable Diffusion UNet architecture.

What is ControlNet?

ControlNet encompasses a cluster of neural networks fine-tuned via Stable Diffusion, granting nuanced artistic and structural control in image generation. It enhances standard Stable Diffusion models by integrating task-specific conditions.

Can I use AnimateDiff with SDXL?

YES! AnimateDiff for SDXL is a motion module that is used with SDXL to create animations. It is made by the same people who made the SD 1.5 models.

Original article can be found here: https://learn.thinkdiffusion.com/how-to-create-stunning-ai-videos-with-comfyui-rave-and-animatediff/

ComfyUI in the cloud

Any of our workflows, including the one above, can run on a local version of SD, but if you’re having issues with installation or slow hardware, you can try any of these workflows on a more powerful GPU in your browser with ThinkDiffusion.

If you’d like a way to enhance facial details then check out my post on ComfyUI-FaceDetailer. And as always, have fun RAVING out there!
