Intro:

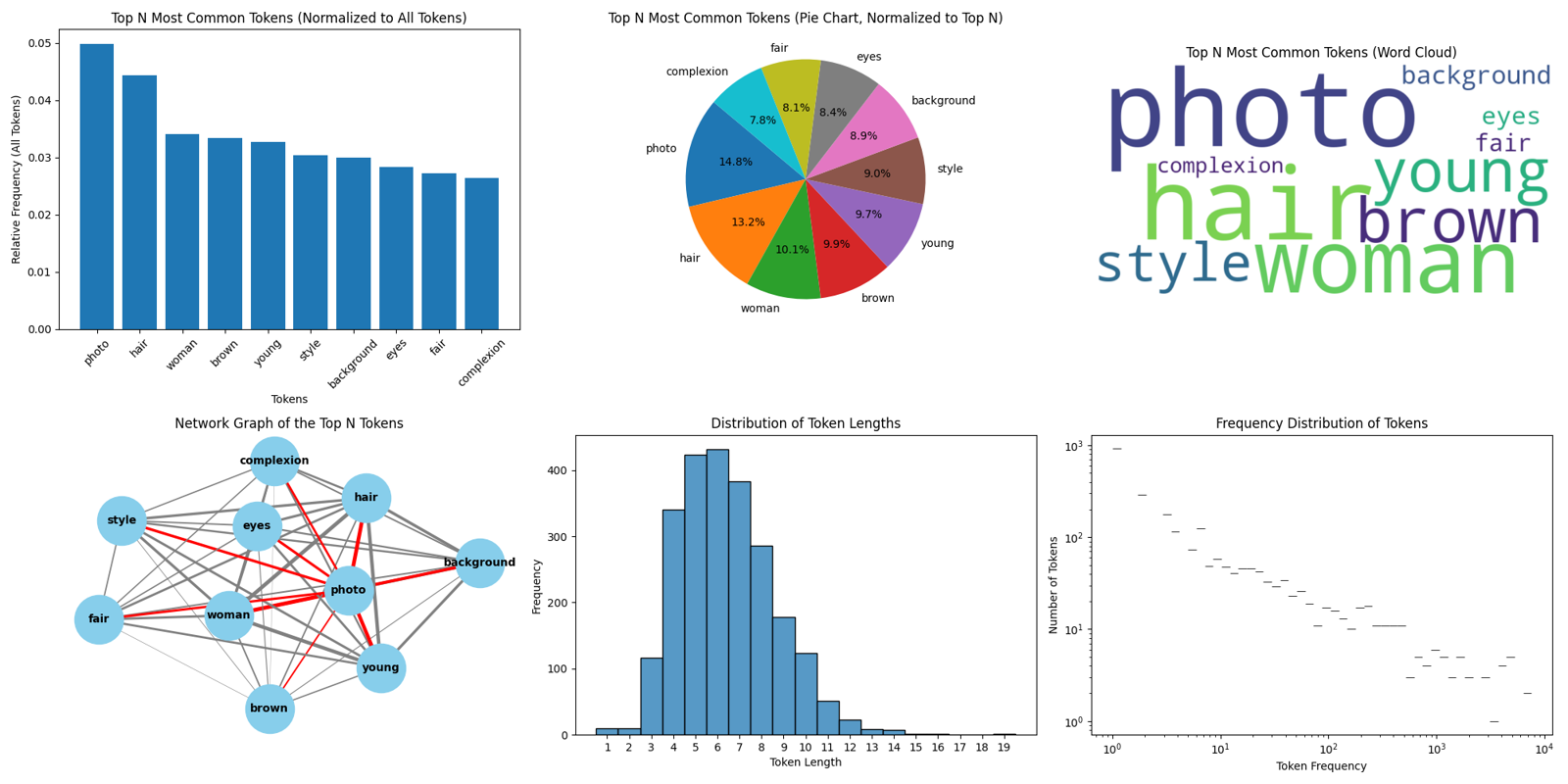

I want to share a simple Python script I wrote to test my regularization dataset captions -- built with the help of GPT-4o -- which combs through a dataset folder and analyzes the caption TXT files therein. Information about the distribution/frequency of the words/tokens is then displayed using a bar chart, pie chart, word cloud, network graph, length histogram, and frequency distribution. An output TXT with listed token counts is also generated during the process. Feel free to use, share, and modify this script to suit your needs. <(˶ᵔᵕᵔ˶)>

Please let me know if you have any questions, comments, or concerns!

klromans557/Visualize-DataSet-Captions: A relatively simple Python script built with the help of GPT-4o. Its purpose is to look through the captions in your dataset folder and display information about the tokens in 5+1 graphs. (github.com)

Changelog:

[2.1] - 12/10/2024

A few small changes to improve the user experience.

Added

New install BAT to set up local venv and create captions folder.

New run BAT to use script and activate venv.

Changed

Main script now uses the local captions folder to look for the TXT files. Should still be able to keep images and captions together.

[2.0] - 7/22/2024

Removed

One Bar chart as it contained redundant information already present in the pie chart.

Added

Network Graph of the Top N Tokens to highlight co-occurrences within the Top N tokens.

Fixed

Chart titles/labels that were not correct

Expanded comments in python script for user convenience and clarity

[1.0] - 7/20/2024

First Release.

Requirements:

Python 3.7, or later

matplotlib

wordcloud

seaborn

networkx

See also the included requirements.txt file.

Installation:

1. Please have Python 3.7, or later, installed. You can download it from [python.org](https://www.python.org/downloads/).

2. Clone the repository, or download the attached ZIP, and go into the created folder:

git clone https://github.com/klromans557/Visualize-DataSet-Captions.git

cd Visualize-DataSet-Captions

3. Install the required dependencies:

- Use the provided install_VDC.bat, which also makes the captions folder

OR

- Run pip install -r requirements.txt, then make a captions folder yourself

4. The script can handle the images and captions being in the same folder, so feel free to keep them together.
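As a rough illustration of why a mixed folder is not a problem, here is a minimal sketch of picking out only the caption TXT files and ignoring everything else. The function name find_caption_files is my own for this example and is not taken from the script, which may collect its files differently.

```python
from pathlib import Path


def find_caption_files(folder):
    """Return the caption TXT paths in `folder`, sorted, ignoring images and other files."""
    folder = Path(folder)
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() == ".txt"  # case-insensitive extension match
    )
```

Calling find_caption_files("captions") on a folder that holds both PNG/JPG images and TXT captions would return only the TXT paths.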

Usage:

(Optional) Update the variables at the top of the script. However, the defaults work fine in most cases:

- The exlude_list has been populated with common words, but feel free to change these.

- Set the num_loaders based on the number of CPU cores/threads to use in parallel processing.

- Set the top_n value to change the number of top N tokens used in graph calculations.

- Set the network_index to select which of the top N token nodes to emphasize in the network graph (e.g., most common = 1, least common = top_n).

- Change the output_file text to set the name of the output TXT file with token counts. Note: this file is created in the same directory as the script when run.

Place captions in the captions folder. Run the script using the included run_VDC.bat file.

If the token_counts.txt file already exists, the script will skip the token-counting step and proceed to the graphs. Keep that in mind if you change any of your dataset TXT files; you will need to delete/rename this file and re-run the script to update any changes.

The script will display the following graphs:

A bar chart showing the relative frequency of the top N tokens (normalized to all tokens).

A pie chart showing the relative frequency of the top N tokens (normalized to top N tokens).

A word cloud of the top N tokens.

A network graph of the co-occurrences in the top N tokens.

A histogram of token lengths.

A frequency distribution plot of token frequencies.
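To make the difference between the bar chart and pie chart normalizations concrete, here is a small sketch of the underlying arithmetic, along with one simple way to count co-occurrences for a network graph. Everything in it is an assumption for illustration: the function names, the tokenization (lowercase, split on anything that is not a letter or digit), and the idea that two tokens "co-occur" when they share a caption are my own choices here and may not match what the script does.

```python
from collections import Counter
from itertools import combinations


def tokenize(caption):
    """Lowercase a caption and split it into word tokens (assumed tokenization)."""
    cleaned = "".join(ch if ch.isalnum() else " " for ch in caption.lower())
    return cleaned.split()


def count_tokens(captions, exclude):
    """Count every token across all captions, skipping the excluded words."""
    counts = Counter()
    for caption in captions:
        counts.update(t for t in tokenize(caption) if t not in exclude)
    return counts


def top_n_shares(counts, top_n):
    """Return (token, share_of_all_tokens, share_of_top_n_tokens) for the top N tokens."""
    top = counts.most_common(top_n)
    total_all = sum(counts.values())       # bar chart: normalized to all tokens
    total_top = sum(c for _, c in top)     # pie chart: normalized to the top N only
    if total_all == 0:
        return []
    return [(tok, c / total_all, c / total_top) for tok, c in top]


def cooccurrences(captions, tokens_of_interest):
    """Count how often each pair of the given tokens appears in the same caption."""
    wanted = set(tokens_of_interest)
    pairs = Counter()
    for caption in captions:
        present = sorted(wanted & set(tokenize(caption)))
        pairs.update(combinations(present, 2))
    return pairs


captions = [
    "photo of a woman, black hair, brown eyes",
    "photo of a woman, blonde hair, blue eyes",
    "photo of a man, black hair",
]
counts = count_tokens(captions, exclude={"photo", "of", "a"})
for token, bar_share, pie_share in top_n_shares(counts, top_n=3):
    print(f"{token}: {bar_share:.2%} of all tokens, {pie_share:.2%} of top N")
```

The pie chart shares always add up to 100%, while the bar chart shares only add up to whatever fraction of the dataset the top N tokens cover.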

Close the graph window and terminal to exit the script.
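The skip-if-exists behavior for token_counts.txt mentioned above is a simple file cache. Here is a minimal sketch of that pattern, assuming a "token: count" line format and helper names (save_counts, load_counts, get_counts) that I made up for this example; the real output file layout may differ.

```python
from collections import Counter
from pathlib import Path


def save_counts(counts, path):
    """Write one 'token: count' line per token, most common first (assumed format)."""
    lines = [f"{tok}: {n}" for tok, n in counts.most_common()]
    Path(path).write_text("\n".join(lines), encoding="utf-8")


def load_counts(path):
    """Read the 'token: count' lines back into a Counter."""
    counts = Counter()
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        tok, sep, n = line.rpartition(": ")
        if sep:  # skip lines that do not match the expected format
            counts[tok] = int(n)
    return counts


def get_counts(output_file, compute):
    """Reuse the saved counts if output_file exists; otherwise compute and save them."""
    path = Path(output_file)
    if path.exists():
        return load_counts(path)   # cached result: captions are NOT re-read
    counts = compute()
    save_counts(counts, path)
    return counts
```

This is why editing your captions has no effect until the old output file is deleted or renamed: once the file exists, the counting function is never called again.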

Wrap-up:

The inspiration for this script came from figuring out the various Python scripts within the resources/guides of SECourses (Dr. Furkan Gözükara), our resident Maestro. Many headaches and sleepless nights have been prevented, thanks to him, and that's the Toasted Toad's Truth!

Of course, I could not have done it without the help of GPT-4o. Thanks, Zedd! (>'.')>

I am grateful to both GitHub and Civitai for hosting the script and article.

[EXTRA] Batch Caption Prompt:

I was developing a universal prompt for batch captioning images using either GPT-4V/-4o (fast, fast!, paid), through an API link, or CogVLM (slow, reliable, free) run locally. Combined with either of these VLMs, this tool allows us to get a visual "feel" for the style, composition, and subject description of a given dataset. I believe VDC will be particularly useful for those experimenting with captioning large regularization datasets.

Here is an example batch captioning prompt and an example output, given in the Question:/Answer: format of some CogVLM gradio apps, which will work well with my script:

Question: Caption this image of a woman by beginning each one with the phrase, "photo of a woman". Then list out her age as young (18-30), middle age (31-50), or old (51+), complexion as fair, tanned, or dark, apparent ethnicity (Caucasian, Asian, African, Hispanic), hair color, hair style, eye color, facial expression, pose, clothing, background (with specific details), and photo style. Use comma separation and no other punctuation. Stay under 75 words.

Answer: photo of a woman, young (18-30), dark complexion, African, black hair, curly hair, brown eyes, neutral expression, sitting, plaid jacket, mustard pants, straw hat, glasses, outdoor field with dry grass and distant trees, natural light
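If you want to spot-check batch outputs against the prompt's rules before analyzing them, something like the following sketch could help. The function check_caption and its rules are my own assumptions for this example: it only tests the opening phrase, the word limit, and sentence-style punctuation, and it deliberately allows parentheses and hyphens since the example answer above uses them.

```python
def check_caption(caption, prefix="photo of a woman", max_words=75):
    """Return a list of problems found in a caption; an empty list means it passes."""
    problems = []
    if not caption.strip().lower().startswith(prefix):
        problems.append(f"does not start with '{prefix}'")
    n_words = len(caption.split())
    if n_words >= max_words:  # the prompt asks to stay under the limit
        problems.append(f"{n_words} words, expected fewer than {max_words}")
    if any(ch in caption for ch in ".;:!?"):
        problems.append("contains punctuation other than commas")
    return problems


example = (
    "photo of a woman, young (18-30), dark complexion, African, black hair, "
    "curly hair, brown eyes, neutral expression, sitting, plaid jacket, "
    "mustard pants, straw hat, glasses, outdoor field with dry grass and "
    "distant trees, natural light"
)
print(check_caption(example) or "caption looks fine")
```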

When using GPT-4V/-4o (paid API link), copy&paste only the text of the 'Question:' portion into the caption/prompt section of the app.

When using CogVLM (free gradio app), copy&paste everything up to the 'Answer:' into the caption/prompt section of the app. This includes the word 'Question:' and both colons ':'.

-- You may need to change the prompt when using different apps/models in order to get the desired output
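The two copy-and-paste rules above can also be written down as a tiny helper, in case you are scripting the captioning step. This is only a sketch: build_prompt and the app labels "gpt" and "cogvlm" are names I picked for the example, and the single space before "Answer:" is an assumption, so adjust it to whatever your app expects.

```python
def build_prompt(instruction, app):
    """Format the captioning instruction for the given app type."""
    instruction = instruction.strip()
    if app == "gpt":
        # GPT-4V/-4o: only the text of the question itself
        return instruction
    if app == "cogvlm":
        # CogVLM gradio apps: keep 'Question:' and end with 'Answer:'
        return f"Question: {instruction} Answer:"
    raise ValueError(f"unknown app type: {app!r}")


print(build_prompt("Caption this image of a woman.", "cogvlm"))
```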

The following GitHub repo contains an image captioner which can, in principle, handle both:

jiayev/GPT4V-Image-Captioner (github.com)

I used this one for the GPT API access, but I used the CogVLM gradio app of SECourses in my testing (behind his Patreon, which I believe is worth it, but not required!), so I'm not sure how well this one's Cog implementation will respond to this prompt. There are plenty of free Cog implementations out there, so feel free to mix-n-match; I've also had limited success using the Kosmos-2 VLM, though its output is much less descriptive and reliable than the other two.

Please experiment with this prompt and your VLM of choice, and please let me know what you find. I'm in GenAI for the long haul, and I'm constantly on the hunt for new things to learn.

ദ്ദി ˉ͈̀꒳ˉ͈́ )✧