First、Introduction

Many people don't know even the basics of how models work, yet claim "I can make models" and then publish a lot of garbage here. Some blindly merge models without understanding what they are doing and claim the results are very useful. There are also many so-called top-100 model authors on Civitai who make videos that spread incorrect theories and pseudo-science.

Recently, many low-quality models have gained a lot of popularity, so here is an article to explain the basic principles and provide some explanations for some hot topics.

【The article is translated throughout using Chat-GPT4.】

【Chinese-speaking users can read the Tencent Docs version; this article was translated from that original document: https://docs.qq.com/doc/p/a36aa471709d1cf5758151d68ef5b59397421b2e】

Second、Tools

This section covers downloading and installing the tools used to check and repair models. The commonly used ones are CLIP tensors checker, model toolkit, and model converter.

1.Download

These three plugins are not included in the web-ui by default, so they need to be installed first. Here are the installation steps.

Clip check tensors:iiiytn1k/sd-webui-check-tensors (github.com)

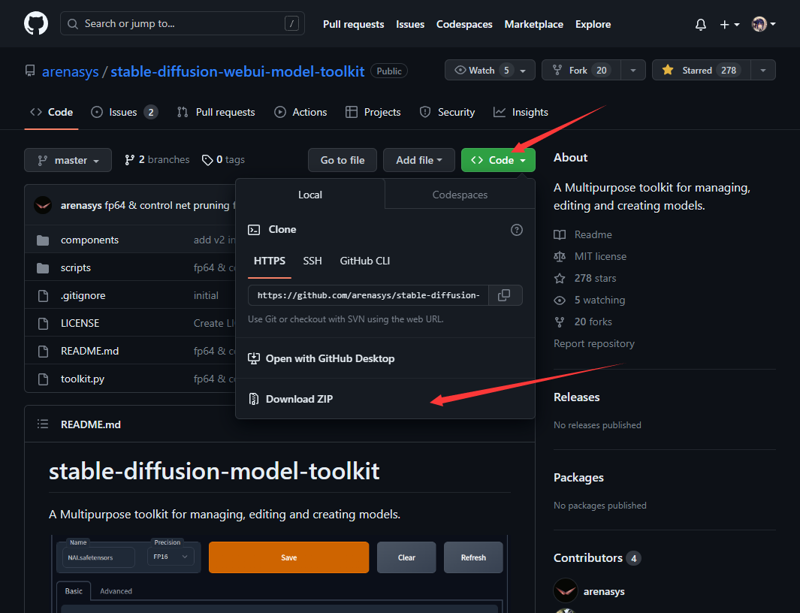

model toolkit:arenasys/stable-diffusion-webui-model-toolkit

model converter:Akegarasu/sd-webui-model-converter

a.Download directly using the Webui

Paste the repository link copied from GitHub into the WebUI's extension install field (Extensions > Install from URL) and wait for the installation to finish.

b.Download ZIP package

Click "Download ZIP" on the GitHub interface.

After extracting the archive completely, move the resulting folder into the extensions folder, i.e. <your WebUI folder>/extensions.

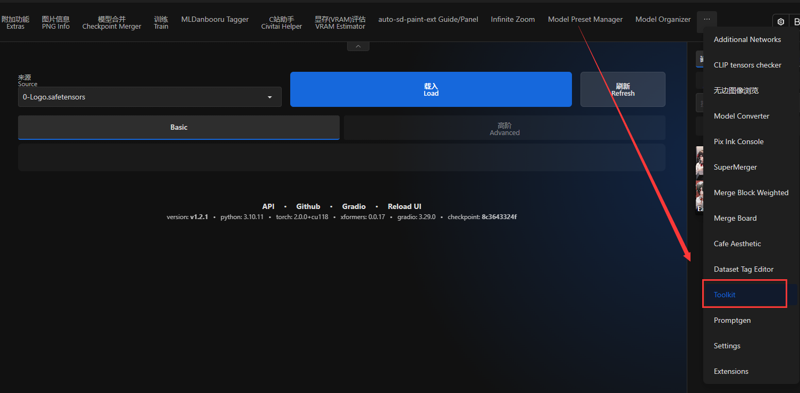

If you can see it in the top bar, it means the installation is successful. (Here, the Kitchen theme has collapsed part of the top bar.)
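For readers who prefer the command line, the manual install amounts to cloning each repository into the extensions folder. The sketch below only builds and prints the `git clone` commands; it does not run them. It assumes all three repositories are hosted on GitHub under the names listed above, and `clone_commands` and the `stable-diffusion-webui` path are illustrative placeholders.

```python
from pathlib import Path

# Repository names as listed in this article; GitHub hosting for all three is an assumption.
EXTENSION_REPOS = [
    "iiiytn1k/sd-webui-check-tensors",
    "arenasys/stable-diffusion-webui-model-toolkit",
    "Akegarasu/sd-webui-model-converter",
]


def clone_commands(webui_dir):
    """Build one `git clone` command per extension, targeting <webui_dir>/extensions."""
    extensions_dir = Path(webui_dir) / "extensions"
    commands = []
    for slug in EXTENSION_REPOS:
        name = slug.split("/")[1]
        url = f"https://github.com/{slug}.git"
        commands.append(["git", "clone", url, str(extensions_dir / name)])
    return commands


if __name__ == "__main__":
    # "stable-diffusion-webui" is a placeholder path; commands are printed, not executed.
    for cmd in clone_commands("stable-diffusion-webui"):
        print(" ".join(cmd))
```

Restart the WebUI after cloning so that the new tabs appear in the top bar.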

2.Function Description

CLIP tensors checker

Used to check the CLIP for offsets.

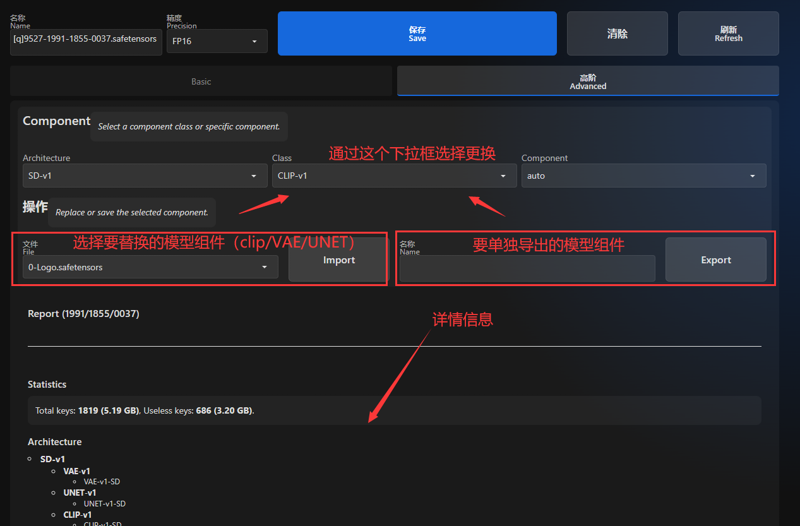

model toolkit

Used for repairing a damaged CLIP and for exporting/replacing components such as the UNet, VAE, and CLIP.

model converter

Converts model formats, repairs the CLIP, and compresses models.

Third、Model problems

For the basic principles of Stable Diffusion, please refer to:

https://lilianweng.github.io/posts/2021-07-11-diffusion-models/

1.Model evaluation system.

A model that meets all of the following criteria can be considered a good model: accurate prompts, no unnecessary details, visually appealing generated images, and a well-functioning model itself.

Accurate prompts: as the name suggests, the better the model's ability to distinguish tag prompts, the better. If the model has poor prompt recognition, it will be difficult to achieve the desired results.

Not adding unnecessary details: Refers to adding details that are not included in the prompt, which can affect the control ability of the prompt on the generated image. In model training, this means that using a large number of strongly correlated tag images in the training set will cause overfitting. For example, in the nai model, the prompt "flat chest" will force a child-like effect, and in Counterfeit-V3.0, the prompt "rain" will force an umbrella, which is also known as "level 0 pollution". It is impossible to completely avoid adding unnecessary details, but it is better to add as few as possible.

The impact varies, but some merge-model authors have deliberately amplified and stacked these overfitting effects, so that many models produce seemingly good images from very simple prompts. Some even call this a "high rate of good images". However, the details these models add beyond the prompt are mostly homogeneous and unstable, which makes them extremely poor models from a deep-learning standpoint.

For current merge models, a useful rule of thumb is: the more refined the image generated from the bare prompt "1girl", the poorer the model.

The model itself is fine: generally speaking, it refers to a model that does not contain junk data and has no issues with VAE/Clip.

Special note: LoRA compatibility has nothing to do with model quality.

Many people treat LoRA compatibility as a sign of model quality, but the two are unrelated. Some models need a large adjustment to reach a certain effect, while others need a much smaller adjustment to reach the same effect. A LoRA trained on the former and applied to the latter over-adjusts and gives poor output; a LoRA trained on the latter and applied to the former under-adjusts and does not reach the desired effect.

Some people may argue that even if a model does not obey the prompt, it is still a good model as long as the generated images look good. In that case, why carry such a large text encoder at all? You could throw it away and feed the model embeddings from a random function generator instead.

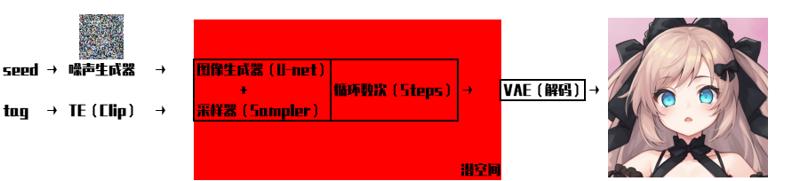

2.CLIP、UNet、VAE

TextEncoder (CLIP): In a diffusion model, the text encoder (CLIP) converts the text prompt into vectors that the rest of the model can process. It is the component that lets the text input steer image generation.

U-Net: Starting from the noise image produced by the random seed, the U-Net guides the direction of denoising: it identifies the areas that need to change and supplies the changed data.

VAE: What the model generates internally is not a normal image that humans can view. The VAE converts that output into a viewable image.
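To make the division of labor concrete, the sketch below prints the shape of the data each component produces for a given image size. The constants are the usual Stable Diffusion 1.x values (77 tokens of width 768, 4 latent channels, 8x spatial downscaling); they are assumptions added here, not figures from this article, and `component_shapes` is a hypothetical helper.

```python
# Assumed SD 1.x constants (not stated in the article).
VAE_DOWNSCALE = 8      # one latent pixel covers an 8x8 block of image pixels
LATENT_CHANNELS = 4    # the UNet works on 4-channel latents, not RGB
CLIP_TOKENS = 77       # fixed prompt length seen by the text encoder
CLIP_WIDTH = 768       # size of each token embedding


def component_shapes(width, height):
    """Return the tensor shape handled at each stage for a width x height image."""
    if width % VAE_DOWNSCALE or height % VAE_DOWNSCALE:
        raise ValueError("width and height must be multiples of 8")
    latent = (LATENT_CHANNELS, height // VAE_DOWNSCALE, width // VAE_DOWNSCALE)
    return {
        "text embedding (CLIP output)": (CLIP_TOKENS, CLIP_WIDTH),
        "latent (UNet input/output)": latent,
        "image (VAE decoder output)": (3, height, width),
    }


for stage, shape in component_shapes(512, 768).items():
    print(f"{stage}: {shape}")
```

The latent is what "not a normal image" refers to: a small 4-channel grid that only becomes a viewable RGB picture after the VAE decodes it.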

Comparison of Model Before and After Issue Fix (Above is the generated diagram after repairing the model)

3. VAE

a. The reasons for VAE issues.

When the model output image turns gray, it indicates that there is an issue with the VAE in the model, which is commonly seen in fusion models. Any merge between different VAEs can cause something to be broken in the VAE. This is why many model output images are gray and why people are keen on using an external VAE separately.

In this situation, generally, the VAE needs to be fixed in order for the model to function properly. However, the web UI provides an option for using an external VAE instead of the model's VAE during generation, which allows for ignoring the model's VAE.

For example, this type of image is a typical sign of VAE damage.

b. Replace the VAE model.

Replacing or using another VAE is not always the best solution, as some models may produce blurry or messy output images after the replacement. However, this does not mean that the original VAE cannot be used in the merged model. The Merge model can utilize the VAE from the original models before the merge, thereby avoiding various issues with the output quality.
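The idea of carrying over an original VAE instead of averaging two different VAEs can be sketched as follows. This is a toy weighted-sum merge over plain Python lists, not the code of any particular merge tool. The `first_stage_model.` prefix is the VAE key prefix used by SD 1.x checkpoints (an assumption added here), and `merge_keep_vae` is a hypothetical helper name.

```python
VAE_PREFIX = "first_stage_model."  # VAE keys in SD 1.x checkpoints (assumed)


def merge_keep_vae(model_a, model_b, alpha):
    """Weighted sum (1 - alpha) * A + alpha * B for every shared key,
    except VAE keys, which are copied from model A unchanged."""
    merged = {}
    for key, weights_a in model_a.items():
        if key.startswith(VAE_PREFIX) or key not in model_b:
            merged[key] = list(weights_a)  # keep A's values exactly
            continue
        weights_b = model_b[key]
        merged[key] = [(1 - alpha) * a + alpha * b for a, b in zip(weights_a, weights_b)]
    return merged


# Toy "checkpoints": each tensor is a flat list of floats.
model_a = {"first_stage_model.decoder.w": [1.0, 2.0], "model.diffusion_model.w": [0.0, 4.0]}
model_b = {"first_stage_model.decoder.w": [3.0, 6.0], "model.diffusion_model.w": [2.0, 0.0]}

merged = merge_keep_vae(model_a, model_b, alpha=0.5)
print(merged["first_stage_model.decoder.w"])  # unchanged copy of A's VAE weights
print(merged["model.diffusion_model.w"])      # averaged UNet weights
```

Because the VAE weights are never interpolated, the merged file contains a VAE that is bit-identical to one of the originals and cannot be broken by the merge.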

c. VAE duplication.

Some people like to rename existing VAE models (such as AnythingV4.5VAE) and use them as their own VAE models, which leads to downloading many VAE models, but upon checking the hash, they are found to be exactly the same. Here are the hash comparisons for all the VAE models in my possession:

d. Common misconceptions/mistaken statements

Therefore, models that are often accompanied by statements such as "VAE is not injected into the model and can be freely chosen", "VAE is like adding a filter", or "VAE is optional" usually have many problems.

Similarly, ranking VAEs by color depth is unscientific, as in statements like "NAI's VAE has the lightest color, and 840000VAE has the darkest color." Swapping the VAE arbitrarily does affect the output image, and with some models the output becomes blurred or the line work breaks down. If a problem-free external VAE is used and the output image still turns gray, the problem lies in the model itself and has nothing to do with the VAE.

The role of VAE is not to correct color tones or act as a "model filter".

4. CLIP damage.

a. Clip offset.

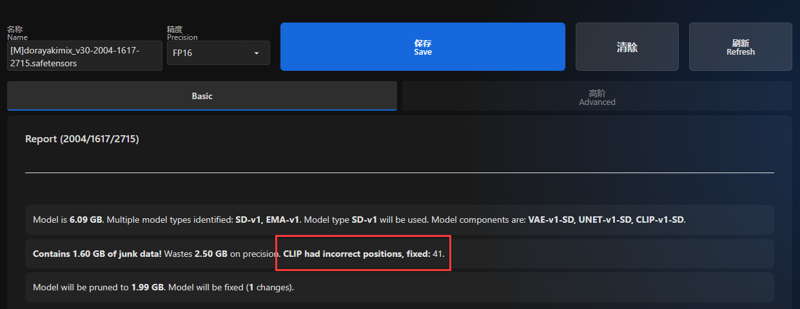

In general, "CLIP damage" means CLIP offset, like the case shown in the accompanying image.

During merging, a CLIP key called embeddings.position_ids is sometimes broken. This is an int64 tensor holding the values 0 to 76; merging converts them to float and introduces errors. For example, in AnythingV3 the value 76 has become 75.9975, which is cast back to int64 when loaded by the webui, resulting in 75. (So in the example shown, only the value 41 actually affects normal use of the model. The toolkit displays only the CLIP values that would affect normal use, which is why the two detection tools report different results.)
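The truncation effect described above can be reproduced with plain Python numbers. This is a minimal sketch, not the code of either extension: a list of floats stands in for the position_ids tensor, `int()` stands in for the float-to-int64 cast (both truncate toward zero), and `broken_positions` and `repaired_positions` are hypothetical helper names.

```python
EXPECTED_POSITIONS = list(range(77))  # 0..76, as described above


def broken_positions(position_ids):
    """Return (index, loaded_value) pairs where truncating to an integer
    no longer gives the expected position."""
    broken = []
    for index, value in enumerate(position_ids):
        loaded = int(value)  # truncation toward zero, like a float -> int64 cast
        if loaded != EXPECTED_POSITIONS[index]:
            broken.append((index, loaded))
    return broken


def repaired_positions():
    """The repair is simply to restore the exact integer sequence."""
    return list(EXPECTED_POSITIONS)


# Toy example: after a merge, two entries have drifted away from whole numbers.
merged = [float(i) for i in range(77)]
merged[76] = 75.9975   # drifted downward: truncates to 75, so position 76 is wrong
merged[10] = 10.0004   # drifted upward: still truncates to 10, so it is harmless

print(broken_positions(merged))  # prints [(76, 75)]
```

This also suggests why a tool that reports every non-integer value and a tool that reports only values that change after the cast can disagree about the same model.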

b.The impact of clip offset.

Clip offset can affect the model's understanding of the semantics of the corresponding token at a certain position. For more details, please refer to the "Smiling Face Test" experiment in the following link:

[調査] Smile Test: Elysium_Anime_V3 問題を調べる #3|bbcmc (note.com)

There was a time when almost every merged model on Civitai had this issue. CLIP corruption was extremely common, and many models may still have it today. A long time ago, people "fixed" the CLIP by pruning the model to FP16, but that is not the correct method. Today the CLIP is easy to fix with the toolkit and the other plugins.

5.Junk data

a. How junk (invalid) data is generated.

Model merging often leaves a lot of junk data in the file, data that the web UI never uses when running the model. Only the fixed set of contents the loader expects is loaded; everything else is junk. Many merged models carry a pile of this junk data, and many people leave it in because they believe removing it would affect the model itself.

[1] The biggest offender is the EMA model: after merging, the EMA weights no longer accurately reflect the UNet, which not only makes the EMA useless but also affects training on the model. If you want people to be able to use the EMA effectively, please use the trained model rather than a merge. (It is recommended to delete all EMA weights before merging, because in any merged model the EMA can be considered junk data.)

[2] Some operations that inject a LoRA into a full ckpt checkpoint generate invalid data.

[3] Other unusable data of unknown origin (there are many reasons, which will not be discussed here).

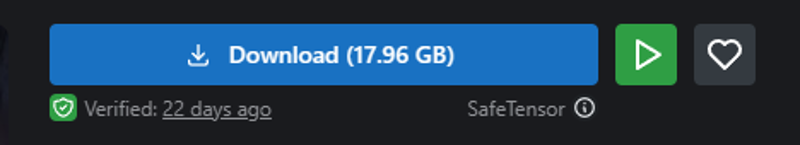

For example, the 17GB classic model contains over 10GB of junk data.
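A rough way to see where a checkpoint's bytes go is to group its keys by component prefix. The sketch below works on a plain mapping of key name to size in bytes, so it runs without loading any model. The four prefixes are the ones used by SD 1.x checkpoints (an assumption added here), and `size_by_component` is a hypothetical helper.

```python
# Key prefixes used by SD 1.x checkpoints (assumed, not listed in the article).
COMPONENT_PREFIXES = {
    "model.diffusion_model.": "UNet",
    "first_stage_model.": "VAE",
    "cond_stage_model.": "CLIP",
    "model_ema.": "EMA",
}


def size_by_component(tensor_sizes):
    """tensor_sizes maps key name -> size in bytes. Returns total bytes per
    component; keys matching no known prefix are counted as "other"."""
    totals = {}
    for key, nbytes in tensor_sizes.items():
        component = "other"
        for prefix, name in COMPONENT_PREFIXES.items():
            if key.startswith(prefix):
                component = name
                break
        totals[component] = totals.get(component, 0) + nbytes
    return totals


# Toy example with made-up sizes.
toy = {
    "model.diffusion_model.input_blocks.0.weight": 100,
    "model_ema.input_blocks0weight": 100,
    "first_stage_model.decoder.weight": 20,
    "cond_stage_model.transformer.weight": 30,
    "some.unknown.key": 5,
}
print(size_by_component(toy))
```

In a merged model, a large "EMA" total is a candidate for removal for the reasons given in [1]. A small "other" total is not automatically junk, since real checkpoints also carry a few small bookkeeping entries, but a very large one deserves a closer look.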

b. General size of the model.

Typical model sizes are 1.98GB and 3.97GB; some are 7.17GB. Unless different parts of the model are stored at different precisions, a model with an unusual size generally contains some amount of junk data.
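These sizes follow from simple arithmetic: number of weights times bytes per weight. The sketch below uses approximate SD 1.x parameter counts, which are rounded figures assumed here rather than taken from this article, and reports sizes in binary gigabytes (GiB), which is what file managers usually show as "GB". `checkpoint_size_gib` is a hypothetical helper.

```python
# Approximate SD 1.x parameter counts in millions (assumed, rounded).
PARAMS_M = {"UNet": 859.5, "CLIP": 123.1, "VAE": 83.7}
GIB = 1024 ** 3


def checkpoint_size_gib(bytes_per_weight, with_ema=False):
    """Estimate file size from parameter count and storage precision."""
    params = sum(PARAMS_M.values())
    if with_ema:
        params += PARAMS_M["UNet"]  # EMA stores a second copy of the UNet weights
    return params * 1e6 * bytes_per_weight / GIB


for label, size in [
    ("FP16", checkpoint_size_gib(2)),
    ("FP32", checkpoint_size_gib(4)),
    ("FP32 + EMA", checkpoint_size_gib(4, with_ema=True)),
]:
    print(f"{label}: {size:.2f} GiB")
```

With these rounded counts the three estimates land within about 0.01 of the 1.98GB, 3.97GB, and 7.17GB figures above, which is why a file that is far from any of them is suspicious.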

If someone tells you that bigger models are better, I suggest packing the entire webui integration package into the model and converting it to a .ckpt file.

[PS: Many models get away with this because AUTOMATIC1111's webui does not validate models, whereas diffusers itself does. Many models that contain unnecessary data would trigger errors there. However, if you submitted a pull request to add such checking, many people would likely complain that the new version is incompatible and advise against upgrading.]

6.Invalid precision.

a.It is meaningless to use higher precision in practice.

By default, the webui converts every loaded model to FP16.

So higher precision usually makes little sense: without --no-half, the higher-precision and FP16 versions of a model behave exactly the same once loaded. In fact, even with --no-half, the difference is not very significant.

Comparison of specific effects:https://imgsli.com/MTgwOTk2
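The "exactly the same" claim can be checked on a handful of numbers using only the standard library. This sketch assumes the FP16 file was produced by casting the same FP32 weights; the values are arbitrary stand-ins, and `to_fp32` and `to_fp16` are hypothetical helpers built on the `struct` module's single- and half-precision formats.

```python
import struct


def to_fp32(value):
    """Round a Python float to the nearest IEEE single-precision value."""
    return struct.unpack("<f", struct.pack("<f", value))[0]


def to_fp16(value):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


# Arbitrary stand-ins for weights stored in an FP32 file.
fp32_file = [to_fp32(w) for w in [0.1234567, -0.0009876, 1.5, 3.1415926]]

# The FP16 file is the same weights cast down once, at save time.
fp16_file = [to_fp16(w) for w in fp32_file]

# Loading in half precision casts whatever is in the file to FP16.
loaded_from_fp32_file = [to_fp16(w) for w in fp32_file]
loaded_from_fp16_file = [to_fp16(w) for w in fp16_file]  # already FP16: cast is a no-op

print(loaded_from_fp32_file == loaded_from_fp16_file)  # prints True
```

The extra digits kept by the FP32 file are discarded at load time, so they only take effect when the model is run with --no-half.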

b.annoying float64

Generally speaking, the most common problem is caused by the old version of the SuperMerge plugin (which has been fixed in the new version). Through detection, it was found that there were quite a few float64 weights mixed in a 3.5GB "FP16" model, which resulted in the model having a strange size.
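For models saved in the .safetensors format, stray float64 weights can be spotted without loading the model, because the file begins with a JSON header that lists every tensor's dtype and byte range. The sketch below reads only that header using the standard library. It does not apply to .ckpt files, and `dtype_bytes` is a hypothetical helper, not part of any extension.

```python
import json
import struct
from collections import Counter


def dtype_bytes(path):
    """Read only the header of a .safetensors file and return bytes per dtype."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))  # little-endian u64 header size
        header = json.loads(f.read(header_len))
    totals = Counter()
    for name, info in header.items():
        if name == "__metadata__":  # optional free-form metadata, not a tensor
            continue
        start, end = info["data_offsets"]
        totals[info["dtype"]] += end - start
    return dict(totals)
```

A model advertised as FP16 should report almost all of its bytes under "F16"; a noticeable "F64" or "F32" total would explain an unusual file size.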

7.Unet

Model merging mostly merges the UNet, and the state of the UNet can sometimes cause seriously inaccurate prompt following or excessive added detail. Some models follow prompts more accurately after certain layers are removed or replaced; for example, after some of the "in" layers of the Tmnd model were replaced, its prompt accuracy improved considerably.

With some models, the generated images become blurry when the prompt is complex (for example, a prompt with many details), which is also a UNet problem. Many people blame this on newer versions of the web UI, but it is in fact a problem with the model itself.

However, blurry images are not necessarily caused by UNet.

Fourth、Hot topics

1. Model Homogenization.

a. Explanation.

Most Merge models are a combination of several fixed high-download models. They can be classified according to the output effect, such as the AOM, pastel, Anything, and cf series, or they can be simply a combination of these models (such as Anything4.5). Many common series models can be easily identified as belonging to a certain type. This part of the fusion models is highly homogeneous.

b. How to identify high-download models and fusion models that are primarily based on them.

The specific effect of the AOM model is that there is a kind of oiliness on the surface of the character's body (this is also what people outside the circle often refer to as "AI flavor" recently).

The PastelMIX model has some of the poorest prompt accuracy of any model, even when its CLIP has no offset, and it often fails to follow prompts at all. The PastelMIX series has a very recognizable background style, mostly made up of assorted mixed color blocks.

The Anything model is an ancient merged model. AnythingV3 has poor prompt accuracy and also adds messy details. Both the V3/V5 releases themselves and the models merged from them show varying degrees of problems with clothing wrinkles, skin wrinkles, and hair rendering.

The features of the cf3 series are not very obvious, and it takes some familiarity to be able to recognize them at a glance.

2.The download count of models on the Civitai platform highly depends on updates.

Hastily merged models are updated every few days, while truly good models are hard to update or have little room left for improvement; a single release may be enough. Civitai's browsing layout rewards frequent updates, so models that update often gain more popularity.

What we can do is to make good use of the star rating function, and give a 1-star negative review for models that are very poor.

On CIVITAI, we often see some very poor models. Due to issues such as face-saving or social pressure, some people give them a full-star rating instead of criticizing them. They are afraid to give a 1-star negative review, and feel obliged to praise everything that is presented to them. This is not a good phenomenon, as insincere praise is not beneficial to anyone.

3. Blurry Image

Some people may feel that generated images have become blurry recently. This is not a problem with the web UI, nor is it something that only high-resolution fix can solve. If you run into it, check the VAE first. Some damaged VAEs produce blurry output by themselves, and in other cases the VAE is simply a poor match for the model. If every VAE you test gives blurry results, the problem is in the model itself, which is common in merged models (especially mbw merge models).

Note: Many of your VAEs are likely the same, just with different names, so please check the hash value of the VAE before testing.
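Checking for renamed duplicates is easy to automate: hash every file in the VAE folder and group the names that share a hash. This is a minimal sketch using the full SHA-256 of each file; the folder path is whatever your VAE folder is, and `sha256_of` and `duplicate_groups` are hypothetical helpers.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large models do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def duplicate_groups(folder):
    """Group the files in `folder` by content hash; return only groups
    that contain two or more file names."""
    by_hash = defaultdict(list)
    for path in sorted(Path(folder).iterdir()):
        if path.is_file():
            by_hash[sha256_of(path)].append(path.name)
    return [names for names in by_hash.values() if len(names) > 1]
```

Each returned group is one VAE under several names, so only one file per group needs to be tested.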

4. Easynegative Emb

It is not recommended to use Easynegative Emb in any model other than counterfeit2.5/3.0.

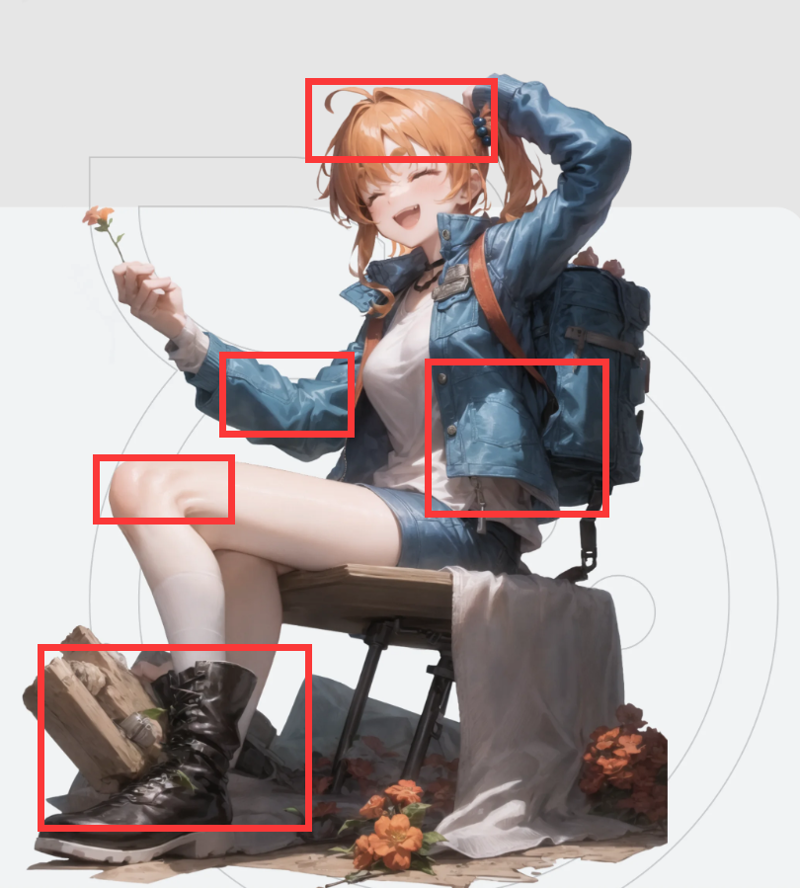

a. Affects the generated image.

As for why it is not recommended, you will know it by testing it. Easynegative can affect composition, coloring, clothing texture, and many other things. When testing a model with EasyNegative, it will seriously affect your judgment of the model.

The front part is the normal negative prompt, and the back part is the use of EasyN (EasyNegative embedding, abbreviated as EasyN).

The former did not use EasyN, while the latter did. It can be clearly seen that the image generated using EasyN has lost its stylization.

b. Impact on the accuracy of prompt words.

EasyN significantly affects prompt accuracy and also alters the texture of some models, so it is best avoided where possible. When writing a new tag string, negative prompt words are typically added last, because they inevitably have some impact on the image and much of that impact is unpredictable, which can make the desired effect impossible to achieve. In fact, in the days of the "元素法典" (roughly, "Codex of Elements"), the general practice was to use negative prompts only as a supplement, when the generated image contained unwanted elements.

Universal negative prompts (such as the one below) can cause significant effects, sometimes making it impossible to achieve the desired result no matter how much adjustment is made. They can also cause some prompt words to become ineffective. The effect of EasyN is equivalent to that of these fixed negative prompts. Moreover, other quick negative prompts can also have the same effect.

Negative prompt: (mutated hands and fingers:1.5 ),(mutation, poorly drawn :1.2), (long body :1.3), (mutation, poorly drawn :1.2) , liquid body, text font ui, long neck, uncoordinated body,fused ears,(ugly:1.4),one hand with more than 5 fingers, one hand with less than 5 fingers

Of course, if it's just a single prompt word or a few simple prompt words drawn from a deck, or if you're using a model that is not very accurate with prompt words, then using EasyN as a quick prompt can be a very convenient operation.