FLUX.1 Model Quantization Comparison and User Guide

The FLUX.1 language model is available in various quantized versions, each offering different trade-offs between model size, inference speed, and output quality. This guide will help you choose the right version for your needs.

Quantization Comparison Table

Choosing the Right Model

For Maximum Quality (flux1-dev-F16)

Use when accuracy is critical and computational resources are abundant.

Ideal for research or applications where output quality is paramount.

For High Quality with Optimization (flux1-dev-Q8_0)

Offers near-original quality with some performance gains.

Good for applications requiring high accuracy but with some resource constraints.

For Balanced Performance (flux1-dev-Q5_1 or flux1-dev-Q5_0)

Recommended for most general-purpose applications.

Provides a good balance between output quality and inference speed.

For Fast Inference (flux1-dev-Q4_1)

Use when speed is important but some level of accuracy must be maintained.

Suitable for interactive applications or processing larger amounts of text.

For Maximum Speed and Minimum Size (flux1-dev-Q4_0)

Best for mobile applications, edge devices, or scenarios with strict resource limitations.

Prioritizes speed and efficiency over maximum accuracy.

Advice for Users

Testing: If possible, test different quantizations on your specific hardware with your intended use case. Performance can vary depending on the hardware and specific application.

Resource Constraints: Consider your available RAM and storage. Larger models offer better quality but require more resources.

Application Requirements: Assess whether your application prioritizes speed (e.g., real-time interactions) or accuracy (e.g., critical decision-making tools).

Iterative Approach: Start with a balanced model like Q5_0 and adjust based on performance and output quality in your specific use case.

Remember, the "best" model depends on your specific requirements and constraints. Always evaluate the trade-offs between size, speed, and quality in the context of your project.

the list of official GGUF models :

from the master of GGUF models

https://huggingface.co/city96/FLUX.1-dev-gguf/tree/main

https://huggingface.co/city96/FLUX.1-schnell-gguf

the list of my merged GGUF models:

https://civitai.com/models/682369/bernoulli

https://civitai.com/models/661102?modelVersionId=739814

run T5 GGUF to save more GPU:

https://civitai.com/models/668417/t5gguf

My personal workflow

https://civitai.com/models/658101

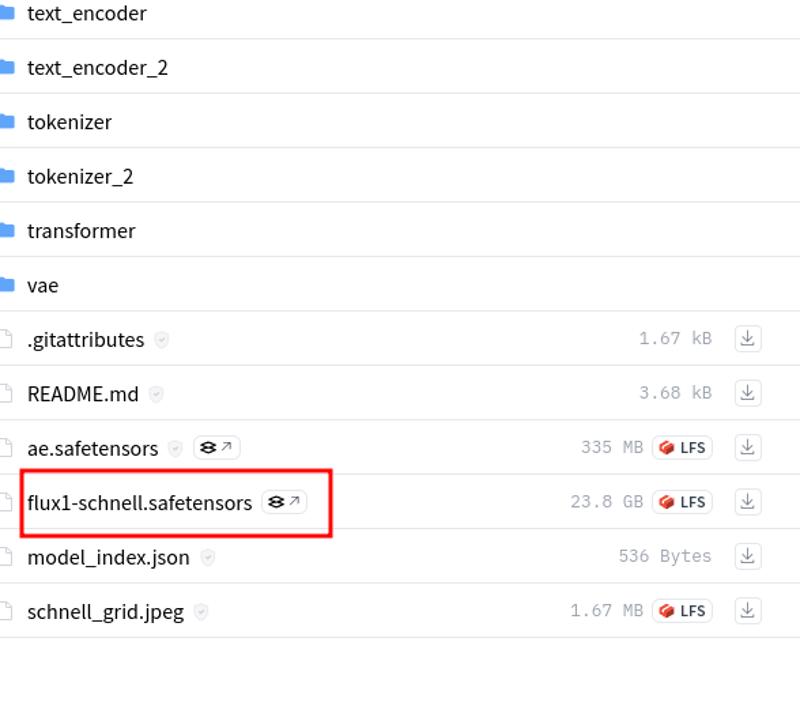

How to use or convert any model in GGUF format

model ex could be used

https://huggingface.co/black-forest-labs/FLUX.1-schnell/tree/main