UPDATE: After some testing, I think captioning images for LoRA training might be obsolete for Flux, since training a LoRA with only a single activation token has produced excellent results. I will leave this up for posterity, but keep in mind that you may not need it at all.

See this article for more info: https://civitai.com/articles/6792/flux-captioning-differences-training-diary

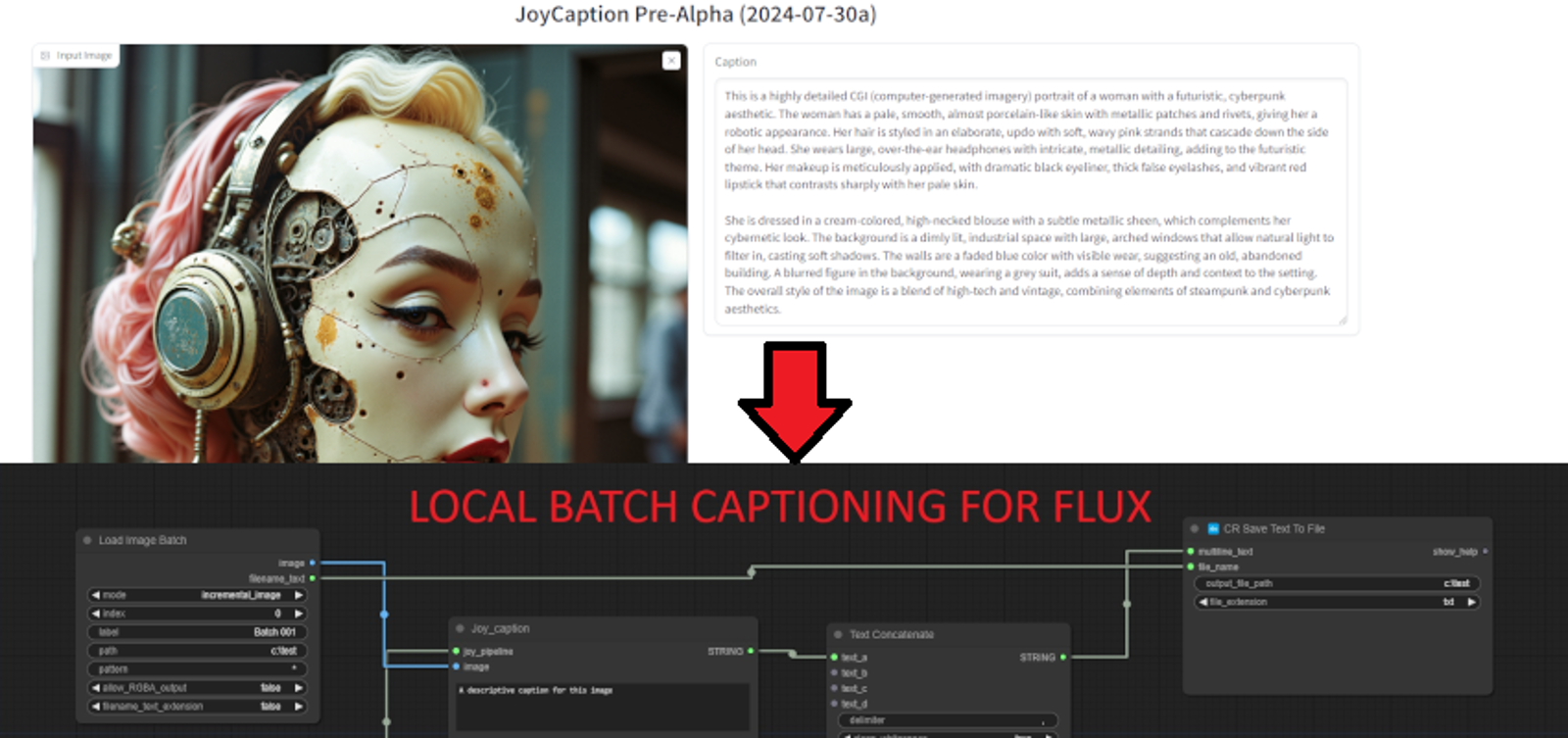

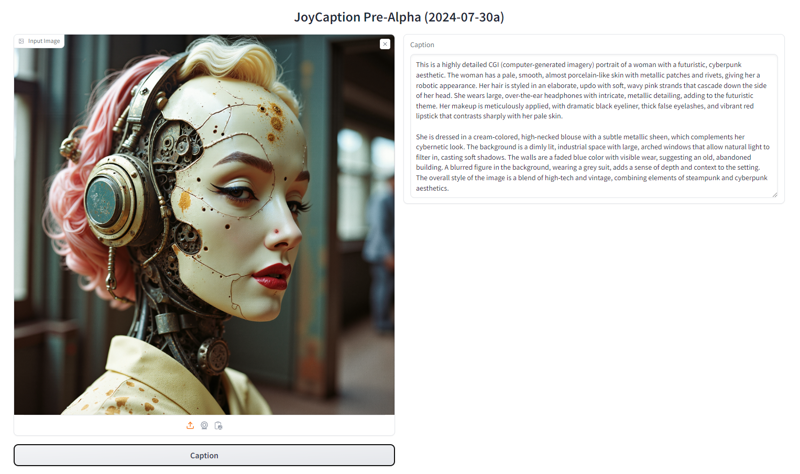

JoyCaption is excellent for captioning images for Flux.1 Dev training, or just getting a Flux.1 dev

You can use it for free online here: https://huggingface.co/spaces/fancyfeast/joy-caption-pre-alpha

However, if you want to batch process images for training, dragging each image into the site one by one is tedious, and you only get limited free compute time on Huggingface. Fortunately, you can do it all locally inside ComfyUI.

Steps:

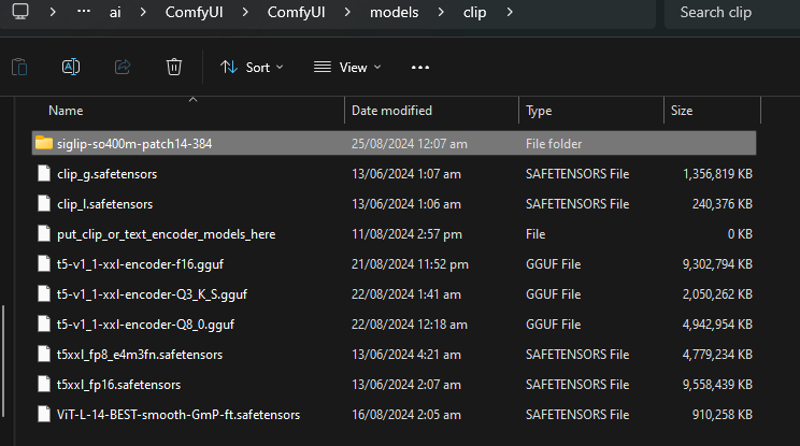

Clone the following CLIP model into your ComfyUI\models\clip\ directory:

git clone https://huggingface.co/google/siglip-so400m-patch14-384

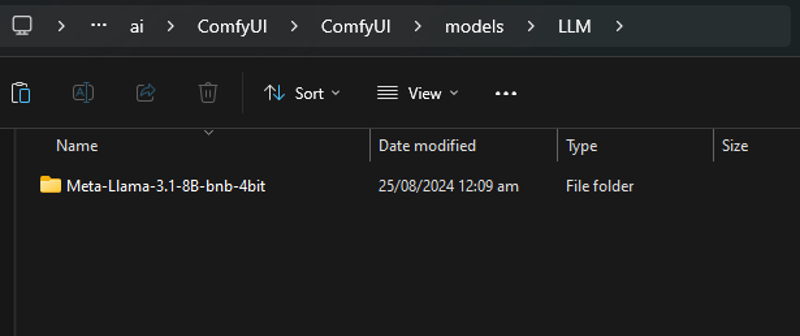

Create a new folder in ComfyUI\models\ called LLM, then clone the Llama model inside it:

git clone https://huggingface.co/unsloth/Meta-Llama-3.1-8B-bnb-4bit

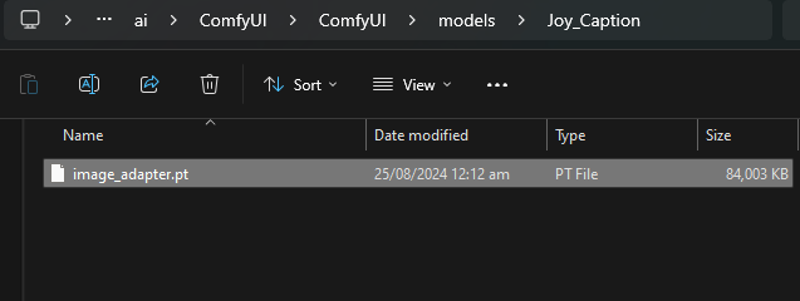

Create a new folder in ComfyUI\models\ called Joy_Caption and download image_adapter.pt into it from:

https://huggingface.co/spaces/fancyfeast/joy-caption-pre-alpha/tree/main/wpkklhc6
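Before loading the workflow, it can help to confirm that the three downloads ended up where the steps above put them. The following is only a minimal sketch: the clone folder names are the defaults git uses for those repositories, while placing image_adapter.pt directly inside Joy_Caption (rather than in a subfolder) is an assumption, and `missing_model_paths` is a hypothetical helper name.

```python
from pathlib import Path

# Relative paths under ComfyUI/models that the steps above should produce.
# The clone folder names are git's defaults for those repositories; putting
# image_adapter.pt directly inside Joy_Caption is an assumption.
EXPECTED_PATHS = [
    Path("clip") / "siglip-so400m-patch14-384",
    Path("LLM") / "Meta-Llama-3.1-8B-bnb-4bit",
    Path("Joy_Caption") / "image_adapter.pt",
]


def missing_model_paths(models_dir):
    """Return the expected paths that do not exist under models_dir."""
    models_dir = Path(models_dir)
    return [p for p in EXPECTED_PATHS if not (models_dir / p).exists()]
```

Calling `missing_model_paths(r"C:\ComfyUI\models")` (swap in your own install path) returns an empty list when everything is in place.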

Then save the workflow script as a .json file and drag it into ComfyUI. Any nodes you don't have will be shown in red. If you have ComfyUI Manager (you should), you can use it to download the missing nodes.

Restart ComfyUI and load the workflow again.
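If you would like to see which node types a workflow file expects before loading it, a rough sketch like the one below can list them. It assumes the file was exported from the ComfyUI interface, where the JSON has a top-level "nodes" list and each entry has a "type" field; `node_types` is a hypothetical helper, not part of ComfyUI.

```python
import json
from collections import Counter


def node_types(workflow_text):
    """Count node types in a workflow exported from the ComfyUI interface.

    Assumes a top-level "nodes" list whose entries carry a "type" field.
    """
    workflow = json.loads(workflow_text)
    nodes = workflow.get("nodes", [])
    return Counter(node.get("type", "<unknown>") for node in nodes)
```

Read your saved .json file as text, pass it to `node_types`, and compare the names against what ComfyUI Manager reports as missing.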

Some notes:

All your images should be named the same way, e.g. image-001, image-002, etc.
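Renaming a folder of images by hand gets tedious, so here is a small sketch that numbers them in the image-001 style. The extension list and the helper names `plan_renames` and `apply_renames` are my own assumptions; try it on a copy of your dataset first.

```python
from pathlib import Path

# Extensions treated as images; adjust to match your dataset (assumption).
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def plan_renames(folder, prefix="image"):
    """Return (old_path, new_path) pairs numbering images prefix-001, prefix-002, ..."""
    folder = Path(folder)
    images = sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )
    return [
        (p, folder / f"{prefix}-{i:03d}{p.suffix.lower()}")
        for i, p in enumerate(images, start=1)
    ]


def apply_renames(plan):
    """Rename in two passes so a new name never lands on a file not yet moved."""
    staged = []
    for i, (old, new) in enumerate(plan):
        temp = old.with_name(f"__rename_tmp_{i}{old.suffix}")
        old.rename(temp)
        staged.append((temp, new))
    for temp, new in staged:
        temp.rename(new)
```

Inspect the list returned by `plan_renames(your_folder)` before passing it to `apply_renames`, since the renames are not reversible by this sketch.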

You must create the output directory first; the workflow will not do it for you.
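If you script your dataset prep, the output folder can be created up front with a couple of lines. This is just a sketch, and `ensure_output_dir` is a hypothetical helper name; the folder path is whatever you plan to enter in the workflow.

```python
from pathlib import Path


def ensure_output_dir(path):
    """Create the caption output folder (and any parents) if it is missing."""
    out = Path(path)
    out.mkdir(parents=True, exist_ok=True)
    return out
```

Running it twice is harmless, because `exist_ok=True` makes the call a no-op when the folder already exists.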

JoyCaption is excellent, but it's not perfect. I recommend you look at each output file and compare it to the image it captioned, and make manual edits for better training.
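To make that review pass a little easier, the sketch below pairs each image with its caption so you can read them side by side and spot any image that got no caption. It assumes each caption is saved as a .txt file with the same base name as its image, which may not match how your workflow names its output; `captions_to_review` is a hypothetical helper.

```python
from pathlib import Path

# Extensions treated as images; adjust to match your dataset (assumption).
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def captions_to_review(image_dir, caption_dir):
    """Return (image_name, caption_text) pairs; None marks a missing caption.

    Assumes captions are stored as <image stem>.txt inside caption_dir.
    """
    pairs = []
    for image in sorted(Path(image_dir).iterdir()):
        if image.suffix.lower() not in IMAGE_EXTENSIONS:
            continue
        caption_file = Path(caption_dir) / f"{image.stem}.txt"
        if caption_file.is_file():
            text = caption_file.read_text(encoding="utf-8").strip()
        else:
            text = None
        pairs.append((image.name, text))
    return pairs
```

Looping over the result and printing each pair gives you a quick checklist to work through while you make manual edits.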

It uses about 9GB of VRAM while captioning, so keep this in mind.
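On an NVIDIA card you can check how much VRAM is free before starting a batch. The sketch below asks `nvidia-smi` for free memory per GPU and compares it with the roughly 9GB figure above; the helper names are hypothetical, the threshold is only a rough guide, and it will not work on systems without the NVIDIA driver tools installed.

```python
import subprocess


def parse_free_mib(nvidia_smi_output):
    """Parse one free-memory value (MiB) per GPU from nvidia-smi CSV output."""
    return [int(line) for line in nvidia_smi_output.splitlines() if line.strip()]


def query_free_mib():
    """Ask nvidia-smi for free memory per GPU; requires an NVIDIA driver."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.free", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_free_mib(result.stdout)


def enough_vram(free_mib, needed_gib=9.0):
    """Return True if free_mib covers the rough requirement in GiB."""
    return free_mib >= needed_gib * 1024
```

For example, `[enough_vram(free) for free in query_free_mib()]` gives one True/False per GPU.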

I take no credit for any of this, I just compiled all the resources into one place.

Real Credits:

JoyCaption on Huggingface.

StartHua on Github

Previous_Power_4445 on Reddit for the workflow.

ltdrdata on Github

WASasquatch on Github

suzie1 on Github

comfyanonymous on Github

unsloth on Huggingface

google on Huggingface

Anon