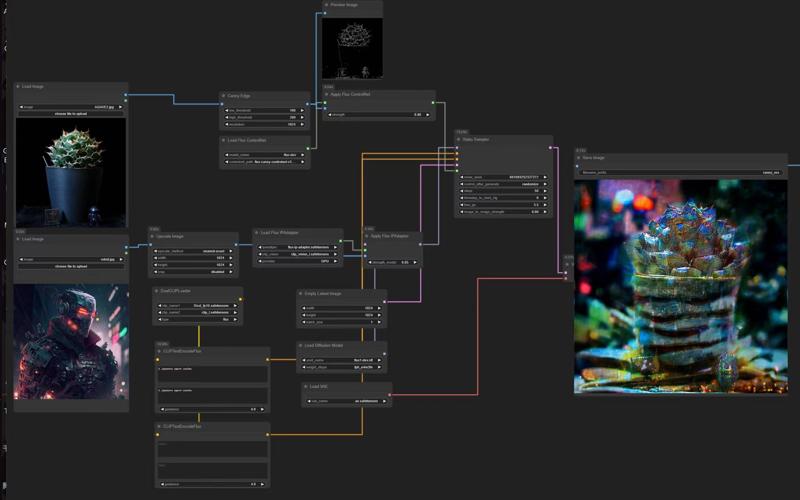

It's great to finally have an IPAdapter node for Flux! However, it doesn't work well yet, at least not for style transfer. To get the effect I wanted, I tried a mixed approach: Flux IPA combined with Flux's much stronger native img2img. I finally got something working.

Workflow here

The catch? You need to manually tune ControlNet, IPA and img2img for every use case. It's like an impossible triangle: you have to balance composition, style accuracy, and image quality.

The workflow is quite simple:

1. ControlNet for composition control. (I used Canny in the sample workflow, but you can swap in Depth or HED if you prefer.)
2. IPAdapter for style transfer. (To be honest, the current IPAdapter isn't very powerful yet, at least not for style transfer.)
3. Img2Img to further strengthen the style transfer. (It does a good job of keeping the lighting and color tones of the image relatively consistent.)

By combining these three, you can achieve the general goal of style transfer. I've included some comparisons below.

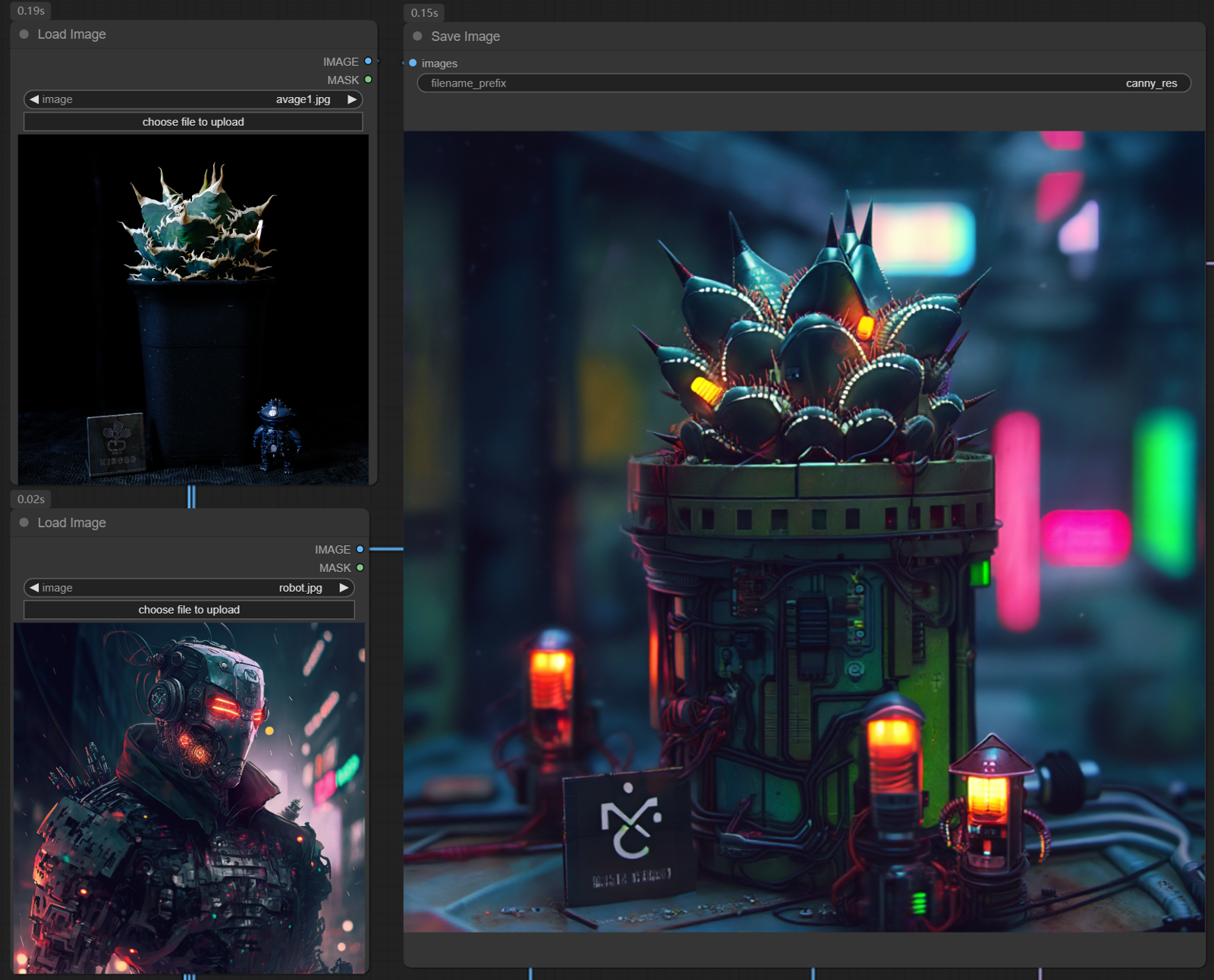

Example 1: ControlNet + IPA + Img2Img combined. Although the details (like the highly mechanical feel and the electronic components) are not fully transferred, the style is similar and the color tone is very close (thanks to img2img). The image is usable. (Using IPA alone is worse; more examples below.)

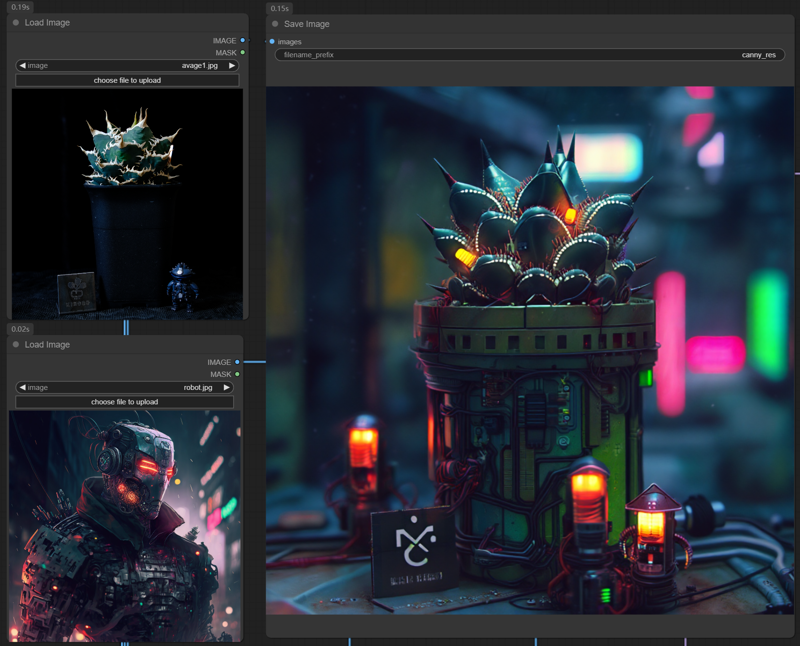

Example 2: Also ControlNet + IPA + Img2Img combined. With the Img2Img weight around 0.2 to 0.3, we begin to lose control of the image composition.

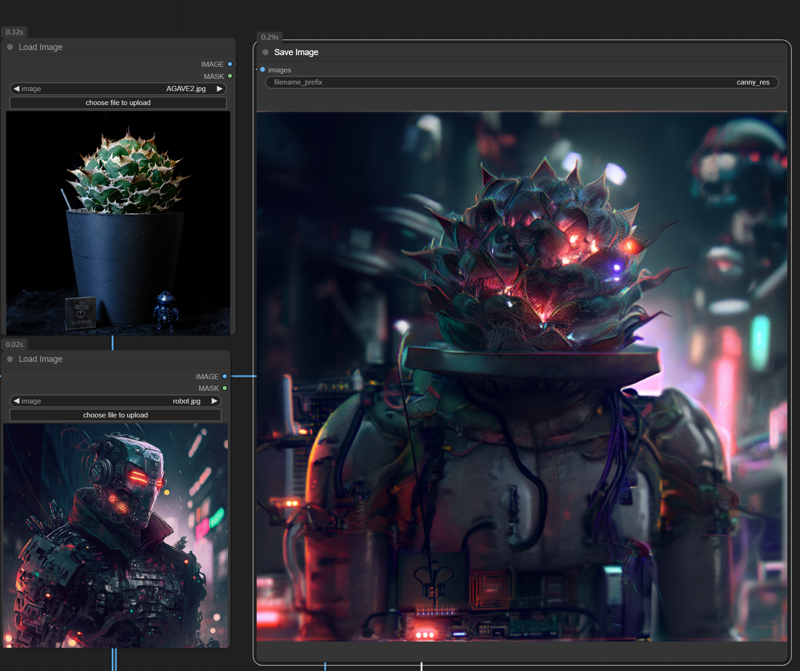

Example 3: ControlNet + IPAdapter only. This doesn't always give the desired style coherence; usually either the art style or the color tone is off. In this case, the art style is quite different, and the background style is off too.

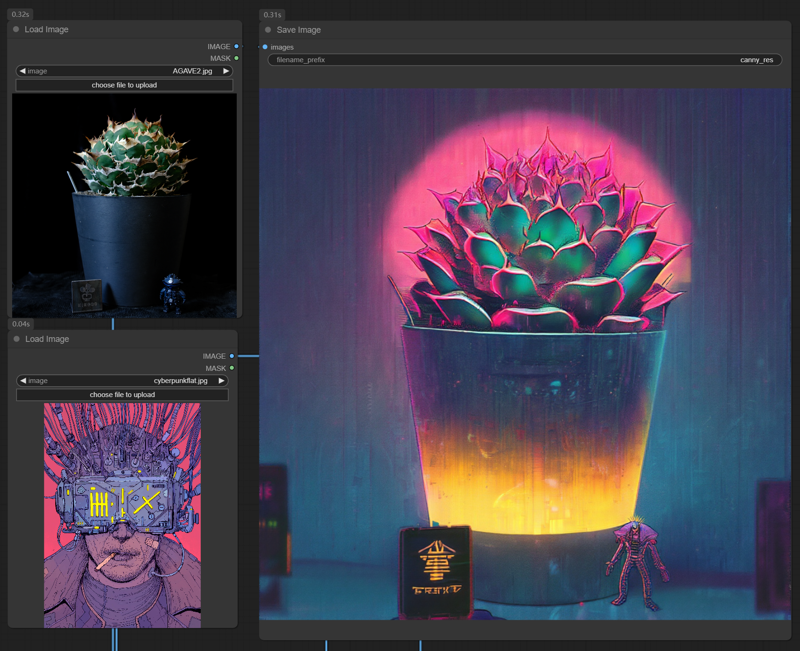

Example 4: IPA + ControlNet only. Setting the IPA weight too high just produces an overly noisy image that isn't really usable.

Here's a summary of how I tuned the strength of each node:

ControlNet strength: I tend to keep it above 0.7. The stronger the subject control you need, the higher you should set it.

IPA strength: Doesn't need to be very high. In theory, the higher the IPA strength, the more style transfer you get; in practice, it hurts image quality and the transfer effect still isn't great. So I suggest keeping it below 0.5.

Img2Img weight: Compared to IPA, Img2Img contributes more to overall image quality and to matching the lighting and color of the reference. I set it around 0.1. If you go up to 0.2, the composition may start drifting out of control.

Prompt: This is also crucial. Since you can't set the IPA and Img2Img weights too high (doing so increases noise and reduces detail), a well-matched prompt, especially a style prompt, can go a long way toward better images. I used WD14 Tagger to extract prompts from the reference images.
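If it helps, the rules of thumb above can be written down as a quick checklist. Below is a minimal Python sketch, assuming you track the three strengths yourself. `StyleTransferSettings`, `check_settings` and `build_prompt` are hypothetical helpers, not ComfyUI or WD14 Tagger APIs. The thresholds are just the numbers from this post, while the 0.35 tag-confidence cutoff and putting the style prompt first are my own assumptions.

```python
from dataclasses import dataclass


@dataclass
class StyleTransferSettings:
    """Hypothetical container for the three strengths tuned in this workflow.
    Field names are illustrative, not actual ComfyUI node inputs."""
    controlnet_strength: float
    ipa_strength: float
    img2img_weight: float


def check_settings(s: StyleTransferSettings) -> list[str]:
    """Return warnings based on the rules of thumb above (empty list = within range)."""
    warnings = []
    if s.controlnet_strength < 0.7:
        warnings.append("ControlNet strength below 0.7: composition control may be weak")
    if s.ipa_strength >= 0.5:
        warnings.append("IPA strength at or above 0.5: expect more noise and less detail")
    if s.img2img_weight >= 0.2:
        warnings.append("Img2Img weight at or above 0.2: composition may start drifting")
    return warnings


def build_prompt(style_prompt: str, tagger_tags: dict[str, float],
                 threshold: float = 0.35) -> str:
    """Assumes tagger output is available as a hypothetical {tag: confidence} dict.
    Keeps tags at or above the (assumed) threshold, highest confidence first,
    and puts the style prompt at the front."""
    kept = sorted((tag for tag, conf in tagger_tags.items() if conf >= threshold),
                  key=lambda tag: tagger_tags[tag], reverse=True)
    return ", ".join([style_prompt, *kept])


print(check_settings(StyleTransferSettings(0.8, 0.4, 0.1)))  # []
print(build_prompt("cyberpunk illustration",
                   {"robot": 0.9, "mechanical": 0.7, "outdoors": 0.2}))
```

The checker only flags settings outside the ranges I found workable; it can't tell you the right values for a given image, so you still have to eyeball the results.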

Finally, Flux's node ecosystem is still maturing. If image quality is your priority, combining it with a more mature Stable Diffusion-based IPA might be better. If you want a quick and easy workflow, ControlNet + Img2Img alone may work too. Lmk what you guys think, cheers!