Let's talk about how you can edit a picture. Suppose we have this picture of an anime woman wearing a straw hat:

Let's say I want to remove the hat. How can I do it? Inpainting is the first thing that comes to mind, so let's try it.

Prompt:

1girl, upper body, outdoors, orange hair, long hair, sky

Negative prompt:

3d, censored, muscular, swimsuit, straw hat

Steps: 30, Sampler: DPM++ 2M SDE, Schedule type: Karras, CFG scale: 7, Model: autismmixSDXL_autismmixConfetti, Denoising strength: 1
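If you prefer to script this step, the same settings can be written as a request body for the web UI's HTTP API. This is a minimal sketch, assuming Forge (or Automatic1111) is started with the `--api` flag and exposes the A1111-style `/sdapi/v1/img2img` endpoint; `build_inpaint_payload` and `to_b64` are hypothetical helper names, and the field names should be checked against the `/docs` page of your own install. The mask is a PNG where white marks the area to repaint (the hat).

```python
import base64
import json


def to_b64(png_bytes: bytes) -> str:
    """Base64-encode raw PNG bytes; the API takes images as base64 strings."""
    return base64.b64encode(png_bytes).decode("ascii")


def build_inpaint_payload(image_png: bytes, mask_png: bytes) -> dict:
    """Hypothetical helper: this article's inpainting settings as an img2img request body."""
    return {
        "prompt": "1girl, upper body, outdoors, orange hair, long hair, sky",
        "negative_prompt": "3d, censored, muscular, swimsuit, straw hat",
        "steps": 30,
        "sampler_name": "DPM++ 2M SDE",
        "scheduler": "Karras",  # "Schedule type" in the UI; assumes a recent build
        "cfg_scale": 7,
        "denoising_strength": 1.0,  # fully regenerate the masked area
        "init_images": [to_b64(image_png)],
        "mask": to_b64(mask_png),  # white pixels = area to repaint
        "override_settings": {
            "sd_model_checkpoint": "autismmixSDXL_autismmixConfetti",
        },
    }


# Placeholder bytes; in practice read the original image and the hat mask from disk.
body = json.dumps(build_inpaint_payload(b"<original png>", b"<mask png>"))
```

The JSON string would then be POSTed to `http://127.0.0.1:7860/sdapi/v1/img2img` (the default local address). Sending it is left out so the sketch runs without a server.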

Welp.

The result doesn't look good. Why? The model can't figure out the shape of the head on its own. Fortunately, there is a well-known way to guide the generation process.

ControlNet Lineart to the rescue!

Just extract the lineart from the image, erase the hat, and draw the shape of the head in any image editing software (Photoshop, I mean Photoshop).

Easy.

If you don't know how to extract lineart from an image, here's how to do it in the Forge web UI:

Click the button.

Once you've drawn the desired shape, put the edited control image back into ControlNet Lineart. Be careful with the settings, though:

Preprocessor: none, because we use the edited control image itself.

Model: Mistoline (works well with SDXL and Pony models).
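For scripted use, the same unit settings can be sketched as a dictionary. This assumes the unit format of the sd-webui-controlnet API (`enabled`, `module`, `model`, `image`, `weight`, `guidance_start`, `guidance_end`); Forge's built-in ControlNet may differ in detail. `lineart_unit` is a hypothetical helper, and the Mistoline model name is a placeholder for whatever name appears in your model dropdown.

```python
import base64


def lineart_unit(edited_lineart_png: bytes, model_name: str = "mistoLine_rank256") -> dict:
    """Hypothetical helper: a ControlNet Lineart unit fed with the hand-edited lineart.

    model_name is a placeholder; use the exact name shown in your model dropdown.
    """
    return {
        "enabled": True,
        # No preprocessor: the edited lineart already is the control image.
        # Spelling may differ between builds ("none" vs "None").
        "module": "none",
        "model": model_name,
        "image": base64.b64encode(edited_lineart_png).decode("ascii"),
        "weight": 1.0,  # assumed default; the settings above do not specify a weight
        "guidance_start": 0.0,
        "guidance_end": 1.0,
    }
```

Under the same API assumption, units like this one go into the `alwayson_scripts["controlnet"]["args"]` list of the img2img request body.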

With ControlNet Lineart you can easily guide inpainting.

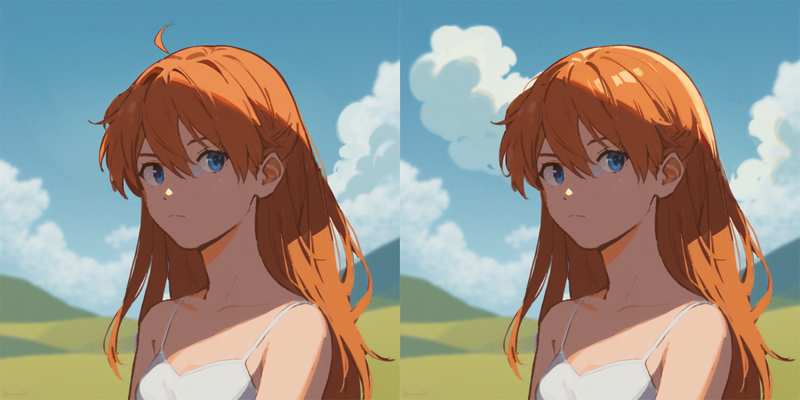

Much better.

We robbed her of her hat, and it may seem that our mission is accomplished, but...

It's not good enough.

If you look closely, you'll notice that the inpainted area lacks the brush texture present in the rest of the image. That's because the model doesn't know which style it should use for a picture like this. You can try to replicate the style with the prompt or search for LoRAs with a similar style, but there is a more straightforward approach: style transfer.

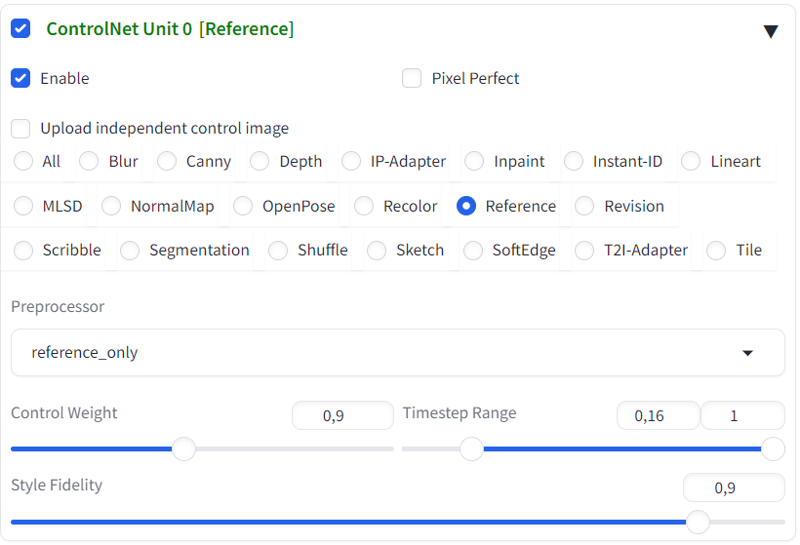

We want the model to replicate the style of the original image. In this particular case, we want low-level details, such as the texture of the inpainted area, to match the original image. For this we'll use another ControlNet: ControlNet Reference.

Activate another ControlNet unit:

ControlNet Reference is tricky. It doesn't need an additional model, but it has different implementations in Forge, Automatic1111 and ComfyUI. It works especially well with non-photorealistic SDXL models like Animagine and Pony. It is similar to IP-Adapter, but less stable and more effective at replicating high-frequency features (textures, line weight, etc.). Paired with ControlNet Lineart, it can produce almost perfect inpainting for any non-photorealistic image, as if the inpainted area had been drawn by the same artist.
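A Reference unit can be sketched the same way for scripted use. Again this assumes the sd-webui-controlnet API unit format: `reference_only` is that extension's name for the Reference preprocessor, and since Reference needs no extra model the `model` field is `"None"`. The reference image is the original, unedited picture, because that is the style we want to copy. `reference_unit` and `attach_units` are hypothetical helper names, and the `alwayson_scripts` layout is an assumption to verify against your install.

```python
import base64


def reference_unit(original_png: bytes, weight: float = 1.0,
                   start: float = 0.0, end: float = 1.0) -> dict:
    """Hypothetical helper: a ControlNet Reference unit that copies the original image's style."""
    return {
        "enabled": True,
        "module": "reference_only",  # Reference preprocessor name in sd-webui-controlnet
        "model": "None",  # Reference works without a ControlNet model
        "image": base64.b64encode(original_png).decode("ascii"),
        "weight": weight,
        "guidance_start": start,  # fraction of sampling where the unit switches on
        "guidance_end": end,  # fraction of sampling where it switches off
    }


def attach_units(payload: dict, units: list) -> dict:
    """Add ControlNet units to an img2img request body (assumed API layout)."""
    payload.setdefault("alwayson_scripts", {})["controlnet"] = {"args": list(units)}
    return payload
```

For the workflow in this article, the list passed to `attach_units` would hold both the lineart unit and the Reference unit, so each generation is guided by shape and by style at the same time.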

The final result.

The hat is gone.

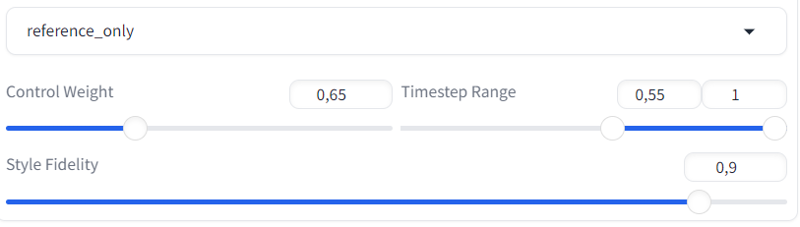

ControlNet Reference can have a very strong effect. At high strength it may lead to results like this:

In this case, lower the strength of the ControlNet unit or move the Timestep Range closer to the end.
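To see what moving the Timestep Range does, here is a simplified model of how a start/end range maps to sampling steps: the unit is treated as active on step `i` of `n` when `start <= i / n <= end`. This mirrors the idea behind the range sliders, but the exact rounding in Forge may differ; `active_steps` is a hypothetical helper.

```python
def active_steps(total_steps: int, start: float, end: float) -> list[int]:
    """Return the 0-based sampling steps on which a ControlNet unit would be active.

    Simplified model: step i counts as progress i / total_steps through sampling.
    """
    if not 0.0 <= start <= end <= 1.0:
        raise ValueError("expected 0 <= start <= end <= 1")
    return [i for i in range(total_steps) if start <= i / total_steps <= end]


full = active_steps(30, 0.0, 1.0)
late = active_steps(30, 0.5, 1.0)
print(len(full), len(late), late[0])  # 30 15 15
```

With 30 steps, starting the unit at 0.5 leaves it active only for the last 15 steps. Roughly speaking, the early steps settle the overall composition and the later ones refine detail, so Reference then has less influence on the overall composition while still shaping textures.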

If you have any questions, ask them in the comments.