Quick Links

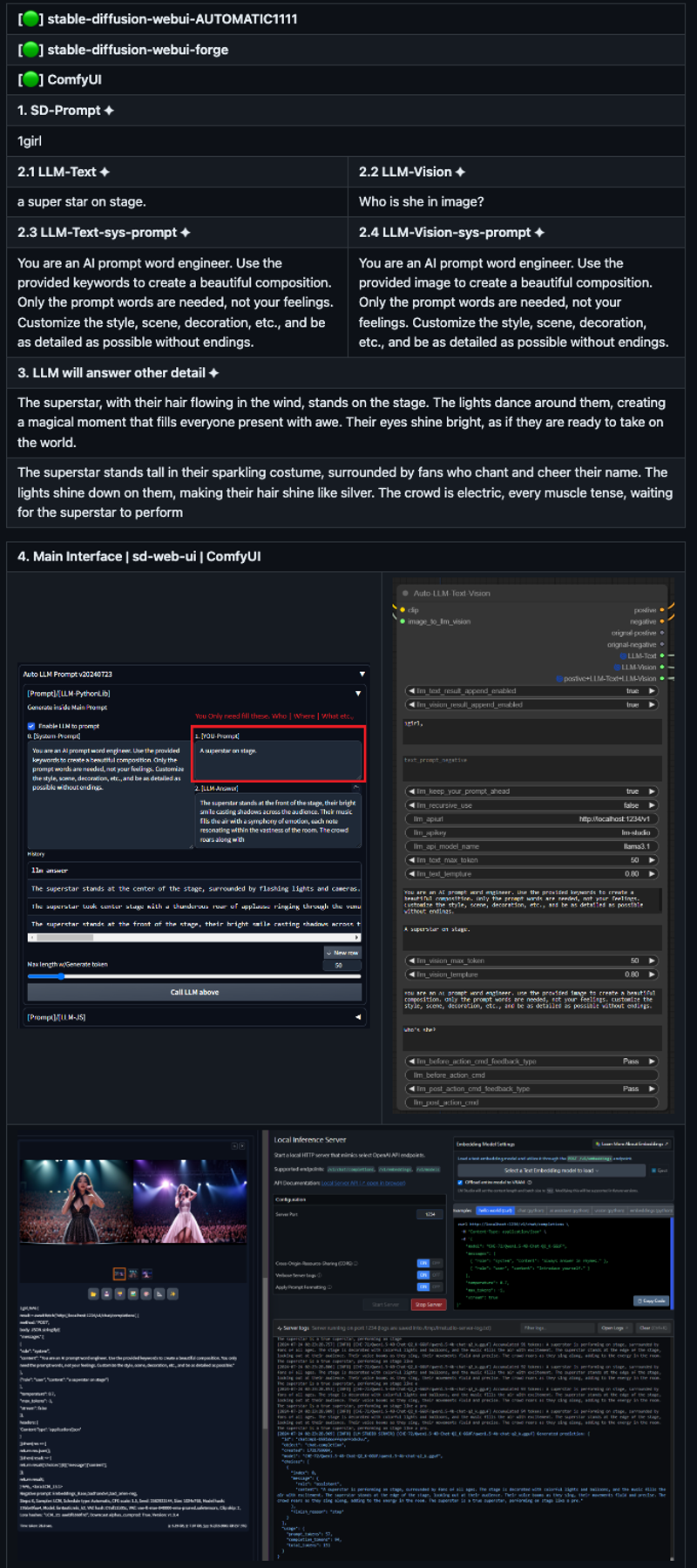

Auto prompt by LLM and LLM-Vision (trigger more of the details hidden inside the model)

Auto message to your mobile (LINE | Telegram | Discord)

SD-WEB-UI | ComfyUI | decadetw-Auto-Prompt-LLM-Vision

Motivation💡

Call LLM: auto prompt for batch image generation

Call LLM-Vision: auto prompt for batch image generation

Images get details that you never thought of before.

Prompt detail is important.

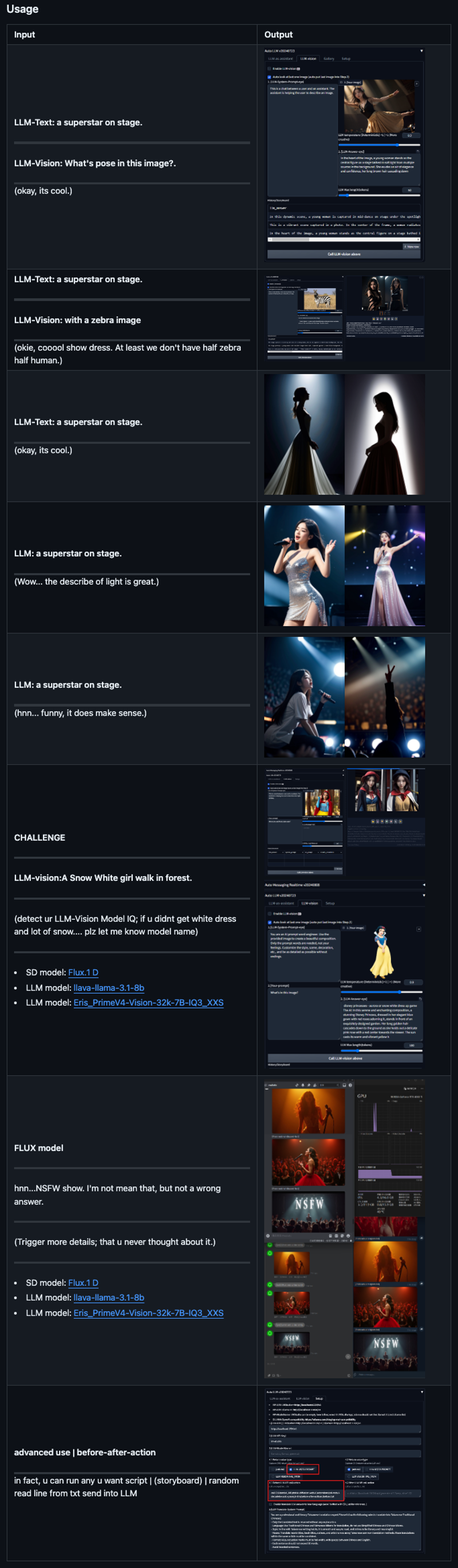

Usage

LLM-Text

Batch image generation with LLM

a story

Use recursive prompts to tell a story through generated images

Using LLM

In generate-forever mode

For an example, see the red box in the figure.

Just tell the LLM who, when, or what.

The LLM will take care of the details.

In story-board mode (you can generate a series of images that follows a story through the LLM context)

It's like a comic book.

a superstar on stage

she is singing

people give her flower

a fashionable man is walking.
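The story-board idea above can be sketched as a loop that feeds each LLM answer back in as context for the next scene. This is only a rough sketch, not the extension's actual code: `ask_llm` is a hypothetical stand-in for whatever call reaches your LM Studio or ollama server, and the question wording and the `fake_llm` helper are assumptions made so the example runs offline.

```python
from typing import Callable, List


def storyboard_prompts(
    beats: List[str],
    ask_llm: Callable[[str], str],
    sd_prompt: str = "1girl,",
) -> List[str]:
    """Build one image prompt per story beat, carrying context forward.

    ask_llm is a hypothetical callable (text in, text out); swap in your
    own client for LM Studio or ollama.
    """
    prompts = []
    context = ""  # previous LLM answer, reused as story context
    for beat in beats:
        question = (
            f"Previous scene: {context}\n"
            f"Next scene: {beat}\n"
            "Describe the image."
        )
        answer = ask_llm(question).strip()
        # Short SD-Prompt first, LLM detail appended after it.
        prompts.append(f"{sd_prompt} {answer}")
        context = answer
    return prompts


def fake_llm(question: str) -> str:
    """Offline stand-in so the sketch runs without a model server."""
    return "detailed view of: " + question.splitlines()[1]


if __name__ == "__main__":
    story = ["a superstar on stage", "she is singing", "people give her flower"]
    for line in storyboard_prompts(story, fake_llm):
        print(line)
```

Each prompt keeps the short SD-Prompt in front and lets the LLM answer carry the scene detail, so a real model sees the previous scene when it writes the next one.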

LLM-Vision 👀

Batch image generation with LLM-Vision

let LLM-Vision see a magazine

see a series of images

see the last image to prompt the next image

make a series of images like a comic
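To let a vision model "see" the last image, the image is usually sent together with a text instruction. Below is a rough sketch of building such a request body in the OpenAI-style chat format that local servers such as LM Studio can accept. The model name `llava-phi-3-mini`, the PNG MIME type, and the function name are assumptions for illustration; check what your own server expects.

```python
import base64
import json


def build_vision_payload(
    image_bytes: bytes,
    instruction: str,
    model: str = "llava-phi-3-mini",  # assumed name; use the one your server lists
) -> dict:
    """Return an OpenAI-style chat payload with one text part and one image part."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": instruction},
                    {
                        "type": "image_url",
                        # Image is inlined as a base64 data URL (PNG assumed).
                        "image_url": {"url": f"data:image/png;base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes; in practice read the last generated image from disk.
    payload = build_vision_payload(b"abc", "Describe the next comic panel.")
    print(json.dumps(payload, indent=2))
```

For a comic-like series, the image generated in one round would be passed in as `image_bytes` for the next round.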

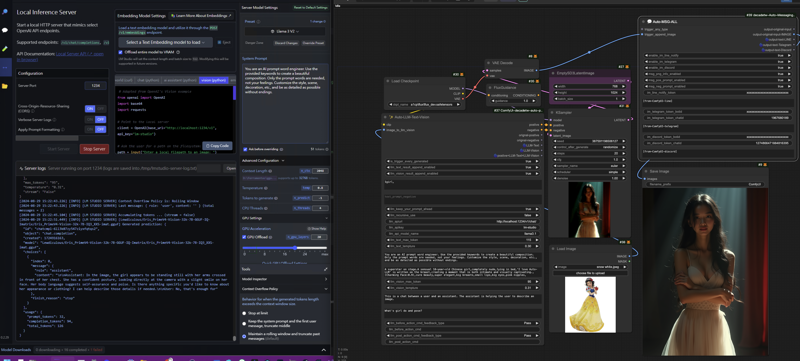

Before and After script

Supports loading a script or executing a command before and after the LLM call (Before-LLM and After-LLM)

JavaScript fetch POST method (install it yourself)

security issue, but you can consider the following

and the command-line arg --allow-code

ComfyUI Workflow preview

Flux + Auto-LLM + Auto-Msg

ComfyUI Manager | search keyword: auto

Usage Tips

tips1:

Leave only one keyword or fewer (those deep inside the CLIP encoding) in the SD-Prompt, and move the others into the LLM-Prompt.

SD-Prompt: 1girl, [xxx,] <--(the keywords you usually use give you the usual image)

LLM-Prompt: xxx, yyy, zzz, <--(move them here; trigger more detail than you ever thought of.)

tips2:

Leave only one keyword or fewer (those deep inside the CLIP encoding) in the SD-Prompt, and move the others into the LLM-Prompt.

SD-Prompt: 1girl,

LLM-Prompt: a superstar on stage. <--(tell a story)
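The split described in tips1 and tips2 can be tried out with a tiny helper: keep the first keyword for the SD-Prompt and hand the rest to the LLM-Prompt. `split_prompt` is a hypothetical name used only for this sketch; the extension itself has separate input boxes and does not require any such function.

```python
from typing import Tuple


def split_prompt(prompt: str, keep: int = 1) -> Tuple[str, str]:
    """Split a comma-separated prompt into (SD-Prompt, LLM-Prompt).

    The first `keep` keywords stay in the SD-Prompt; everything else
    moves to the LLM-Prompt so the LLM can expand it into detail.
    """
    keywords = [k.strip() for k in prompt.split(",") if k.strip()]
    sd_part = ", ".join(keywords[:keep])
    llm_part = ", ".join(keywords[keep:])
    return sd_part, llm_part


if __name__ == "__main__":
    sd, llm = split_prompt("1girl, xxx, yyy, zzz")
    print("SD-Prompt: ", sd)
    print("LLM-Prompt:", llm)
```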

tips3:

action script - Before

Pick a line, at random or in sequence, from a prompt text file and feed it into LLM-Text [read_random_line.bat]

Pick an image path, at random or in sequence, from a file and feed it into LLM-Vision
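A Before script of this kind can be very small. The sketch below shows one way to pick a line at random or in sequence from a text file. It is not the contents of read_random_line.bat; the function names and the wrap-around behaviour for sequential picks are assumptions for illustration.

```python
import random
from pathlib import Path
from typing import List, Optional


def _load_lines(path: str) -> List[str]:
    """Read non-empty, stripped lines from a UTF-8 text file."""
    text = Path(path).read_text(encoding="utf-8")
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        raise ValueError(f"no usable lines in {path}")
    return lines


def read_random_line(path: str, rng: Optional[random.Random] = None) -> str:
    """Return one random non-empty line (a prompt or an image path)."""
    rng = rng or random.Random()
    return rng.choice(_load_lines(path))


def read_series_line(path: str, index: int) -> str:
    """Return lines in order; the index wraps around at the end of the file."""
    lines = _load_lines(path)
    return lines[index % len(lines)]
```

The same helpers work for a file of image paths: the returned line would then be handed to LLM-Vision instead of LLM-Text.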

action script - After

You can call any command you want.

e.g. release LLM VRAM after each call: "curl http://localhost:11434/api/generate -d '{"model": "llama2", "keep_alive": 0}'" @Pdonor

e.g. blah blah. Interact with anything.
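The curl example above can also be written as a small Python After script. This is a sketch that mirrors the same request (same URL, same JSON body); the function names are made up for illustration, and it assumes ollama is listening on its default local port as in the curl command.

```python
import json
import urllib.request


def build_unload_request(
    model: str = "llama2",
    host: str = "http://localhost:11434",
) -> urllib.request.Request:
    """Build the POST request that asks ollama to unload a model (keep_alive = 0)."""
    body = json.dumps({"model": model, "keep_alive": 0}).encode("utf-8")
    return urllib.request.Request(
        f"{host}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def unload_model(model: str = "llama2", timeout: float = 10.0) -> int:
    """Send the request and return the HTTP status code (needs a running server)."""
    with urllib.request.urlopen(build_unload_request(model), timeout=timeout) as resp:
        return resp.status


if __name__ == "__main__":
    print(unload_model())
```

Replace `llama2` with the model name you actually loaded, otherwise the call will not free the VRAM you expect.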

tipsX: Enjoy it, let it inspire your ideas, and tell everybody how you use it.

Installation

You need to install LM Studio or ollama first.

Pick one language model from the list below

text-based (small, ~2G)

text & vision based (a bit bigger, ~8G)

Start web-ui or ComfyUI and install the extension or node

stable-diffusion-webui | stable-diffusion-webui-forge:

go to Extensions -> Available [official] or Install from URL

ComfyUI: install the node using Manager

Manager -> Custom Nodes Manager -> Search keyword: auto

Open your favorite UI

Let's interact with the LLM. Go~

Trigger more detail with the LLM

Suggested software

https://lmstudio.ai/ (win, mac, linux)

https://ollama.com/ (win[beta], mac, linux)

Suggested LLM models

LLM-text (normal, chat, assistant)

4B VRAM<2G

CHE-72/Qwen1.5-4B-Chat-Q2_K-GGUF/qwen1.5-4b-chat-q2_k.gguf

7B VRAM<8G

ccpl17/Llama-3-Taiwan-8B-Instruct-GGUF/Llama-3-Taiwan-8B-Instruct.Q2_K.gguf

Lewdiculous/L3-8B-Stheno-v3.2-GGUF-IQ-Imatrix/L3-8B-Stheno-v3.2-IQ3_XXS-imat.gguf

Google-Gemma

bartowski/gemma-2-9b-it-GGUF/gemma-2-9b-it-IQ2_M.gguf

small and good for SD-Prompt

LLM-vision 👀 (works with SDXL; VRAM >= 8G is better)

https://huggingface.co/xtuner/llava-phi-3-mini-gguf

llava-phi-3-mini-mmproj-f16.gguf (600MB,vision adapter)

⭐⭐⭐llava-phi-3-mini-f16.gguf (7G, main model)

https://huggingface.co/FiditeNemini/Llama-3.1-Unhinged-Vision-8B-GGUF

llava-llama-3.1-8b-mmproj-f16.gguf

⭐⭐⭐Llama-3.1-Unhinged-Vision-8B-Q8.0.gguf

quantization_options = ["Q4_K_M", "Q4_K_S", "IQ4_XS", "Q5_K_M", "Q5_K_S","Q6_K", "Q8_0", "IQ3_M", "IQ3_S", "IQ3_XXS"]

⭐⭐⭐⭐⭐ for low VRAM, super small: IQ3_XXS (2.83G)

In fact, it is good enough for everyday use.

Javascript!

security issue, but you can consider the following.

and the command-line arg --allow-code

Buy me a Coca cola ☕

https://buymeacoffee.com/xxoooxx

Colophon

Made for fun. I hope it brings you great joy, and perfect hair forever. Contact me with questions and comments, but not threats, please. And feel free to contribute! Pull requests and ideas in Discussions or Issues will be taken quite seriously! --- https://decade.tw

![[ext|node] Using LLM trigger more detail, that u never thought.](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/9947affb-f956-4fa0-a2da-a3db73ea1b88/width=1320/civi_article_llm.jpeg)