I want to share the results of training a Flux LoRA on photos of a person (me). If you are not new to training Flux on real people, you probably won't learn anything new. But if you are planning to train your first Flux LoRA and are afraid of wasting time or money, this article could come in handy.

Today I am gonna show how different caption styles can affect your training results. All models were trained with the Civitai trainer.

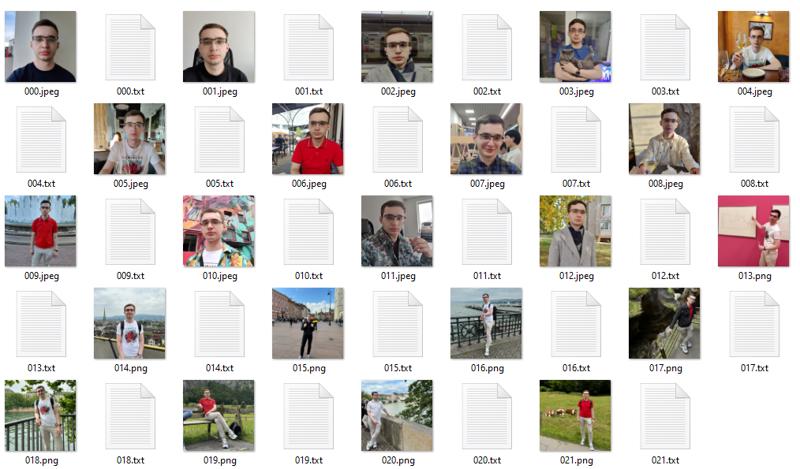

Dataset info

For both versions of the model I used the same collection of 22 photos, cropped to 1024x1024 resolution.

5 selfies

10 full body photos in various poses

And 7 "half-body" photos, mostly sitting
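The article does not say how the 1024x1024 crops were made. As a minimal sketch, assuming a plain center crop (my assumption, not necessarily what was done here), the crop box for a photo of any size could be computed like this. The function name `center_crop_box` is hypothetical.

```python
def center_crop_box(width: int, height: int) -> tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


# Example: a 3000x4000 portrait photo keeps its full width
# and loses 500 px at the top and at the bottom.
print(center_crop_box(3000, 4000))  # (0, 500, 3000, 3500)
```

The resulting box can then be handed to an image library for cropping and resizing to 1024x1024. For full body photos a center crop can cut off the head or feet, so the box may need to be shifted by hand.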

Here is a full breakdown of the dataset:

And here are some photos to use as a ground truth:

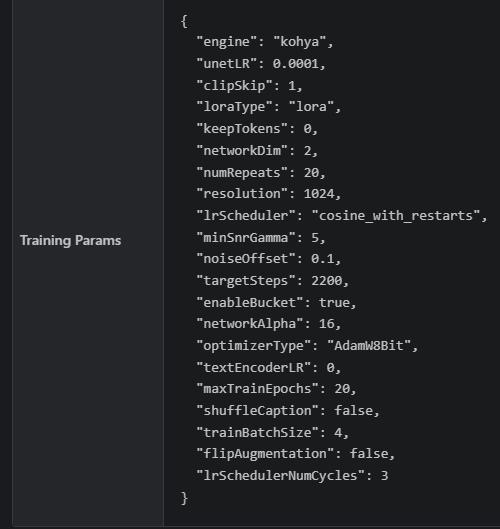

Training parameters

For both caption versions I used exactly the same training parameters and the same number of steps. All models, as mentioned earlier, were trained with the Civitai on-site trainer.

Let's start with my first version of lora - Trigger word + class prompt only

Captions style

Inspired by other articles on civitai, I decided to save myself some time and use "trigger word + class word".

So, all images were captioned like this:

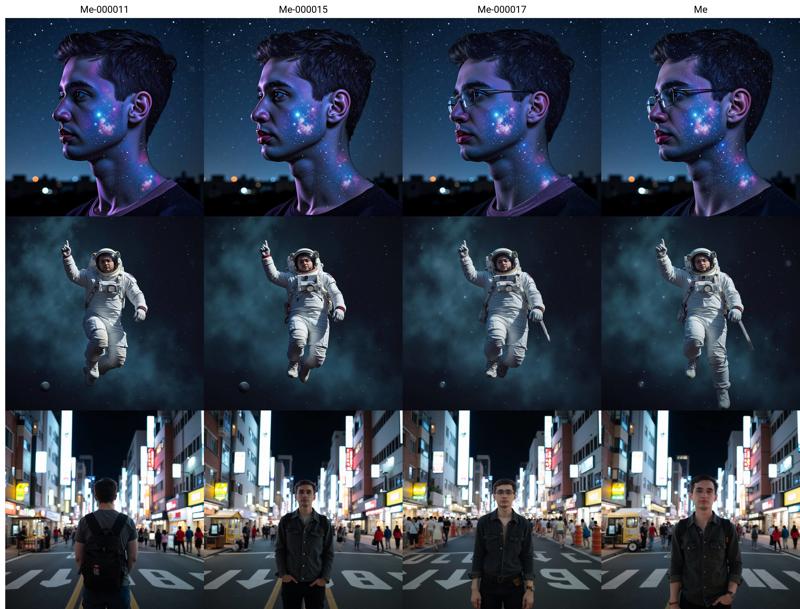

ohwx man

Results for Trigger word + class prompt

All images were generated in ComfyUI using the Q8 Dev model and the Q8 T5 text encoder (I only have 8 GB of VRAM and 16 GB of RAM), with Flux guidance of 3.5 and CFG 1; no negative-prompt hacks were used. Sampler: Euler with the Beta scheduler, 20 steps. At first I decided to prompt only T5, but along the way I discovered that including just the trigger word in CLIP increased likeness. I was already in the middle of the process, so about half of the XY plots were generated with T5-only prompting.
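The two prompting modes described above (T5 only, versus T5 plus just the trigger word in CLIP) can be laid out side by side so that every test prompt is run both ways. This is only a sketch: `PromptPair`, `build_pairs` and the field names `clip_l` and `t5xxl` are hypothetical names for the two text inputs, not something taken from the workflow used in this article.

```python
from dataclasses import dataclass

TRIGGER = "ohwx man"  # trigger word + class word used in the captions


@dataclass(frozen=True)
class PromptPair:
    clip_l: str  # text sent to the CLIP encoder
    t5xxl: str   # text sent to the T5 encoder


def build_pairs(prompt: str) -> dict[str, PromptPair]:
    """Return both test variants for a single prompt."""
    return {
        # Variant 1: CLIP input left empty, full prompt goes to T5 only.
        "t5_only": PromptPair(clip_l="", t5xxl=prompt),
        # Variant 2: same T5 prompt, but CLIP receives just the trigger word.
        "t5_plus_trigger_in_clip": PromptPair(clip_l=TRIGGER, t5xxl=prompt),
    }


pairs = build_pairs("lineart style portrait of ohwx man")
for name, pair in pairs.items():
    print(f"{name}: clip_l={pair.clip_l!r} t5xxl={pair.t5xxl!r}")
```

Running both variants with the same seed makes it easier to tell whether a change in likeness comes from the CLIP input or from random variation.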

Notes

What surprised me most is that, despite my wearing glasses in all 22 photos and not mentioning them in the captions, the models repeatedly generated images of me without glasses while preserving a high level of likeness (perhaps undertrained?).

At the same time, it learned pretty well that I like wearing a watch on my right hand, even though it is visible in only 9 photos. It also constantly tried to generate pictures of me wearing my backpack, although it appears in only 6 of the 22 photos in the dataset.
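To put the counts from these notes in perspective, here is a small sketch that turns them into dataset shares. The counts come from the article; the names `appearances` and `share` are just illustrative.

```python
DATASET_SIZE = 22

# Number of photos in which each item is visible (from the notes above).
appearances = {"glasses": 22, "watch": 9, "backpack": 6}


def share(count: int, total: int = DATASET_SIZE) -> float:
    """Fraction of the dataset in which an item appears."""
    return count / total


for item, count in appearances.items():
    print(f"{item}: {count}/{DATASET_SIZE} = {share(count):.0%}")
# glasses: 22/22 = 100%
# watch: 9/22 = 41%
# backpack: 6/22 = 27%
```

So the item present in every photo was the one the model dropped most easily, while items present in well under half of the photos kept showing up.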

You absolutely don't need to specify clothes in your captions, except maybe if you are training for more than 2000 steps or on more than just a face. All models change clothes very well. The only problem I saw was a spacesuit with boots that looked a bit like sneakers, and even that was not very noticeable.

Training on photos definitely toned down that plastic feel that is common for images of people generated with Flux.

Training only on good photos boosted my self-esteem. Flux does its magic and tries to generate only pretty images, even with an imperfect dataset. Very often the generated images seemed to portray me as prettier than I actually look in real life. But maybe it is just me.

Reducing Flux guidance helps with stylized images but also reduces likeness (more testing needed).

As expected, the model is struggling with stylized images, but not as much as I expected.

Here are the results for "lineart style portrait of ohwx man"

With this prompt it sometimes (about 2 of 5 images) tries to generate colorized images, which we normally don't expect from line art. More often I get images that look like stylized black and white photos (middle). Proper line art images are possible but rarer, about 1 in 5 images at best.

BUT that doesn't mean that we can't generate proper line art style portraits. Here are examples with an adjusted prompt.

I should add that the colorized results are probably due to the incorrect spelling of "lineart".

You need to use the CLIP encoder when testing your LoRAs.

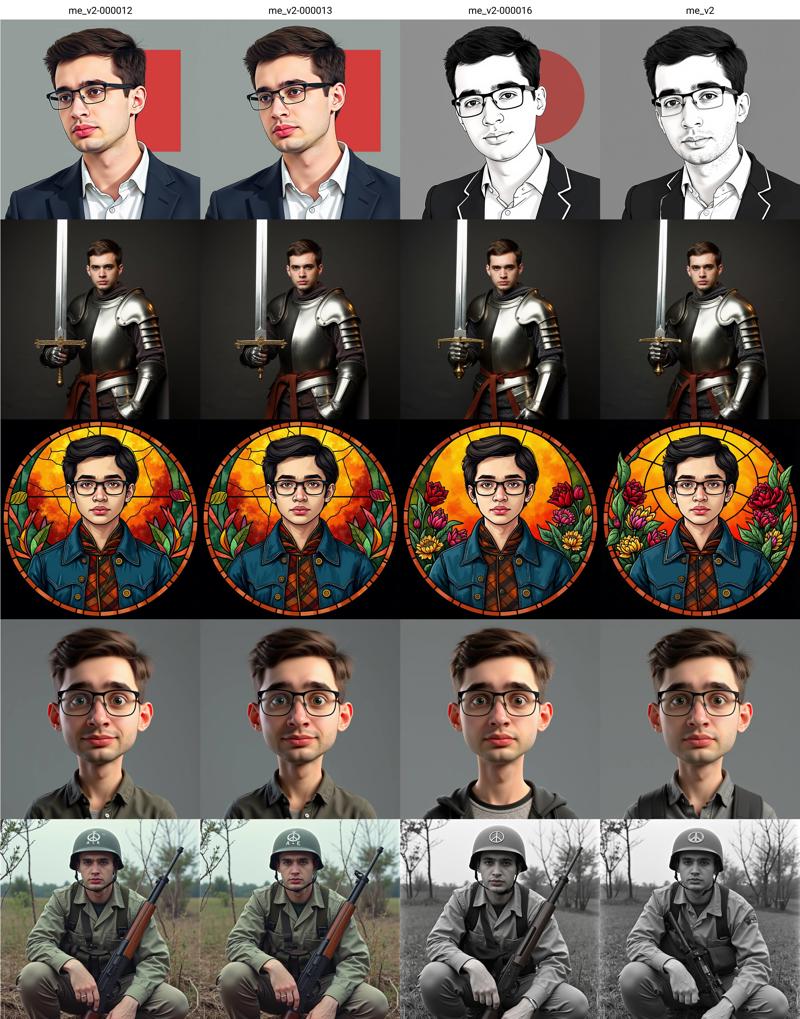

Second attempt - Short description + trigger word

Given the results of my first training I decided to make another version of the model. Key points that I intended to improve:

Stylized images, like the line art portraits shown earlier.

Fewer images with backpacks and wristwatches.

Captions style V2

For v2 I specify that each image is a photo and whether I'm wearing a watch or a backpack. I also decided to add information about the pose.

Here is a sample of what I got. All the captions were written by hand.

selfie of ohwx man

photo of ohwx man sitting in the restaurant

photo of ohwx man standing wearing watches and holding a cat

selfie of ohwx man standing before colorful mural

photo of ohwx man smilling, sitting in the restaurant

photo of ohwx man standing wearing backpack

topdown photo of ohwx man wearing backpack and smiling

photo of ohwx man standing wearing watches and backpack, smiling

Training parameters were the same as for v1.
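The hand-written captions above could also be stored as text files next to the images. This is a minimal sketch, assuming the trainer accepts a same-named `.txt` file per image (a common convention among LoRA trainers, but an assumption here). The file names and the helper `write_captions` are hypothetical; the caption texts are taken from the samples above.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".webp"}

# Hypothetical file names mapped to v2-style captions.
V2_CAPTIONS = {
    "img_01.jpg": "selfie of ohwx man",
    "img_02.jpg": "photo of ohwx man sitting in the restaurant",
    "img_03.jpg": "photo of ohwx man standing wearing backpack",
}


def write_captions(dataset_dir: Path, captions: dict[str, str],
                   default: str = "ohwx man") -> int:
    """Write a same-named .txt caption next to every image.

    Images missing from `captions` get `default` (the v1 style).
    Returns the number of caption files written.
    """
    written = 0
    for image in sorted(dataset_dir.iterdir()):
        if image.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # skip existing captions and other non-image files
        caption = captions.get(image.name, default)
        image.with_suffix(".txt").write_text(caption + "\n", encoding="utf-8")
        written += 1
    return written


# Usage (the directory name is a placeholder):
# write_captions(Path("dataset"), V2_CAPTIONS)   # v2 captions
# write_captions(Path("dataset"), {})            # v1: "ohwx man" everywhere
```

Keeping both caption styles in one script makes it easy to regenerate the dataset for each version without touching the images.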

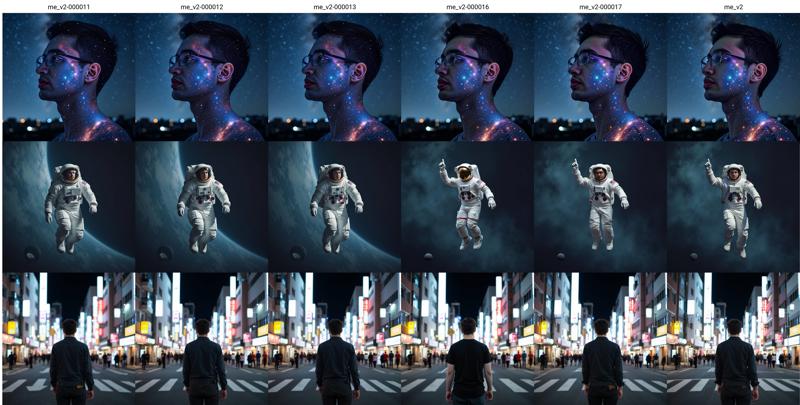

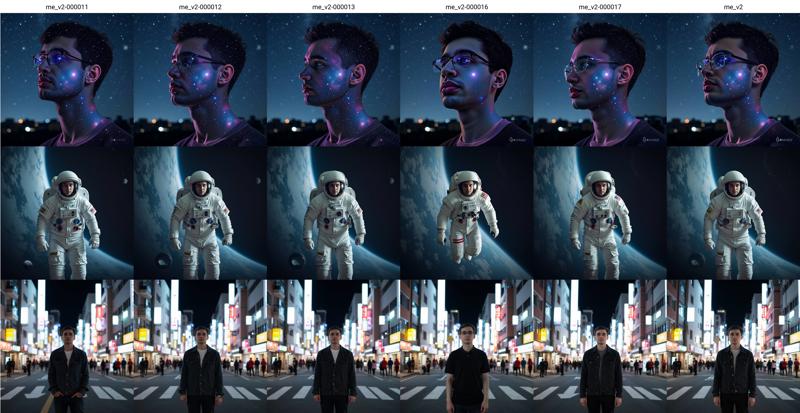

Results for Short description + trigger word

Well, I would like to write that the results improved, but in reality they are... mixed.

All XY plots were generated with the same seed as in v1.

Notes

Stylized images definitely improved, that's a plus.

But at the same time likeness is reduced, especially on stylized images. You can see it in the spacesuit generations: model me_v2-000016 (1760 steps) completely missed the point of "ohwx man". The same goes for the "city" prompt. I definitely did not expect photos from behind, though I am guessing that does look like my back. I gave some pictures to people who know how I look in real life for comparison. For the swordsman they chose the v1 results (in terms of likeness), but for "soldier" they preferred the v2 results, and I probably agree with them. I don't think I can choose a definitive winner here.

All prompts for v2 plots were the same as for v1 for comparison purposes. Let's see if we can improve results by staying closer to the new captions:

stylized photo of ohwx man portrait made of stars and galaxies on night sky

photo of ohwx man in spacesuit floating in space

Photo of ohwx man standing in the middle of the busy street in Japan modern city

It definitely helped with the second and third prompts (but respondents say the spaceman still doesn't look anything like me). For the first prompt it reduced both likeness and style.

That leads us to the most important note. This version of the model is probably undertrained. You can see it on the "lineart" and "soldier" prompts: the style kept getting better with each new epoch.

At the same time, the "portrait made of stars" prompt produces better results at lower step counts (1,210-1,320) than in the last epochs. So I'm not entirely sure here, but I would probably still train for at least 1000-2000 more steps (if I had the Buzz for a new training run).
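The step counts mentioned in these notes can be related to epochs with a bit of arithmetic. This sketch assumes that the "000016" suffix in me_v2-000016 is the epoch number, which the article does not state explicitly; the helper names are hypothetical.

```python
import math

CHECKPOINT_STEPS = 1760  # from the article: me_v2-000016 (1760 steps)
CHECKPOINT_EPOCH = 16    # assumption: the "000016" suffix is the epoch number

steps_per_epoch = CHECKPOINT_STEPS // CHECKPOINT_EPOCH


def epoch_at(steps: int) -> int:
    """Epoch whose checkpoint corresponds to the given step count."""
    return steps // steps_per_epoch


def extra_epochs(extra_steps: int) -> int:
    """Whole epochs needed to cover at least `extra_steps` more steps."""
    return math.ceil(extra_steps / steps_per_epoch)


print(steps_per_epoch)                         # 110
print(epoch_at(1210), epoch_at(1320))          # 11 12
print(extra_epochs(1000), extra_epochs(2000))  # 10 19
```

Under that assumption, the better "portrait made of stars" results come from epochs 11 and 12, and another 1000-2000 steps would mean roughly 10 to 19 more epochs. 110 steps per epoch would be consistent with 22 images at 5 repeats and batch size 1, though the repeat and batch settings are not given here.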

In terms of reducing backpacks and wristwatches, it seems to have helped. You can still see backpacks on the "soldier" prompt, but I'm guessing it's fairly common for soldiers to wear backpacks. There is also one backpack on the "3d render" prompt.

Final note

The trigger word + class prompt approach is a completely legit way to train a model, and I would probably prefer it over minimal captions. It is capable of producing stylized images, and likeness is also good. You can probably get a better result if you use negative prompting. I didn't test it because it would take around 4 minutes to generate 1 image on my laptop. So, not a solution for everyone.

Perhaps the best prompting style would be

photo of ohwx man

I would like to see whether more steps would improve the v2 models, but I'm out of Buzz and it would take around 6k. So please consider supporting this article if you enjoyed it.