I have completed a total of 104 different LoRA trainings and compared each of them to find the very best hyperparameters and workflow for FLUX LoRA training using the Kohya GUI training script.

You can see the checkpoint names of all completed experiments, along with their repository links, in the following public post: https://www.patreon.com/posts/110838414

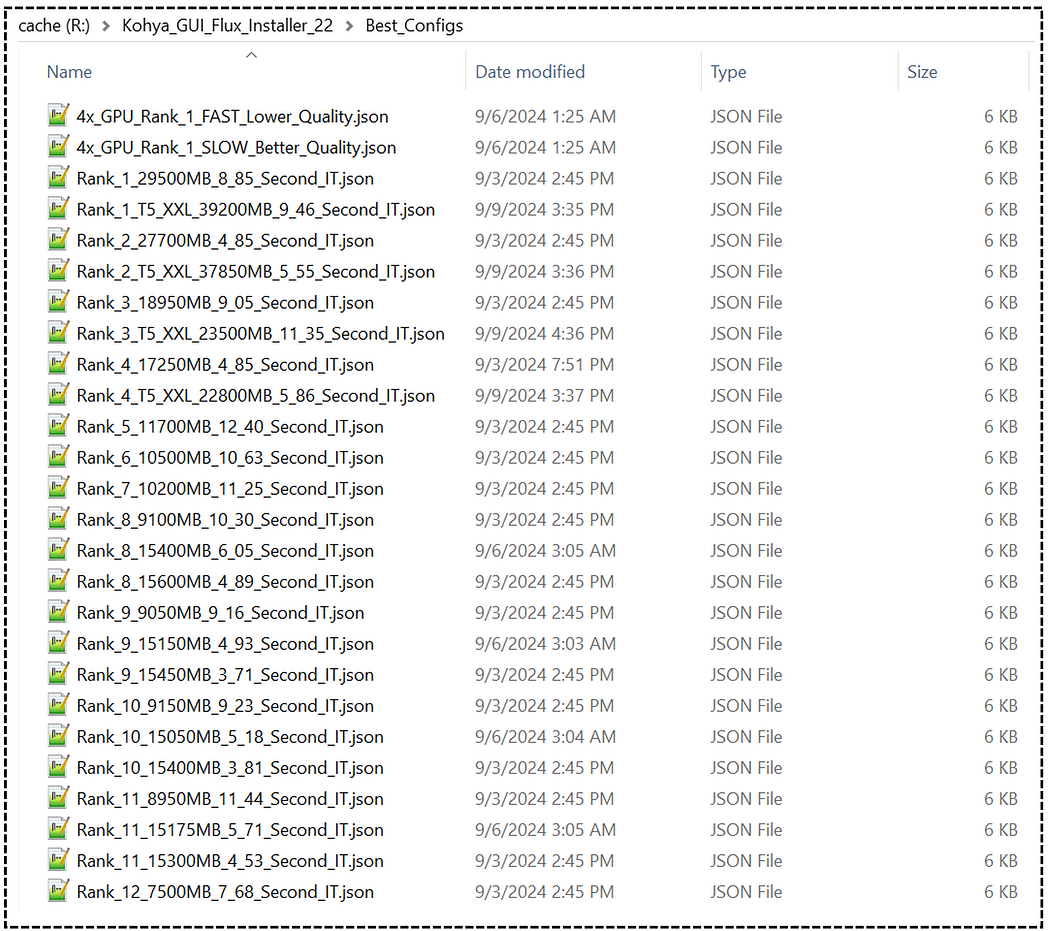

After completing all these FLUX LoRA trainings with Adafactor, the most VRAM-efficient and performant optimizer, I arrived at the following ranked, ready-to-use configurations.

You can download all the configurations, all research data, installers and instructions at the following link: https://www.patreon.com/posts/110879657

Tutorials

I have also prepared 2 full tutorials. The first tutorial covers how to train and use the best FLUX LoRA locally on your Windows computer: https://youtu.be/nySGu12Y05k

This is the main tutorial, and you should watch it without skipping to learn everything. It has 74 chapters in total and manually written English captions. It is a perfect resource for going from zero to hero in FLUX LoRA training.

FLUX LoRA Training Simplified: From Zero to Hero with Kohya SS GUI (8GB GPU, Windows) Tutorial Guide

The second tutorial I have prepared covers how to train FLUX LoRA on the cloud. This tutorial is extremely important for several reasons. If you don't have a powerful GPU, you can rent a very powerful and very cheap GPU on Massed Compute or RunPod. I prefer Massed Compute since it is faster and cheaper with our special coupon SECourses. Another reason is that in this tutorial video, I have shown in full detail how to train on a multi-GPU setup to scale your training speed. Moreover, I have shown how to upload your checkpoints and files to Hugging Face ultra fast, so you can save and transfer them for free. Even so, watch the Windows tutorial above first so that you can follow this cloud tutorial: https://youtu.be/-uhL2nW7Ddw

Blazing Fast & Ultra Cheap FLUX LoRA Training on Massed Compute & RunPod Tutorial — No GPU Required!

Example Generated Images

These images were generated in SwarmUI with a LoRA trained using the configs shared above on my low-quality 15-image dataset. Everything is shown in the tutorial videos for you to follow. I then used SUPIR, the very best upscaler, to upscale them 2x with default parameters, except that I enabled face enhancement: https://youtu.be/OYxVEvDf284

Complete Guide to SUPIR Enhancing and Upscaling Images Like in Sci-Fi Movies on Your PC

All the prompts used to generate the images below are shared in the following public link:

https://gist.github.com/FurkanGozukara/3e834b77a9d8d6552f46d36bc10fe92a

Revolutionizing AI Image Generation: A Deep Dive into FLUX LoRA Training

In a groundbreaking development in the world of artificial intelligence and image generation, a comprehensive tutorial has emerged, guiding users through the intricate process of training LoRA (Low-Rank Adaptation) on FLUX, the latest state-of-the-art text-to-image generative AI model. This tutorial, presented by an unnamed expert in the field, promises to unlock new possibilities for both novice and experienced AI enthusiasts.

The presenter, who has dedicated an entire week to intensive research, has conducted an impressive 72 full training sessions, with more underway. This level of dedication underscores the complexity and potential of FLUX LoRA training. The tutorial aims to democratize this advanced technology, making it accessible to a wider audience.

One of the most significant aspects of this tutorial is its inclusivity in terms of hardware requirements. The presenter has developed a range of unique training configurations that cater to GPUs with varying VRAM capacities, from as little as 8GB to high-end 48GB models. This approach ensures that users with different hardware setups can participate in and benefit from FLUX LoRA training.

The tutorial utilizes Kohya GUI, a user-friendly interface built on the acclaimed Kohya training scripts. This graphical user interface simplifies the installation, setup, and training process, making it accessible even to those who might be intimidated by command-line interfaces. While the demonstration is conducted on a local Windows machine, the presenter assures that the process is identical for cloud-based services, broadening the tutorial's applicability.

The comprehensive nature of the tutorial is evident in its coverage of topics. It spans from basic concepts to expert settings, ensuring that even complete beginners can fully train and utilize a FLUX LoRA model. The tutorial is thoughtfully organized into chapters and includes manually written English captions, enhancing its accessibility and ease of use.

Beyond the training process, the tutorial also delves into practical applications. It demonstrates how to use the generated LoRAs within SwarmUI and how to perform grid generation to identify the best training checkpoint. This holistic approach ensures that users not only learn how to train models but also how to effectively utilize them in real-world scenarios.

In a nod to the diverse needs of the AI community, the tutorial concludes with a demonstration of how to train Stable Diffusion 1.5 and SDXL models using the latest Kohya GUI interface. This addition broadens the tutorial's scope, making it a valuable resource for a wide range of AI image generation tasks.

The presenter has gone above and beyond by preparing a detailed written post to accompany the video tutorial. This post contains all necessary instructions, links, and guides, serving as a comprehensive reference for users. Importantly, the post is designed to be a living document, with the presenter committing to update it as new information and research findings become available.

A key component of the FLUX LoRA training process is the preparation of the training dataset. The tutorial emphasizes the importance of dataset quality, encouraging users to include a variety of poses, expressions, clothing, and backgrounds in their training images. The presenter acknowledges that the current dataset used in the tutorial has limitations and is working on developing a more diverse and effective training dataset for future demonstrations.

The tutorial also addresses the technical aspects of the training process, including the selection of appropriate models, setting up the training environment, and configuring various parameters. It provides detailed instructions on how to use the Kohya GUI, including how to set up the training data, configure the model, and initiate the training process.

One of the most valuable aspects of the tutorial is its emphasis on experimentation and comparison. The presenter demonstrates how to generate grids of images using different checkpoints, allowing users to visually compare the results and select the best-performing model. This approach encourages a scientific and iterative process in model training and selection.
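The core idea of a checkpoint comparison grid is simple: render every prompt with every checkpoint using the same seed, so that the only thing changing across a row is the checkpoint. The sketch below only builds that job layout; it does not generate images. It assumes, hypothetically, that checkpoints are named `<name>-<epoch>.safetensors` with an un-numbered final save, and the `ohwx` token in the demo is a placeholder.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical naming pattern "<name>-<epoch>.safetensors"; adjust it to match your own files.
EPOCH_RE = re.compile(r"-(\d+)\.safetensors$")


@dataclass(frozen=True)
class GridCell:
    prompt: str
    checkpoint: str
    seed: int


def epoch_of(filename: str) -> Optional[int]:
    """Return the epoch number encoded in a checkpoint filename, or None if there is none."""
    match = EPOCH_RE.search(filename)
    return int(match.group(1)) if match else None


def sort_checkpoints(filenames: list[str]) -> list[str]:
    """Epoch-numbered checkpoints in ascending order, un-numbered ones (e.g. a final save) last."""
    def key(name: str) -> tuple[bool, int, str]:
        epoch = epoch_of(name)
        return (epoch is None, epoch if epoch is not None else 0, name)
    return sorted(filenames, key=key)


def build_grid(checkpoints: list[str], prompts: list[str], seed: int = 42) -> list[list[GridCell]]:
    """One row per prompt, one column per checkpoint; a fixed seed keeps the cells comparable."""
    ordered = sort_checkpoints(checkpoints)
    return [[GridCell(prompt, ckpt, seed) for ckpt in ordered] for prompt in prompts]


if __name__ == "__main__":
    ckpts = ["ohwx-000050.safetensors", "ohwx.safetensors", "ohwx-000010.safetensors"]
    prompts = ["photo of ohwx man wearing a suit", "photo of ohwx man on a beach"]
    for row in build_grid(ckpts, prompts):
        print(row[0].prompt, "->", [cell.checkpoint for cell in row])
```

Reading down a column then shows how one checkpoint handles different prompts, and reading across a row shows how training progressed for a single prompt, which is the comparison SwarmUI's grid generation performs interactively.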

The tutorial doesn't shy away from addressing the computational demands of AI model training. It provides tips on optimizing VRAM usage and suggests cloud-based solutions for those needing more computational power. The presenter shares personal experiences with using cloud computing services like Massed Compute, offering insights into how to scale up training processes for more extensive experiments.

In addition to the core tutorial content, the presenter has created a supportive ecosystem for learners. This includes a Discord channel with over 8,000 members, a GitHub repository with scripts and resources, and a subreddit for community discussions. The presenter's commitment to engaging with the community and providing ongoing support is evident in their active presence across these platforms.

The tutorial also touches on the rapid pace of development in the field of AI image generation. The presenter mentions ongoing research into new hyperparameters and features, promising to update the tutorial and associated resources as new findings emerge. This commitment to staying current ensures that the tutorial remains a valuable resource in a rapidly evolving field.

One of the most intriguing aspects of the tutorial is its exploration of the unique capabilities of the FLUX model. Unlike some other models, FLUX appears to have an internal system that behaves like a text encoder and automatic captioning system. This feature allows FLUX to understand and generate images based on concepts even without explicit prompts, showcasing the model's advanced understanding of visual and textual relationships.

The tutorial also delves into the technical intricacies of model training, discussing concepts such as epoch counts, batch sizes, and the use of regularization images. It provides detailed explanations of how these parameters affect the training process and the quality of the resulting models, offering users the knowledge they need to fine-tune their training processes.
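How epochs, repeats, batch size and regularization images interact is easiest to see as a rough step count. The sketch below follows the commonly used Kohya-style arithmetic under stated assumptions: each image is seen `repeats` times per epoch, enabling regularization images mixes in an equal number of regularization samples (doubling the samples per epoch), and aspect-ratio bucketing, which can add a few partial batches, is ignored. The 200-epoch figure in the example is hypothetical, not a recommended setting.

```python
import math


def total_training_steps(num_images: int, repeats: int, epochs: int,
                         batch_size: int, use_reg_images: bool = False) -> int:
    """Approximate optimizer steps for a Kohya-style LoRA run on a single GPU.

    Assumptions: every training image appears `repeats` times per epoch; with
    regularization images enabled, samples per epoch are doubled; bucketing is ignored.
    """
    if min(num_images, repeats, epochs, batch_size) < 1:
        raise ValueError("all counts must be >= 1")
    samples_per_epoch = num_images * repeats * (2 if use_reg_images else 1)
    steps_per_epoch = math.ceil(samples_per_epoch / batch_size)  # last partial batch still costs a step
    return steps_per_epoch * epochs


if __name__ == "__main__":
    # Hypothetical run on a 15-image dataset with 1 repeat and 200 epochs.
    print(total_training_steps(15, 1, 200, batch_size=1))                       # plain run
    print(total_training_steps(15, 1, 200, batch_size=1, use_reg_images=True))  # with reg images
    print(total_training_steps(15, 1, 200, batch_size=2))                       # larger batch
```

The practical consequence is that doubling the batch size roughly halves the step count while enabling regularization images roughly doubles it, so the same epoch count can mean very different amounts of training.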

In conclusion, this comprehensive tutorial on FLUX LoRA training represents a significant step forward in democratizing advanced AI image generation techniques. By combining detailed technical instruction with practical demonstrations and ongoing community support, the presenter has created a valuable resource for anyone interested in pushing the boundaries of AI-generated imagery. As the field continues to evolve rapidly, resources like this tutorial will play a crucial role in keeping the AI community informed and empowered to explore new possibilities in generative AI.

Revolutionizing AI Image Generation: A Deep Dive into FLUX Training and Cloud-Based Implementations

In the ever-evolving landscape of artificial intelligence and image generation, a groundbreaking tutorial has emerged, promising to revolutionize the way we train and utilize FLUX models. This comprehensive guide, spanning over an hour, offers an in-depth look at how to harness the power of cloud computing services to train FLUX models efficiently and cost-effectively.

The tutorial, presented by an unnamed expert in the field, begins by addressing a common pain point for AI enthusiasts and professionals alike: the lack of powerful GPUs for training complex models. The solution? Leveraging cloud services such as Massed Compute and RunPod to access high-performance computing resources on-demand.

One of the most striking aspects of this tutorial is its focus on accessibility and affordability. The presenter demonstrates how users can train impressive FLUX models in under an hour for as little as $1.25 per hour using 4x GPU setups. This democratization of advanced AI training techniques opens up new possibilities for researchers, artists, and developers who may not have access to expensive hardware.

The tutorial is meticulously structured, covering both single and multi-GPU training scenarios. It begins with an introduction to Massed Compute, guiding viewers through the process of setting up an account, deploying a cloud machine, and connecting to it using the ThinLinc client. The presenter emphasizes the importance of proper configuration, demonstrating how to set up file synchronization and monitor GPU usage.
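Monitoring GPU usage on a rented machine can also be scripted. One generic approach, not necessarily what the tutorial uses, is to poll the CSV query mode of `nvidia-smi`. The minimal sketch below assumes the NVIDIA driver's `nvidia-smi` is on the PATH; the `GpuStatus` type and function names are illustrative.

```python
import subprocess
from dataclasses import dataclass

FIELDS = "index,name,memory.used,memory.total,utilization.gpu"


@dataclass
class GpuStatus:
    index: int
    name: str
    memory_used_mib: int
    memory_total_mib: int
    utilization_pct: int


def parse_nvidia_smi(csv_text: str) -> list[GpuStatus]:
    """Parse `--format=csv,noheader,nounits` output, one GPU per line."""
    gpus = []
    for line in csv_text.strip().splitlines():
        parts = [field.strip() for field in line.split(",")]
        # Index is first and the three numeric fields are last; rejoin the middle as the name.
        name = ", ".join(parts[1:-3])
        # Fields reported as "[N/A]" on some GPUs would raise ValueError here.
        used, total, util = (int(v) for v in parts[-3:])
        gpus.append(GpuStatus(int(parts[0]), name, used, total, util))
    return gpus


def query_gpus() -> list[GpuStatus]:
    """Run nvidia-smi once and return per-GPU memory and utilization."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_nvidia_smi(result.stdout)


if __name__ == "__main__":
    for gpu in query_gpus():
        print(f"GPU {gpu.index} ({gpu.name}): {gpu.memory_used_mib}/{gpu.memory_total_mib} MiB, "
              f"{gpu.utilization_pct}% busy")
```

On a multi-GPU machine, one idle GPU in this output during training is a quick sign that the run is not actually using all the hardware being paid for.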

A significant portion of the tutorial is dedicated to the installation and setup of various tools and models required for FLUX training. The presenter walks through the process of upgrading Kohya GUI, downloading necessary models, and preparing datasets. This attention to detail ensures that viewers can follow along regardless of their prior experience with these tools.

One of the most valuable aspects of the tutorial is its focus on practical, real-world application. The presenter doesn't just explain how to train models but also demonstrates how to use them effectively. This includes showcasing SwarmUI for fast image generation, grid generation for checkpoint comparison, and even the use of the Forge Web UI.

The tutorial also addresses the critical issue of model storage and sharing. The presenter introduces a novel approach to uploading and downloading checkpoints to and from Hugging Face, a popular platform for sharing machine learning models. Using specially crafted scripts, the presenter demonstrates how to upload 12GB of LoRA files to Hugging Face in just two minutes, a significant improvement over traditional methods.
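The presenter's own upload scripts are not reproduced in this article. As a minimal sketch of the general idea, assuming the `huggingface_hub` Python package is installed and you are already logged in, a folder of LoRA checkpoints can be pushed with `HfApi.upload_folder`. The repository name, the private setting and the `*.safetensors` filter are illustrative assumptions, and nothing here reproduces the transfer speed reported in the tutorial, which depends on the presenter's scripts and the machine's bandwidth.

```python
import tempfile
from pathlib import Path


def plan_upload(folder: str, pattern: str = "*.safetensors") -> tuple[list[str], int]:
    """Return the checkpoint filenames matching `pattern` and their total size in bytes."""
    files = sorted(p for p in Path(folder).glob(pattern) if p.is_file())
    return [p.name for p in files], sum(p.stat().st_size for p in files)


def upload_checkpoints(folder: str, repo_id: str, pattern: str = "*.safetensors") -> None:
    """Push matching files from `folder` to a private Hugging Face model repo."""
    # Imported lazily so plan_upload() works even without huggingface_hub installed.
    from huggingface_hub import HfApi

    api = HfApi()  # picks up the token saved by `huggingface-cli login` or the HF_TOKEN env var
    api.create_repo(repo_id=repo_id, repo_type="model", private=True, exist_ok=True)
    api.upload_folder(
        folder_path=folder,
        repo_id=repo_id,
        repo_type="model",
        allow_patterns=[pattern],
    )


if __name__ == "__main__":
    # Demo with dummy files; replace with your real output folder and "username/repo-name".
    with tempfile.TemporaryDirectory() as tmp:
        (Path(tmp) / "demo-000010.safetensors").write_bytes(b"\0" * 1024)
        (Path(tmp) / "demo.safetensors").write_bytes(b"\0" * 2048)
        names, size = plan_upload(tmp)
        print(f"{len(names)} files, {size / 1024**2:.3f} MiB: {names}")
```

Checking the plan before calling `upload_checkpoints` avoids accidentally pushing logs, samples or optimizer state alongside the LoRA files.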

Throughout the tutorial, the presenter consistently compares the performance and user experience between Massed Compute and RunPod, offering viewers a balanced perspective on the strengths and weaknesses of each platform. This comparative approach helps viewers make informed decisions about which service might best suit their needs.

One of the most exciting aspects of the tutorial is its exploration of multi-GPU training. The presenter demonstrates how to achieve near-linear speed increases when using multiple GPUs, a feat that was previously difficult to achieve without expensive specialized hardware. This breakthrough in training efficiency could have far-reaching implications for the field of AI image generation.
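"Near-linear" has a concrete meaning that can be checked. Under plain data parallelism, which is how Kohya's scripts typically spread work across GPUs via Hugging Face Accelerate (an assumption about the setup, not something this article states), each GPU processes its own batch every step. The sketch below shows how that shrinks the step count and how to compute scaling efficiency from your own timings; the 15-image, 200-epoch run and the timings in the demo are hypothetical.

```python
import math


def multi_gpu_plan(samples_per_epoch: int, epochs: int,
                   per_gpu_batch: int, num_gpus: int) -> tuple[int, int]:
    """Return (effective batch size, total optimizer steps) under plain data parallelism.

    Each GPU processes its own batch every step, so one step consumes
    per_gpu_batch * num_gpus samples and an epoch needs proportionally fewer steps.
    """
    if min(samples_per_epoch, epochs, per_gpu_batch, num_gpus) < 1:
        raise ValueError("all counts must be >= 1")
    effective_batch = per_gpu_batch * num_gpus
    steps = math.ceil(samples_per_epoch / effective_batch) * epochs
    return effective_batch, steps


def scaling_efficiency(single_gpu_seconds: float, multi_gpu_seconds: float, num_gpus: int) -> float:
    """Measured speed-up divided by GPU count: 1.0 is perfectly linear scaling."""
    return (single_gpu_seconds / multi_gpu_seconds) / num_gpus


if __name__ == "__main__":
    # Hypothetical run: 15 images x 1 repeat, 200 epochs, batch size 1 per GPU.
    for gpus in (1, 4):
        batch, steps = multi_gpu_plan(15, 200, 1, gpus)
        print(f"{gpus} GPU(s): effective batch {batch}, {steps} steps")
    # Hypothetical wall-clock timings, used only to show the calculation.
    print(f"efficiency: {scaling_efficiency(3600.0, 1000.0, 4):.2f}")
```

Because the effective batch grows with the GPU count, a configuration tuned on one GPU is not automatically equivalent on four; this is general data-parallel behavior rather than a finding from the tutorial.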

The tutorial doesn't shy away from addressing potential issues and troubleshooting. When encountering problems, such as slow download speeds or installation errors, the presenter walks through the process of identifying and resolving these issues. This real-time problem-solving adds an invaluable layer of practical knowledge to the tutorial.

Another noteworthy aspect of the tutorial is its forward-looking approach. The presenter hints at ongoing research into fine-tuning techniques and optimal parameters for training the CLIP Large (CLIP-L) text encoder, suggesting that even more impressive results may be achievable in the near future.

The tutorial also touches on the importance of model evaluation and selection. By demonstrating how to generate comparison grids and analyze the results, the presenter empowers viewers to make informed decisions about which checkpoints to use for their specific needs.

Throughout the presentation, there's a strong emphasis on the rapid pace of development in the field. The presenter frequently mentions updating scripts, configurations, and workflows to keep up with the latest advancements. This underscores the dynamic nature of AI research and the importance of staying current with new techniques and tools.

One of the most intriguing aspects of the tutorial is its exploration of the FLUX model itself. While the specifics of the model architecture are not covered, the presenter's excitement about its potential is palpable. The ability to train high-quality models quickly and affordably could democratize access to advanced AI image generation techniques, potentially leading to an explosion of creativity in fields ranging from digital art to scientific visualization.

The tutorial also highlights the growing ecosystem of tools and platforms supporting AI model training and deployment. From SwarmUI to Forge Web UI, from Hugging Face to custom Jupyter notebooks, the presenter demonstrates how these various tools can be integrated into a cohesive workflow for model training, evaluation, and deployment.

Perhaps one of the most valuable takeaways from the tutorial is the presenter's approach to problem-solving and continuous improvement. Throughout the presentation, there's a consistent message of experimentation, iteration, and refinement. This mindset is crucial in a field as rapidly evolving as AI, where today's best practices may be outdated tomorrow.

As the tutorial concludes, the presenter leaves viewers with a sense of anticipation for future developments. Hints at ongoing research into fine-tuning techniques and improvements to the FLUX model suggest that this tutorial is just the beginning of a longer journey into the frontiers of AI image generation.

In conclusion, this comprehensive tutorial represents a significant contribution to the field of AI image generation. By demystifying the process of training advanced models like FLUX and demonstrating how to leverage cloud computing resources effectively, the presenter has opened up new possibilities for researchers, artists, and developers around the world. As we look to the future, it's clear that the democratization of these powerful AI tools will continue to drive innovation and creativity in ways we can only begin to imagine.

The implications of this tutorial extend far beyond the realm of AI enthusiasts and professionals. As these tools become more accessible and affordable, we can expect to see their impact in fields as diverse as entertainment, education, scientific research, and beyond. The ability to generate high-quality, customized images quickly and affordably could revolutionize industries ranging from advertising to academic publishing.

Moreover, the tutorial's emphasis on cloud-based solutions highlights a broader trend in the tech industry towards more flexible, scalable computing resources. This shift away from reliance on local hardware could have far-reaching implications for how we approach complex computational tasks in the future.

As we reflect on the content of this tutorial, it's clear that we are standing on the cusp of a new era in AI-powered creativity. The barriers to entry for advanced AI techniques are falling rapidly, and with them, we can expect to see an explosion of innovation and experimentation in the coming years.

For those looking to stay at the forefront of this exciting field, this tutorial serves as an invaluable resource and a roadmap for future exploration. As the presenter notes, the journey doesn't end here – with ongoing research and development, we can expect even more impressive results in the near future.

In the end, this tutorial is more than just a guide to training FLUX models. It's a window into the rapidly evolving world of AI, a demonstration of the power of cloud computing, and a testament to the democratizing force of open-source tools and platforms. As we look to the future, it's clear that the possibilities are limited only by our imagination and our willingness to explore this new frontier of technology.