Cloning the Repo

Clone the specific branch for Flux:

git clone -b sd3 https://github.com/kohya-ss/sd-scripts.git

Navigate to the cloned directory:

cd sd-scripts

Create the environment:

python3 -m venv venv

The activation command depends on your shell (you must activate the environment every time before training):

For Bash:

source venv/bin/activate

For Zsh:

source venv/bin/activate

For Fish:

source venv/bin/activate.fish

For Csh or Tcsh:

source venv/bin/activate.csh

Note: the environment must stay active the whole time, so keep working in the same terminal window.
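Since every later command assumes the environment is active, a small guard can catch a forgotten activation in Bash or Zsh. This is only a sketch: it relies on the `VIRTUAL_ENV` variable that the venv activation scripts export, and the function name `require_venv` is my own, not part of sd-scripts.

```shell
#!/usr/bin/env bash
# require_venv: succeed only if a Python virtual environment is active.
# The activate scripts export VIRTUAL_ENV; the function name is hypothetical.
require_venv() {
    if [ -z "${VIRTUAL_ENV:-}" ]; then
        echo "No virtual environment is active. Run: source venv/bin/activate" >&2
        return 1
    fi
    echo "Using environment: $VIRTUAL_ENV"
}

# Example: stop a wrapper script early when the environment is missing.
# require_venv || exit 1
```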

Install Dependencies

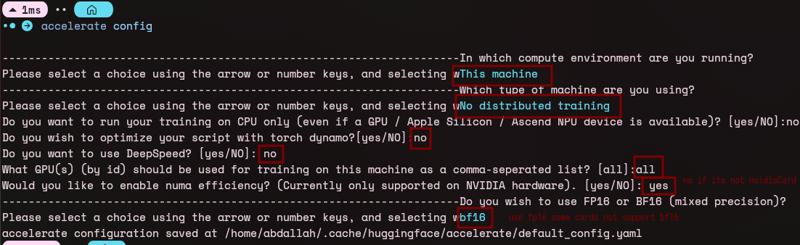

pip install -r requirements.txt

Set up Accelerate:

accelerate config

Dataset Preparation

Folder structure for images and descriptions:

/dataset
├── image1.jpg
├── image1.txt
├── image2.jpg
├── image2.txt
├── image3.jpg
├── image3.txt
└── ...

Explanation:

Images: The dataset folder contains images (e.g., image1.jpg, image2.jpg).

Text Descriptions: Each image has a corresponding text file (e.g., image1.txt, image2.txt). The text files should contain descriptions of the image content, which guide the model during training.

Example Content of image1.txt:

A person wearing a traditional abaya walking through a marketplace.
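Before training, it can help to confirm that every image really has its caption file. The sketch below assumes images end in .jpg, .jpeg, or .png and captions in .txt; the function name `list_missing_captions` is hypothetical.

```shell
#!/usr/bin/env bash
# list_missing_captions DIR: print every image in DIR that has no
# same-named .txt caption file next to it.
list_missing_captions() {
    local dir="$1" img
    for img in "$dir"/*.jpg "$dir"/*.jpeg "$dir"/*.png; do
        [ -e "$img" ] || continue            # pattern matched nothing
        [ -f "${img%.*}.txt" ] || echo "$img"
    done
}

# Example: list_missing_captions ./dataset
```

An empty result means every image found has a caption.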

Note: you can use my workflow to prepare the dataset.

Model Options

I would like to explain how to run training on a 12 GB GPU at a good speed.

(This part will be updated based on community experience and feedback.)

Model Download Guide

This guide helps you choose the correct models to download based on your GPU capacity, separating options for low and high GPU setups. FP8 models are ideal for users with more standard GPUs, while FP16 models are for high-end GPUs.

1. Low GPU (FP8 Models)

For users with standard GPUs like the RTX 30xx/40xx series or similar, use FP8 models for better efficiency.

Flux Model (FP8)

T5 Text Encoder (FP8)

CLIP Model

Autoencoder (AE)

2. High GPU (FP16 Models)

If you have a high-end GPU such as A100, H100, or powerful RTX 40xx GPUs, FP16 models offer greater precision.

Flux Model (FP16)

T5 Text Encoder (FP16)
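If you are not sure which group your card falls into, you can read its total memory with nvidia-smi and compare it with a cut-off you choose. This is only a rough sketch: the function name `pick_precision` and the 24000 MiB cut-off in the example are my own assumptions, not a recommendation from sd-scripts.

```shell
#!/usr/bin/env bash
# pick_precision TOTAL_MIB THRESHOLD_MIB
# Print "fp16" when the GPU memory reaches the threshold, otherwise "fp8".
# Both the function name and any threshold you pass in are hypothetical.
pick_precision() {
    if [ "$1" -ge "$2" ]; then
        echo fp16
    else
        echo fp8
    fi
}

# Example (needs the NVIDIA driver; 24000 is an assumed cut-off):
# total="$(nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits | head -n 1)"
# pick_precision "$total" 24000
```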

Training

1. Create Dataset Configuration File

First, create a dataset configuration file named dataset.toml. This file specifies the dataset location and various training parameters.

Create dataset.toml with the following contents:

[[datasets]]
enable_bucket = true
resolution = [512, 512]
bucket_reso_steps = 64
max_bucket_reso = 2048
min_bucket_reso = 128
bucket_no_upscale = false
batch_size = 1
random_crop = false
shuffle_caption = false

[[datasets.subsets]]
image_dir = "Dataset location"
num_repeats = 1
caption_extension = ".txt"

num_repeats can be 2, 3, 4, or 5: the fewer image samples you have, the more repeats you should use.

Replace "Dataset location" with the actual path to your dataset, e.g. ./dataset
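If you change the dataset path or num_repeats often, you can generate the file instead of editing it by hand. The sketch below prints the same settings as above with the two values filled in; the function name `write_dataset_toml` is hypothetical.

```shell
#!/usr/bin/env bash
# write_dataset_toml IMAGE_DIR [NUM_REPEATS]
# Print a dataset.toml with the settings shown above.
# NUM_REPEATS defaults to 1. The function name is hypothetical.
write_dataset_toml() {
    local image_dir="$1" num_repeats="${2:-1}"
    cat <<EOF
[[datasets]]
enable_bucket = true
resolution = [512, 512]
bucket_reso_steps = 64
max_bucket_reso = 2048
min_bucket_reso = 128
bucket_no_upscale = false
batch_size = 1
random_crop = false
shuffle_caption = false

[[datasets.subsets]]
image_dir = "$image_dir"
num_repeats = $num_repeats
caption_extension = ".txt"
EOF
}

# Example: write_dataset_toml ./dataset 3 > dataset.toml
```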

2. Run the Training Script

With the dataset configuration file prepared, you can proceed with training the model using the flux_train_network.py script. Make sure to replace paths to models and other configurations as needed.

Use the following command to start the training process:

Note: run this in the terminal, and make sure you are inside the environment you created.

accelerate launch \
--mixed_precision fp16 \
--num_cpu_threads_per_process 1 \
flux_train_network.py \
--pretrained_model_name_or_path <default_fp8_model_path_or_url> \
--clip_l <default_clip_l_model_path_or_url> \
--ae <default_ae_model_path_or_url> \
--cache_latents_to_disk \
--save_model_as safetensors \
--sdpa \
--persistent_data_loader_workers \
--max_data_loader_n_workers 2 \
--seed 42 \
--gradient_checkpointing \
--save_precision bf16 \
--network_module networks.lora_flux \
--network_dim 16 \
--network_alpha 8 \
--optimizer_type adamw8bit \
--learning_rate 2e-4 \
--lr_scheduler constant_with_warmup \
--lr_warmup_steps 20 \
--cache_text_encoder_outputs \
--cache_text_encoder_outputs_to_disk \
--fp8_base \
--max_train_steps 120 \
--save_every_n_epochs 1 \
--dataset_config /path/to/dataset.toml \
--output_dir /home/abdallah/Desktop/webui/sd-scripts/x \
--output_name flux-lora-name \
--timestep_sampling shift \
--discrete_flow_shift 3.1582 \
--model_prediction_type raw \
--guidance_scale 1.0 \
--t5xxl <default_t5xxl_model_path_or_url> \
--split_mode \
--network_args "train_blocks=single" \
--max_grad_norm 1.0 \
--gradient_accumulation_steps 4 \
--clip_skip 2 \
--min_snr_gamma 5 \
--noise_offset 0.1
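To get a feel for how --max_train_steps relates to epochs, here is a rough estimate in shell arithmetic. It assumes that one training step is one optimizer update, made after gradient_accumulation_steps batches, and it ignores the extra partial batches that bucketing can create, so treat the result as approximate. The image count and num_repeats in the example are made-up numbers, and the function names are my own.

```shell
#!/usr/bin/env bash
# ceil_div A B: integer division rounded up.
ceil_div() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

# steps_per_epoch IMAGES REPEATS BATCH_SIZE GRAD_ACCUM
# Approximate optimizer steps in one epoch (assumption: one step per
# GRAD_ACCUM batches; bucketing effects are ignored).
steps_per_epoch() {
    local batches
    batches="$(ceil_div $(( $1 * $2 )) "$3")"
    ceil_div "$batches" "$4"
}

# Example with hypothetical data: 20 images, num_repeats 3,
# batch_size 1, gradient_accumulation_steps 4.
# steps_per_epoch 20 3 1 4    # prints 15
# ceil_div 120 15             # prints 8, the epochs covered by 120 steps
```

With --save_every_n_epochs 1, that example run would save roughly eight checkpoints.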

My successful examples

adamw8bit example

accelerate launch --mixed_precision bf16 --num_cpu_threads_per_process 1 flux_train_network.py \
--pretrained_model_name_or_path /home/abdallah/Desktop/webui/ComfyUI/models/diffusion_models/fp8-kaji.safetensors \
--clip_l /home/abdallah/Desktop/webui/stable-diffusion-webui/models/text_encoder/clip_l.safetensors \
--ae /home/abdallah/Desktop/webui/stable-diffusion-webui/models/VAE/ae.safetensors \
--cache_latents_to_disk \
--save_model_as safetensors \
--sdpa \
--persistent_data_loader_workers \
--max_data_loader_n_workers 2 \
--seed 42 \
--gradient_checkpointing \
--mixed_precision bf16 \
--save_precision bf16 \
--network_module networks.lora_flux \
--network_dim 16 \
--network_alpha 8 \
--optimizer_type adamw8bit \
--learning_rate 2e-4 \
--lr_scheduler constant_with_warmup \
--lr_warmup_steps 20 \
--cache_text_encoder_outputs \
--cache_text_encoder_outputs_to_disk \
--fp8_base \
--max_train_steps 120 \
--save_every_n_epochs 1 \
--dataset_config dataset_1024_bs2.toml \
--output_dir /home/abdallah/Desktop/webui/sd-scripts/x \
--output_name flux-lora-name \
--timestep_sampling shift \
--discrete_flow_shift 3.1582 \
--model_prediction_type raw \
--guidance_scale 1.0 \
--t5xxl /home/abdallah/Desktop/webui/stable-diffusion-webui/models/text_encoder/fp8.safetensors \
--split_mode \
--network_args "train_blocks=single" \
--max_grad_norm 1.0 \
--gradient_accumulation_steps 4 \
--clip_skip 2 \
--min_snr_gamma 5 \
--noise_offset 0.1

prodigy example

accelerate launch --mixed_precision bf16 --num_cpu_threads_per_process 1 flux_train_network.py \
--pretrained_model_name_or_path /home/abdallah/Desktop/webui/ComfyUI/models/diffusion_models/fp8-kaji.safetensors \
--clip_l /home/abdallah/Desktop/webui/stable-diffusion-webui/models/text_encoder/clip_l.safetensors \
--ae /home/abdallah/Desktop/webui/stable-diffusion-webui/models/VAE/ae.safetensors \
--cache_latents_to_disk \
--save_model_as safetensors \
--sdpa \
--persistent_data_loader_workers \
--max_data_loader_n_workers 2 \
--seed 42 \
--gradient_checkpointing \
--mixed_precision bf16 \
--save_precision bf16 \
--network_module networks.lora_flux \
--network_dim 16 \
--network_alpha 8 \
--optimizer_type prodigy \
--learning_rate 1 \
--cache_text_encoder_outputs \
--cache_text_encoder_outputs_to_disk \
--fp8_base \
--max_train_steps 120 \
--save_every_n_epochs 1 \
--dataset_config dataset_1024_bs2.toml \
--output_dir /home/abdallah/Desktop/webui/sd-scripts/x \
--output_name flux-lora-name \
--timestep_sampling shift \
--discrete_flow_shift 3.1582 \
--model_prediction_type raw \
--guidance_scale 1.0 \
--t5xxl /home/abdallah/Desktop/webui/stable-diffusion-webui/models/text_encoder/fp8.safetensors \
--split_mode \
--network_args "train_blocks=single" \
--max_grad_norm 1.0 \
--gradient_accumulation_steps 4 \
--clip_skip 2 \
--min_snr_gamma 5 \
--noise_offset 0.1 \
--optimizer_args "d0=1e-4" "d_coef=0.215" "weight_decay=0.01"

Last update

accelerate launch --mixed_precision bf16 --num_cpu_threads_per_process 4 flux_train_network.py \
--pretrained_model_name_or_path /home/abdallah/Desktop/webui/ComfyUI/models/diffusion_models/fp8-kaji.safetensors \
--clip_l /home/abdallah/Desktop/webui/stable-diffusion-webui/models/text_encoder/clip_l.safetensors \
--ae /home/abdallah/Desktop/webui/stable-diffusion-webui/models/VAE/ae.safetensors \
--cache_latents_to_disk \
--save_model_as safetensors \
--sdpa \
--persistent_data_loader_workers \
--max_data_loader_n_workers 4 \
--seed 42 \
--gradient_checkpointing \
--mixed_precision bf16 \
--save_precision bf16 \
--network_module networks.lora_flux \
--network_dim 32 \
--network_alpha 32 \
--optimizer_type prodigy \
--learning_rate 0.5 \
--cache_text_encoder_outputs \
--cache_text_encoder_outputs_to_disk \
--fp8_base \
--max_train_steps 500 \
--save_every_n_epochs 2 \
--dataset_config dataset_1024_bs2.toml \
--output_dir /home/abdallah/Desktop/webui/sd-scripts/x \
--output_name flux-lora-name \
--timestep_sampling shift \
--discrete_flow_shift 3.1582 \
--model_prediction_type raw \
--guidance_scale 1.0 \
--t5xxl /home/abdallah/Desktop/webui/stable-diffusion-webui/models/text_encoder/fp8.safetensors \
--split_mode \
--network_args "train_blocks=single" "algo=locon" \
--max_grad_norm 0.8 \
--gradient_accumulation_steps 4 \
--clip_skip 2 \
--min_snr_gamma 5 \
--noise_offset 0.1 \
--optimizer_args "d0=1e-4" "d_coef=0.215" "weight_decay=0.015" \
--lr_scheduler cosine_with_restarts \
--train_batch_size 2