Introduction

In the ever-evolving world of AI-driven creativity, tools like EbSynth and the AUTOMATIC1111 (A1111) Stable Diffusion WebUI are pushing the boundaries of what's possible with video synthesis. Whether you want to restyle live-action footage or give an animation a new look, combining the two lets you transform an entire video by editing only a handful of keyframes. In this guide, we'll explore how to use EbSynth together with A1111 and its EbSynth Utility extension to create realistic, stylized videos, from setup to final render.


System Requirements

To run the EbSynth Utility extension in combination with AUTOMATIC1111 for video generation, you'll need the following hardware and software setup:

Operating System:

Windows 10 or higher, macOS, or Linux.

GPU:

Minimum: NVIDIA GPU with at least 4GB VRAM. NVIDIA GPUs are strongly recommended because A1111 and most of the extensions and models used in this setup are built and tested primarily for CUDA.

Recommended: 8GB VRAM or higher for smoother performance, especially when dealing with longer videos and higher resolutions.

CPU:

Minimum: Multi-core processor, 4 cores or higher.

Recommended: 6-core or better for faster processing.

RAM:

Minimum: 8GB RAM.

Recommended: 16GB or more, especially for handling higher resolution frames and longer sequences.

Storage:

At least 10GB free space for software and project files. More may be required for larger videos and higher quality renders.

Software Dependencies:

FFmpeg (required for video processing).

EbSynth (available at ebsynth.com).

AUTOMATIC1111 Stable Diffusion WebUI installed and configured.

ControlNet extension (recommended for better motion control and flicker reduction).

Having a powerful GPU and sufficient VRAM is critical, as Stable Diffusion-based processes can be resource-intensive, particularly when generating high-resolution or long-duration videos.

Dependencies

To get started with EbSynth Utility and AUTOMATIC1111 for video synthesis, you’ll need to install several key dependencies. Below is a step-by-step guide to set everything up:

1. Install FFmpeg

FFmpeg is essential for video processing tasks such as encoding and decoding video files.

Windows:

Download the latest FFmpeg version from the FFmpeg official website.

Extract the archive and add its bin directory to your system's PATH environment variable:

Right-click "This PC" → Properties → Advanced system settings → Environment Variables.

In the "System variables" section, locate the Path variable and click Edit.

Add the path to the FFmpeg bin directory (e.g., C:\ffmpeg\bin).

macOS:

Open Terminal and install FFmpeg via Homebrew:

brew install ffmpeg

Linux:

Use your distribution's package manager to install FFmpeg; for example, on Debian or Ubuntu:

sudo apt install ffmpeg
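Whichever platform you installed FFmpeg on, running ffmpeg -version in a new terminal should print its version banner. If you plan to script parts of the workflow, the same check can be done from Python. This is a small sketch; the function name ffmpeg_version is hypothetical:

```python
import shutil
import subprocess
from typing import Optional


def ffmpeg_version(executable: str = "ffmpeg") -> Optional[str]:
    """Return the first line of `<executable> -version`, or None if it is not on PATH."""
    path = shutil.which(executable)  # same PATH lookup your shell performs
    if path is None:
        return None
    result = subprocess.run(
        [path, "-version"], capture_output=True, text=True, check=True
    )
    return result.stdout.splitlines()[0] if result.stdout else None


if __name__ == "__main__":
    version = ffmpeg_version()
    print(version or "FFmpeg not found: check that its bin directory is on PATH")
```

If this prints the "not found" message on Windows, open a new terminal after editing PATH, since already-open terminals keep the old value.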

2. Install EbSynth

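Download EbSynth from the official website (ebsynth.com), choosing the version for your operating system, and extract it to a convenient location. This is the standalone EbSynth application, separate from the A1111 extension installed in step 4; you will use it to open the generated .ebs project files in Stage 6.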

3. Install AUTOMATIC1111 WebUI

To use EbSynth with Stable Diffusion, you'll need the AUTOMATIC1111 web UI.

Clone the repository:

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui
cd stable-diffusion-webui

Run the setup script:

Windows: Run webui-user.bat in the cloned directory.

macOS/Linux: Run the following in your terminal:

./webui.sh

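On first launch, the script creates a Python virtual environment and installs the required packages, which can take a while. Place the Stable Diffusion checkpoint you want to use in the models/Stable-diffusion folder. Once startup finishes, open the WebUI in your browser; by default it is served at http://127.0.0.1:7860.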

4. Install the EbSynth Utility Extension for AUTOMATIC1111

To integrate EbSynth with AUTOMATIC1111, you need to install the EbSynth utility extension:

Open the AUTOMATIC1111 WebUI.

Go to the Extensions tab and click on "Install from URL."

Paste the following repository link and click Install:

https://github.com/s9roll7/ebsynth_utility

After installation, restart the WebUI to enable the extension.

5. (Optional but strongly recommended) Install ControlNet for Better Motion Control

ControlNet is highly recommended for improved video control and reducing flickering during the EbSynth process.

Go to the Extensions tab in AUTOMATIC1111 WebUI.

Install the ControlNet extension by using the following repository URL:

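https://github.com/Mikubill/sd-webui-controlnet

After installation, restart the WebUI. ControlNet models are downloaded separately and placed in the models/ControlNet folder; each ControlNet unit is then switched on with its Enable checkbox in the ControlNet panel of the img2img tab.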

EbSynth Utility Steps

Once all dependencies are installed, follow these steps to use EbSynth Utility with AUTOMATIC1111 to create realistic, animated videos from images.

1. Prepare Your Project

Before you begin, make sure your source video (or image sequence) is ready. EbSynth works by propagating the look of a few edited keyframes onto the surrounding frames of the original footage, so the keyframes need to capture the major changes in your video.

Open the AUTOMATIC1111 WebUI and navigate to the EbSynth Utility tab.

Create an empty directory for your project. This directory will hold the original video, keyframes, and output files.

In the Project Directory field, enter the path to your new project folder.

Under Original Movie Path, specify the video file you’ll be working with.

2. Extract Frames (Stage 1)

Keyframes are essential for controlling the animation: EbSynth propagates each edited keyframe onto its neighboring frames, and the overlapping results are later crossfaded into smooth transitions.

Select Stage 1 in the EbSynth Utility tab.

Click Generate. This extracts every frame of your video into individual images that the later stages work from (per the extension's README, Stage 1 also generates mask images).

Keyframes are then chosen in Stage 2, where the extension adapts their spacing to the footage: where the video has a lot of motion it places keyframes closer together, and where there is little motion it spaces them further apart.
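The idea behind motion-adaptive keyframe spacing can be sketched as follows. This is a simplified illustration, not the extension's actual code: it assumes you already have one "change score" per frame (for example, the mean absolute pixel difference from the previous frame), and the parameter names min_gap, max_gap and threshold are hypothetical stand-ins; check the Stage 2 options in your version of the extension for the real controls.

```python
from typing import List, Sequence


def select_keyframes(change: Sequence[float], *, min_gap: int,
                     max_gap: int, threshold: float) -> List[int]:
    """Choose keyframe indices from per-frame change scores.

    change[i] measures how much frame i differs from frame i - 1 (change[0] is
    ignored). A new keyframe is placed once the change accumulated since the last
    keyframe reaches `threshold`, but never closer than `min_gap` frames, and at
    the latest after `max_gap` frames.
    """
    if not change:
        return []
    keyframes = [0]  # the first frame always starts a range
    accumulated = 0.0
    for i in range(1, len(change)):
        accumulated += change[i]
        gap = i - keyframes[-1]
        if (accumulated >= threshold and gap >= min_gap) or gap >= max_gap:
            keyframes.append(i)
            accumulated = 0.0
    return keyframes


# Fast motion in frames 1-9, almost none afterwards.
scores = [0.0] + [5.0] * 9 + [0.1] * 40
print(select_keyframes(scores, min_gap=2, max_gap=20, threshold=10.0))
# [0, 2, 4, 6, 8, 28, 48]
```

Keyframes land every two frames in the fast section and only every max_gap frames in the calm one, which is the behavior described above.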

3. Edit Keyframes with Image-to-Image (Stage 2 & 3)

Now you can modify keyframes to apply the artistic or style changes you want across your video. This step involves using the img2img feature for manual editing, and the EbSynth script for added control and precision.

Step 3.1: Edit Keyframes Using img2img

Run Stage 2 so the extension selects the keyframes, then switch to the img2img tab to edit them (Stage 3).

In this stage, you can adjust the Denoising Strength to control how much of the original image is maintained. Lower values will keep more details from the original keyframe, while higher values will apply more artistic changes.
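A rough way to see why low Denoising Strength values preserve more of the keyframe: by default, A1111 adds only part of the noise to the input image and then runs approximately denoising strength × sampling steps of denoising, so at 0.25 with 20 steps the image changes far less than at 0.75. The sketch below captures that rule of thumb; the function name is hypothetical, the 0.999 cap reflects our understanding of the WebUI's default behavior, and the relationship changes if you enable the img2img setting that runs exactly the number of steps on the slider.

```python
def img2img_effective_steps(denoising_strength: float, steps: int) -> int:
    """Rough number of sampling steps A1111's img2img runs by default.

    The requested step count is scaled by the denoising strength, which is
    capped just below 1.0. Treat this as an approximation of the WebUI's
    behavior, not a copy of its code.
    """
    if not 0.0 <= denoising_strength <= 1.0:
        raise ValueError("denoising_strength must be between 0 and 1")
    return int(min(denoising_strength, 0.999) * steps)


for strength in (0.25, 0.5, 0.75):
    print(strength, img2img_effective_steps(strength, 20))  # 5, 10 and 15 steps
```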

You can also use ControlNet to apply specific effects such as pose matching, edge detection, or depth consistency.

Step 3.2: Integrating the EbSynth Script

In addition to manually adjusting keyframes, you can select the ebsynth utility script from the Script dropdown at the bottom of the img2img tab. It exposes further parameters for more precise control; configure it as follows:

Project Directory: Enter the path to your project directory (e.g., /home/demo).

Mask Option: Set Mask Mode to Normal and Inpaint Area to Only Masked.

Depth Map: Enable Use Depth Map if depth information is available in /video_key_depth. This helps maintain depth accuracy across all frames.

ControlNet Options:

Set ControlNet Weight and ControlNet Weight for Face to 0.5 for balanced control.

Enable Preprocess Image if a preprocessed image exists in /controlnet_preprocess.

Step 3.3: Generate Keyframes with Modifications

Select the keyframes that require modification.

Click Generate to apply the artistic or style effects to the selected keyframes, ensuring that the EbSynth script parameters (e.g., masking, ControlNet weights, and depth maps) are taken into account.

4. Set Up ControlNet for Consistency

To ensure smooth transitions and frame-to-frame consistency, configure ControlNet with the following settings:

ControlNet Unit 0:

Set Preprocessor to none and Model to TTPLANET_Controlnet_Tile_realistic_v2_fp16, a realistic tile model that keeps the output close to the detail and structure of the input keyframe.

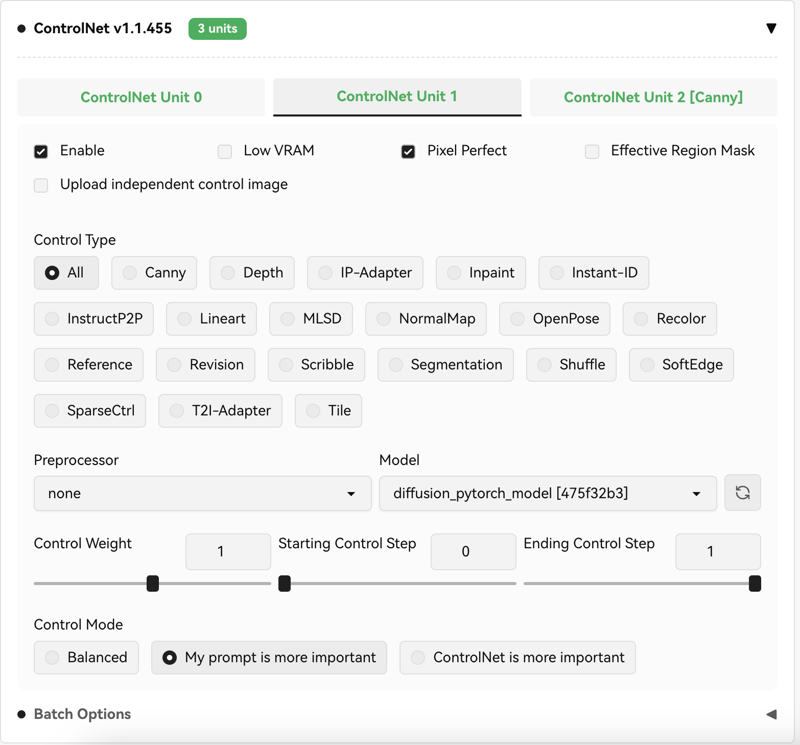

ControlNet Unit 1 (Labeled as diffusion_pytorch_model in the interface):

Set Preprocessor to none and select TemporalNetXL (shown as diffusion_pytorch_model) to maintain consistency across frames.

ControlNet Unit 2 (Canny):

Set Preprocessor to canny and choose diffusers_xl_canny_full for edge detection.

Set the Low Threshold to 100 and the High Threshold to 200 so edge detection picks up the main contours without overly harsh transitions.

**Make sure to select "My prompt is more important" as the Control Mode for all units.**
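If you drive A1111 through its HTTP API instead of the browser (the WebUI must be launched with the --api flag), the three units above can be expressed as ControlNet alwayson_scripts arguments. This is a sketch under assumptions: the field names (module, model, threshold_a, threshold_b, control_mode) follow the sd-webui-controlnet API as we understand it, the model strings must match exactly what your install reports at /controlnet/model_list, and older versions of the extension expect control_mode as an integer rather than a string. The helper names are hypothetical.

```python
import json
from typing import Any, Dict, List

# Control Mode used for every unit, as recommended above. Newer versions of the
# ControlNet extension accept this string; older ones expect the integer 1.
PROMPT_FIRST = "My prompt is more important"


def controlnet_units() -> List[Dict[str, Any]]:
    """The three ControlNet units from this section as API argument dicts.

    Model names must match your install exactly (see /controlnet/model_list).
    """
    base = {"enabled": True, "control_mode": PROMPT_FIRST}
    return [
        # Unit 0: realistic tile model, no preprocessor
        {**base, "module": "none",
         "model": "TTPLANET_Controlnet_Tile_realistic_v2_fp16"},
        # Unit 1: TemporalNetXL, listed in the UI as diffusion_pytorch_model
        {**base, "module": "none", "model": "diffusion_pytorch_model"},
        # Unit 2: Canny preprocessor with low/high thresholds of 100/200
        {**base, "module": "canny", "model": "diffusers_xl_canny_full",
         "threshold_a": 100, "threshold_b": 200},
    ]


def img2img_payload(init_image_b64: str, prompt: str,
                    denoising_strength: float) -> Dict[str, Any]:
    """Minimal request body for POST /sdapi/v1/img2img with the units attached."""
    return {
        "init_images": [init_image_b64],  # base64-encoded keyframe
        "prompt": prompt,
        "denoising_strength": denoising_strength,
        "alwayson_scripts": {"controlnet": {"args": controlnet_units()}},
    }


print(json.dumps(img2img_payload("<base64 PNG>", "oil painting", 0.4), indent=2))
```

Send the resulting JSON with any HTTP client to http://127.0.0.1:7860/sdapi/v1/img2img. Keeping the units in one function makes it easy to reuse the same ControlNet setup for every keyframe.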


5. Render the Transition Frames (Stage 4 to 7)

Once your keyframes are edited and ControlNet is set up, you can let EbSynth generate the in-between frames to create smooth transitions.

Stage 4: Upscale the keyframes to the resolution of the original video, if needed.

Stage 5: Rename the keyframes and generate the .ebs file (EbSynth project file).

Stage 6: Run EbSynth locally: open the .ebs file in the project directory and press the Run All button. If there are multiple .ebs files, run them all.

Stage 7: Concatenate the frames while crossfading, then add the original audio back to the generated video.
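Stage 7's crossfade can be pictured as a linear blend: a frame lying between two keyframes is rendered once from each of them, and the two results are mixed with a weight that moves from the earlier keyframe to the later one. The sketch below shows that idea for a single frame stored as a flat list of pixel values; it illustrates linear crossfading in general, not the extension's code, and the function name is hypothetical.

```python
from typing import List, Sequence


def crossfade_frame(
    from_prev: Sequence[float],
    from_next: Sequence[float],
    frame: int,
    prev_key: int,
    next_key: int,
) -> List[float]:
    """Blend the two renders of `frame`, which lies between two keyframes.

    from_prev: pixels propagated from the keyframe at index prev_key
    from_next: pixels propagated from the keyframe at index next_key
    """
    if prev_key == next_key or not prev_key <= frame <= next_key:
        raise ValueError("frame must lie between two distinct keyframes")
    if len(from_prev) != len(from_next):
        raise ValueError("both renders must have the same size")
    w = (frame - prev_key) / (next_key - prev_key)  # 0 at prev_key, 1 at next_key
    return [(1.0 - w) * a + w * b for a, b in zip(from_prev, from_next)]


print(crossfade_frame([0, 100], [100, 200], frame=15, prev_key=10, next_key=20))
# [50.0, 150.0]
```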

6. Export the Final Video

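Once EbSynth has rendered all frames and Stage 7 has crossfaded them, the extension writes out your completed video. EbSynth itself only produces image sequences (the out-* folders in the project directory).

If you prefer, you can reassemble the frames into a video file yourself with FFmpeg instead of relying on Stage 7.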
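If you reassemble frames yourself with FFmpeg, a typical command takes the numbered frame images, encodes them as H.264 at the original frame rate, and takes the audio track from the source video. The Python sketch below only builds that command; the folder layout, the %05d.png naming and the frame rate are assumptions, so match them to your project's output.

```python
from typing import List


def reassemble_command(frames_pattern: str, original_video: str,
                       output: str, fps: str = "30") -> List[str]:
    """Build (but do not run) an ffmpeg command that turns numbered frames
    into an H.264 MP4 carrying the original video's audio track."""
    return [
        "ffmpeg", "-y",                            # overwrite output without asking
        "-framerate", fps, "-i", frames_pattern,   # input 0: the image sequence
        "-i", original_video,                      # input 1: source of the audio
        "-map", "0:v:0",                           # video from the frames
        "-map", "1:a:0?",                          # audio from the original, if present
        "-c:v", "libx264", "-pix_fmt", "yuv420p",  # widely playable H.264
        "-c:a", "aac",
        "-shortest",                               # end with the shorter stream
        output,
    ]


cmd = reassemble_command("project/frames/%05d.png", "project/input.mp4",
                         "project/output.mp4", fps="30000/1001")
print(" ".join(cmd))
```

Pass the list to subprocess.run(cmd, check=True) to execute it; building an argument list rather than a shell string avoids quoting problems with paths that contain spaces. To read the source frame rate, ffprobe -v error -select_streams v:0 -show_entries stream=r_frame_rate -of default=noprint_wrappers=1:nokey=1 input.mp4 prints it as a fraction such as 30000/1001.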

You can export in multiple formats depending on your needs. Common choices include .mp4 or .avi, depending on the required resolution and quality.

Summarized

Basic Vid2Vid Workflow

This is the simplest way to transform an original video using EbSynth Utility. You extract keyframes, modify them with img2img, and optionally use ControlNet for improved transitions. EbSynth generates the frames between keyframes automatically, which keeps transitions smooth and reduces flicker. This is ideal for users new to EbSynth who want a quick, effective video transformation without complex parameters.

Vid2Vid with Multi-ControlNet

In this workflow, you use multiple ControlNet units for finer control over specific aspects of the video. For instance, Canny in ControlNet Unit 2 handles edge detection while TemporalNetXL (labeled as diffusion_pytorch_model) in ControlNet Unit 1 keeps frames consistent. This approach suits cases where you need fine-grained control over several elements at once, such as poses and edge refinement.

Img2Img with EbSynth Script

This workflow combines img2img modifications with the EbSynth script to give you precise control over frame edits. You manually adjust keyframes through img2img (with optional ControlNet effects), and the EbSynth script lets you fine-tune masking, depth mapping, and preprocessing for even better results. This method is highly flexible, allowing you to maintain detailed control over specific sections of the video.

Vid2Vid with Color Correction

In this workflow, you add an optional color correction stage (Stage 3.5 in the extension) after modifying keyframes with img2img. Correcting color at this stage helps reduce flickering and ensures consistency across the video. This is ideal when transitioning between frames that have slightly different lighting or color tones. If you're aiming for a professional finish, this workflow helps bring cohesion to your entire video.
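To make color correction concrete, here is one common, simple technique: shift and scale each RGB channel of a keyframe so that its mean and standard deviation match a reference frame (for example, the corresponding original frame). This is a swapped-in illustration in the spirit of Reinhard-style color transfer, applied in RGB for simplicity; the extension's own color-correction step may use a different method. Plain lists of (R, G, B) tuples are used only for readability, and the function name is hypothetical.

```python
from statistics import fmean, pstdev
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]


def match_color_stats(pixels: Sequence[Pixel],
                      reference: Sequence[Pixel]) -> List[Pixel]:
    """Match the per-channel mean and standard deviation of `pixels` to `reference`."""
    channels_out = []
    for c in range(3):
        src = [p[c] for p in pixels]
        ref = [p[c] for p in reference]
        mu_s, sd_s = fmean(src), pstdev(src)
        mu_r, sd_r = fmean(ref), pstdev(ref)
        # A flat source channel cannot be rescaled, so it is just moved to the reference mean.
        scale = sd_r / sd_s if sd_s > 0 else 0.0
        channels_out.append(
            [min(255, max(0, round((v - mu_s) * scale + mu_r))) for v in src]
        )
    return list(zip(*channels_out))  # back to (R, G, B) tuples


print(match_color_stats([(0, 0, 0), (10, 20, 30)],
                        [(100, 100, 100), (120, 140, 160)]))
# [(100, 100, 100), (120, 140, 160)]
```

In practice you would run this on real image arrays with an imaging library; matching statistics rather than individual pixels is what lets it remove a global color cast without copying the reference's content.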

Upscale & Concatenate

Once you’ve finished modifying keyframes, you move into the upscaling phase (Stage 4), which brings your keyframes up to the original video’s resolution. After EbSynth has rendered the in-between frames, Stage 7 concatenates them with crossfades between transitions and reattaches the audio extracted from the original video. This method is perfect for delivering a polished final product ready for export.

Background Replacement in Stage 8

This is an advanced workflow where you introduce a custom background to your video. You can specify a background image or video in the EbSynth Utility configuration under Stage 8, replacing the original scene behind your keyframe edits. This workflow is particularly useful for creative projects where you want to completely alter the environment of the video.
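Background replacement comes down to alpha compositing: each output pixel mixes the stylized foreground and the new background according to a foreground mask. A minimal sketch, assuming a per-pixel mask with values from 0 (background) to 1 (foreground), for example derived from the mask images that, per the extension's README, Stage 1 generates. Lists of gray values stand in for real images, and the function name is hypothetical.

```python
from typing import List, Sequence


def composite(foreground: Sequence[float], background: Sequence[float],
              mask: Sequence[float]) -> List[float]:
    """out = mask * foreground + (1 - mask) * background, pixel by pixel."""
    if not (len(foreground) == len(background) == len(mask)):
        raise ValueError("foreground, background and mask must be the same size")
    out = []
    for fg, bg, m in zip(foreground, background, mask):
        m = min(1.0, max(0.0, m))  # clamp soft mask edges into [0, 1]
        out.append(m * fg + (1.0 - m) * bg)
    return out


print(composite([200, 200, 200], [0, 50, 100], [1.0, 0.5, 0.0]))
# [200.0, 125.0, 100.0]
```

Soft (fractional) mask values along the subject's outline are what keep the edge from looking cut out.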

Model I Used (PonyXL)

When working with the PonyXL model, there are key differences in how it operates compared to standard 1.5 models, particularly when integrating ControlNets. Since PonyXL is based on the XL architecture, it requires specific XL ControlNets and optimized settings to achieve the best results.

Key Differences Between PonyXL and 1.5 Models:

Model Architecture:

PonyXL is fine-tuned from Stable Diffusion XL (SDXL), a newer and larger architecture than Stable Diffusion 1.5. SDXL models work at a higher native resolution (1024×1024 rather than 512×512) and generally produce more detailed images.

Compared with 1.5 models, PonyXL uses a larger UNet and, at its native resolution, a latent with four times as many positions, so it needs more computational resources (especially GPU VRAM) and more fine-tuning to stay consistent across video frames.
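The latent-size difference is easy to quantify: both SD 1.5 and SDXL use a VAE that downsamples each side by a factor of 8 into a 4-channel latent, so the latent grows with the square of the side length. A quick calculation follows; the helper name is hypothetical, and it covers only the latent, not the larger SDXL UNet weights that also drive VRAM use.

```python
from typing import Tuple


def latent_shape(width: int, height: int, channels: int = 4,
                 factor: int = 8) -> Tuple[int, int, int]:
    """Shape (channels, height, width) of the latent for a width x height image."""
    if width % factor or height % factor:
        raise ValueError(f"width and height should be multiples of {factor}")
    return (channels, height // factor, width // factor)


sd15 = latent_shape(512, 512)    # native SD 1.5 resolution
sdxl = latent_shape(1024, 1024)  # native SDXL / PonyXL resolution
ratio = (sdxl[1] * sdxl[2]) / (sd15[1] * sd15[2])
print(sd15, sdxl, ratio)  # (4, 64, 64) (4, 128, 128) 4.0
```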

ControlNet Settings for XL Models:

XL ControlNets are necessary for models like PonyXL. You cannot use the same 1.5 ControlNets as they are not compatible with the deeper architecture and advanced features of the XL models.

When setting up ControlNet for PonyXL, you should choose diffusers_xl ControlNets specifically designed for XL models (e.g., diffusers_xl_canny_full, diffusers_xl_depth_full).

These ControlNets provide more control over higher resolutions and complex video transformations, maintaining consistency, and reducing flicker between frames.

Performance Requirements:

Since PonyXL and the XL ControlNets require significantly more VRAM, it's recommended to use at least an 8GB VRAM GPU (preferably 12GB or more) to ensure smooth processing.

Denoising and masking thresholds are different in PonyXL; you may need to lower denoising strengths slightly compared to 1.5 models to maintain details in high-res frames.

Prompt and ControlNet Adjustments:

Prompt Sensitivity: Prompts in PonyXL tend to be more detailed and responsive, which means the adjustments in prompts can have more significant effects on the final output. You should consider adjusting prompts with more precise details, particularly if you're blending various styles or artistic elements.

ControlNet Control Weight: You may need to fine-tune ControlNet weights more carefully in PonyXL projects. The standard 0.5 weight sometimes needs to be raised slightly, especially for high-contrast or highly detailed keyframes; increase it in small steps so the ControlNet effects don’t overpower the artistic style applied via img2img or prompts.

Workflow Considerations with PonyXL:

When using PonyXL, the workflow follows the same stages as described in the EbSynth Utility setup but requires additional focus on resource allocation and fine-tuning settings in the img2img and ControlNet stages.

Make sure to select XL ControlNet models in every step where ControlNet is applied, and allocate more time for rendering, especially when working with high-resolution videos or intricate frame transitions.

By understanding these differences and making the necessary adjustments, you can maximize the output quality when working with PonyXL and ensure that your video frames are rendered smoothly with consistent quality across keyframes.

