Results

Something to keep you interested in reading the rest of this loooong article:

A sample of the (final?) results!

Prompt: "1girl,anime, long_hair, dress, orange_hair,, solo,makeup, black_hair, looking_at_viewer, bare_shoulders, red_eyes, freckles, upper_body"

Left to right: SDXL base, Juggernaut 9 base, final model finetune.

Model training settings used here:

LION, constant scheduler, LR=1e-05, EMA update step 200, 10 epochs, 600 images.

All images were tagged with just "1girl,anime".

This fit into just under 16GB of VRAM using OneTrainer (BF16, plus xformers and gradient checkpointing). It runs at 2 it/s on a 4090.
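To put those numbers in perspective, here is a back-of-the-envelope estimate of how long such a run takes. It is only a sketch: the batch size is not stated above, so `batch_size=1` is an assumption, and "it/s" is assumed to mean optimizer steps per second. Time spent on sampling, checkpoint saving, and latent caching is ignored.

```python
import math


def training_estimate(num_images: int, epochs: int, batch_size: int, it_per_sec: float):
    """Return (total optimizer steps, wall-clock minutes) for a simple training run.

    Assumes one optimizer step per batch and a constant it/s rate; ignores
    sampling, checkpoint saving, and caching overhead.
    """
    steps_per_epoch = math.ceil(num_images / batch_size)
    total_steps = steps_per_epoch * epochs
    minutes = total_steps / it_per_sec / 60.0
    return total_steps, minutes


# Settings from this section; batch_size=1 is an assumption.
steps, minutes = training_estimate(num_images=600, epochs=10, batch_size=1, it_per_sec=2.0)
print(f"{steps} steps, ~{minutes:.0f} minutes")
```

With a larger batch size the step count drops proportionally, but each step also gets slower, so the it/s figure would change too.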

This is my story

Please note: this is an account of my training journey. It has not been distilled into a concise step-by-step guide, because... I'm not sure that is actually possible. That being said, I think it will be helpful for many people who aren't already experts in SD(XL) model training.

Based on my experience, it seems like:

Everything depends on everything

The base model you are attempting to finetune

The dataset(s) you want to apply

The tagging method

The optimizer

The number of epochs

The use of EMA or not

The value for EMA update steps used

If you change any ONE of the above, you may end up having to change some or all of the rest to get optimal results.

It started with two datasets

I started with two anime image datasets: a 145-image one and a 600-image one.

Since I was fairly picky about selecting images, the two actually gave quite similar results in my earlier training experiments... so I got lazy and just ran many rapid training experiments with the smaller one.

But once I got to higher learning rates and longer epoch counts, I decided to re-compare against the larger set, and changed my approach.

For the trivial case of training with all images tagged with just "1girl,anime", the smaller, pickier image set does well. However, now that I have gone back to FULL per-image tagging and 50+ epochs, the larger image set seems to perform better.

Roll with float32 or bf16?

float32 was possible to use with much tweaking... but it was waaayyy too slow, even on a 4090. Plus, for anime purposes, it really didn't make much difference. So I gave up and went with bf16 for a 4x speed boost.
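A quick sanity check on the memory side of that trade-off: bf16 stores each value in 2 bytes instead of float32's 4. The sketch below counts only the UNet weights and assumes roughly 2.6 billion parameters for the SDXL UNet. Actual training memory also includes gradients, optimizer state, any EMA copy, the text encoders, and activations, so treat the numbers as a floor, not a budget. It says nothing about speed.

```python
# Approximate parameter count of the SDXL UNet (assumption: ~2.6 billion;
# text encoders and VAE are not included here).
SDXL_UNET_PARAMS = 2.6e9

# Storage size of one value for each training precision.
BYTES_PER_VALUE = {"float32": 4, "bf16": 2}


def weights_gib(num_params: float, dtype: str) -> float:
    """Memory needed just to hold the weights, in GiB."""
    return num_params * BYTES_PER_VALUE[dtype] / 2**30


for dtype in ("float32", "bf16"):
    print(f"{dtype}: {weights_gib(SDXL_UNET_PARAMS, dtype):.1f} GiB for UNet weights alone")
```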

Initial runs

Skipping all my flirting with other optimizers, I went with LION.
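For anyone who has not used LION before, one property matters a lot for the learning rates discussed in this article: the update keeps only the sign of an interpolated momentum, so every weight moves by exactly lr per step (plus weight decay), regardless of how large the gradient is. That is why LION is usually run with a smaller learning rate than AdamW. The following is a minimal pure-Python sketch of one step, following the update rule from the Lion paper ("Symbolic Discovery of Optimization Algorithms") with that paper's default betas. It is not OneTrainer's code, and real implementations operate on tensors.

```python
def sign(x: float) -> int:
    """Return 1, 0, or -1 depending on the sign of x."""
    return (x > 0) - (x < 0)


def lion_step(params, grads, momentum, lr=1e-5, beta1=0.9, beta2=0.99, weight_decay=0.0):
    """One Lion update on plain lists of floats; returns (new_params, new_momentum)."""
    new_params, new_momentum = [], []
    for p, g, m in zip(params, grads, momentum):
        # Interpolate momentum and gradient, then keep only the sign.
        update = sign(beta1 * m + (1 - beta1) * g)
        # Decoupled weight decay plus a fixed-size step of lr in the sign direction.
        new_params.append(p - lr * (update + weight_decay * p))
        # The momentum buffer is updated with a different coefficient (beta2).
        new_momentum.append(beta2 * m + (1 - beta2) * g)
    return new_params, new_momentum


params, grads, momentum = [0.5, -0.2, 0.0], [1000.0, -0.001, 0.0], [0.0, 0.0, 0.0]
new_params, new_momentum = lion_step(params, grads, momentum, lr=1e-5)
print(new_params)
```

Note how the first weight moves by exactly 1e-5 despite a gradient of 1000, and the second moves by the same amount despite a gradient of -0.001.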

There was a lot of fiddling, but we may as well skip the botched runs and pick up the story where I made a clean, methodical fresh start:

LR=1e-06, constant scheduler, no EMA.

I let it run for 50 epochs, and then tried to figure out where to go from there.

(As mentioned further down, however, 50 epochs turned out to be overkill for my goals.)

The jump to Juggernaut

Eventually, I got tired of trying to force base SDXL to make non-horrible humans.

The art style wasn't horrible, but what it did to hands and arms was. Here are some samples of epochs 0-9.

Finally, I decided to see what a different, better-tuned model would give me. I picked Juggernaut, and that's when quality went up drastically.

The version of Juggernaut I used (v9) had a vague understanding that there was a style called "anime", but tended to produce half-realistic renderings. Overall, for my specific purposes, Juggernaut 9 seems a much better platform than base SDXL.

Experiments with "full" tagging

I had the impression that if you were making just a quick style LoRA, fixed keyword tagging is fine, but for FULL finetunes, you needed FULL tagging.

I guess my goals were a little specialized. I wasn't looking to fully extend the model. In essence, I suppose what I was trying to make was "a LoRA, but better quality".

Because of that, it seems like the LoRA approach to tagging worked best.

I tried all of:

145 images, full tagging

145 images, only "1girl,anime" on all

145 images, only "anime" on all

600 images, full tagging

600 images, only "1girl,anime" on all

(and technically some other combinations too)
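The tagging variants above differ only in what goes into each image's caption. Assuming your trainer reads captions from a `.txt` file with the same base name as each image (a common convention; check your trainer's caption settings), a small helper can write either one fixed caption for every image or per-image tags. The `full_tags` mapping stands in for the output of whatever tagger you use and is hypothetical.

```python
from pathlib import Path

IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def write_captions(image_dir, fixed_tags=None, full_tags=None):
    """Write a same-named .txt caption next to each image; return how many were written.

    fixed_tags: e.g. "1girl,anime", so every image gets the same caption.
    full_tags:  dict mapping image file name -> per-image tag string
                (hypothetical output of whatever tagger you use).
    Exactly one of the two must be given.
    """
    if (fixed_tags is None) == (full_tags is None):
        raise ValueError("pass exactly one of fixed_tags or full_tags")
    written = 0
    for image in sorted(Path(image_dir).iterdir()):
        if image.suffix.lower() not in IMAGE_EXTENSIONS:
            continue  # skip existing captions and non-image files
        caption = fixed_tags if fixed_tags is not None else full_tags.get(image.name)
        if caption is None:
            continue  # no per-image tags available for this file
        image.with_suffix(".txt").write_text(caption, encoding="utf-8")
        written += 1
    return written
```

For example, `write_captions("dataset_600", fixed_tags="1girl,anime")` would produce the "1girl,anime on all" variant; the folder name is hypothetical.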

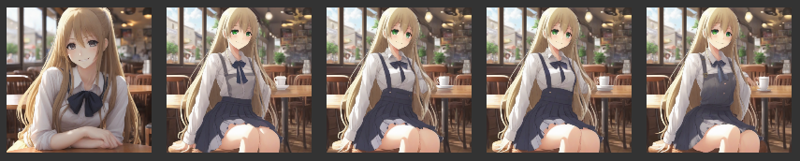

Far left is full tagging. Middle and right are "1girl,anime" vs "anime".

Since my goal was to get an old-school, flatter anime style, I decided that "1girl,anime" was actually the best tagging to use.

How many epochs are optimal

(Note: see also the section above, "Everything depends on everything".)

For this dataset and model, there was a number of epochs past which more training made no difference. The results converged very strongly on a particular output for any one test prompt.

However, I know from prior attempts at training models that there are other combinations of dataset + model + learning rate where the output just keeps morphing indefinitely, so picking a finite cutoff is crucial.

For most of my experiments here, there weren't even noticeable differences past 10 epochs.

This was surprising, particularly since that has not been the case with other datasets and goals I've tried.
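Judging by eye is usually the right call for style work, but if you want a semi-mechanical way to pick that cutoff, one possible heuristic (an assumption, not part of the process described here) is to score how much the fixed-seed test images change between consecutive checkpoints, and stop at the first checkpoint after which the change stays under a threshold for a few comparisons in a row. The difference score itself (for example, a mean absolute pixel difference) is left to you, and the numbers in the usage below are made up.

```python
def settled_checkpoint(diffs, threshold, patience=2):
    """Return the index of the first checkpoint after which outputs stop changing noticeably.

    diffs[i] is a (hypothetical) difference score between the test-prompt samples
    at checkpoint i and checkpoint i + 1, using a fixed seed.
    The outputs count as settled once `patience` consecutive diffs fall below
    `threshold`. Returns None if that never happens.
    """
    quiet = 0
    for i, d in enumerate(diffs):
        if d < threshold:
            quiet += 1
            if quiet == patience:
                # The run of small changes started at checkpoint i - patience + 1.
                return i - patience + 1
        else:
            quiet = 0
    return None


# Made-up difference scores for checkpoints at epochs 0, 10, 20, 30, 40 (not measurements).
checkpoint_epochs = [0, 10, 20, 30, 40]
idx = settled_checkpoint([0.30, 0.04, 0.03, 0.02], threshold=0.05)
if idx is not None:
    print(f"outputs settle from epoch {checkpoint_epochs[idx]}")
```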

Shown below are samples at epochs 0, 10, 20, 30, and 40.

Major milestones discovered

The EMA cliff

Background: EMA is a great tool when your training is getting a little too enthusiastic. However, it does slow down how quickly the model changes.

For a while, I could train up to LR=(edit: I THINK I meant 9e-5 here), with results like this:

But as soon as I pushed it that little extra, to 1e-06, I got

So, for LR=1e-06 and up, I needed to use EMA, which then made it happy again.
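For readers new to EMA: the trainer keeps a second, "shadow" copy of the weights that is updated as a running average of the live weights, and that averaged copy is typically what gets sampled from and saved. A minimal sketch of the idea on a single made-up number, using the textbook update `shadow = decay * shadow + (1 - decay) * value`, shows both effects: step-to-step jitter is smoothed away, but the average also lags behind the underlying trend, which is the slowing-down part. How OneTrainer maps its own EMA settings onto `decay` is not covered here.

```python
import random


def ema_track(values, decay):
    """Exponential moving average: shadow = decay * shadow + (1 - decay) * value."""
    shadow = values[0]
    out = []
    for v in values:
        shadow = decay * shadow + (1 - decay) * v
        out.append(shadow)
    return out


def jitter(xs):
    """Mean absolute step-to-step change."""
    return sum(abs(b - a) for a, b in zip(xs, xs[1:])) / (len(xs) - 1)


random.seed(0)
# A made-up "weight" that drifts upward but jumps around from step to step,
# standing in for an over-enthusiastic training run.
raw = [0.01 * step + random.gauss(0.0, 0.5) for step in range(1000)]
smoothed = ema_track(raw, decay=0.99)

print(f"raw jitter {jitter(raw):.3f}, EMA jitter {jitter(smoothed):.3f}")
print(f"final trend value 9.99, final EMA value {smoothed[-1]:.2f}")
```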

Fine-tuning the fine tune

Tweak the generation output

Sometimes, you may think you are tuning for a particular set of generation settings, but a different set ends up giving better results. So in your testing, don't forget to look at a range. For example, here's a range of CFG values from 4 to 7 on one of my resulting models:

Depending on whether I was going for a soft look or a hard look, I might have considered this training run a "failure" if I had looked at just one value for test output.
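Checking a range like this is easier if the test generations are planned as a grid in which only the settings under test vary. The sketch below just builds the list of jobs (checkpoint x prompt x CFG) with the seed and step count held fixed. The checkpoint file names, seed, and step count are hypothetical, and you would feed each job to whatever generator you use (in Hugging Face diffusers, for instance, CFG is the pipeline's `guidance_scale` argument).

```python
from itertools import product


def sample_grid(checkpoints, cfg_values, prompts, seed=12345, steps=30):
    """Build one generation job per (checkpoint, prompt, cfg) combination.

    Everything except the checkpoint and CFG is held fixed (same seed, same
    step count), so differences between images come from those two knobs.
    """
    return [
        {"checkpoint": ckpt, "prompt": prompt, "cfg": cfg, "seed": seed, "steps": steps}
        for ckpt, prompt, cfg in product(checkpoints, prompts, cfg_values)
    ]


jobs = sample_grid(
    checkpoints=["epoch_020.safetensors", "epoch_050.safetensors", "epoch_100.safetensors"],
    cfg_values=[4.0, 5.0, 6.0, 7.0],
    prompts=["1girl,anime, long_hair, dress, solo, looking_at_viewer"],
)
print(len(jobs))  # 3 checkpoints x 1 prompt x 4 CFG values = 12
```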

Number of Epochs

Earlier I said that doing more epochs on the dataset didn't make much of a difference. That is true for the main subject. But it turns out that, for this kind of training, throwing more epochs at it once you have found the major settings you like can allow more details to appear in the background.

Or, sometimes, it does actually affect the main subject.

Here's an example for 150 images, EMA 28, and epochs 20, 50, and 100. This time I made them separate images so you can flip directly between them if you like. Some differences are subtle.

EMA update step vs epochs

A reminder that EMA slows down the rate of change. So if you increase the "EMA update step" value, you will need to increase total epochs to get an equivalent amount of effect.

Shown here are EMA update step values of 100, 120, and 140, for training Juggernaut on my "anime" style dataset, all with epochs=50.

In other words, the higher the value, the greater the damping effect on the training.
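If the "EMA update step" value behaves like an averaging window of N steps (an assumption about what the setting means; here it is modeled as a standard EMA with `decay = 1 - 1/N`), then the number of steps the EMA needs to catch up with a change grows roughly in proportion to N. That is consistent with larger values needing more epochs. The sketch computes how many steps each window needs to cover 90% of a sudden change.

```python
import math


def steps_to_fraction(window: float, fraction: float = 0.9) -> int:
    """Steps for an EMA with decay = 1 - 1/window to cover `fraction` of a step change.

    After k steps the EMA has moved 1 - decay**k of the way to a new value,
    so we solve decay**k <= 1 - fraction for the smallest integer k.
    """
    decay = 1.0 - 1.0 / window
    return math.ceil(math.log(1.0 - fraction) / math.log(decay))


# Window values matching the EMA update step values compared above.
for window in (100, 120, 140):
    print(window, steps_to_fraction(window))
```

Going from 100 to 140 raises the catch-up time by roughly 40%, about the same ratio as the window itself.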

EMA best utilization at small batch?

A theory I have not proven yet: perhaps EMA gives you the greatest quality benefit at small batch sizes. Theoretical points for this:

I once read someone claiming that small batches give you the most variety of detail in your model.

Similarly, when you are doing rough, large-scale model training, large batch sizes may keep things from going off the rails. But if you aren't... maybe they are less great.

EMA smooths out changes. But so does a large batch size, in a way. So double smoothing may lose detail.

Update: it is actually proven: https://arxiv.org/abs/2307.13813

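TL;DR: when you increase the batch size, the EMA needs to be scaled to match. (The paper's rule raises the EMA decay to the power of the batch-size multiplier, which makes each EMA update larger.)

Orrrr... halve the EMA update step when you double the batch size, perhaps.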
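The linked paper ("How to Scale Your EMA", arXiv 2307.13813) states its rule in terms of the EMA decay (momentum) rather than a step count: when the batch size is multiplied by kappa, the decay rho becomes rho ** kappa (the paper combines this with a learning-rate scaling rule for the optimizer, not shown here). Treating `1 / (1 - decay)` as the EMA's rough averaging window in optimizer steps, the sketch below shows that doubling the batch size roughly halves the window, so the window measured in images seen stays about the same. That lines up with the "halve the EMA update step" guess, assuming OneTrainer's setting acts like a window length, which is not verified here.

```python
def scale_ema_decay(decay: float, batch_multiplier: float) -> float:
    """EMA Scaling Rule: decay -> decay ** kappa when batch size is multiplied by kappa."""
    return decay ** batch_multiplier


def window_in_steps(decay: float) -> float:
    """Rough averaging window of an EMA, in optimizer steps: 1 / (1 - decay)."""
    return 1.0 / (1.0 - decay)


base_decay = 0.99  # illustrative value: a window of ~100 steps at the original batch size
for kappa in (1, 2, 4):
    d = scale_ema_decay(base_decay, kappa)
    print(f"batch x{kappa}: decay {d:.4f}, window ~{window_in_steps(d):.1f} steps")
```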

Potential workflow

Summary of potential workflow:

1. Pick some fixed set of values for optimizer, LR, EMA, and epochs. It doesn't matter exactly what; whatever makes you happy to start with.

2. Do a bunch of runs to make sure your dataset is as good as you want it. Remove images with bad influences.

3. Now change ONLY the learning rate, to see what you think is the "best" value. Fix on your new value for it.

4. Now change ONLY the EMA value, to see what you think is the best value. Fix on your new value for it.

5. Figure out what your best epoch value is.

6. Now maybe do a whole bunch of runs on your "final" model, figure out you screwed up somewhere, and start again back at step 3 with your new test prompts... or potentially step 2, if you need to drastically alter your dataset.
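The middle of that workflow (change only the learning rate, then only EMA, then settle the epoch count) amounts to a coordinate-wise search: vary one setting, keep its best value, move on to the next. A minimal sketch follows. `evaluate` is a hypothetical stand-in for "train, generate test images, and judge them", and the toy scoring function's preferences are arbitrary, not results from this article.

```python
def tune_one_at_a_time(baseline, candidates, order, evaluate):
    """Coordinate-wise search: vary ONE setting at a time, keep the best, move on.

    baseline:   dict of starting settings.
    candidates: dict mapping setting name -> list of values to try.
    order:      which settings to vary, in order (e.g. LR, then EMA, then epochs).
    evaluate:   callable(settings) -> score, higher is better.
    """
    best = dict(baseline)
    for name in order:
        scored = []
        for value in candidates[name]:
            trial = dict(best, **{name: value})
            scored.append((evaluate(trial), value))
        # Fix this setting at its best value before exploring the next one.
        best[name] = max(scored, key=lambda pair: pair[0])[1]
    return best


def toy_score(s):
    # Hypothetical stand-in for human judgment; its preferences are arbitrary.
    return -(abs(s["lr"] - 1e-5) * 1e5 + abs(s["ema_step"] - 100) / 100 + abs(s["epochs"] - 50) / 50)


best = tune_one_at_a_time(
    baseline={"lr": 1e-6, "ema_step": 200, "epochs": 10},
    candidates={"lr": [1e-6, 5e-6, 1e-5], "ema_step": [100, 140, 200], "epochs": [10, 50, 100]},
    order=["lr", "ema_step", "epochs"],
    evaluate=toy_score,
)
print(best)
```

The loop also shows the catch: because each setting is fixed before the next one is explored, interactions between settings can be missed, which is why the last step of the workflow loops back.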

To prove the point of #6, I went back to my larger dataset to give it a try, but with 3x the EMA value, since it is 3x as large. I also tried it with "full tagging" instead of just "1girl,anime" for everything.

For some reason, the results are now superior, whereas earlier in my journey I found the smaller, simply tagged set gave better results.

Smaller dataset on left, larger full-tag set on right.

Do note that while the above used the same random seed, I had to customize the CFG and step count for each model to get the best result from each.

Additional Conclusions

If I have any takeaway from this incomplete process, it would be that, for doing finetunes at least, I wish I had a farm of smaller cards instead of one fast one. So much of this seems like "throw a whole bunch of stuff at the wall and see what sticks".

It would be very nice to have a 4x "NVIDIA Quadro RTX A5000" system, for example.

Or perhaps just a small 4-machine 3090 (or 3080) cluster. Probably a similar purchase cost, but the 4x Quadro system would cost less in electricity to run.

Epilogue

I released the model!

https://civitai.com/models/753912

After comparing EMA update step 100 vs 85, 120, and 140, all for 50 epochs, I noticed that a longer EMA update step also needed more epochs.

So I said the heck with it: what happens if I just run EMA step 100 for 100 epochs?

And I liked the results.

Warning: the training samples generated by OneTrainer actually looked WORSE at epoch 100 than my epoch 50 samples. But I liked the actual model results better.

This may have been largely due to the training sampler running at CFG=7 instead of 5, but... also, there's something a little odd about the OneTrainer sampler system and SDXL. So, once you get close to your desired result, I would recommend judging only by images generated from the finished, saved model from that point on.

XLsd info moved

I have finally moved my XLsd info to its own article:

https://civitai.com/articles/8690

The Elbow

No, this is not about "finding the elbow of the curve". This is about avoiding deformities and other unexpected surprises.

In multiple training runs, I kept getting this in the outputs:

I think this was all due to ONE IMAGE in 130,000 that had a confusing elbow crop in it:

From that one image, the model somehow fixated on "right arms don't have hands, and that's normal". All of which goes to show:

Be super picky about your input data!

Less than 0.001% of the data being bad can still drastically screw up your images, if it is bad in the "right" way.