(sorry, the banner is NOT generated by the model! :D )

Introduction

I am hereby documenting my path through developing the "new" XLsd model, so that the knowledge may not get lost to time.

XLsd is a model with the SDXL VAE, in front of SD1.5 unet, as a drop-in replacement for the original VAE. (they are the exact same architecture!)

If you just drop it in, though, its output looks like this:

So, there is a lot of retraining that needs to be done.

This is currently not a cleaned-up "how to" guide.

This is basically a history documenting my journey, in all its mess.

SD1.5 with XL VAE rework

I'm attempting to retrain SD1.5 with the XL VAE.

(Recap: this doesn't make SD go to 1024x1024 resolution. What it will hopefully do is just give more consistency when handling fine details.)

This task is ALMOST, but not quite, like a "train model from scratch" endeavour.

I'm trying to use this paper as a milestone marker for things. (Especially Appendix A, page 24, Table 5)

For example, they started out with 256x256 training initially (at LR=2e-04 !!), and only did 512x512 for the last 25% or so?

I tried doing that, but... I'm a bit skeptical of results I am seeing. So I am currently just doing 512x512 training only.

THOSE guys did the very first round of 256x256 training with ADAMW cosine, but then after that, always used ADAMW constant. (batch 2048, LR=8e-05)

Then again, they also weaved in use of EMA.

Specifics on XLsd tuning

For my experiments so far, it seems like I need to do at least two separate rounds of training.

.... Documenting my test history ...

I was messing around with my cleaned-up variant of the CC12m dataset. Tried a bunch of combinations:

1. 256x256 training, which got rid of the bad colors and odd... artifacts.. kinda fast.. but then it was a 256x256 model again. I think it regressed things too much, so that when I followed up with 512px training, it wasn't as good as I liked.

2. A buncha 512px training variants: adafactor, adamw, lion, mostly at batchsize 32 (the largest I can fit on a 4090). Either it didn't quite get nice enough for my liking, even after going through a million images of training.... or I put EMA on it, and then it wasn't dropping the transition artifacts fast enough. Even at LR=8e-05.

So I finally gave up on the above, and changed to a cleaner smaller initial dataset, AND a smarter optimizer. I recalled that unlike SDXL finetuning, I can actually fit prodigy optimizer into vram for sd1.5 training!!

Phase 1 plan

Train at 512x512. Get the colors fixed, and basic shapes brought to human standards. NO EMA

The EMA problem shouldnt be too surprising: after all, EMA in one sense prevents large changes.. but what we want is exactly to make large changes.
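To put a number on "EMA prevents large changes", here is a minimal sketch of a plain exponential moving average tracking one weight that needs to jump from 0 to 1. The decay of 0.999 and the step counts are illustrative assumptions, not values from my runs, and a real trainer may add warmup or only update the EMA every N steps.

```python
def ema_track(target: float, decay: float, steps: int, start: float = 0.0) -> float:
    """Return the EMA value after `steps` updates toward a fixed target.

    Plain EMA update: ema = decay * ema + (1 - decay) * weight.
    The raw weight is assumed to already sit at `target` for every step.
    """
    ema = start
    for _ in range(steps):
        ema = decay * ema + (1.0 - decay) * target
    return ema


if __name__ == "__main__":
    # decay=0.999 is an assumed value for illustration only.
    for steps in (100, 1000, 5000):
        print(steps, round(ema_track(1.0, 0.999, steps), 3))
```

With these assumed numbers the averaged weight has covered only about 63% of the jump after 1000 steps, which is the kind of lag that hurts when the whole point of the round is a big move.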

Best results so far for round 1 training: prodigy, bf16,batch=32, no ema

B32 Only fits in VRAM if you enable latent caching for some reason. Then it takes around 19GB.

(Currently training on https://huggingface.co/datasets/opendiffusionai/pexels-photos-janpf since it is all ultra high quality, zero watermarks or any other junk)

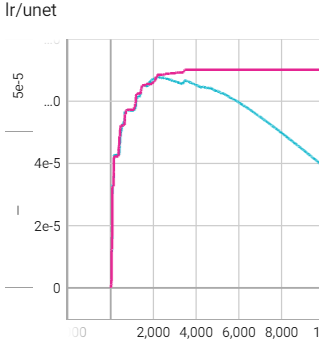

The most interesting thing is that Prodigy is an adaptive optimizer. After 4000 steps, it eventually adapted to 8e-05.. Exactly the same LR that the paper I mentioned earlier, used.

(well, technically, prodigy picked 8.65e-05). However, those guys were using batch size 2048

It is unclear to me whether the LR prodigy chose for this round was primarily due to the dataset, or the tagging, or the FP precision, number of steps (130k images, batch=32,epochs=5), or....

Phase 2 plan

Handle more like a finetune. Add in EMA, for more "prettiness" (i hope)

Note: for THIS round, prodigy eventually settled on LR=1.81e-05

This was for a dataset of 2 million images, batchsize=32, epoch=1

XLsd redo section (where I throw away my prior plans)

Phase 1 redo justification

I have my suspicions that I messed up phase 1 on multiple levels:

Using a bad WD14 tag set

Having my "fallback train type" set to bf16 when it should have maybe been float32?

So, I'm redoing it with Internlm7 NL tags, plus going overboard and training with full float32 (even though saving as bf16)

(PS: float32 didnt work out well first round. Would be too slow to figure out what values work right, so going back to bf16)

Dataset notes

This is the dataset used for phases 1 and 2:

https://huggingface.co/datasets/opendiffusionai/cc12m-cleaned

That dataset is being reduced (cleaned) over time so may get smaller. So information about it given here is as of 2024-11-01

I am using the images pulled via img2dataset so they are stored in subdirectories such as 00000 through 00375

| Nickname | index_range | size |
|----------|-------------|---------|
| set0 | 000 - 099 | 644,000 |
| set1 | 100 - 199 | 644,000 |
| set2 | 200 - 299 | 644,000 |
| set3 | 300 - 374 | 160,000 |

( The irregularity in set3 is because I ran out of disk space )

When running a set, processing time on my 4090 consisted of:

10-15 hours to generate a latent cache

5-12 hours to generate an epoch from the latents, depending on which set, and batch size.

Images are stored on-disk as already resized down to 512 x XYZ

Interesting things of note:

For fp32 (I think? later on I just use bf16 everywhere), I can just barely squeeze batch=8 on my 4090. That turns a 24-hour, 1-epoch run into an estimated 7 hours. Oops, no I can't. It crashed with OOM after 170 steps. Back to batch=4.

Which starts at VRAM=22G, but rises to 23.1G around 200 steps in. Not too bad though: only a 9 hour run.

My smaller batchsize tests resulted in prodigy using lower LR when everything else was the same. So it is indeed doing the "large batch, compensate for LR" thing after all.

But... second rounds, it somehow forgets how to adjust LR. :(

Going to try EMA. The normal training gets rid of the colors fairly fast, but... distorts the human figures horribly. It takes a long time to work around to being close to normal.

Hopefully using EMA will minimize the distortion.

Longer-term LR for the first training set, 130k images at batch=4, is LR=2.81e-05

(or is it?)

LR values (for Phase 1)

(currently 20 warmup steps)

Lion, b32, LRS=Batch, LR=3e-05, EMA100: TOO BIG!!

Lion, b32, LRS=(anything), LR=2e-05 (effective 1.13e-04), EMA100 usable.. until 700 steps. Then it goes all grey

Lion, b32, LRS=both, LR=1e-05 (effective 5.66e-05) , EMA100 ... usable.. pending full results

(It looks like it may converge too early. Need to do a prior run first?)
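The "effective" values quoted in the Lion lines above are consistent with the base LR being multiplied by the square root of the batch size. A minimal sketch of that arithmetic, assuming square-root scaling and no gradient accumulation (the function name is made up for illustration, and this is inferred from the numbers, not read out of the trainer's code):

```python
import math


def effective_lr(base_lr: float, batch_size: int) -> float:
    """Base LR scaled by sqrt(batch size). Assumed rule, inferred from the quoted values."""
    return base_lr * math.sqrt(batch_size)


if __name__ == "__main__":
    for base in (2e-05, 1e-05):
        print(f"LR={base:.0e}, b32 -> effective {effective_lr(base, 32):.2e}")
```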

For Prodigy, no LRS, batchsize=8. It seemed to reach peak value just after 2000 steps. (LR=7e-05)

Combining it with Cosine may be a good idea. With a learning period of 1, cosine vs constant looks like this(6.75e-05 peak):

A Redditor suggested that if I wanted to match prior papers, I might try LR=3.75e-6 for batch=32

That being said, prodigy with batchsize=32, e=4 seems to peak around 5000 steps, LR=8.48e-05

XLsd Phase 1 quality notes

Using prodigy at batchsize=8, it took approximately 1000 steps until the horror that was the initial mashup, was no longer way out of color matching and oddly pixellated.

It took 7000 steps until colors were reasonably "good".

Human figures at stage 1 training, however, remained pretty bad... all through 20,000 steps?

I suspect that when a model is this far out of whack, it is beneficial to keep batch size relatively small, until you get closer to target weights. Because higher step count gets you there faster.

But... waiting for confirmation on a redo with higher batch size.

I suspect that training on the same number of images with larger batch size will give inferior results, even though prodigy will automatically raise the LR because of larger batch size. So, I should probably do 1 run with epoch=4 (to match number of steps) rather than 1 run with the same epoch count (1).
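The "epoch=4 to match number of steps" idea is just step-count arithmetic. A rough sketch, using a 130,000-image dataset purely as an example size and ignoring partial final batches:

```python
def optimizer_steps(num_images: int, batch_size: int, epochs: int) -> int:
    """Approximate number of optimizer steps for a run (partial batches ignored)."""
    return (num_images * epochs) // batch_size


if __name__ == "__main__":
    n = 130_000  # example dataset size, assumed for illustration
    print("b8,  1 epoch :", optimizer_steps(n, 8, 1))
    print("b32, 1 epoch :", optimizer_steps(n, 32, 1))
    print("b32, 4 epochs:", optimizer_steps(n, 32, 4))
```

Going from b8 to b32 cuts the step count to a quarter unless the epoch count goes up by the same factor.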

Early runs suggest batch32 converges to something non-pixellated faster.. but also hits errors faster. Such as the missing right lower arm, at only 500 steps.

It has somewhat improved, for b32 at 5500 steps. But... not great.

Would EMA help? Does "cosine with hard restarts" reset EMA, I wonder? If so, that might help.

(edit: turns out that no, a hard restart does NOT reset the EMA curve)

Adjusted phase 1 plan

For my phase1 base, I am currently going with the results of

prodigy, b8, cosine hard restart (10 cycles) No EMA

across approximately 160,000 images. Final run was with the "set3" dataset from the dataset notes.

XLsd Phase 2 notes

Phase one training goal was a "get the thing to the right color scheme". It was a single 160k image run

Phase two goal is "realign all the concepts that got screwed up with the transition".

It will be a 2 million image training set, split into separate quarter pieces.

(Since I've noticed that the auto-adjusted LR is lower for each successive quarter, it doesn't make sense to me to reuse the LR that was auto-adjusted for the first quarter across all of them)

Adaptive oddities

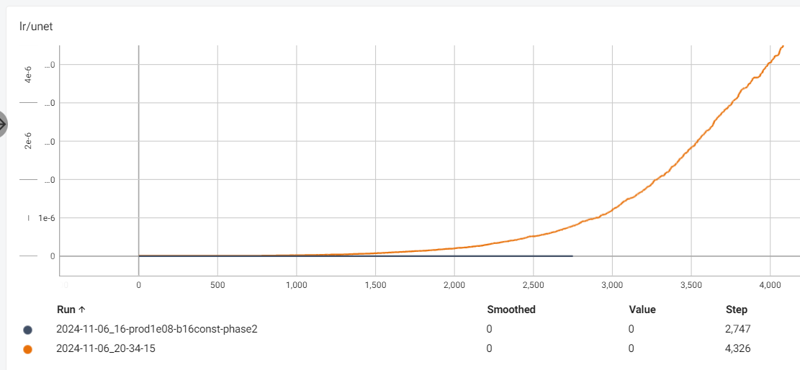

The interesting thing is, when I fed the phase1 model into training for phase 2, prodigy started at its 1e-06 baseline.. and stayed there.

I lowered the baseline to 1e-07. It still stayed there.

This is for batch=8. I think I shall see what happens if I make batch=16, since prodigy tends to auto-scale LR based on batch size, as that is typically required.

(nope, no help)

I am now comparing prodigy to dadapt_lion. Surprisingly,

After 2000 steps with initial at 1e-08, b=8, prodigy did not adapt

After 2000 steps with initial at 2e-08, b=8, dadapt_lion DID just start to adapt.

I am rather shocked by this, since prodigy self-adapted quite nicely in phase 1. So.. why does it stop now?

Potential cause: I discovered that I had weight decay set to 0.01 in the optimizer.. probably left over from some other one.. but it held over when I switched to prodigy. When I hit "restore defaults" for dadapt-lion, it changed the value to 0.0

So at some point, I might try prodigy again.

Random update...

holey smokes its actually generating somewhat human faces in samples now! (sometimes)

This is halfway through phase 2, second quarter. That puts it at around... 500k images in?

Phase 2 Learning rates

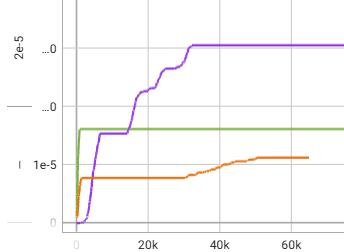

The first quarter batch was trained with dadapt-lion b8. It stabilized at 3e-05 (but had a brief plateau around 1.6e-05) (purple line)

The second quarter batch trained with dadapt-lion b8. It stabilized at 1.6e-05 (green)

Third quarter batch was initially around 7.7e-06, but eventually adjusted to about 1.1e-05 (orange)

To me, that suggests that a lazy approach to doing phase 2 would be to do the whole thing in a single batch in (non adaptive) LION @ 1.6e-05

I have noticed that after setting the optimizer values to straight "default", with weight decay = 0.00, the outputs are mostly monochromatic. Not sure now if I need to redo phase 2_2, or just keep going with weight decay=0.01 again

Phase 3 plan

Since all images in that dataset are cached, AND the adaptive learning rate is starting to converge nearer to the default starting value (1e-06), I may do one single megarun, with increased batchsize. (probably b16)

... This is estimated to take 25 hours.

After 2000 steps, dadapt lion has settled on LR=4.7e-06

.. except it is now going up to 6e-06 and everything is monochromatic.

Seems like things have been increasingly monochromatic since phase2-1.

Time to restart there.

...

I gave up on that, and decided to start again at phase 2 level.

Tried multiple "whole dataset" approaches and didnt like any of them. Too many monochrome samples which suggest overtraining.

Gone back to my earlier strategy of "break into 4 sets, train separately", but this time using cosine hard restarts, to avoid the adaptive training just peaking and staying there.

The problem with cosh, is that if you do just one epoch, then 1/3 of the dataset basically gets squashed out.

So I decided to double my batchsize and double my epochs (2 instead of 1! Wooo!)

That way I can use 3 learning cycles over two epochs, and get a much more fair distribution over each image.
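Here is a small sketch of why cosine hard restarts starve part of a single-epoch run, and why 3 cycles over 2 epochs is fairer. It assumes one image per step, no warmup, and the same image order in every epoch (a shuffled order would behave differently), so treat it as an illustration of the idea rather than what the trainer does internally.

```python
import math


def restart_multiplier(step: int, total_steps: int, cycles: int) -> float:
    """Cosine-with-hard-restarts LR multiplier in [0, 1], no warmup."""
    # Position inside the current cycle, computed with integers to avoid float drift.
    phase = ((step * cycles) % total_steps) / total_steps
    return 0.5 * (1.0 + math.cos(math.pi * phase))


def per_image_weight(num_images: int, epochs: int, cycles: int) -> list:
    """Sum of LR multipliers each image sees, assuming a fixed image order per epoch."""
    total = num_images * epochs
    weights = [0.0] * num_images
    for step in range(total):
        weights[step % num_images] += restart_multiplier(step, total, cycles)
    return weights


if __name__ == "__main__":
    one = per_image_weight(3000, epochs=1, cycles=3)
    two = per_image_weight(3000, epochs=2, cycles=3)
    starved = sum(w < 0.25 for w in one) / len(one)
    print(f"1 epoch, 3 cycles: {starved:.0%} of images trained below 25% of peak LR")
    print(f"2 epochs, 3 cycles: least-favoured image got a total of {min(two):.2f}")
```

Under these assumptions, about a third of the images in a one-epoch run land in the low-LR tail of a cycle. With 3 cycles over 2 epochs, each image's second pass falls half a cycle away from its first, so no image gets both passes in the tail.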

Phase 2 reprise... (batchsize 16)

This time, I'm going to run dadapt-lion b16 to find a suggested LR, then run it with fixed lion.

The trick is... the adaptive things adjust TWICE. One plateau at around 5k steps, and a second around 10k, for batch16.

So, just go with the first plateau value.

set0 first plateau is around 1.6e-05
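Picking the first plateau out of a logged LR curve can be done by eye, but a rough helper is easy to sketch. Everything here is hypothetical: the function name, the window and tolerance values, and the sample log are made up for illustration, not taken from a real run.

```python
def first_plateau(lr_log, window=3, rel_tol=0.02):
    """Return the first LR that has risen above the starting value and then
    stays within rel_tol for `window` consecutive log entries, else None."""
    if not lr_log:
        return None
    baseline = lr_log[0]
    for i in range(len(lr_log) - window + 1):
        ref = lr_log[i]
        if ref <= baseline:
            continue  # still sitting at the initial value, not a real plateau
        if all(abs(v - ref) <= rel_tol * ref for v in lr_log[i:i + window]):
            return ref
    return None


if __name__ == "__main__":
    # Made-up log with two plateaus, shaped like the behaviour described above.
    log = [1e-6, 1e-6, 1e-6, 5e-6, 1.2e-5, 1.6e-5, 1.6e-5, 1.6e-5,
           2.2e-5, 3e-5, 3e-5, 3e-5]
    print(first_plateau(log))
```

A log that never leaves its starting value returns None, which matches the "refuses to adapt" case.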

(I should probably also try a whole nother phase2 with batchsize=8 again)

LION shocking notes

DADAPT LION Usable?

Reading https://github.com/lucidrains/lion-pytorch?tab=readme-ov-file it says that he observed much better results with LION when using Cosine scheduler, not Const.

With that in mind, was DADAPT-LION designed with the assumption that Cosine would be used?

If so, then the ever-increasing LR makes sense.. because it has to fight Cosine damping on the back end!!

And if so.. that means I could potentially just use straight DADAPT-LION with Cosine, and forget about all this hand tuning!

?? !!

Additional dadapt lion notes:

It changes its rate of scaling LR if you change batch size (so it automatically does the "double batch size, double LR" scaling, except it isn't necessarily double)

It does NOT change rate, if you change epoch count

For same dataset, it has plateaus around similar step counts, even with different batchsize

Dont forget weight decay!

There is also mention that LION absolutely has to have weight decay (standard is 0.01)

This is important if you press the OneTrainer defaults button, which will set them to 0.0

Phase 2 retry (#3??) With demo pictures!

Summary: I did a lot of experimenting with dadapt-lion. I found some things i liked, and some things I didnt. TL;DR: while it probably converges if you run it a long, long time... I'm not satisfied with just "run it until it eventually looks nice".

Problems I encountered:

adaptive optimizers seem to overtune when given a LARGE amount of steps.

The implementation I am using doesnt give enough options to restrain it in the way I like

using cosine "annealing" kinda helps... but not enough for my test

Unhappy samples

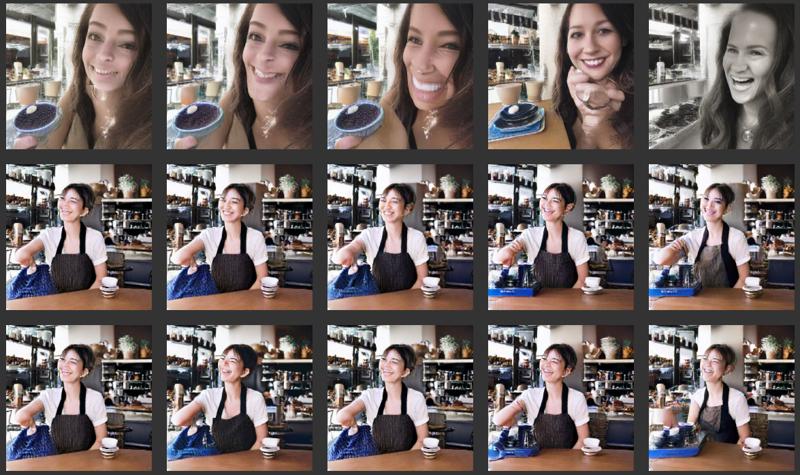

Here are 3 test runs. I believe they are all with DADAPT-LION. The last two are with cosine.

Either they are too varied... or they dont give ENOUGH change

Remembering my somewhat positive experience with smaller finetunes, I really wanted to use EMA. It tended to allow a decently high rate of change, yet still keeping that change somewhat "focused". However, it seemed like EMA wasnt working well with my large training.

Turns out... this problem is largely to do with batch size.

EMA needs batch size linked scaling

https://arxiv.org/abs/2307.13813

Unless your EMA implementation includes some kind of batchsize-related scaling... you have to use it only with batchsize=1, really.
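As I understand the linked paper, the scaling rule is: if the batch size is multiplied by some factor k, the EMA decay should be raised to the power k. A minimal sketch of that, with made-up function names and an assumed decay of 0.999; it only checks the simple case where the weight being averaged is constant.

```python
def scale_ema_decay(decay: float, batch_multiplier: float) -> float:
    """EMA decay adjusted for a batch size that is `batch_multiplier` times larger."""
    return decay ** batch_multiplier


def ema_steps(ema: float, weight: float, decay: float, steps: int) -> float:
    """Apply the plain EMA update `steps` times against a constant weight."""
    for _ in range(steps):
        ema = decay * ema + (1.0 - decay) * weight
    return ema


if __name__ == "__main__":
    decay = 0.999  # assumed batch-size-1 decay, for illustration only
    small = ema_steps(0.0, 1.0, decay, steps=8)                    # 8 steps at batch 1
    large = ema_steps(0.0, 1.0, scale_ema_decay(decay, 8), steps=1)  # 1 step at batch 8
    print(small, large)
```

Both paths see the same number of images and end up at the same averaged value, which is the property an unscaled EMA loses when the batch size goes up.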

Previous experience taught me that the "best" way to use EMA, was to find the largest LR that didnt completely break things, then stuff it down with EMA.

I gave up DADAPT-LION, and turned to regular LION.

Things break for me at 5e-05, so I tried at 4e-05.

It turns out that the OneTrainer implementation is somewhat broken and wont let me adjust the decay rate of the EMA values at present. So instead of just adjusting the slope of the ema decay curve, I had to adjust the limit.

I tried LR values from 4e-05 down to 1e-05, at EMA limit 0.9

Here is a small sampling of runs. As you might see, either it goes too far, or just doesnt improve the face enough.

I also tinkered with 4e-05 with EMA limit of 0.95 and 0.99, but it was too much or too little.

Finally, I gave up on 4e-05, and took LR down to 3e-05

Finally, I am seeing some semblance of what I want:

Enough improvements are being done in a reasonably short amount of steps, yet at the same time, the improvements are being channeled in a similar direction as each other, rather than randomly all over the place.

LION 3e-05, batchsize=1, EMA limit 0.95

(A reminder if you skipped down to this part directly: For many programs, EMA will not work properly unless you have batch size=1. Otherwise, the trainer would need to implement EMA scaling linked to batch size.)

The samples from the above, are 1/4 of the way through "set 1" in my latest phase2 redo.

Each set now takes 18 hours, so this is gonna take a while! But at least we have someplace good to head to now. .. I hope!

One interesting thing of note: batchsize=1 may be slower... but I am doing the (bf16) training, with EMA on gpu, in under 8GB of VRAM.

Update: that aint it, chief

Checking the results after 12+ hours, it doesnt change enough in the mid term. Im going to continue tweaking values.

I have decided to try "boost batch size, reduce EMA step".

So, going with roughly batch * (2^3) and EMA step / (2^3), which gives batch 8, EMA step 12, at LR=3e-05. That gives us initial results of (after 4000 steps -> 32,000 images)

I shall probably run this one to completion, and then finally start on set 2 again....

Which will then require I adjust LR again, typically :-/

The nice thing is, I am back down to a training set taking 7 hours instead of 22.
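The "boost batch size, reduce EMA step" trade keeps the number of images seen between EMA updates roughly constant. A sketch of that arithmetic, assuming the batch-size-1 run used an EMA update step of 100 (that starting value is my assumption here; the helper name is made up):

```python
def ema_update_step(base_step: int, base_batch: int, new_batch: int) -> int:
    """EMA update interval that keeps images-per-EMA-update roughly constant."""
    return max(1, (base_step * base_batch) // new_batch)


if __name__ == "__main__":
    step = ema_update_step(base_step=100, base_batch=1, new_batch=8)  # base_step=100 is assumed
    print("EMA step at batch 8:", step, "-> images per EMA update:", step * 8)
```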

2024/11/14

"day 32 of my incarceration. I may be going insane."

Actually, I've lost track of days, which is why i'm now going to put date stamps in :-/

Im still unhappy with results so far.. but I did learn some things.

I can fit LION, batch=64 on a 4090, at resolution 512x512

To use EMA with batch=64, a likely good update-step value is "2"

2024/11/15

I felt it prudent to restart training back at the beginning of phase 2

After some fiddling, and multi-hour comparisons, I discovered

`LION, batch=64, LR=4e-05, EMA=0.995, EMA step=3`

holey smokes! Teeth? Fingers?!

The only hitch is that I have to stop set0 at 4100/9800 steps. It degrades after that.

Maybe I should have used cosine on top of ema?? Nahhh...

Onto set1! ... after I rerun the training to make an actual save at 4100 steps rather than just a sample image

2024/11/16

The work proceeds..

I ended up being the most happy for set 1, using dadapt-lion!

But for set 2... dadapt refuses to adapt any more, and just stays at its base value. Apparently, you can only use the magic bullet one time.

Here's a sample of the things I've been comparing output from recently:

2024/11/17

I would get depressed if I listed all the things I tried today and did NOT like.

But here's a little ray of sunshine.

I got annoyed that human faces were not improving as much as I hoped, in my "phase 2".

So I'm throwing out the idea of just training on the whole general dataset up front, and instead, I have made a subset specifically for humans. I now have a "woman" subset and a "man" subset.

Initial tests are looking promising. Lets see what turns up.

A little data:

Out of 2 million images in the segment of CC12m I am using, around 30000 images are of "A woman ...", and similarly around 30000 images are of "A man ...".
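Pulling out subsets like these comes down to matching on how each caption starts. A minimal sketch, assuming captions are available as a filename-to-caption mapping; the helper name and the sample data are hypothetical.

```python
def split_by_caption_prefix(captions: dict, prefixes: tuple) -> dict:
    """Group filenames by the first matching caption prefix (case-insensitive)."""
    subsets = {p: [] for p in prefixes}
    for filename, caption in captions.items():
        text = caption.strip().lower()
        for p in prefixes:
            # Trailing space so "A man" does not match "A mannequin ...".
            if text.startswith(p.lower() + " "):
                subsets[p].append(filename)
                break
    return subsets


if __name__ == "__main__":
    sample = {  # made-up captions for illustration
        "0001.jpg": "A woman standing in a field of flowers",
        "0002.jpg": "A man riding a bicycle down a street",
        "0003.jpg": "A mannequin in a shop window",
        "0004.jpg": "Two dogs playing on a beach",
    }
    print(split_by_caption_prefix(sample, ("A woman", "A man")))
```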

2024/11/18

My current base:

My current pain, is that further training is making it worse, so I need to get more delicate or something

2024/11/19

The good news is, I extracted all "A woman ..." images from CC12m (minus my filtering), which leaves me a set of around 400k images

The bad news is... I'm not getting much improved detail out of it, at batchsize=64.

Going to try dropping down to batch=16, with LION LR=1e-06

Initial testing with the new b=16 look very promising.

2024/11/22

AAAAAAAAAAAAAAAAAAAAhhh!!!!!

I cant take it any more, I'm starting from scratch again :(

Some of the results were iffy... some were seemingly not going anywhere...

Plus some of the chain of development was a bit murky.

So I decided to start from scratch again:(

This time though, Im going to try to make it fp32.

Previously, I only found the fp16 version. But the latest version of OneTrainer mentions the "new" huggingface repo for sd1.5, so I'm trying that as a starting point this time.

Plus, I have done a little more cleanup on my local caches of the datasets I used earlier.

(remove more watermarks, junk, grids, and children)

I am initially taking the onetrainer SD1.5 defaults... mostly...

ADAMW, const, 3e-06, batchsize=4

Dataset plan:

Pexels 130k

General case 2 million image subset of CC12m

"A woman" subset of CC12m, (350k+)

"A man" subset of CC12m, (200k+)

FP32 batch sizes and speed

Here are batchsizes I have seen fit onto a 4090, for the SD1.5 model, and my datasets

(With no latent caching)

using FP32

(Reminder that with some optimizers, bf16 lets you get up to b=64, so b=16 limit should not be surprising)

ADAMW 4,16! (b16=2.5s/IT)

ADABELIEF 4,8,16! (b16=2.5s/IT)

ADAFACTOR 16! (b16=2.5s/IT)

LION 16! (b16=2.5s/IT)

DADAPT LION 16! (B16=2.5s/IT, LR=4e-06)

PRODIGY 4! (b4=1s/IT)

(With latent caching, fp32)

LION 16,?32? ( b16=1.8s/IT)

( b32=3.5s/IT but uses 24.0GB, will probably fail?)

(seems to work only if I set stop after 10,000 steps??)

PRODIGY ?8? (b8=1.2s/IT, but will probably overrun memory.

LR=2.7e-06, but adjusted to 4.3e-06 after 2000 steps)

2024/11/24

fyi: I am currently waiting through a 48-hour caching job. Apparently thats how long it takes to cache 2 million images from 512x..... res on my setup.

2024/11/25

Cancelled the prior run. Didnt like how it was going.

Worked my way through LION, fp32, batchsize 32, partial runs at 6e-06, 5e-06, 4e-06, 3e-06.

Now doing a "phase 2" long run at 2e-06.

Intermediate sample:

... and now I find that there are still some major nasty watermarks in the dataset messing up generations. So now I'm going to be doing what I was trying to avoid, and running a semi-decent VLM across the dataset, to weed out watermarks.

InternLM 2b can process the 512x512 images at around 5/second.

Which means it will take around 4-5 DAYS, to process just the 2 million-image subset of CC12m I am working on.
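The estimate is simple throughput arithmetic; a tiny sketch, assuming the 5 images/second rate holds for the whole run:

```python
def processing_days(num_images: int, images_per_second: float) -> float:
    """Wall-clock days needed to process a dataset at a steady rate."""
    return num_images / images_per_second / 86_400  # 86,400 seconds per day


if __name__ == "__main__":
    print(f"{processing_days(2_000_000, 5):.2f} days")
```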

Ugh.

The Rumor of 512

Today I heard a rumour about 512. That is to say, allegedly, the optimum batch size for medium to large training is min(512, datasetsize * 0.2%)

Which I guess is another way of saying, "make sure you have a MINIMUM of 500 steps of training. Other than that, try making your batch size 512"

Which also says that minimum dataset size is 500, but "what you really want" is a minimum of 256,000 images.
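The rumoured rule and the numbers that fall out of it can be checked in a few lines. This is only a sketch of the rule as I heard it, with made-up function names; the floor of 1 for tiny datasets is my own addition.

```python
def rumoured_batch_size(dataset_size: int) -> int:
    """min(512, 0.2% of the dataset), with a floor of 1 (the floor is an assumption)."""
    return max(1, min(512, dataset_size // 500))  # 0.2% == 1/500


def steps_per_epoch(dataset_size: int) -> int:
    """Steps in one epoch at the rumoured batch size, partial batch dropped."""
    return dataset_size // rumoured_batch_size(dataset_size)


if __name__ == "__main__":
    for n in (500, 130_000, 256_000, 2_000_000):
        print(n, rumoured_batch_size(n), steps_per_epoch(n))
```

Below 256,000 images the batch size is the limiting term and every epoch comes out at 500 steps; above that the batch size is capped at 512 and the step count grows instead.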

Hey, good news, I just finished watermark-filtering 640,000 images in the dataset!

Time to try this thing out.

(Although I'm only going up to virtual b128, by way of b16 x accum8)
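A "virtual" batch works because averaging the gradients of several equal-sized micro-batches gives the same gradient as one big batch. A toy pure-Python sketch on a one-parameter regression (nothing to do with the real trainer code; the data is synthetic):

```python
import random


def mse_grad(w: float, batch: list) -> float:
    """Gradient of mean squared error for y ~ w * x over one batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w: float, data: list, micro_batch: int, accum_steps: int) -> float:
    """Average of per-micro-batch gradients, as gradient accumulation would produce."""
    chunks = [data[i * micro_batch:(i + 1) * micro_batch] for i in range(accum_steps)]
    return sum(mse_grad(w, chunk) for chunk in chunks) / accum_steps


if __name__ == "__main__":
    rng = random.Random(0)
    xs = [rng.uniform(-1, 1) for _ in range(128)]
    data = [(x, 3.0 * x + rng.gauss(0, 0.1)) for x in xs]  # synthetic data
    print("one batch of 128:", mse_grad(0.5, data))
    print("b16 x accum8    :", accumulated_grad(0.5, data, 16, 8))
```

The two printed gradients agree up to floating-point rounding. What accumulation does not buy is speed: it still costs 8 forward/backward passes per optimizer step.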

2024/12/01

Working on improving the dataset further. I just realized there are some really bad shots in the CC12m, after I have gotten rid of most of the watermarky stuffs.

Some of that effort is here:

https://huggingface.co/datasets/opendiffusionai/cc12m-a_woman

2024/12/04

I have discovered that virtual batch 256 (b16,accum16) can give really good results... but requires a really large dataset size.

I have large datasets... and I have quality datasets.... but I dont have a large, quality dataset :(

I am half tempted to just run it over my naively cleaned 2million subset of CC12m. But... there are so many watermarks on it, it would take days just to set up the latent cache.

It wouldnt be so bad if the images were tagged that they have a watermark... but if they were tagged, I could just remove them to start with.

And watermark detection over the 2 million would again take days, if not weeks :(

Part 2 article

This has gotten too long, so "part deux" is now at https://civitai.com/articles/9551

---

Long term plans ... are now here

There is a non-zero chance that at some point I will redo the entire exercise from scratch, since I discovered there are certain things in CC12M that are still in the "cleaned" set, and would probably be best not in my new model. This covers a variety of things, including image grids..

And I am now doing this. In FP32 at the moment.

So slow :(

Future future possibilities:

Do a 768x version ?