Lessons Learned from the Anatomica Flux LoRA

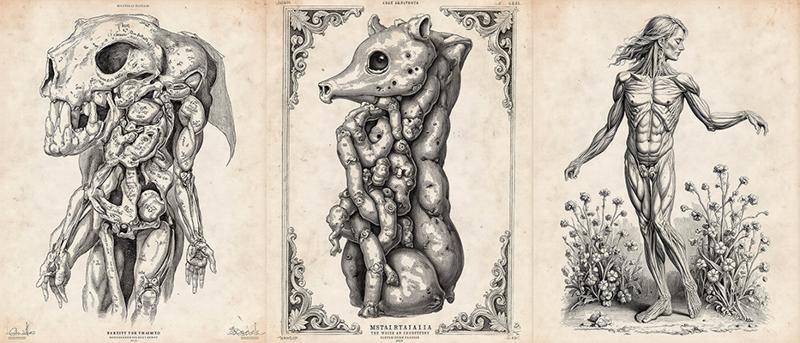

Along with a few other Flux LoRAs, I trained Anatomica (based on anatomy engraving plates) and Anatomica Chalk (based on anatomical chalkboard illustrations) through a total of 11 versions. The styles are distinctive, and they were chosen as an experiment to learn more about LoRA training with Flux. Now, it's time to share everything I learned in hopes that it will help others.

Note: Not every version was an improvement over the last, so I’ve only published 5 of the 11 versions on my model pages. If there is interest, I’ll happily publish the other versions so others can use them to collect data on the variables I changed between versions.

Captionless vs. Captioned

Anatomica v1.0 was trained with no captions other than a trigger word. Despite the issues I’m about to discuss, I think it turned out really well as a LoRA for replicating the engraving style in images that featured anatomical illustrations. Here’s a summary of what I learned from comparing captionless training with the captioned versions.

Captionless training is great for heavy style application but not for generalizability, at least when the subjects of the dataset images are too similar to one another.

Because Anatomica was trained exclusively on anatomical engravings, v1.0 tended to slip anatomical subject matter into generations that had nothing to do with anatomical drawings, or it created a grotesque combination of the prompted subject and the anatomical engravings. See the results for the simple prompts “a girl” and “a woman dancing in a field of flowers” below.

Based on later versions, I hypothesize that captionless training is more successful when your training images show a highly varied set of subjects in a common style. The more variety (as long as the style stays consistent), the better.

Anatomica v1.0 was also the only version trained on just 20 images. The smaller dataset further reduced the variety of subjects and compounded these issues.

The Importance of Editing Captions for Style LoRAs

I don't think I'm saying anything new when I say that captioning makes all the difference when training a model. Caption anything unrelated to the style, character, or concept, and leave out anything you want the model to learn as part of it. In other words, caption the things you want to be able to change. However, captioning, especially manual captioning, is a chore and likely causes more burnout among model creators than anything else. So, I experimented with a few different methods.

Anatomica v3.0 was captioned using a VLM with wd14 tags as input. I ran 30 images through a Danbooru-style tagger, then gave each image to a VLM along with its tags and told it to create a caption using both as input. I used a custom script for this, but there are several available (like this one). I manually edited the tags to remove what I thought should be part of the style. Anatomica v3.0 had a few improvements over the captionless v1.0; they were limited, but I felt it was better overall. It still had a lot of issues with generalizability but produced less distorted imagery when generating subjects outside anatomical illustrations.
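The tag-then-caption step can be sketched roughly as follows. This is not the custom script used for Anatomica: the helper names `filter_tags` and `build_vlm_prompt` and the contents of `STYLE_TAGS` are hypothetical, and the actual tagger and VLM calls are left out. It only illustrates the idea of dropping style tags and handing the remaining tags to the VLM as hints.

```python
from typing import Iterable, List, Set

# Hypothetical style tags to drop; choose these per dataset.
STYLE_TAGS: Set[str] = {"monochrome", "greyscale", "traditional media", "sketch"}


def filter_tags(tags: Iterable[str], style_tags: Set[str]) -> List[str]:
    """Keep only tags that do not describe the style the LoRA should absorb."""
    return [t for t in tags if t.strip().lower() not in style_tags]


def build_vlm_prompt(tags: List[str]) -> str:
    """Combine the remaining tags into an instruction for a VLM captioner."""
    return (
        "Write a short caption for this image. "
        f"Use these tags as hints: {', '.join(tags)}. "
        "Describe only the subject, not the art style or medium."
    )


kept = filter_tags(["monochrome", "skeleton", "ribcage", "greyscale", "no humans"], STYLE_TAGS)
print(build_vlm_prompt(kept))
```

In a real pipeline, the prompt string would be sent to the VLM together with the image, and the VLM's answer saved as the caption file.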

Anatomica v6.0 used the same image dataset, but I ran the earlier captions through an LLM, explaining specifically that I was creating a style LoRA for engraving-style art with anatomical engravings as my dataset images. Fully explaining style LoRA creation and my captioning objectives helped the LLM understand the task much better. It produced a new set of captions that removed more than I had and greatly simplified them (it summarized details and cut the token count by about half).

The improvement between v3.0 and v6.0 was fairly dramatic. There were far fewer generation fails, the style looked better, and v6.0 had fewer issues when applying the style to subjects outside anatomical illustrations.

I looked closely at the differences between the captions and noticed that the v6.0 captions removed references to the style and medium while still describing all the details related to anatomy, medicine, etc. For example, I would edit "An anatomical engraving style drawing of a human heart" to "A drawing of a human heart," while the LLM edited it to simply "a human heart." Essentially, a style LoRA's caption should strictly describe the subject, and that's it. Later, I learned that removing references to emotion and feeling also helps (if they play a part in the style).
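As a crude, rule-based stand-in for what the LLM did, a few regular expressions can strip a leading style/medium phrase and mood words from a caption. This is a hypothetical sketch, not the process used here (that was an LLM); `strip_style` and both word lists are assumptions drawn from the examples in this article and would need extending for a real dataset.

```python
import re

# Hypothetical word lists based on this article's examples; extend them per style.
MEDIUM_PREFIX = re.compile(
    r"^\s*(?:an?|the)\s+"            # leading article
    r"(?:[\w-]+\s+){0,3}?"           # up to three style words, e.g. "anatomical engraving style"
    r"(?:drawing|engraving|illustration|sketch|photo|plate)\s+of\s+",
    re.IGNORECASE,
)
MOOD_WORDS = re.compile(r"\b(?:gloomy|sad|happy)\b,?\s*", re.IGNORECASE)


def strip_style(caption: str) -> str:
    """Remove a leading style/medium phrase and mood words, keeping the subject."""
    text = MEDIUM_PREFIX.sub("", caption, count=1)
    text = MOOD_WORDS.sub("", text)
    # Collapse leftover double spaces and trim stray spaces/commas at the ends.
    return re.sub(r"\s{2,}", " ", text).strip(" ,")


print(strip_style("An anatomical engraving style drawing of a human heart"))
```

With these rules, the heart example reduces to "a human heart," matching the LLM's edit. The prefix is anchored to the start of the caption and limited to three style words so that a phrase like "a drawing of a lung" in the middle of a caption is left alone; an LLM handles far more phrasings than any fixed list.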

In addition, simpler captions are better. Captions in Anatomica v3.0 went into a lot of detail, pointing out what was on the right side, the left side, along the bottom, etc., while Anatomica v6.0 summarized these details without pointing out exact positions or every aspect of the image. I think using simplified, summarized captions helped as well.

My research didn't really go into which captioning engines or scripts work best, so if you are interested in learning more about that, I suggest reviewing mnemic's training guides on captioning differences.

Using Multiple Training Resolutions

I think there is some confusion about training resolutions. Best practices recommend using multiple aspect ratios when training, so most training datasets include images cropped to a variety of ratios, such as 4:3, 3:2, 1:1, 2:3, 3:4, etc., and the Kohya scripts "bucket" these during training. However, you also have the option to train at a resolution like 512, 768, or 1024. When you pick one, the script downsizes all of your images to that resolution (so a 1024x1024 image is essentially reduced to 512x512 for training at 512). As long as your settings are correct, the aspect ratios are preserved; the images are just resized to be smaller. Most Flux LoRA creators agree that there is little difference between training at 512 and 1024 other than training time and system requirements; the quality of the LoRA doesn't seem to be affected.
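To make the downsizing concrete, here is a simplified sketch of how an image might map to a bucket at a given training resolution. It assumes the bucket keeps the aspect ratio, targets a pixel area of resolution², and rounds each side down to a multiple of 64. Kohya's actual bucketing picks from a precomputed bucket list and has more options (including whether small images get upscaled), so treat the hypothetical `bucket_size` as an illustration only.

```python
from typing import Tuple


def bucket_size(width: int, height: int, resolution: int, step: int = 64) -> Tuple[int, int]:
    """Approximate the bucket an image lands in at a given training resolution.

    Simplified assumption: scale the image so its area equals resolution**2
    (keeping the aspect ratio), then round each side down to a multiple of `step`.
    """
    scale = (resolution * resolution / (width * height)) ** 0.5
    w = int(width * scale) // step * step
    h = int(height * scale) // step * step
    return w, h


print(bucket_size(1024, 1024, 512))  # square image trained at 512
print(bucket_size(1536, 1024, 512))  # 3:2 image trained at 512
```

Under these assumptions, the 1024x1024 image becomes 512x512, and a 1536x1024 (3:2) image becomes 576x384, which is still 3:2.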

All the published Anatomica LoRAs from v1.0 to v6.0 were trained at a training resolution of 512, and I had no issues with it. Starting with v3.0, I used five repeats, which seemed to work well. Images were crisp and clear no matter the aspect ratio or size of the generated image. But out of curiosity, I trained v7.0 using multiple training resolutions at the same time. Instead of one dataset trained at 512 for five repeats, I set up training with three datasets: the first at 256 resolution for 1 repeat, the second at 512 resolution for 3 repeats, and the third at 768 resolution for 1 repeat. The captions and images were the same; in fact, I pointed the script to the same folder in all three instances.
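The multi-resolution setup can be expressed in Kohya sd-scripts' TOML dataset config format, with one `[[datasets]]` block per resolution and every subset pointing at the same folder. This is a sketch: `multires_dataset_toml` and the folder path are hypothetical, and the ComfyUI trainer may expose the same settings through its own dataset nodes rather than a file you write by hand.

```python
from typing import Dict


def multires_dataset_toml(image_dir: str, plan: Dict[int, int], caption_ext: str = ".txt") -> str:
    """Build a Kohya sd-scripts style dataset config with one [[datasets]]
    block per training resolution, all reading the same image folder.

    `plan` maps training resolution -> num_repeats.
    """
    lines = ["[general]", "enable_bucket = true", f"caption_extension = '{caption_ext}'", ""]
    for resolution, repeats in plan.items():
        lines += [
            "[[datasets]]",
            f"resolution = {resolution}",
            "",
            "  [[datasets.subsets]]",
            f"  image_dir = '{image_dir}'",  # same folder for every resolution
            f"  num_repeats = {repeats}",
            "",
        ]
    return "\n".join(lines)


print(multires_dataset_toml("/path/to/anatomica", {256: 1, 512: 3, 768: 1}))
```

Passing `{256: 1, 512: 3, 768: 1}` reproduces the v7.0 layout described above. Single-quoted TOML strings are literal, so Windows paths with backslashes don't need escaping. The three blocks add up to five repeats per image, the same total as the earlier single-resolution setup at 512.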

I made a mistake with version 7.0, so just to be safe, I set up training the same way for version 8.0. I also trained Anatomica Chalk v1.0 using the same multiple-resolution method. All of these versions showed one improvement in common: compared to an image generated without the LoRA, they clearly maintained the composition of the generation better than previous versions while still applying the style. I even took a step back and trained Anatomica Chalk v2.0 at a single resolution to confirm that this wasn't a quirk of my dataset or captions. Training at multiple resolutions at once seems to maintain composition and/or improve prompt adherence better than single-resolution training. If I had to theorize, I would guess that this better aligns with how the Flux base model was trained.

Anatomica v9.0 also used multi-resolution training, but on only two resolutions: 512 for 3 repeats and 768 for 2 repeats. It had the same superior compositional qualities as v8.0 but simply looked better. The lines were cleaner, and the contrast was deeper. It wasn't a huge difference, but it was noticeable when comparing two images side by side that were generated with the same prompt and seed. It's possible that the repeat at 256 lowered the quality of the images produced (though not enough to notice when comparing the first multi-resolution training with the one at only 512), or it could have been a fluke. Unfortunately, the Anatomica dataset won't support a training resolution of 1024, so I can't keep testing higher multiple-resolution settings without moving to a new set of images. I may use Anatomica Chalk v3.0 to retest and update the results here.
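One way to compare the single-resolution, v8.0, and v9.0 setups is a rough pixel budget per training image per epoch. This sketch approximates each bucket as resolution² pixels, which is only a proxy: actual compute in a transformer like Flux grows faster than linearly with resolution. The helper `pixels_per_image` is hypothetical.

```python
from typing import Dict


def pixels_per_image(plan: Dict[int, int]) -> int:
    """Approximate pixels processed per training image per epoch.

    `plan` maps training resolution -> num_repeats; each bucket is treated
    as resolution**2 pixels (aspect-ratio buckets keep roughly that area).
    """
    return sum(res * res * repeats for res, repeats in plan.items())


single_512 = {512: 5}          # v3.0 to v6.0
v8 = {256: 1, 512: 3, 768: 1}  # v7.0 and v8.0
v9 = {512: 3, 768: 2}          # v9.0

for name, plan in [("512 x5", single_512), ("v8", v8), ("v9", v9)]:
    ratio = pixels_per_image(plan) / pixels_per_image(single_512)
    print(f"{name}: {sum(plan.values())} repeats, {ratio:.2f}x the pixels of 512 x5")
```

All three plans keep five repeats per image. By this measure, v8.0's mix processes about 1.1 times the pixels of the single-512 setup, while v9.0 shifts more of the budget to 768 and processes about 1.5 times as many.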

Conclusions

From this limited experiment, I can conclude these things about my LoRA training experience.

If your dataset is not diverse, captioning it will produce a better style LoRA than captionless training.

Consider all the qualities that make up the style you are trying to create. Removing only direct references to the style in captions may not be as effective as removing all references to the style, artistic techniques (crosshatching), medium (a photo, a drawing on parchment), and/or emotion or feeling (gloomy, sad, happy).

Training the same dataset at multiple resolutions during the same training may increase prompt adherence outside of the style and help maintain the composition of the base model.

Training at 512 is sufficient for most style LoRAs, but dipping down to 256, even with multiple-resolution training, may slightly lower the quality. Further testing is needed.

Questions and suggestions are welcome. I'll happily share my notes for each version with those who want to discuss these conclusions further or design future experiments. Special thanks to mnemic, who has shared his research and engaged me in many conversations about my ideas and data.

Setup Notes

For anyone curious, here's what I used for training:

Trainer: ComfyUI Flux Trainer (https://github.com/kijai/ComfyUI-FluxTrainer)

Using a modified version of the workflow here: https://civitai.com/models/713258?modelVersionId=871334

Models, VAE, and TE

flux1-dev-fp8.safetensors from https://huggingface.co/Kijai/flux-fp8/tree/main

t5xxl_fp8_e4m3fn.safetensors from https://huggingface.co/comfyanonymous/flux_text_encoders/tree/main

clip_l.safetensors from https://huggingface.co/comfyanonymous/flux_text_encoders/tree/main

ae.safetensors from https://huggingface.co/black-forest-labs/FLUX.1-dev/tree/main
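If you prefer to script the downloads, the `huggingface_hub` library's `hf_hub_download` can fetch the files listed above. This is a sketch that assumes the repositories and filenames are still as listed here; `download_all` is a hypothetical helper, and FLUX.1-dev is a gated repository, so you will likely need to accept its license on Hugging Face and pass an access token.

```python
from typing import List, Optional

# (repo_id, filename) pairs as listed in this article; repo contents may change.
MODEL_FILES = [
    ("Kijai/flux-fp8", "flux1-dev-fp8.safetensors"),
    ("comfyanonymous/flux_text_encoders", "t5xxl_fp8_e4m3fn.safetensors"),
    ("comfyanonymous/flux_text_encoders", "clip_l.safetensors"),
    ("black-forest-labs/FLUX.1-dev", "ae.safetensors"),
]


def download_all(dest: str, token: Optional[str] = None) -> List[str]:
    """Download each listed file into `dest` and return the local paths."""
    # Imported lazily so the file list can be inspected without the package installed.
    from huggingface_hub import hf_hub_download

    return [
        hf_hub_download(repo_id=repo_id, filename=filename, local_dir=dest, token=token)
        for repo_id, filename in MODEL_FILES
    ]
```

After installing the package (`pip install huggingface_hub`), something like `download_all("models", token="hf_...")` would place all four files in a local `models` folder, which you can then move into the matching ComfyUI model directories.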

Hardware: RTX 3060 with 12GB VRAM, i7-11700K, 80GB system RAM