This article is a live record of my experience using Flux.1 on a Mac, particularly with ControlNets and IPAdaptor.

What's the experience of running Flux.1 on Apple Silicon like?

It sucks and if there were better services for running Flux + ControlNets then I'd use them.

As much as possible, I generate on Civitai.

With a GGUF Q4.1 quant, an average generation takes 5-10 minutes on my 32GB M1 Max.

That doubles to as much as 20 mins when using two ControlNets.

ComfyUI

Does ComfyUI Need to Run in CPU Only Mode?

No. I’ve read quite a few posts where folks said they had to run ComfyUI in CPU-only mode using the --cpu flag, but these are either the product of misunderstandings or they relate to bugs that have now been fixed.

Does PyTorch Need to be Downgraded?

No. As of late September 2024, you can generate images using the latest preview (nightly) builds.

This advice appears to come from early August 2024, when the MPS support in the nightly PyTorch builds was apparently broken.

Folks suggested downgrading to `torch==2.3.1 torchaudio==2.3.1 torchvision==0.18.1`, and that advice appears to have stuck.
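If you're not sure which build your ComfyUI environment is actually running, a quick check along these lines can help. This is a minimal sketch: `nightly_date` and `torch_report` are hypothetical helper names, and the only PyTorch calls it relies on are `torch.__version__`, `torch.backends.mps.is_built()` and `torch.backends.mps.is_available()`. Run it with the same Python interpreter that ComfyUI uses.

```python
import importlib.util
import re
from datetime import date


def nightly_date(version):
    """Return the build date embedded in a nightly version string, or None."""
    match = re.search(r"\.dev(\d{4})(\d{2})(\d{2})", version)
    if match is None:
        return None  # a stable release such as "2.3.1" carries no build date
    year, month, day = (int(part) for part in match.groups())
    return date(year, month, day)


def torch_report():
    """Summarise the installed PyTorch build, if there is one."""
    if importlib.util.find_spec("torch") is None:
        return {"installed": False}
    import torch

    return {
        "installed": True,
        "version": str(torch.__version__),
        "nightly_date": nightly_date(str(torch.__version__)),
        "mps_built": torch.backends.mps.is_built(),
        "mps_available": torch.backends.mps.is_available(),
    }


if __name__ == "__main__":
    print(torch_report())
```

For the two nightly builds mentioned in this article, `nightly_date` gives 7 June 2024 and 16 September 2024 respectively, which makes it easy to see whether an installed nightly predates or postdates the early-August reports.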

To illustrate, when I first tried running Flux using a PyTorch nightly build from June 2024 (torch-2.4.0.dev20240607), it generated this (50% actual size):

Updating PyTorch to the latest nightly build (torch-2.6.0.dev20240916), the same workflow generated this:

The Comfy Core SamplerCustomAdvanced Node

In my experience the Comfy Core SamplerCustomAdvanced node exhibits inconsistent behaviour when used with Flux.

The Civitai Quickstart Guide to Flux.1 uses this node in its Flux GGUF text2img Workflow (September 2024). In that workflow, the node is renamed to “Image Preview”.

In my experience, using the SamplerCustomAdvanced node (21.94s/it) adds roughly 5s per iteration compared to using the standard KSampler node (17.09s/it) for the same image.

Worse, I find the SamplerCustomAdvanced node sometimes produces broken images compared to the standard KSampler with the same inputs.

I could be misunderstanding something about the SamplerCustomAdvanced node but as of late September 2024, my recommendation is to avoid it.
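To put the two per-iteration timings above in context, here is a small arithmetic sketch. The two timings are the ones I measured; the step count of 20 is only an assumed example, and `sampler_overhead` is a hypothetical helper name.

```python
KSAMPLER_S_PER_IT = 17.09         # measured with the standard KSampler node
CUSTOM_ADVANCED_S_PER_IT = 21.94  # measured with SamplerCustomAdvanced


def sampler_overhead(steps):
    """Extra time SamplerCustomAdvanced costs over KSampler for `steps` steps."""
    extra_per_it = CUSTOM_ADVANCED_S_PER_IT - KSAMPLER_S_PER_IT
    return {
        "extra_seconds_per_iteration": round(extra_per_it, 2),
        "relative_overhead_percent": round(100 * extra_per_it / KSAMPLER_S_PER_IT, 1),
        "extra_seconds_total": round(extra_per_it * steps, 1),
    }


if __name__ == "__main__":
    # 20 steps is an assumption, not a figure from my workflows.
    print(sampler_overhead(20))
```

At an assumed 20 steps that works out to about 97 extra seconds per image, or roughly 28% more sampling time.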

With KSampler:

With SamplerCustomAdvanced:

GGUF HyperFlux Models

The K_M quant versions of the HyperFlux models appear not to work on Apple Silicon (M1, M2, etc.). I've tried the Q4 and Q5 versions of both the 8-step and the 16-step models.

GGUF: HyperFlux 8-Steps - working, I've tested the Q4.1 version only

GGUF_K: HyperFlux 8-Steps K_M Quants - not working, I've tested the Q4 K_M and Q5 K_M

GGUF: HyperFlux 16-Steps - working, I've tested the Q4.1 version only

GGUF_K: HyperFlux 16-Steps K_M Quants - not working, I've tested the Q4 K_M and Q5 K_M

There are no warnings or errors, just this same output, consistent across all the tested K_M quants:

XLabs-AI ControlNets

XLabs-AI have a curated set of ControlNets (Canny, Depth Map, and HED), IPAdaptor, and a few LoRAs.

I’m not a fan but there is currently (late September 2024) no practical alternative if you want to use ControlNets and Flux.

I don't like it that the XLabsSampler node doesn’t allow the user to control the sampler and scheduler that are used during generation. That makes it unnecessarily hard to reproduce consistent styles between images that use a ControlNet, and those that don’t.

I don't like that the code for their ComfyUI nodes isn’t tagged/versioned, which also makes debugging unnecessarily hard.

To use the XLabs-AI ControlNets and IPAdaptor, you must use their custom x-flux-comfyui nodes.

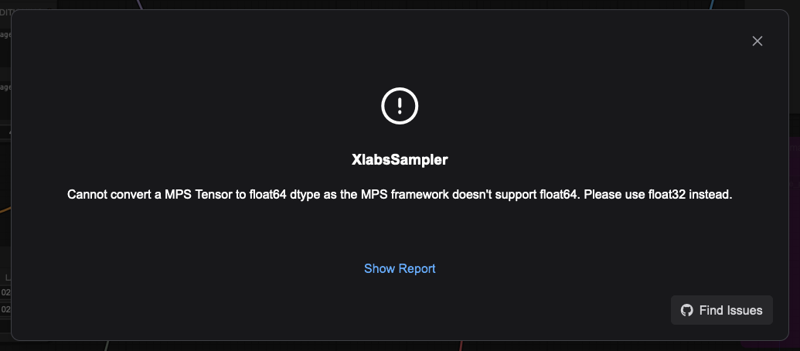

Unfortunately, the current version (late September 2024) of the XLabsSampler node crashes with the following error:

Note: starting ComfyUI with the --cpu flag doesn’t prevent the error; the XLabs-AI code still uses the GPU (in this case, MPS).

It’s a straightforward bug with a straightforward fix. As of late September 2024 there is a PR with a fix, but it is outdated and there is no indication if or when it will be merged.

So, the only solution is to install the nodes and then manually patch the Python code. (An ugly solution, but it works provided you don’t accidentally update the nodes.)

The Manual Patches

math.py

Edit ComfyUI/custom_nodes/x-flux-comfyui/xflux/src/flux/math.py as follows:

Replace this line (late September 2024 — line 17):

    scale = torch.arange(0, dim, 2, dtype=torch.float64, device=pos.device) / dim

With these lines:

    if torch.backends.mps.is_available():
        # MPS supports float32
        scale = torch.arange(0, dim, 2, dtype=torch.float32, device=pos.device) / dim
    else:
        scale = torch.arange(0, dim, 2, dtype=torch.float64, device=pos.device) / dim

nodes.py

Edit ComfyUI/custom_nodes/x-flux-comfyui/nodes.py as follows:

Change these lines (late September 2024, lines 358–361):

    device=mm.get_torch_device()
    if torch.backends.mps.is_available():
        device = torch.device("mps")
    if torch.cuda.is_bf16_supported():

To explicitly set dtype_model = torch.bfloat16 for MPS devices:

    device=mm.get_torch_device()
    if torch.backends.mps.is_available():
        device = torch.device("mps")
        dtype_model = torch.bfloat16
    elif torch.cuda.is_bf16_supported():

Restart ComfyUI and the issue should be resolved.
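Because updating the nodes silently reverts these edits, it can be handy to have a quick way of checking whether the patch is still in place. The following is a minimal sketch, not part of x-flux-comfyui: `math_patch_applied` is a hypothetical helper, the path is the one given above relative to wherever ComfyUI is installed, and the check simply looks for a `scale = torch.arange(...)` line that uses `torch.float32`.

```python
from pathlib import Path

# Path as given in this article; adjust it to wherever your ComfyUI lives.
MATH_PY = Path("ComfyUI/custom_nodes/x-flux-comfyui/xflux/src/flux/math.py")


def math_patch_applied(source):
    """Return True if a `scale = torch.arange(...)` line uses float32."""
    for line in source.splitlines():
        stripped = line.strip()
        if stripped.startswith("scale = torch.arange") and "torch.float32" in stripped:
            return True
    return False


if __name__ == "__main__":
    if MATH_PY.exists():
        applied = math_patch_applied(MATH_PY.read_text(encoding="utf-8"))
        print("math.py patch applied" if applied else "math.py patch missing; re-apply it")
    else:
        print(f"{MATH_PY} not found; check the path")
```

It only inspects math.py, on the assumption that an update which reverts one file reverts both. If the upstream fix is ever merged in a different form, this check would report the patch as missing even though the bug is gone.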

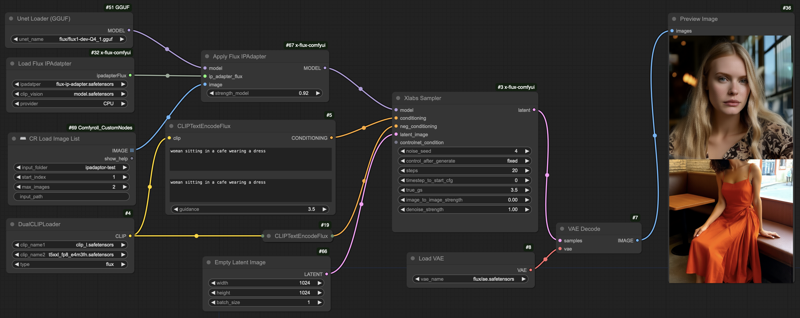

IPAdaptor

The XLabs IPAdaptor implementation is unsophisticated compared with the IPAdaptor implementations for Stable Diffusion.

At the most basic level, it works. You can give it an image and it patches the model with a reasonable approximation of the content of that image.

However, there appear to be four problem areas with the implementation:

You can only provide one image

The model patched using the XLabs Apply Flux IPAdapter node can only be used with the Xlabs Sampler

Images generated with the IPAdapter and Xlabs Sampler need a high number of steps (~ 40-50) in order to produce a good quality image

The majority of the test images I've generated have some unwanted artefacts. (This may be because I'm testing with a GGUF quant and not the full Flux.1 Dev model.)

Multiple Images

As a baseline, with SDXL I'm used to chaining 2-3 IPAdaptors and using attention masking to affect different areas of the generated image.

For example: for a woman sitting in a wheelchair in a tulip field, I'd use 3 separate IPAdaptors, one for the wheelchair, one for the clothes, and one for the tulips. The IPAdaptor for the wheelchair might need a batch of 2-3 images at different angles to ensure that the final image has the correct number of wheels. Similarly, the clothes would typically be a batch of 2-4 images depending on the outfit.

XLabs-AI provide a multiple image workflow. This workflow demonstrates how to pass a batch of images to the Apply Flux IPAdapter node. However, the workflow generates a batch of images equal to the size of the input batch, rather than a single generated image that characterises the input batch.
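The difference between the two behaviours can be sketched with plain Python lists standing in for image embeddings. This is purely an illustration of the idea of pooling a batch into one conditioning; it is not the XLabs-AI code, the function names are hypothetical, and the choice of a simple mean is an assumption rather than a description of how any particular IPAdaptor implementation combines images.

```python
def mean_embedding(embeddings):
    """Average a batch of equal-length vectors into a single vector."""
    if not embeddings:
        raise ValueError("need at least one embedding")
    length = len(embeddings[0])
    if any(len(vec) != length for vec in embeddings):
        raise ValueError("all embeddings must have the same length")
    return [sum(column) / len(embeddings) for column in zip(*embeddings)]


def conditionings_per_image(embeddings):
    """One conditioning per input image, so one generated image per input."""
    return [list(vec) for vec in embeddings]


def conditioning_for_batch(embeddings):
    """One pooled conditioning for the whole batch (simple mean, an assumption)."""
    return [mean_embedding(embeddings)]


if __name__ == "__main__":
    # Toy 3-dimensional "embeddings" for a batch of three reference images.
    batch = [[1.0, 0.0, 2.0], [3.0, 2.0, 0.0], [2.0, 4.0, 1.0]]
    print(len(conditionings_per_image(batch)))  # 3
    print(conditioning_for_batch(batch))        # [[2.0, 2.0, 1.0]]
```

The first function mirrors what the multiple image workflow appears to do (three inputs, three outputs); the second is the shape of result I'd expect when a batch is meant to describe a single subject.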

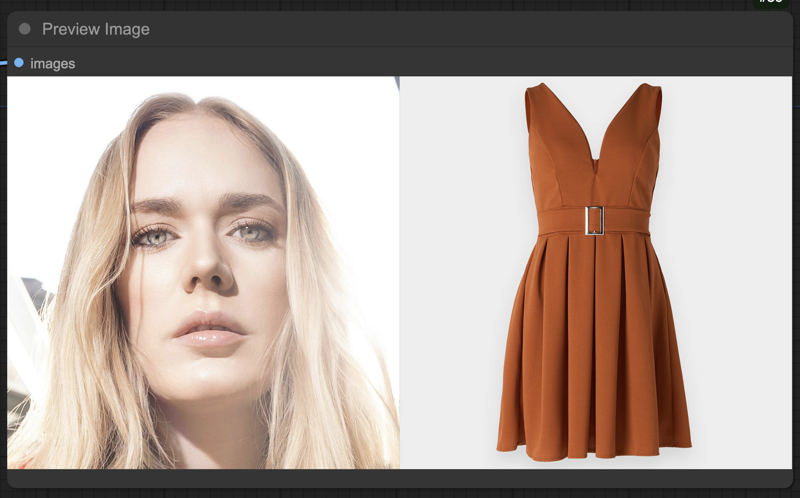

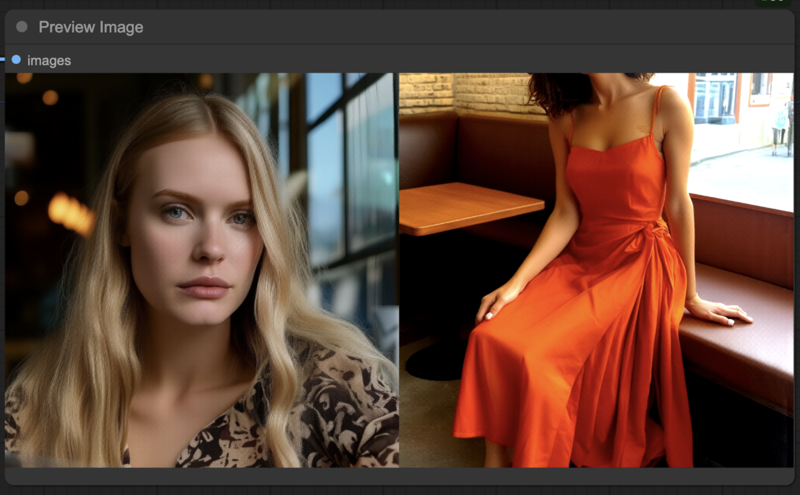

IPAdaptor input images (the headshot is taken from a photo by Janis Dzenis on Unsplash)

Generated Images

Workflow

Chaining IPAdaptors also doesn't work.