(Highly personal aesthetic standards ahead; they may not match yours.)

I think this problem is partly caused by the "Model Collapse" described in this paper:

[2305.17493v2] The Curse of Recursion: Training on Generated Data Makes Models Forget (arxiv.org)

We find that use of model-generated content in training causes irreversible defects in the resulting models, where tails of the original content distribution disappear. We refer to this effect as Model Collapse and show that it can occur in Variational Autoencoders, Gaussian Mixture Models and LLMs. We build theoretical intuition behind the phenomenon and portray its ubiquity amongst all learned generative models. We demonstrate that it has to be taken seriously if we are to sustain the benefits of training from large-scale data scraped from the web. Indeed, the value of data collected about genuine human interactions with systems will be increasingly valuable in the presence of content generated by LLMs in data crawled from the Internet.

When one generation of learned generative models collapses into the next, the later generation is corrupted: it was trained on contaminated data and therefore misperceives the world. Model collapse can be classified as either “early” or “late,” depending on when it occurs. In early model collapse, the model starts to lose information about the tails of the distribution; in late model collapse, the model entangles different modes of the original distribution and converges to a distribution that bears little resemblance to the original, often with very small variance.

Unlike catastrophic forgetting, this process plays out across many models over time: the models do not forget previously learned data, but instead begin to misinterpret what they believe to be real, reinforcing their own beliefs. It is driven by two distinct sources of error (the paper calls them statistical approximation error and functional approximation error) that compound over generations and pull the models away from the original. One of these error mechanisms is crucial to the process because it persists beyond the first generation.
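To make the early/late stages more concrete, here is a minimal Python toy. It is an illustration under a big simplifying assumption, not the paper's code: the "model" is a single 1-D Gaussian, refit by maximum likelihood to samples drawn from the previous generation's fit. Because each generation only sees a finite number of samples (what the paper calls statistical approximation error), every refit slightly misestimates the spread, and over many generations the fitted standard deviation tends to drift toward zero. The names `simulate_collapse` and `fresh_fraction` are hypothetical; `fresh_fraction` is an illustrative knob that replaces part of each generation's training set with samples from the original distribution, in the spirit of feeding "fresh blood" back in.

```python
import random
import statistics


def simulate_collapse(generations, n_samples, fresh_fraction=0.0, seed=0):
    """Hypothetical toy: repeatedly refit a 1-D Gaussian to its own samples.

    fresh_fraction is the share of each generation's training set drawn from
    the original N(0, 1) distribution instead of from the previous model.
    Returns the fitted standard deviation after each generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the original ("human") data distribution
    n_fresh = int(round(n_samples * fresh_fraction))
    history = []
    for _ in range(generations):
        # Training data for this generation: mostly samples from the previous model...
        generated = [rng.gauss(mu, sigma) for _ in range(n_samples - n_fresh)]
        # ...plus an optional share of samples from the original distribution.
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_fresh)]
        data = generated + fresh
        # Maximum-likelihood refit: sample mean and population standard deviation.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        history.append(sigma)
    return history


collapsed = simulate_collapse(500, 20)
mixed = simulate_collapse(500, 20, fresh_fraction=0.5)
print(f"final sigma, generated data only: {collapsed[-1]:.3g}")
print(f"final sigma, 50% original data:   {mixed[-1]:.3g}")
```

With only generated data and small per-generation samples, the final standard deviation typically ends up orders of magnitude below the original value of 1 (the tails vanish first, then the variance shrinks), while mixing original samples back in keeps it from collapsing. A single Gaussian cannot show mode entanglement; it only illustrates the variance loss.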

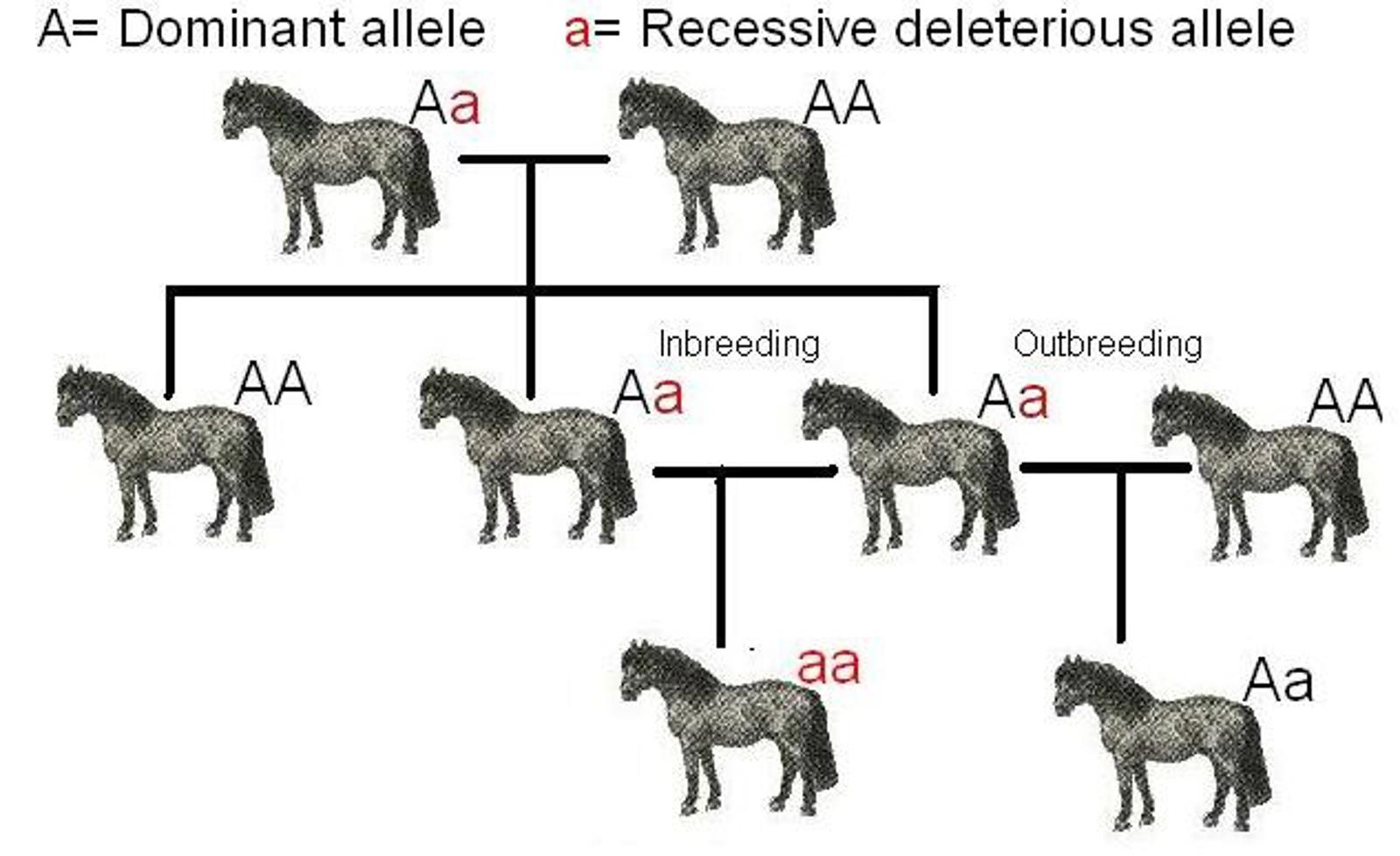

When you merge models, there is an inbreeding-depression problem as well, and sometimes you don't even know how many times a model has already been merged...

And sometimes it's simply that people have seen the style of the well-known checkpoints so many times that their brains no longer react to it, and it just feels boring. By their aesthetic standards, humans naturally steer away from things that lack diversity.

This is why I tend to avoid merging existing models or using AI-generated images in training, and instead keep feeding in new human art from artists with diverse styles. The exception is a lesser-known character with very few images. I have held this idea since the first day I trained a LoRA.

In most cases, I train models on 100% human art, to avoid "Model Collapse", of course. Just my opinion.

A steady supply of fresh blood (100% human art) is essential for training models and for AI art.