Hello all, I am Jason.

Over the past month, I have mainly focused on training OpenKolors v2.2.

It is a huge training process, and I may share more of what I have learned in another article.

In this article, I won't explain much about how to install the environment and related things. It should be easy enough if you are already able to install other trainers. If you have any questions about the installation, you can leave a comment here.

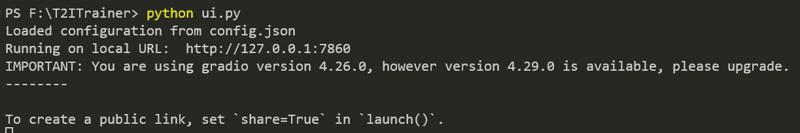

After setting up the environment, run the command python ui.py to start the web service. You can Ctrl+left-click the link in cmd to open it in your browser, or just type http://127.0.0.1:7860/ into the address bar. I suggest bookmarking it for convenience.

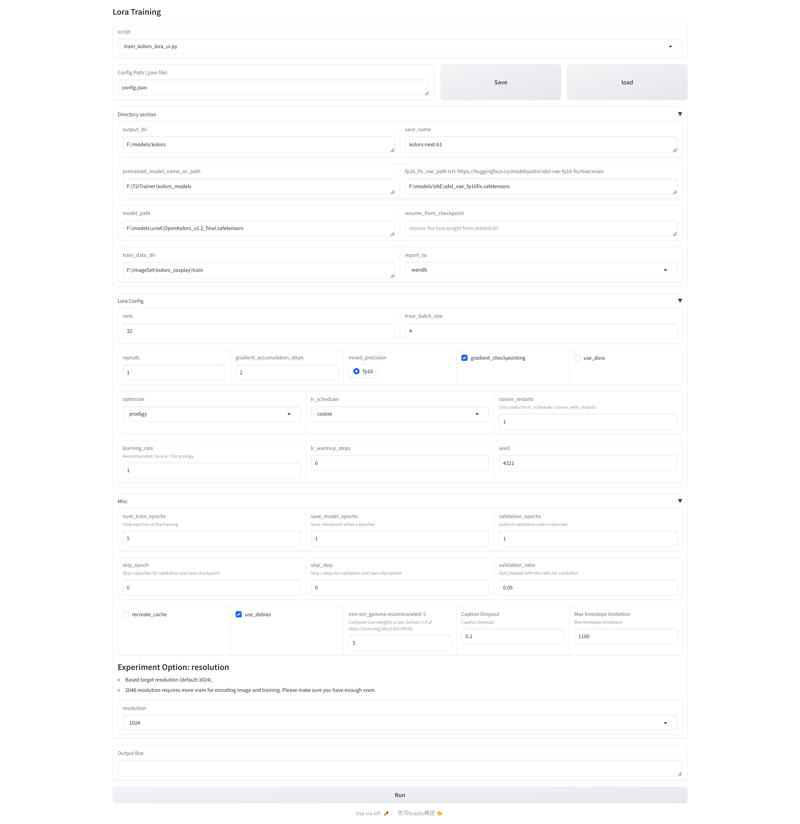

After successfully entering the page, you will see the following parameters.

It is a huge page, and I will go through all of these parameters in this article.

It has 5 sections:

Script

Config Path (.json file)

Directory section

Lora Config

Misc

You can just ignore the Script and Config Path sections.

The Script section selects which script to use, and for now it only has one script, train_kolors_lora_ui.py. This repo has a few more scripts to train SDXL, HunyuanDiT 1.1, PixArt Sigma, and SD3, but I mainly focused on Kolors. So I archived them and removed them from the Script options.

Config Path (.json file) is where your config file is located. The default location is the same as the T2ITrainer folder, which is fine for most people. T2ITrainer automatically loads the last config for convenience. Just keep the default if you don't need to change the location.

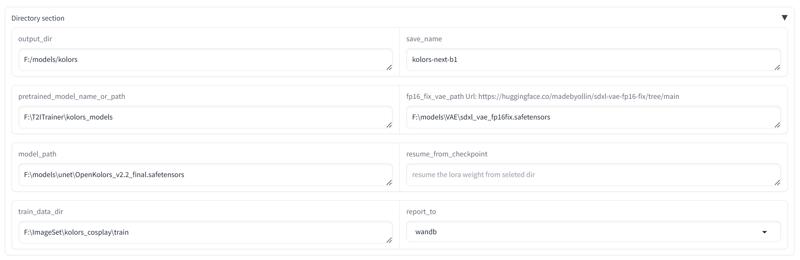

Directory section

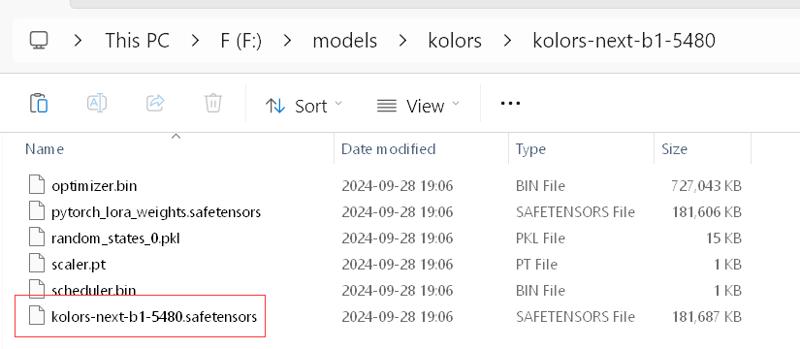

output_dir stores all output content. It might contain multiple saves made during training.

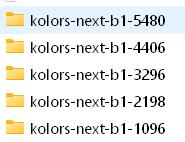

save_name is the prefix for the output dir and LoRA names. In the example above, the output folder is named {save_name}-{steps}.

You will find a LoRA called {save_name}-{steps}.safetensors in that dir.

You can use this LoRA directly in ComfyUI.
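
To make the naming concrete, here is a small sketch that builds the expected LoRA path from output_dir, save_name, and the step count. The helper name lora_output_path is my own for illustration and is not part of T2ITrainer. It only follows the {save_name}-{steps} pattern described above.

```python
from pathlib import Path


def lora_output_path(output_dir: str, save_name: str, steps: int) -> Path:
    """Build the path of a saved LoRA, assuming the {save_name}-{steps} pattern.

    Hypothetical helper: it mirrors the naming described in the article,
    not the trainer's actual source code.
    """
    folder = f"{save_name}-{steps}"
    # The LoRA file sits inside a folder that shares its name.
    return Path(output_dir) / folder / f"{folder}.safetensors"


path = lora_output_path("output", "kolors-next-b1", 5480)
print(path.name)  # kolors-next-b1-5480.safetensors
```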

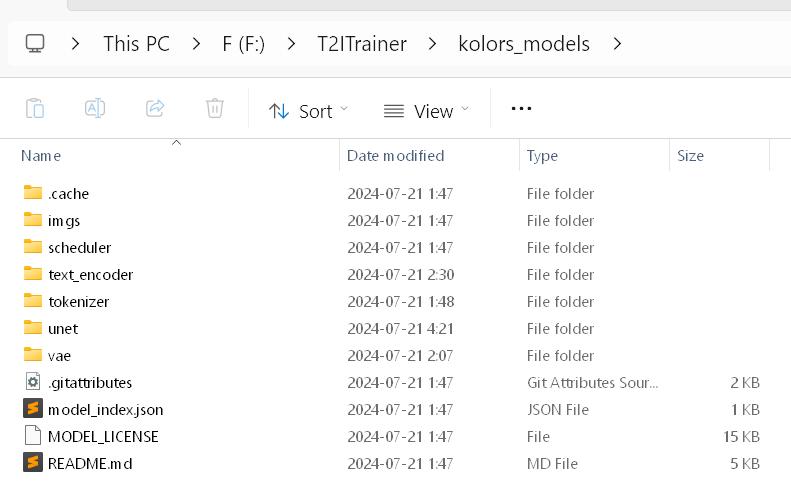

pretrained_model_name_or_path locates your Kolors weights. Currently, it still requires a set of the official Hugging Face weights to set it up. If you are using setup.bat, it is suggested to download the weights and store them in the T2ITrainer folder.

For example:

You need to download this repo from https://huggingface.co/Kwai-Kolors/Kolors

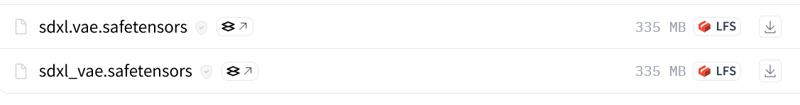

fp16_fix_vae_path Url: https://huggingface.co/madebyollin/sdxl-vae-fp16-fix/tree/main

It is a must. You need to download the separate fp16-fix VAE from the URL above.

You can use sdxl.vae.safetensors or sdxl_vae.safetensors. Both should work.

Just don't leave it blank or use the default VAE from the Kolors repo, which might produce black outputs due to the VAE fp16 issue.

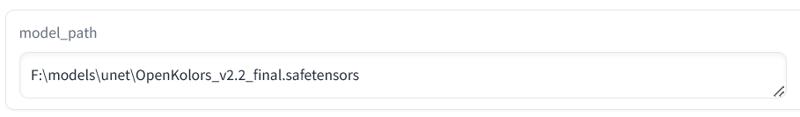

model_path By default, it is blank, and that is fine. But as you can see in the screenshot above, I filled it in.

It means I am using a separate UNet file as the training UNet rather than the original Kolors UNet.

It is good for continued training, but you still need to fill in pretrained_model_name_or_path for the other configs.

resume_from_checkpoint By default, it is blank, and that is fine. It continues the training from a saved state. For example, if you have trained 10 epochs and saved a LoRA at each one, you will have 10 subdirs in your output_dir. You can fill in the last subdir for this option and set the total epochs to 20 to continue the LoRA training. For example, I could fill in something like F:\models\kolors\kolors-next-b1-5480 for this option.

train_data_dir stores all of your training image and text pairs. You can have multiple dirs or subdirs under train_data_dir. It collects all images with the following types: ['.jpg','.jpeg','.png','.webp']. The .txt file with the same name is used as the caption. If an image has no matching .txt, it is treated as having no caption and '' is used as the caption.
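
The pairing rule above can be sketched in a few lines. This is only an illustration of the described behavior, not the trainer's real loader. The function name collect_pairs is hypothetical, and matching extensions case-insensitively is my assumption.

```python
from pathlib import Path

# Image types listed in the article.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".webp"}


def collect_pairs(train_data_dir):
    """Return (image_path, caption) pairs found under train_data_dir.

    Walks all subdirs. An image without a same-named .txt gets '' as caption.
    Case-insensitive extension matching is an assumption.
    """
    pairs = []
    for path in sorted(Path(train_data_dir).rglob("*")):
        if path.is_file() and path.suffix.lower() in IMAGE_EXTS:
            caption_file = path.with_suffix(".txt")
            if caption_file.exists():
                caption = caption_file.read_text(encoding="utf-8").strip()
            else:
                caption = ""  # no caption file: train with an empty caption
            pairs.append((path, caption))
    return pairs
```

Running it on your dataset folder before training is a quick way to spot images that would silently end up with an empty caption.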

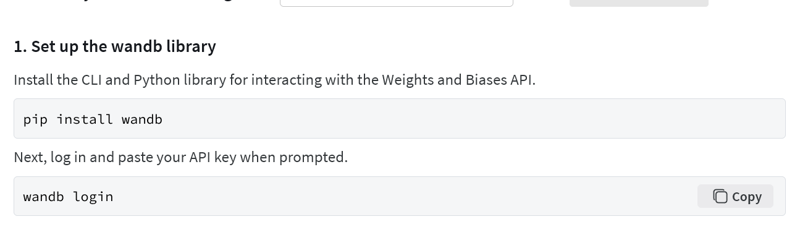

report_to By default, it uses wandb. I strongly recommend registering an account if you don't have one. It stores all training information and displays it as charts on their website, and it is free. It is very easy to set up.

After logging in, you need to copy and paste your API key into cmd. The key is invisible when you paste it, but you can just press Enter.

Lora Config

rank, or dim, is the LoRA rank. Typical values are 4, 8, 16, and 32. I rarely use 64 or above with SDXL or Kolors, but you can still set it. It works just like in other trainers.

train_batch_size is how many images are processed in one batch. A larger value uses more VRAM and causes problems if you set it too high. I usually use 8 at most on my 3090. 4 should be OK with 16GB of VRAM. Under 16GB of VRAM, I would only suggest 1 or 2.

repeats is how many times the dataset is repeated. It is good for small datasets of around 20 or 30 images. It doesn't use a folder prefix to set repeats per folder. The whole dataset is repeated.

gradient_accumulation_steps is similar to train_batch_size but works in another way. train_batch_size processes multiple images at once, while gradient_accumulation_steps sets how many steps are processed before the model is updated. Some people call it a virtual batch size.

bs * gradient_accumulation_steps = total batch size

For larger datasets, like above 100k images, a larger batch size might be more useful. But in most cases, I usually use bs 4 or 8 and gradient_accumulation_steps 2.
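
Here is a small arithmetic sketch of how these settings combine. The function names are my own, and the rounding (a partial last batch still counts) is an assumption. The real trainer may count steps differently, for example because of resolution bucketing.

```python
import math


def total_batch_size(train_batch_size: int, gradient_accumulation_steps: int) -> int:
    """bs * gradient_accumulation_steps = total batch size."""
    return train_batch_size * gradient_accumulation_steps


def updates_per_epoch(num_images: int, repeats: int,
                      train_batch_size: int, gradient_accumulation_steps: int) -> int:
    """Estimate model updates per epoch (assumes partial batches are kept)."""
    samples = num_images * repeats                    # whole dataset is repeated
    batches = math.ceil(samples / train_batch_size)   # forward/backward passes
    return math.ceil(batches / gradient_accumulation_steps)


print(total_batch_size(4, 2))           # 8
print(updates_per_epoch(30, 10, 4, 2))  # 38
```

So a 30-image dataset with repeats 10, bs 4, and gradient_accumulation_steps 2 gives roughly 38 model updates per epoch under these assumptions.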

mixed_precision Only fp16 is available for now. I tried bf16 and the outputs were all messed up, so I removed it from the options.

gradient_checkpointing Default is on, and it saves VRAM.

use_dora Default is off, and I haven't really tested it against normal LoRA.

optimizer Default is adamw. I use Prodigy for all of my training, but Prodigy might need more VRAM.

lr_scheduler Default is constant. I usually use cosine for my training. If that is not enough, I resume from my last checkpoint and train more epochs, which I think is similar to cosine_restarts.

cosine_restarts Only useful when you use the cosine_restarts lr_scheduler. It sets how many times the lr restarts during this training.

learning_rate The starting learning rate of the training. With Prodigy, always set it to 1 and let it find the lr automatically. The lr will climb up and down when you use Prodigy.

lr_warmup_steps How many steps it takes before your lr climbs to the learning rate. I rarely use it with Prodigy.
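
To show how lr_warmup_steps and the cosine scheduler interact, here is a sketch of the common "linear warmup, then cosine decay" shape. It is written in plain Python for illustration. The function lr_at_step is hypothetical and is not taken from the trainer, which may use a library scheduler with slightly different details.

```python
import math


def lr_at_step(step: int, base_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Learning rate at a given step: linear warmup followed by cosine decay."""
    if warmup_steps > 0 and step < warmup_steps:
        # Warmup: climb linearly from 0 up to base_lr.
        return base_lr * step / warmup_steps
    # Cosine decay: go from base_lr down to 0 over the remaining steps.
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * min(1.0, progress)))


for step in (0, 5, 10, 55, 100):
    print(step, lr_at_step(step, base_lr=1e-4, warmup_steps=10, total_steps=100))
```

The lr starts at 0, reaches the configured learning_rate at step 10, and decays back toward 0 by the final step.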

seed The seed you would like to use in your training. You can just set a random number for it, but don't use too large a number.

Misc

num_train_epochs How many epochs you want to train. If you use resume_from_checkpoint, you need a larger number of epochs than in the previous training. For example, if you trained 10 epochs and resume, you need to set 15 or 20. The trainer continues counting steps from your last epoch, using the step number in your last epoch's dir name.
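
As a rough illustration of the resume rules, the sketch below reads the step number from a checkpoint dir name and checks that num_train_epochs is larger than what was already trained. Both helpers are hypothetical and only assume the {save_name}-{steps} dir naming described earlier.

```python
def steps_from_checkpoint_dir(checkpoint_dir: str) -> int:
    """Read the trailing step number from a dir named {save_name}-{steps}."""
    name = checkpoint_dir.rstrip("/\\")  # tolerate a trailing slash
    return int(name.rsplit("-", 1)[1])


def remaining_epochs(num_train_epochs: int, completed_epochs: int) -> int:
    """Epochs still to train after resuming; the total must exceed the old total."""
    if num_train_epochs <= completed_epochs:
        raise ValueError("num_train_epochs must be larger than the epochs already trained")
    return num_train_epochs - completed_epochs


print(steps_from_checkpoint_dir(r"F:\models\kolors\kolors-next-b1-5480"))  # 5480
print(remaining_epochs(20, 10))  # 10
```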

save_model_epochs I always set it to 1. Don't set it larger than your num_train_epochs, or it might not save any output after the training.

validation_epochs I always set it to 1. It controls how often the validation process runs to calculate the validation loss. It is related to validation_ratio: the dataset is split by validation_ratio, and part of it is taken as the validation dataset. Validation runs the same prediction process without updating the model, to see how close the predictions are to the validation set, which the model has not been trained on.

It is quite useful for small but similar datasets and for very large datasets.

skip_epoch By default, it should be 0, which means the save and validation processes always run. If you set it to 1, it skips the first save and validation and runs them starting from the second time.

skip_step Similar to skip_epoch but in steps. I never use it.

validation_ratio What fraction of your training dataset is taken for validation. I usually set it to 0.05 or 0.1.
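
Here is a minimal sketch of what a validation_ratio split looks like. The function name split_dataset, the shuffling, and the fixed seed are my assumptions for illustration. The trainer's own split logic may differ.

```python
import random


def split_dataset(items, validation_ratio: float, seed: int = 0):
    """Split items into (train, validation) lists by validation_ratio."""
    items = list(items)
    # Shuffle with a fixed seed so the split is reproducible (an assumption).
    random.Random(seed).shuffle(items)
    n_val = round(len(items) * validation_ratio)
    return items[n_val:], items[:n_val]


train, val = split_dataset(range(100), validation_ratio=0.1)
print(len(train), len(val))  # 90 10
```

The validation items are held out of training, so their loss shows how well the LoRA handles images it has not been trained on.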

recreate_cache By default, it is off. It forces the caching process to recreate all caches, including all .npkolors and .nplatent files. It is useful when some files are corrupted.

use_debias I always turn it on. It can solve color issues if you have any. It is similar to noise_offset from the early days.

min-snr_gamma Recommended: 5. It is used to minimize the signal at the last diffusion step, which is usually 999 but is 1099 in Kolors. It works well and I always use 5. But for some reason the UI can't load this value from the config, so you need to set it to 5 manually.
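
For reference, this is the commonly used Min-SNR loss weighting for noise-prediction training, written as a tiny sketch. Whether T2ITrainer applies exactly this form is my assumption. The function name min_snr_weight is only for illustration.

```python
def min_snr_weight(snr: float, gamma: float = 5.0) -> float:
    """Min-SNR loss weight for noise prediction: min(snr, gamma) / snr.

    Timesteps with a high signal-to-noise ratio (little noise) are weighted
    down, while timesteps with snr <= gamma keep a weight of 1.
    """
    return min(snr, gamma) / snr


print(min_snr_weight(10.0))  # 0.5
print(min_snr_weight(2.0))   # 1.0
```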

Caption Dropout is quite important. I usually use 0.1. But in some cases, such as training a style LoRA, you can increase it to 0.3 or higher. When a caption meets the dropout condition, an empty string replaces the original caption. This moves the unconditional space closer to your training data. It is useful for small training datasets as well as large and diverse datasets.
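
The dropout rule can be sketched like this. The function name and the per-sample random draw are assumptions for illustration. Only the "replace with an empty string" behavior comes from the description above.

```python
import random


def apply_caption_dropout(caption: str, dropout_rate: float, rng=random) -> str:
    """Return '' with probability dropout_rate, otherwise the original caption."""
    # rng.random() is in [0, 1), so rate 0 never drops and rate 1 always drops.
    return "" if rng.random() < dropout_rate else caption


rng = random.Random(42)
captions = [apply_caption_dropout("a portrait photo", 0.1, rng) for _ in range(1000)]
print(sum(c == "" for c in captions))  # roughly 100 of 1000 captions are dropped
```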

Max timesteps limitation Usually, it is 1000 for most text-to-image models, but Kolors uses 1100 timesteps. Just keep the default if you want a simple LoRA.

Experiment Option: resolution Might be removed in the future. It is just an experiment. Kolors can adapt to large resolutions like 2048. With a 2048-resolution training set, it can output directly at 2048 resolution, but it costs too much compute and is not worth it.

Output Box It outputs the training parameters when the training completes. It is for debugging only, and there is nothing to set.

Thanks for reading all of this.

If you have any questions, feel free to DM me or leave a comment.

I am Jason, see you.

Contact: [email protected]

QQ Group: 866612947

![[Kolors Training] T2ITrainer For Parameters Explanation step by step](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/008b6471-83f4-4425-ba03-f968eb15fb45/width=1320/ComfyUI_temp_plgaf_00001_.jpeg)