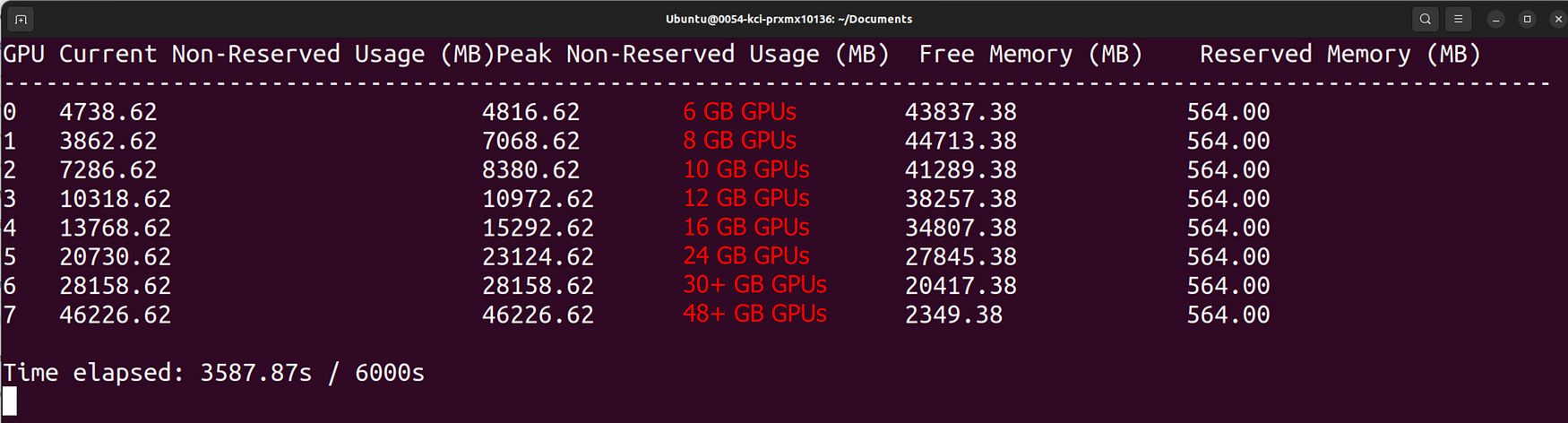

Huge news for Kohya GUI: you can now fully Fine Tune / DreamBooth FLUX Dev on GPUs with as little as 6 GB of VRAM, with no quality loss compared to 48 GB GPUs. Moreover, Fine Tuning yields better results than any LoRA training could

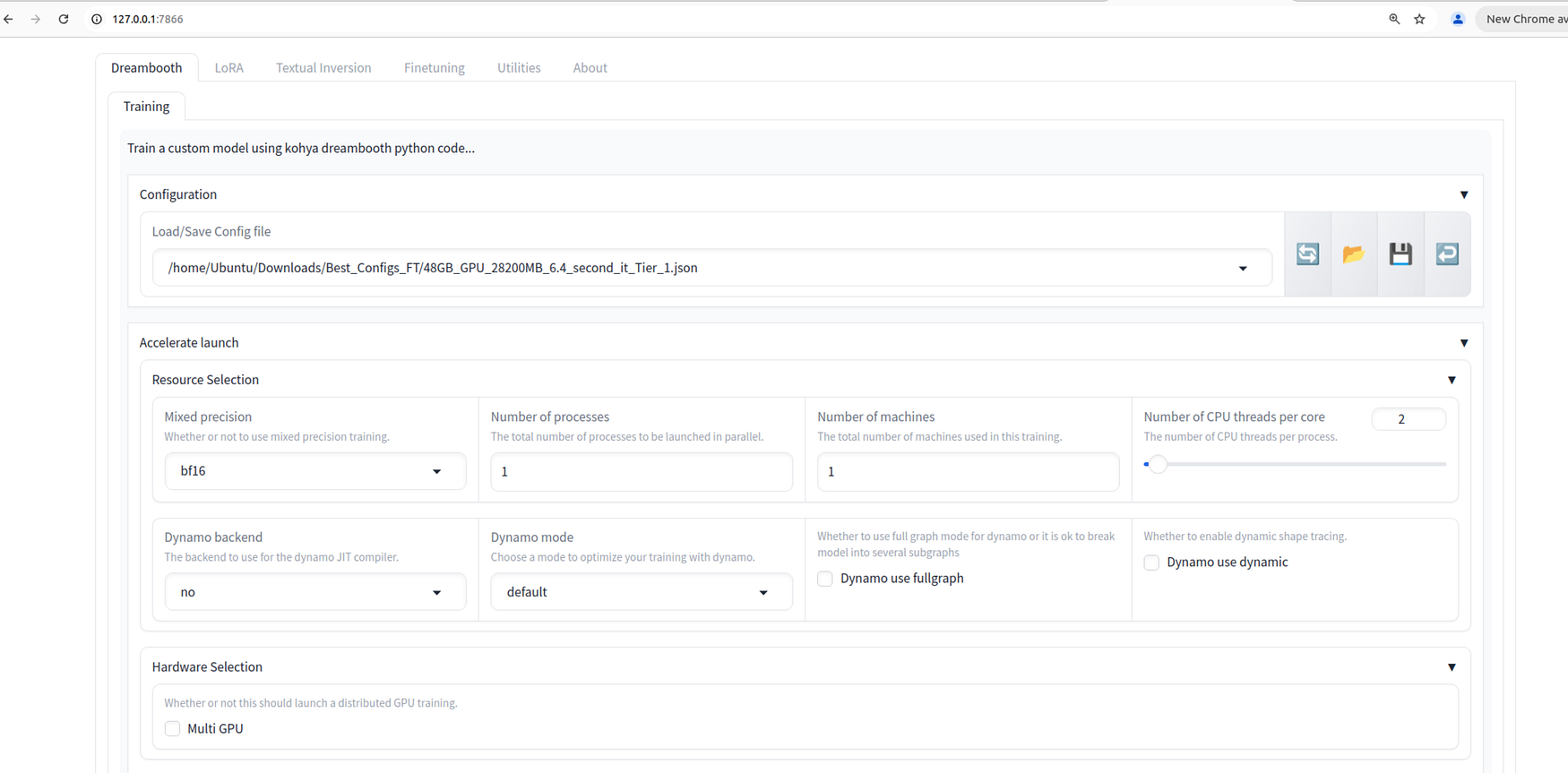

Config Files

I published all configs here: https://www.patreon.com/posts/112099700

Tutorials

A fine tuning tutorial is in production

Windows FLUX LoRA training (fine tuning is the same, only the config changes): https://youtu.be/nySGu12Y05k

Cloud FLUX LoRA training (RunPod and Massed Compute, ultra cheap): https://youtu.be/-uhL2nW7Ddw

LoRA Extraction

Each checkpoint is 23.8 GB, but you can extract a LoRA from it with almost no quality loss. I researched this and published a public article / guide for it as well

The guide for extracting a LoRA from a Fine Tuned checkpoint is here: https://www.patreon.com/posts/112335162
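To give a rough feel for why an extracted LoRA is so much smaller than the full checkpoint, here is a minimal sketch of the arithmetic. It is not taken from the guide above: the layer shape, the rank, and the 16-bit weight assumption are all hypothetical values chosen for illustration, and your real numbers will depend on the extraction settings you use.

```python
# Rough size arithmetic only; nothing here reads or writes a real checkpoint.

def full_param_count(d_out: int, d_in: int) -> int:
    """Parameters in one full weight matrix of shape (d_out, d_in)."""
    return d_out * d_in


def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in a low-rank pair B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Hypothetical layer shape and LoRA rank (assumptions, not from the article).
d_out, d_in, rank = 3072, 3072, 128

full = full_param_count(d_out, d_in)
lora = lora_param_count(d_out, d_in, rank)
print(f"full matrix: {full:,} params")
print(f"rank-{rank} LoRA: {lora:,} params ({full / lora:.1f}x fewer)")

# Assumption: the 23.8 GB checkpoint stores 2 bytes per weight (16-bit)
# and "GB" means 10**9 bytes. Under that assumption:
checkpoint_bytes = 23.8e9
approx_params = checkpoint_bytes / 2
print(f"approx. parameters in checkpoint: {approx_params / 1e9:.1f} billion")
```

The saving grows with the layer size and shrinks as you raise the rank, so a higher extraction rank trades file size for fidelity to the fine tuned weights.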

Info

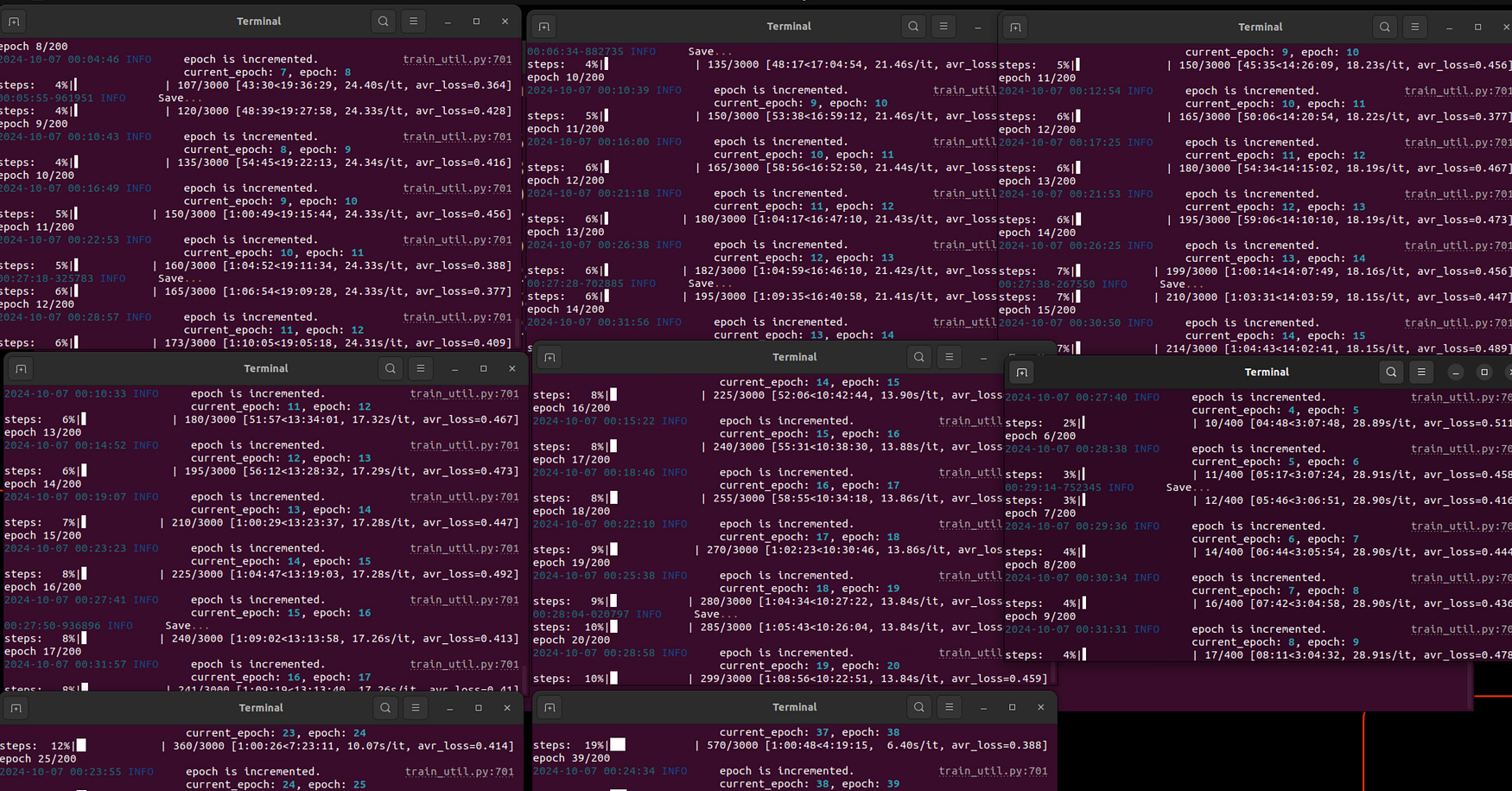

This is just mind-blowing. The recent improvements Kohya made to block swapping are amazing.

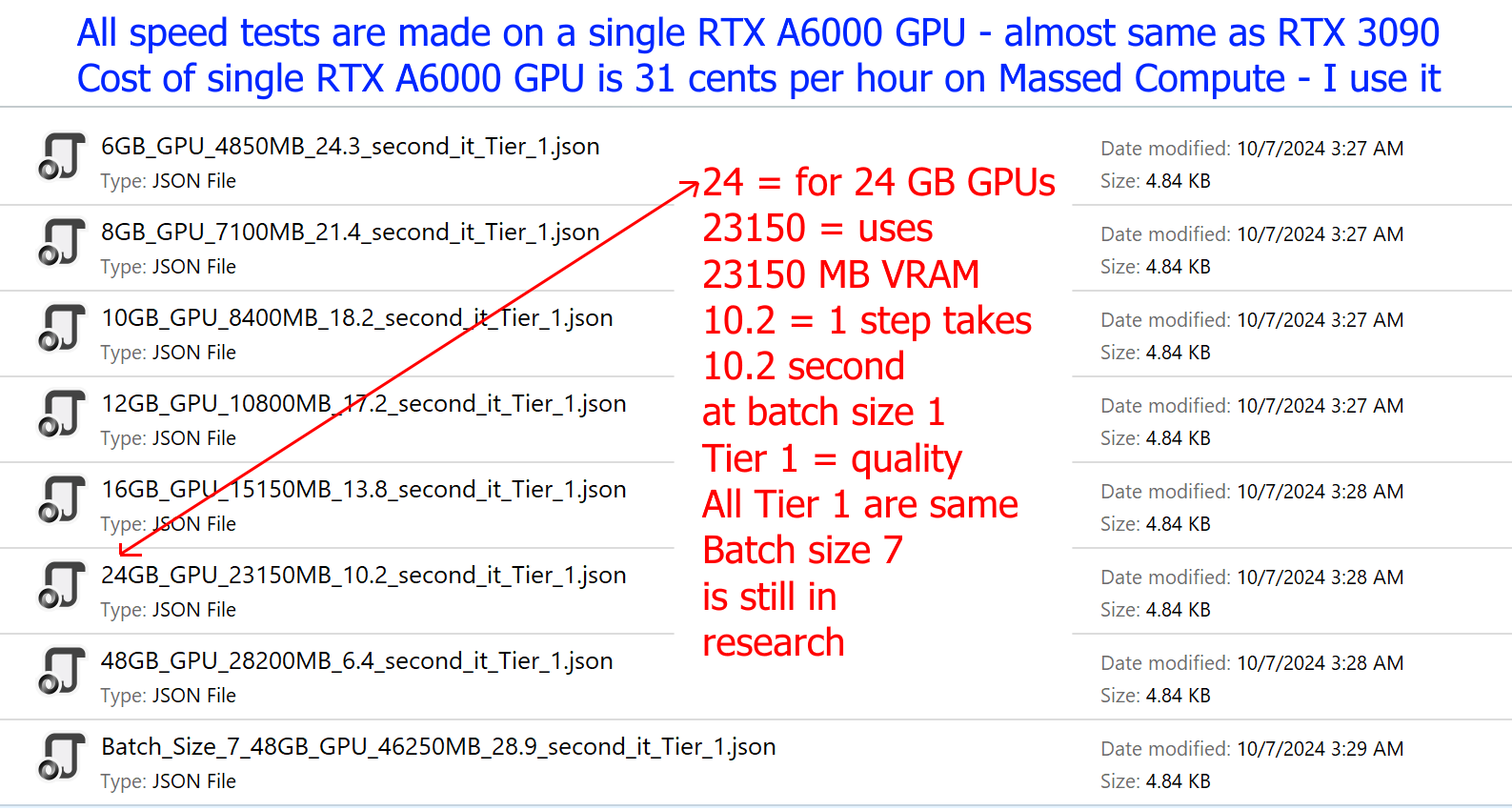

The speeds are also amazing, as you can see in image 2. Of course, those values are based on my researched config and were tested on an RTX A6000, which is almost the same speed as an RTX 3090

Also, all training experiments were run at 1024x1024 px. If you use a lower resolution, training will use less VRAM and run faster
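As a rough guide to how much a lower resolution helps, the sketch below compares the pixel count of a few square resolutions against the 1024x1024 px baseline used in these experiments. The helper name and the example resolutions are my own illustration. Treat the ratio only as an upper bound on the savings: model weights and optimizer state do not shrink with resolution, so actual VRAM and speed will not scale exactly with pixel count.

```python
def relative_pixels(width: int, height: int,
                    base_width: int = 1024, base_height: int = 1024) -> float:
    """Pixel count of (width x height) as a fraction of the baseline resolution."""
    return (width * height) / (base_width * base_height)


# Example resolutions are illustrative, not tested configurations.
for side in (512, 768, 1024):
    share = relative_pixels(side, side)
    print(f"{side}x{side}: {share:.2%} of the 1024x1024 pixel count")
```

For example, 768x768 px has a little over half the pixels of 1024x1024 px, so the per-image workload drops noticeably even though the fixed memory cost of the model stays the same.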

VRAM usage will change according to your own configuration, and the speed likely will as well

Moreover, Fine Tuning / DreamBooth yields better results than any LoRA could

Installers

The Kohya GUI accurate branch and Windows Torch 2.5 installers, along with the test prompts, are shared here: https://www.patreon.com/posts/110879657

Link to the Kohya GUI accurate branch: https://github.com/bmaltais/kohya_ss/tree/sd3-flux.1