Kohya_ss - ResizeLoRA_Walkthrough.

Features:

Model Selection: Users can choose the LoRA model they wish to resize by selecting from the available files or providing a custom path.

Dynamic Method: Offers the dynamic methods sv_ratio, sv_fro, & sv_cumulative, which choose the rank from the model's singular values using one additional parameter.

Precision & Device Options: Users can select the precision (fp16, bf16, or float) & the device (cpu or cuda) for the model resizing process.

Verbose Logging: An option for detailed logging is available to track the progress of the resizing process.

How to Use & Basic Feature breakdown:

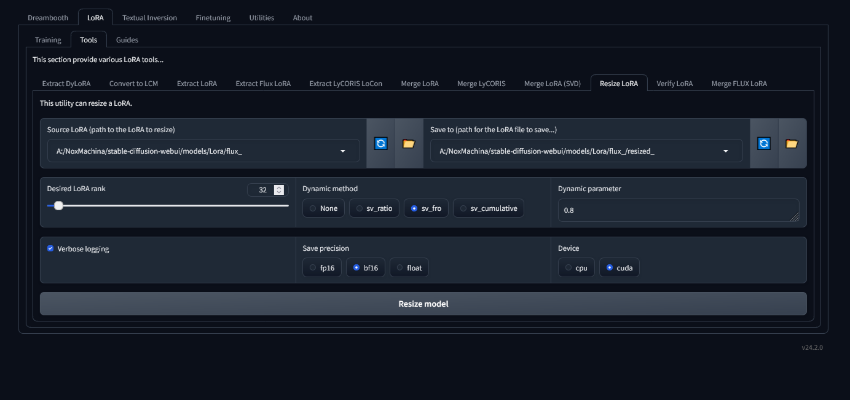

Once you have kohya_ss up & running, navigate to LoRA/Tools/Resize LoRA in the GUI:

Model Selection:

Choose the source LoRA model from the dropdown menu or browse for it using the folder button (📂).

Refresh the list of available models using the refresh button (🔄).

Save Path:

Specify where the resized LoRA model should be saved. You can select an existing path or provide a new file name using the folder button (📂).

If the file doesn't end with .pt or .safetensors, the script will automatically append .safetensors to the file name.
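The extension rule can be sketched in a few lines. The helper name ensure_lora_extension and the example paths are hypothetical; the check itself mirrors the one in the GUI script listed at the end of this walkthrough.

```python
def ensure_lora_extension(save_to: str) -> str:
    """Append .safetensors unless the path already ends in .pt or .safetensors."""
    if not save_to.endswith((".pt", ".safetensors")):
        save_to += ".safetensors"
    return save_to


# Hypothetical paths, for illustration only.
print(ensure_lora_extension("outputs/my_lora_r16"))     # extension gets added
print(ensure_lora_extension("outputs/my_lora_r16.pt"))  # left unchanged
```

Note that any other extension (for example .ckpt) is treated as "no extension", so .safetensors is appended after it.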

New Rank: Use the slider to set the desired rank for the resized LoRA model.

Dynamic Method & Parameters:

Choose a dynamic method (sv_ratio, sv_fro, sv_cumulative, or None) & provide a dynamic parameter if necessary.

Ensure that the parameter values are within the allowed ranges (e.g., for sv_ratio, it must be ≥ 2).
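The range rules above can be written as a small check. This is a minimal sketch: the function name validate_dynamic_param is hypothetical, and rejecting non-numeric input is an addition of this sketch rather than something the GUI script is known to do.

```python
def validate_dynamic_param(dynamic_method: str, dynamic_param) -> bool:
    """Return True when the parameter is acceptable for the chosen method."""
    if dynamic_method == "None":
        return True  # the parameter is ignored when no dynamic method is used
    try:
        value = float(dynamic_param)  # the GUI textbox delivers a string
    except (TypeError, ValueError):
        return False  # assumption of this sketch: non-numeric input is invalid
    if dynamic_method == "sv_ratio":
        return value >= 2
    if dynamic_method in ("sv_fro", "sv_cumulative"):
        return 0 <= value <= 1
    return False  # unknown method name


print(validate_dynamic_param("sv_ratio", "3"))
print(validate_dynamic_param("sv_fro", "1.5"))
```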

Precision & Device:

Select the save precision (fp16, bf16, float) & the device to be used (cpu, cuda).

Verbose Logging: Enable or disable verbose logging to track the detailed process of resizing.

Resize the Model: Once all settings are configured, click the "Resize model" button to start the resizing process. The script will log the execution of the resize command & provide feedback once the resizing is complete.

Logging:

The script uses a custom logging system to track errors, warnings, & progress. The log messages can help diagnose issues such as invalid model paths or incorrect dynamic parameters.

Custom Functions & Logic:

resize_lora(): This function handles the resizing of the LoRA model based on user inputs. It builds the command line for the resize_lora.py script & runs it with subprocess (the full function is listed in the GUI script at the end of this walkthrough).

Dynamic Method Validation: Ensures that dynamic parameters fall within valid ranges depending on the method selected.

File Handling: The script includes helper functions to list models & save paths, with refresh buttons to update the file lists.
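The command-building step performed by resize_lora() can be sketched as a standalone helper. The flag names are the ones used in the GUI script listed at the end of this walkthrough; the function name build_resize_command and all file paths are hypothetical, and the sketch only builds & prints the command instead of running it.

```python
import sys


def build_resize_command(script_path, model, save_to, new_rank,
                         save_precision="fp16", device="cuda",
                         dynamic_method="None", dynamic_param="",
                         verbose=False):
    """Assemble the argument list that would be handed to subprocess.run()."""
    cmd = [
        sys.executable, script_path,
        "--save_precision", save_precision,
        "--save_to", save_to,
        "--model", model,
        "--new_rank", str(new_rank),
        "--device", device or "cuda",  # an empty device falls back to cuda
    ]
    # The dynamic flags are only added when a dynamic method is selected.
    if dynamic_method != "None":
        cmd += ["--dynamic_method", dynamic_method,
                "--dynamic_param", str(dynamic_param)]
    if verbose:
        cmd.append("--verbose")
    return cmd


# Hypothetical paths, for illustration only.
cmd = build_resize_command(
    "sd-scripts/networks/resize_lora.py",
    "outputs/my_lora.safetensors",
    "outputs/my_lora_resized.safetensors",
    new_rank=16,
    dynamic_method="sv_fro",
    dynamic_param=0.9,
    verbose=True,
)
print(" ".join(cmd))
```

Passing the arguments as a list (rather than one shell string) avoids quoting problems with paths that contain spaces.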

Additional Considerations:

If no device is specified, the default device used is cuda.

The script adds .safetensors to the save path if no extension is provided.

The script logs the full command being executed for easy troubleshooting.

You can customize the following in the GUI script:

Change the default device to CPU or CUDA based on your hardware.

Adjust the maximum LoRA rank if your application requires a different range.

Add more precision or device options by modifying the dropdown menus for those fields.

Understanding the Dynamic method & Dynamic parameter functions & how to use them properly.

The Dynamic Method & Dynamic Parameter options of the resize_lora.py script (which is called by the GUI script listed at the end of this walkthrough) provide advanced control over the process of resizing a LoRA model. They allow the user to fine-tune how the resizing is performed by adjusting certain characteristics of the model's rank structure. Below is an in-depth explanation of each, including how to use them correctly.

Dynamic Method:

The Dynamic Method refers to a technique applied during the resizing of the LoRA model, which alters how the model's rank is reduced or adjusted. When resizing a LoRA model, its complexity is changed by modifying the rank, which essentially controls the number of factors the model uses for its adaptations. The Dynamic Method governs how this reduction in complexity occurs by applying different mathematical techniques to the singular value decomposition (SVD) process of the model's internal matrices.

The script supports four options for the Dynamic Method:

None - This is the default behavior where no dynamic method is applied. The rank is reduced based on the new rank specified, but no special techniques are used to preserve or adjust specific parts of the model. Use this option when you just want a straightforward resizing without applying any dynamic adjustments. Dynamic Parameter: Not required for this method.

sv_ratio - This method preserves a portion of the singular values based on a ratio. Singular values are factors in the model's internal structure that represent how important certain features are. The sv_ratio method uses the Dynamic Parameter to control how many of the top singular values are preserved.

Dynamic Parameter - The value must be 2 or greater. It represents the largest allowed ratio between the biggest singular value & the smallest one that is kept. A higher ratio means more values are retained, preserving more of the model's knowledge at the cost of a higher rank & a larger file.

Use sv_ratio when you want to ensure that the most significant features of the model (as defined by their singular values) are preserved during the resizing process.

Example: Setting the parameter to 3 keeps every singular value that is larger than one third of the largest one, so the dominant features aren't discarded.

sv_fro - This method applies a threshold based on the Frobenius norm. The Frobenius norm is a measure of the size or energy of the matrix representing the model's internal knowledge; it equals the square root of the sum of the squared singular values. The Dynamic Parameter controls the proportion of this norm that should be retained.

Dynamic Parameter - The value must be between 0 & 1. A value closer to 1 retains more of the model's "energy" (knowledge), while a value closer to 0 discards more information, resulting in a more aggressive reduction in model complexity.

Use sv_fro when you want to ensure that a specific percentage of the model's overall knowledge (as measured by the Frobenius norm) is preserved. This is useful for maintaining the overall performance of the model during resizing.

Example: Setting the parameter to 0.9 keeps enough singular values to retain 90% of the Frobenius norm in the resized model.

sv_cumulative - This method focuses on cumulative singular value retention. It accumulates the singular values in descending order & retains them until the cumulative sum reaches a certain proportion of the total sum of singular values. The Dynamic Parameter specifies the proportion of the total singular values' sum to retain.

Dynamic Parameter - The value must be between 0 & 1. It represents the cumulative proportion of singular values that should be preserved. A value closer to 1 means more singular values will be kept, thus preserving more of the model's fine-tuned knowledge.

Use sv_cumulative when you want to preserve a certain proportion of the model's learned features. It provides control over how much of the total information encoded by the singular values is retained.

Example: Setting the parameter to 0.8 would retain enough singular values such that their cumulative sum accounts for 80% of the total sum. This retains the most important singular values that account for the majority of the model's capacity.
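To make the three rules concrete, here is a simplified sketch that applies each of them to one made-up list of singular values (sorted largest first). The function names and the spectrum are hypothetical, and this is an illustration of the descriptions above rather than the code from resize_lora.py: the real script may handle edge cases differently and, to my understanding, also caps the result at the New Rank value.

```python
import math


def rank_sv_ratio(sv, ratio):
    """Count singular values larger than (largest / ratio)."""
    threshold = sv[0] / ratio
    return max(1, sum(1 for s in sv if s > threshold))


def rank_sv_fro(sv, target):
    """Smallest k whose top-k values hold `target` of the Frobenius norm."""
    total = math.sqrt(sum(s * s for s in sv))
    running = 0.0
    for k, s in enumerate(sv, start=1):
        running += s * s
        if math.sqrt(running) / total >= target:
            return k
    return len(sv)


def rank_sv_cumulative(sv, target):
    """Smallest k whose top-k values hold `target` of the plain sum."""
    total = sum(sv)
    running = 0.0
    for k, s in enumerate(sv, start=1):
        running += s
        if running / total >= target:
            return k
    return len(sv)


# Made-up spectrum of one layer, sorted in descending order.
spectrum = [10.0, 6.0, 4.0, 3.0, 1.0, 0.5]

print(rank_sv_ratio(spectrum, 3))         # 3: keeps 10, 6, 4 (all > 10/3)
print(rank_sv_fro(spectrum, 0.9))         # 2: top two hold about 91.6% of the norm
print(rank_sv_cumulative(spectrum, 0.8))  # 3: top three hold about 81.6% of the sum
```

On this spectrum the same numeric target gives different ranks for sv_fro and sv_cumulative, because squaring the values gives the largest ones more weight.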

Dynamic Parameter:

The Dynamic Parameter is a numeric value that directly influences how the chosen dynamic method behaves. It determines the threshold or ratio for singular value retention, giving the user control over how much of the model's complexity & knowledge is preserved when reducing its rank.

The Dynamic Parameter behaves differently depending on the selected Dynamic Method:

sv_ratio -

Value Range: Must be 2 or greater. It controls the ratio between the largest & smallest singular values to be kept. A higher ratio retains more singular values, preserving more complexity. Small values like 2, 3, or 4 keep only the singular values that are close in size to the largest one; raise the value further when you want to retain more singular values & keep more of the model's knowledge intact.

sv_fro -

Value Range: Must be between 0 & 1. It controls the percentage of the Frobenius norm that should be retained. A value closer to 1 retains more of the model’s overall information. Use values like 0.9 or 0.8 to retain most of the model's learned knowledge. Lower values like 0.5 would make the resized model more lightweight but at the cost of potentially losing some performance.

sv_cumulative -

Value Range: Must be between 0 & 1. It determines the proportion of the total singular value sum to retain. A value closer to 1 retains more of the model's information. Use values like 0.9 or 0.8 to preserve the majority of the model's important features, while lower values like 0.6 would make the model more compact, potentially losing some details.

How to Use Dynamic Method & Dynamic Parameter Together:

Decide whether you want to apply a dynamic method based on singular value ratios (sv_ratio), Frobenius norm (sv_fro), or cumulative singular values (sv_cumulative), or if you prefer not to apply any dynamic method at all (None).

Setting the Dynamic Parameter:

If you've chosen a dynamic method, adjust the dynamic parameter to control how much of the model's internal information is preserved. The correct range for this parameter depends on the dynamic method:

For sv_ratio, choose a value 2 or greater (e.g., 3 to retain significant singular values).

For sv_fro & sv_cumulative, choose a value between 0 & 1 (e.g., 0.9 to retain 90% of the Frobenius norm or of the singular value sum, respectively).

Example Configurations:

Retaining significant singular values - Use sv_ratio with a parameter of 3. This keeps the most important values while discarding less important ones.

Preserving most of the model’s knowledge - Use sv_fro or sv_cumulative with a parameter of 0.9 to retain 90% of the model’s internal information while resizing.
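The example configurations above could be written down as data and turned into command-line arguments. This is only a sketch: the dictionary keys and the helper dynamic_args are hypothetical, while the two flag names are the ones used by the GUI script listed at the end of this walkthrough.

```python
# Hypothetical preset names mapped to the settings discussed above.
EXAMPLE_CONFIGS = {
    "keep_dominant_values": {"dynamic_method": "sv_ratio", "dynamic_param": 3},
    "keep_90_percent_norm": {"dynamic_method": "sv_fro", "dynamic_param": 0.9},
    "keep_90_percent_sum": {"dynamic_method": "sv_cumulative", "dynamic_param": 0.9},
    "plain_resize": {"dynamic_method": "None", "dynamic_param": None},
}


def dynamic_args(config):
    """Return the extra arguments for one preset (empty for a plain resize)."""
    if config["dynamic_method"] == "None":
        return []
    return [
        "--dynamic_method", config["dynamic_method"],
        "--dynamic_param", str(config["dynamic_param"]),
    ]


for name, config in EXAMPLE_CONFIGS.items():
    print(name, dynamic_args(config))
```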

Practical Recommendations:

If you are concerned about losing important features when resizing a LoRA model, apply one of the dynamic methods.

Use sv_ratio for retaining the largest & most important singular values, which is often useful for models where only the top features matter.

Use sv_fro or sv_cumulative if you want to preserve a significant portion of the model's overall structure & knowledge.

When Not to Use:

If you simply want to resize the model without worrying about the details of how singular values are handled, select None for the dynamic method.

You can apply the Dynamic Method & Dynamic Parameter to control the trade-off between model size & performance, making your resized LoRA models more efficient while retaining as much information as necessary.
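The size side of this trade-off is easy to estimate: a LoRA for a linear layer stores a down matrix (rank x inputs) and an up matrix (outputs x rank), so its parameter count grows linearly with the rank. The sketch below assumes a single 768 x 768 layer purely for illustration and ignores other layer types & the small per-layer alpha values; the function name is hypothetical.

```python
def lora_layer_params(in_features: int, out_features: int, rank: int) -> int:
    """Parameters in one LoRA pair: down (rank x in) plus up (out x rank)."""
    return rank * (in_features + out_features)


# Illustrative layer size; real models mix many layer shapes.
for rank in (128, 32, 8):
    params = lora_layer_params(768, 768, rank)
    kib_fp16 = params * 2 / 1024  # fp16 stores 2 bytes per parameter
    print(f"rank {rank:>3}: {params:>7} parameters, {kib_fp16:.0f} KiB at fp16")
```

Going from rank 128 to rank 8 shrinks this layer's LoRA weights by a factor of 16, which is why a lower rank gives a proportionally smaller file.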

Hope you find this useful as a good addition to your LoRA Training, Merging & now Resizing toolbelt! - NoxMachina

Backend Script / Logic.

resize_lora_gui.py script:

import gradio as gr
import subprocess
import os
import sys
from .common_gui import (
    get_saveasfilename_path,
    get_file_path,
    scriptdir,
    list_files,
    create_refresh_button,
    setup_environment,
)
from .custom_logging import setup_logging

# Set up logging
log = setup_logging()

folder_symbol = "\U0001f4c2"  # 📂
refresh_symbol = "\U0001f504"  # 🔄
save_style_symbol = "\U0001f4be"  # 💾
document_symbol = "\U0001F4C4"  # 📄

PYTHON = sys.executable

def resize_lora(
    model,
    new_rank,
    save_to,
    save_precision,
    device,
    dynamic_method,
    dynamic_param,
    verbose,
):
    # Check that a source model was provided
    if model == "":
        log.info("Invalid model file")
        return

    # Check that the source model exists
    if not os.path.isfile(model):
        log.info("The provided model is not a file")
        return

    if dynamic_method == "sv_ratio":
        if float(dynamic_param) < 2:
            log.info(
                f"Dynamic parameter for {dynamic_method} need to be 2 or greater..."
            )
            return

    if dynamic_method == "sv_fro" or dynamic_method == "sv_cumulative":
        if float(dynamic_param) < 0 or float(dynamic_param) > 1:
            log.info(
                f"Dynamic parameter for {dynamic_method} need to be between 0 and 1..."
            )
            return

    # Check if save_to ends with one of the defined extensions. If not, add .safetensors.
    if not save_to.endswith((".pt", ".safetensors")):
        save_to += ".safetensors"

    if device == "":
        device = "cuda"

    run_cmd = [
        rf"{PYTHON}",
        rf"{scriptdir}/sd-scripts/networks/resize_lora.py",
        "--save_precision",
        save_precision,
        "--save_to",
        rf"{save_to}",
        "--model",
        rf"{model}",
        "--new_rank",
        str(new_rank),
        "--device",
        device,
    ]

    # Conditional checks for dynamic parameters
    if dynamic_method != "None":
        run_cmd.append("--dynamic_method")
        run_cmd.append(dynamic_method)
        run_cmd.append("--dynamic_param")
        run_cmd.append(str(dynamic_param))

    # Check for verbosity
    if verbose:
        run_cmd.append("--verbose")

    env = setup_environment()

    # Reconstruct the safe command string for display
    command_to_run = " ".join(run_cmd)
    log.info(f"Executing command: {command_to_run}")

    # Run the command in the sd-scripts folder context
    subprocess.run(run_cmd, env=env)

    log.info("Done resizing...")

###
# Gradio UI
###
def gradio_resize_lora_tab(
    headless=False,
):
    current_model_dir = os.path.join(scriptdir, "outputs")
    current_save_dir = os.path.join(scriptdir, "outputs")

    def list_models(path):
        nonlocal current_model_dir
        current_model_dir = path
        return list(list_files(path, exts=[".ckpt", ".safetensors"], all=True))

    def list_save_to(path):
        nonlocal current_save_dir
        current_save_dir = path
        return list(list_files(path, exts=[".pt", ".safetensors"], all=True))

    with gr.Tab("Resize LoRA"):
        gr.Markdown("This utility can resize a LoRA.")

        lora_ext = gr.Textbox(value="*.safetensors *.pt", visible=False)
        lora_ext_name = gr.Textbox(value="LoRA model types", visible=False)

        with gr.Group(), gr.Row():
            model = gr.Dropdown(
                label="Source LoRA (path to the LoRA to resize)",
                interactive=True,
                choices=[""] + list_models(current_model_dir),
                value="",
                allow_custom_value=True,
            )
            create_refresh_button(
                model,
                lambda: None,
                lambda: {"choices": list_models(current_model_dir)},
                "open_folder_small",
            )
            button_lora_a_model_file = gr.Button(
                folder_symbol,
                elem_id="open_folder_small",
                elem_classes=["tool"],
                visible=(not headless),
            )
            button_lora_a_model_file.click(
                get_file_path,
                inputs=[model, lora_ext, lora_ext_name],
                outputs=model,
                show_progress=False,
            )
            save_to = gr.Dropdown(
                label="Save to (path for the LoRA file to save...)",
                interactive=True,
                choices=[""] + list_save_to(current_save_dir),
                value="",
                allow_custom_value=True,
            )
            create_refresh_button(
                save_to,
                lambda: None,
                lambda: {"choices": list_save_to(current_save_dir)},
                "open_folder_small",
            )
            button_save_to = gr.Button(
                folder_symbol,
                elem_id="open_folder_small",
                elem_classes=["tool"],
                visible=(not headless),
            )
            button_save_to.click(
                get_saveasfilename_path,
                inputs=[save_to, lora_ext, lora_ext_name],
                outputs=save_to,
                show_progress=False,
            )
            model.change(
                fn=lambda path: gr.Dropdown(choices=[""] + list_models(path)),
                inputs=model,
                outputs=model,
                show_progress=False,
            )
            save_to.change(
                fn=lambda path: gr.Dropdown(choices=[""] + list_save_to(path)),
                inputs=save_to,
                outputs=save_to,
                show_progress=False,
            )
        with gr.Row():
            new_rank = gr.Slider(
                label="Desired LoRA rank",
                minimum=1,
                maximum=1024,
                step=1,
                value=4,
                interactive=True,
            )
            dynamic_method = gr.Radio(
                choices=["None", "sv_ratio", "sv_fro", "sv_cumulative"],
                value="sv_fro",
                label="Dynamic method",
                interactive=True,
            )
            dynamic_param = gr.Textbox(
                label="Dynamic parameter",
                value="0.9",
                interactive=True,
                placeholder="Value for the dynamic method selected.",
            )
        with gr.Row():
            verbose = gr.Checkbox(label="Verbose logging", value=True)
            save_precision = gr.Radio(
                label="Save precision",
                choices=["fp16", "bf16", "float"],
                value="fp16",
                interactive=True,
            )
            device = gr.Radio(
                label="Device",
                choices=[
                    "cpu",
                    "cuda",
                ],
                value="cuda",
                interactive=True,
            )

        convert_button = gr.Button("Resize model")

        convert_button.click(
            resize_lora,
            inputs=[
                model,
                new_rank,
                save_to,
                save_precision,
                device,
                dynamic_method,
                dynamic_param,
                verbose,
            ],
            show_progress=False,
        )