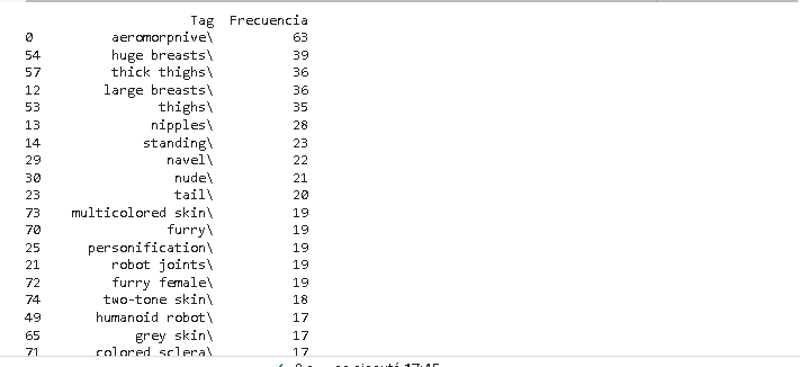

The first program lets you view the training tags stored in any .safetensors file, sort them by frequency, and display the most frequent ones, which makes it easy to spot the activation tag.

    import re

    import pandas as pd


    def process_file(file, encoding, tag_count):
        # Read the whole file as text
        with open(file, 'r', encoding=encoding) as f:
            tag_content = f.read()

        # Search for the content between the quotes of the "ss_tag_frequency" tag
        match = re.search(r'"ss_tag_frequency":"({.+?})"', tag_content)
        if match is None:
            print("The 'ss_tag_frequency' tag was not found in the file.")
            return
        tag_content = match.group(1)

        # Extract the tag-frequency pairs using regular expressions
        pairs = re.findall(r'"([^"]+)": (\d+)', tag_content)
        if not pairs:
            print("No tag-frequency pairs were found in 'ss_tag_frequency'.")
            return

        # Create a list of dictionaries with the tag data
        # (inner quotes are escaped in the raw file, so drop any trailing backslash from the tag)
        data_list = [{'Tag': tag.rstrip('\\'), 'Frequency': int(frequency)} for tag, frequency in pairs]

        # Create a DataFrame from the list of dictionaries
        df = pd.DataFrame(data_list)

        # Sort the tags by frequency in descending order
        df = df.sort_values(by='Frequency', ascending=False)

        # Display the first "tag_count" complete tags and their frequencies
        pd.set_option('display.max_rows', tag_count)
        print(df.head(tag_count))


    # Replace this path with the correct path to your file
    file_name = '/content/drive/MyDrive/Dataset/aeromorphAlo-03.safetensors'

    # 'latin-1' can decode any byte, so it can read a binary file as text; 'utf-8' would fail here
    encoding = 'latin-1'

    # Define the number of tags to display by replacing 50 with the desired value
    tag_count = 50 + 1

    process_file(file_name, encoding, tag_count)
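As an alternative to searching the raw text with regular expressions, the metadata can also be read from the file header directly. The sketch below assumes the standard .safetensors layout (an 8-byte little-endian header length followed by a JSON header with an optional `__metadata__` entry) and assumes that `ss_tag_frequency` holds a JSON string of the form `{folder: {tag: count}}`. The function names `read_safetensors_metadata` and `tag_frequencies` are illustrative, not part of the original programs.

```python
import json
import struct
from collections import Counter


def read_safetensors_metadata(path):
    """Return the __metadata__ dict from a .safetensors header (empty if absent)."""
    with open(path, 'rb') as f:
        # The first 8 bytes hold the header length as a little-endian unsigned 64-bit integer
        (header_len,) = struct.unpack('<Q', f.read(8))
        # Only the header is read, so the tensor data is never loaded into memory
        header = json.loads(f.read(header_len).decode('utf-8'))
    return header.get('__metadata__', {})


def tag_frequencies(metadata):
    """Merge the per-folder tag counts stored under ss_tag_frequency (assumed layout)."""
    counts = Counter()
    raw = metadata.get('ss_tag_frequency')
    if raw is None:
        return counts
    # The value is itself a JSON string: {folder_name: {tag: count, ...}, ...}
    for folder_tags in json.loads(raw).values():
        counts.update(folder_tags)
    return counts


# Usage (replace the path with your own file):
# metadata = read_safetensors_metadata('/path/to/model.safetensors')
# for tag, count in tag_frequencies(metadata).most_common(50):
#     print(count, tag)
```

Because only the header is read, this approach should also be lighter on memory for large files than reading the whole file as text.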

~~~~~~~

The second program extracts the metadata and training hyperparameters of a .safetensors file and presents them as an executive summary.

    def get_value(content, key):
        # Look for the exact pattern "key":" so that a similar key name is not matched by accident
        marker = '"' + key + '":"'
        start = content.find(marker)
        if start == -1:
            return 'N/A'  # The key is not present in this file
        start += len(marker)
        # The value ends at the next double quote
        end = content.find('"', start)
        return content[start:end]


    def generate_report_from_file(file_name, encoding):
        with open(file_name, 'r', encoding=encoding) as file:
            content = file.read()

        # Analyze the content to extract the necessary values
        value1 = get_value(content, "ss_sd_model_name")
        value2 = get_value(content, "ss_clip_skip")
        value3 = get_value(content, "ss_num_train_images")
        value4 = get_value(content, "ss_tag_frequency")  # Nested data; not shown in the report (see the first program)
        value5 = get_value(content, "ss_epoch")
        value6 = get_value(content, "ss_face_crop_aug_range")
        value7 = get_value(content, "ss_full_fp16")
        value8 = get_value(content, "ss_gradient_accumulation_steps")
        value9 = get_value(content, "ss_gradient_checkpointing")
        value10 = get_value(content, "ss_learning_rate")
        value11 = get_value(content, "ss_lowram")
        value12 = get_value(content, "ss_lr_scheduler")
        value13 = get_value(content, "ss_lr_warmup_steps")
        value14 = get_value(content, "ss_max_grad_norm")
        value15 = get_value(content, "ss_max_token_length")
        value16 = get_value(content, "ss_max_train_steps")
        value17 = get_value(content, "ss_min_snr_gamma")
        value18 = get_value(content, "ss_mixed_precision")
        value19 = get_value(content, "ss_network_alpha")
        value20 = get_value(content, "ss_network_dim")
        value21 = get_value(content, "ss_network_module")
        value22 = get_value(content, "ss_new_sd_model_hash")
        value23 = get_value(content, "ss_noise_offset")
        value24 = get_value(content, "ss_num_batches_per_epoch")
        value25 = get_value(content, "ss_cache_latents")
        value26 = get_value(content, "ss_caption_dropout_every_n_epochs")
        value27 = get_value(content, "ss_caption_dropout_rate")
        value28 = get_value(content, "ss_caption_tag_dropout_rate")
        value29 = get_value(content, "ss_dataset_dirs")  # Nested data; not shown in the report
        value30 = get_value(content, "ss_num_epochs")
        value31 = get_value(content, "ss_num_reg_images")
        value32 = get_value(content, "ss_optimizer")
        value33 = get_value(content, "ss_output_name")
        value34 = get_value(content, "ss_prior_loss_weight")
        value35 = get_value(content, "ss_sd_model_hash")
        value36 = get_value(content, "ss_sd_scripts_commit_hash")
        value37 = get_value(content, "ss_seed")
        value38 = get_value(content, "ss_session_id")
        value39 = get_value(content, "ss_text_encoder_lr")
        value40 = get_value(content, "ss_unet_lr")
        value41 = get_value(content, "ss_v2")
        value42 = get_value(content, "sshs_legacy_hash")
        value43 = get_value(content, "sshs_model_hash")

        # Generate the report using the extracted values
        report = f'''Executive Summary Report:
    Important Parameters:
    - Model Name: {value1}
    - Clip Skip: {value2}
    - Number of Training Images: {value3}
    - Tag Frequency:
    - Epochs: {value5}
    - Face Crop Augmentation Range: {value6}
    - Full FP16: {value7}
    - Gradient Accumulation Steps: {value8}
    - Gradient Checkpointing: {value9}
    - Learning Rate: {value10}
    - Low RAM: {value11}
    - Learning Rate Scheduler: {value12}
    - Learning Rate Warmup Steps: {value13}
    - Max Gradient Norm: {value14}
    - Max Token Length: {value15}
    - Max Training Steps: {value16}
    - Min SNR Gamma: {value17}
    - Mixed Precision: {value18}
    - Network Alpha: {value19}
    - Network Dimension: {value20}
    - Network Module: {value21}
    - New SD Model Hash: {value22}
    - Noise Offset: {value23}
    - Number of Batches per Epoch: {value24}
    - Cache Latents: {value25}
    - Caption Dropout Every N Epochs: {value26}
    - Caption Dropout Rate: {value27}
    - Caption Tag Dropout Rate: {value28}
    - Number of Epochs: {value30}
    - Number of Regularization Images: {value31}
    - Optimizer: {value32}
    - Output Name: {value33}
    - Prior Loss Weight: {value34}
    - SD Model Hash: {value35}
    - SD Scripts Commit Hash: {value36}
    - Seed: {value37}
    - Session ID: {value38}
    - Text Encoder Learning Rate: {value39}
    - UNet Learning Rate: {value40}
    - Version 2: {value41}
    - SSHS Legacy Hash: {value42}
    - SSHS Model Hash: {value43}'''
        return report


    # Usage of the code to generate the report from a specific file

    # Replace this path with the correct path to your file
    file_name = '/content/drive/MyDrive/Dataset/aeromorphAlo-03.safetensors'

    # 'latin-1' can decode any byte, so it can read a binary file as text; 'utf-8' would fail here
    encoding = 'latin-1'

    generated_report = generate_report_from_file(file_name, encoding)
    print(generated_report)
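If the list of parameters keeps growing, one numbered variable per key becomes hard to maintain. A more compact variant, sketched here under the assumption that the metadata has already been collected into a plain dictionary, drives the report from a list of (label, key) pairs. The names `FIELDS` and `build_report` and the sample values are hypothetical and only serve the illustration.

```python
# (label, metadata key) pairs; extend this list with any other ss_* keys you need
FIELDS = [
    ('Model Name', 'ss_sd_model_name'),
    ('Clip Skip', 'ss_clip_skip'),
    ('Learning Rate', 'ss_learning_rate'),
    ('Network Dimension', 'ss_network_dim'),
]


def build_report(metadata, fields=FIELDS, missing='N/A'):
    """Format one '- Label: value' line per field, using `missing` for absent keys."""
    lines = ['Executive Summary Report:', 'Important Parameters:']
    for label, key in fields:
        lines.append(f'- {label}: {metadata.get(key, missing)}')
    return '\n'.join(lines)


# Hypothetical metadata, shaped like the string values stored in a .safetensors file
sample = {
    'ss_sd_model_name': 'model.ckpt',
    'ss_clip_skip': '2',
    'ss_learning_rate': '0.0001',
}
print(build_report(sample))
```

Adding a parameter to the report then only requires one new entry in the list, and keys that are missing from a file show up as N/A instead of garbled text.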

~~~~~~

The third program analyzes a whole folder of .safetensors files (thousands, if needed) and collects the extracted values into a DataFrame that you can inspect.

    import os

    import pandas as pd


    def analyze_files_in_folder(folder, encoding):
        # Create a list to store the data
        data = []

        # Iterate over the files in the folder
        for file_name in os.listdir(folder):
            # Skip anything that is not a .safetensors file
            if not file_name.endswith('.safetensors'):
                continue

            # Read the content of the file
            file_path = os.path.join(folder, file_name)
            with open(file_path, 'r', encoding=encoding) as f:
                content = f.read()

            # Generate the values for the executive summary
            values = get_values(content)

            # Add the values to the list, one row per value
            for value_name, value_count in values.items():
                current_value = {'File': file_name, 'Value Name': value_name, 'Value Count': value_count}
                data.append(current_value)

        # Create a DataFrame from the data
        df = pd.DataFrame(data)

        # Display the DataFrame with the values and return it for further analysis
        print(df)
        return df


    def get_values(content):
        # Analyze the content to extract the necessary values
        # [This function is the one you'll actually need to modify]
        value1 = get_value(content, "ss_max_train_steps")
        value2 = get_value(content, "ss_clip_skip")
        value3 = get_value(content, "ss_num_train_images")
        value4 = get_value(content, "ss_num_epochs")
        # Add more values as needed, and update the value names below to match
        values = {'ss_max_train_steps': value1, 'ss_clip_skip': value2, 'ss_num_train_images': value3, 'ss_num_epochs': value4}
        return values


    def get_value(content, key):
        # Look for the exact pattern "key":" so that a similar key name is not matched by accident
        marker = '"' + key + '":"'
        start = content.find(marker)
        if start == -1:
            return 'N/A'  # The key is not present in this file
        start += len(marker)
        # The value ends at the next double quote
        end = content.find('"', start)
        return content[start:end]


    # Specify the folder you want to analyze
    folder = '/content/drive/MyDrive/Dataset/Pruebas'

    # 'latin-1' can decode any byte, so it can read binary files as text; 'utf-8' would fail here
    encoding = 'latin-1'

    # Call the function to analyze the files in the folder
    df = analyze_files_in_folder(folder, encoding)

Remember to set the folder variable to the folder you want to analyze and the encoding variable to the encoding used to read your files. To extract other values, modify the get_values function according to your needs.
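The DataFrame printed by the third program is in long format, with one row per file and value. For comparing many files it can be easier to reshape it so that each file occupies one row. The sketch below uses a few hypothetical rows with the same column names ('File', 'Value Name', 'Value Count'); the file names and numbers are made up for illustration.

```python
import pandas as pd

# Hypothetical rows in the same long format the third program produces
long_df = pd.DataFrame([
    {'File': 'a.safetensors', 'Value Name': 'ss_clip_skip', 'Value Count': '2'},
    {'File': 'a.safetensors', 'Value Name': 'ss_num_epochs', 'Value Count': '10'},
    {'File': 'b.safetensors', 'Value Name': 'ss_clip_skip', 'Value Count': '1'},
    {'File': 'b.safetensors', 'Value Name': 'ss_num_epochs', 'Value Count': '8'},
])

# One row per file, one column per extracted value
wide_df = long_df.pivot(index='File', columns='Value Name', values='Value Count')

# The extracted values are strings; convert them to numbers (non-numeric values become NaN)
wide_df = wide_df.apply(pd.to_numeric, errors='coerce')

# Sort the files by any column of interest
print(wide_df.sort_values('ss_num_epochs', ascending=False))
```

In this shape, sorting and filtering across all files take one line each, which helps when scanning a large folder.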
